AI chap8

advertisement

Chapter 8

Inference in first-order logic

Inference in FOL,

removing the quantifiers

i.e., converting KB to PL

then use Propositional inference

which is easy to do

Inference rules for quantifiers

In KB, we have

A rule called Universal Instantiation (UI)

substituting a ground term for the variable

SUBST( , ) denotes

the result of applying the substitution to the

sentence

v

SUBST({v/g}, )

Here the examples are

{x / John}, {x / Richard}, {x / Father(John)}

Existential Instantiation:

For any sentence , variable v, and

constant k that does not appear elsewhere

in the KB:

v

SUBST({v/k}, )

x Crown(x) OnHead(x, John)

If C1 does not appear elsewhere in the KB

then we can infer:

Crown(C1) OnHead(C1, John)

Reduction to Propositional

Inference

Main idea

for existential quantifiers

find a ground term to replace the variable

remove the quantifier

add this new sentence to the KB

for universal quantifiers

find all possible ground terms to replace the variable

add the set of new sentences to the KB

apply UI to the first sentence

from the vocabulary of the KB

{x / John}, {x / Richard} – two objects

we then obtain

view other facts as propositional variables

use inference to induce Evil(John)

Propositionalization

The previous techniques

applying this technique to

every quantified sentence in the KB

we can obtain a KB consisting of

propositional sentences only

however, this technique is very inefficient

in inference

it generates other useless sentences

Unification and Lifting

Unification

a substitution θ such that applying on two

sentences will make them look the same

e.g., θ = {x / John} is a unification

applying on x King ( x) Greedy( x) Evil( x)

it becomes

x King ( John) Greedy( John) Evil( John)

and we can conclude the implication

using King(John) and Greedy(John)

Generalized Modus Ponens (GMP)

The process capturing the previous steps

A generalization of the Modus Ponens

also called the lifted version of M.P.

For atomic sentences pi , pi', and q,

there is a substitution such that

SUBST(, pi)= SUBST(, pi'), for all i:

Generalized Modus Ponens

Unification

UNIFY, a routine which

takes two atomic sentences p and q

return a substitution

that would make p and q look the same

it returns fail if no such substitution exists

Formally, UNIFY(p, q)=

where SUBST(, p) = SUBST(, q)

is called the unifier of the two sentences

Standardizing apart

UNIFY failed on the last sentence

in finding a unifier

reason?

two sentences use the same variable name

even they are having different meanings

so, assign them with different names

internally in the procedure of UNIFY

= standardizing apart

MGU

Most Generalized Unifier

there may be many unifiers for two sentences

which one is the best?

the one with less constraints

e.g., UNIFY(Knows(John, x), Knows(y, z))

one unifier = { y/John, x/John, z/John }

another = {y/John, z/x} – the best

even if z and x are not yet found

Forward and backward chaining

Forward chaining

start with the sentences in KB

generate new conclusions that

in turn allow more inferences to be made

usually used

when a new fact is added to the KB

and we want to generate its consequences

First-order Definite clauses

the normal form of sentences for FC

can contain variables

either atomic or

an implication whose

antecedent is a conjunction of positive literals

consequent a single positive literal

Not every KB can be converted into a set of

definite clauses, but many can

why? the restriction on single-positive-literal

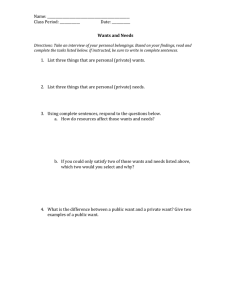

Example

We will prove that West is a Criminal

First step

translate these facts as first-order definite

clauses

next figure shows the details

For forward chaining, we will have two

iterations

The above is the proof tree generated

No new inferences can be made at this

point using the current KB

such a KB is called a fixed point of the

inference process

Backward chaining

start with something we want to prove

(goal/query)

find implication sentences

that would allow to conclude

attempt to establish their premises in turn

normally used

when there is a goal to prove (query)

Backward-chaining algorithm

This is better to illustrate with a proof tree

One remark

backward chaining algorithm uses

composition of substitutions

SUBST(COMPOSE(θ1, θ2), p)

= SUBST(θ2, SUBST(θ1, p))

it’s used because

different unification are found for different goals

we have to combine them.

Resolution

Modus Ponens rule

can only allow us to derive atomic conclusions

{A, A=>B}├ B

However, it is more natural

to allow us derive new implication

{A => B, B => C} ├ A=>C, the transitivity

a more powerful tool: resolution rule

CNF for FOL

Conjunctive Normal Form

a conjunction (AND) of clauses

each of them is a disjunction (OR) of literals

the literals can contain variables

e.g.,

Conversion to CNF

6 steps

Skolemize

process of removing

i.e., translate x P(x) into P(A), A is a new

constant

If we apply this rule in our sample, we have

which is completely wrong

since A is a certain animal

To overcome it, we use a function to represent any

animal, these functions = Skolem functions

Drop universal quantifiers

all variables now are assumed to be

universally quantified

Fortunately, all the above steps can be

automated

Resolution inference rule

Example proof

Resolution proves that KB |= by

proving KB α unsatisfiable, i.e., empty clause

First convert the sentences into CNF

next figure, empty clause

so, we include the negated goal Criminal(West)

Example proof

This example involves

skolemization

non-definite clauses

hence making inference more complex

Informal description

The following answers: Did Curiosity kill the cat?

First assume Curiosity didn’t kill the cat.

Add it into KB

Empty clause, so the assumption is false

Resolution strategies

Resolution is effective

but inefficient

because it is like forward chaining

the reasoning is randomly tried

There are four general guidelines in

applying resolution

Unit preference

When using resolution on two sentences

one of the sentences must be a unit clause

(P, Q, R, etc.)

The idea is to produce a shorter sentence:

e.g., P Q => R and P

will produce Q => R

hence reduce the complexity of the clauses

Set of support

Identifying a subset of sentences from the KB

Every resolution combines a sentence

from the subset

and another sentence from the KB

The resolvent (conclusion) of the resolution

is added to the subset

and continue the resolution process

How to choose this set?

a common approach: the negated query

to prove the query, assume negative

and prove the contradiction

advantage: goal-directed

Input resolution

Every resolution combines

one of the input sentences (facts)

from the query

or the KB

with some other sentence

Next fig

For

each resolution,

at

least one of the sentences from the query or KB

Subsumption (inclusion, 包含)

eliminates

all sentences

that

are subsumed by (i.e., more specific

than) an existing sentence in the KB

If

P(x) is in KB, x means all arguments

then

we don’t need to store the specific

instances of P(x): P(A), P(B), P(C) …,

Subsumption

helps keep the KB small