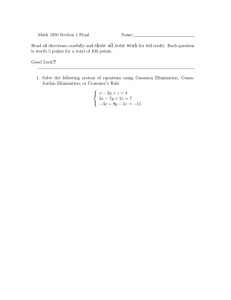

Algorithm Analysis (Big O)

advertisement

Algorithm Analysis

(Big O)

1

Complexity

In

examining algorithm efficiency we

must understand the idea of complexity

Space complexity

Time Complexity

2

Space Complexity

When memory was expensive we focused on

making programs as space efficient as possible

and developed schemes to make memory appear

larger than it really was (virtual memory and

memory paging schemes)

Space complexity is still important in the field of

embedded computing (hand held computer

based equipment like cell phones, palm devices,

etc)

3

Time Complexity

Is

the algorithm “fast enough” for my

needs

How much longer will the algorithm

take if I increase the amount of data it

must process

Given a set of algorithms that

accomplish the same thing, which is the

right one to choose

4

Algorithm Efficiency

a measure of the amount of resources consumed in

solving a problem of size n

Benchmarking: implement algorithm,

time

space

run with some specific input and measure time taken

better for comparing performance of processors than for

comparing performance of algorithms

Big Oh (asymptotic analysis)

associates n, the problem size,

with t, the processing time required to solve the problem

5

Cases to examine

Best case

if the algorithm is executed, the fewest

number of instructions are executed

Average case

executing the algorithm produces path

lengths that will on average be the same

Worst case

executing the algorithm produces path

lengths that are always a maximum

6

Worst case analysis

Of

the three cases, only useful case

(from the standpoint of program

design) is that of the worst case.

Worst

case helps answer the software

lifecycle question of:

If its good enough today, will it be good

enough tomorrow?

7

Frequency Count

examine

a piece of code and predict the

number of instructions to be executed

for each instruction predict how many times

e.g. each will be encountered as the code runs

Inst

#

1

2

3

Code

F.C.

for (int i=0; i< n ; i++)

n+1

{ cout << i;

n

p = p + i;

n

}

____

3n+1

totaling the counts produces the F.C. (frequency count)

8

Order of magnitude

In the previous example:

best_case = avg_case = worst_case

Example is based on fixed iteration n

By itself, Freq. Count is relatively meaningless

Order of magnitude -> estimate of performance vs.

amount of data

To convert F.C. to order of magnitude:

discard constant terms

disregard coefficients

pick the most significant term

Worst case path through algorithm ->

order of magnitude will be Big O (i.e. O(n))

9

Another example

Inst # Code

1

2

for (int i=0; i< n ; i++)

for int j=0 ; j < n; j++)

F.C.

F.C.

n+1

n+1

n(n+1)

n2+n

3

{ cout << i;

n*n

n2

4

p = p + i;

n*n

n2

____

}

3n2+2n+1

discarding constant terms produces :

3n2+2n

clearing coefficients :

Big O = O(n2)

n2+n

picking the most significant term: n2

10

What is Big O

Big

rate at which algorithm performance

degrades as a function of the amount of

data it is asked to handle

For

O

example:

O(n) -> performance degrades at a linear

rate O(n2) -> quadratic degradation

11

Common growth rates

12

Big Oh - Formal

Definition

Definition of "big oh":

f(n)=O(g(n)), iff there exist constants c and n0

such that:

f(n) <= c g(n) for all n>=n0

Thus, g(n) is an upper bound on f(n)

Note:

f(n) = O(g(n))

is NOT the same as

O(g(n)) = f(n)

The '=' is not the usual mathematical operator

"=" (it is not reflexive)

13

Big-O Notation

Comparing Algorithms

and

ADT Data Structures

14

Algorithm Efficiency

a measure of the amount of resources consumed in

solving a problem of size n

benchmarking – code the algorithm, run it with some

specific input and measure time taken

time

space

better for measuring and comparing the performance of

processors than for measuring and comparing the performance

of algorithms

Big Oh (asymptotic analysis) provides a formula that

associates n, the problem size, with t, the processing

time required to solve the problem

15

big Oh

measures an algorithm’s growth rate

how fast does the time required for an algorithm to

execute increase as the size of the problem increases?

is an intrinsic property of the algorithm

independent of particular machine or code

based on number of instructions executed

for some algorithms is data-dependent

meaningful for “large” problem sizes

16

Computing

x * x * x .. * x

(n times)

recursive definition

for n >= 0

iterative definition

n

x

x0 = 1

xn = x * xn-1

(for n > 0)

another recursive definition

x0 = 1

xn = (xn/2)2

xn = x * (xn/2)2

(for n > 0 and n is even)

(for n > 0 and n is odd)

17

Iterative Power function

double IterPow (double X, int N) {

double Result = 1;

while (N > 0) {

Result *= X;

N--;

{

return Result;

}

Total instruction count:

1

n+1

n

n

critical region

1

3n+3

algorithm's computing time (t) as a function of n is: 3n + 3

t is on the order of f(n) - O[f(n)]

O[3n + 3] is n

18

Recursive Power function

double RecPow (double X, int N) {

if (N == 0)

return 1;

else

return X * RecPow(X, N - 1);

}

Base case

1

1

Recursive case

1

1 + T(n-1)

total: 2

2 + T(n-1)

Number of times base case is executed:

1

Number of times recursive case is executed: n

Algorithm's computing time (t) as a function of n is: 2n + 2

O[2n + 2] is n

19

Another Power Function

Base case

double Pow3 (double X, int N) {

if (N == 0)

1

return 1;

1

else {

double halfPower = Pow3(X, N/2);

if (N % 2 == 0)

return halfPower * halfPower;

else

return X * halfPower * halfPower;

}

}

total: 2

Recursive case

1

T(n/2)

1

1(even)

1(odd)

3 + T(n/2)

Number of times base case is executed:

1

Number of times recursive case is executed: log2 n

Algorithm's computing time (t) as a function of n is: 3 log2 n + 2

O[3 log2 n + 2] is log2 n

20

Computational Complexity

Computing time, T(n), of an algorithm is a function

of the problem size (based on instruction count)

T(n) for IterPow is: 3n + 3

T(n) for RecPow is: 2n + 2

T(n) for Pow3 is: 3 log2 n + 2

Computational complexity of an algorithm is the rate

at which T(n) grows as the problem size grows

is expressed using "big Oh" notation

growth rate (big Oh) of 3n+3 and of 2n+2 is: n

big Oh of 3 log2 n + 2 is: log2 n

21

Common big Ohs

constant

logarithmic

linear

n

log n

quadratic

cubic

exponential

O(1)

O(log2 N)

O(N)

O(N log2 N)

O(N2)

O(N3)

O(2N)

22

Comparing Growth Rates

2n

n2

n log2 n

n

T(n)

log2 n

Problem Size

23

An Experiment

Execution time (in seconds)

2^25

2^50

2^100

IterPow

.71

1.15

2.03

RecPow

3.63

7.42

15.05

.99

1.15

1.38

Pow3

(1,000,000 repetitions)

24

Uses of big Oh

compare

algorithms which perform the

same function

search algorithms

sorting algorithms

comparing

data structures for an ADT

each operation is an algorithm and has a big

Oh

data structure chosen affects big Oh of the

ADT's operations

25

Comparing algorithms

Sequential search

growth rate is O(n)

average number of

comparisons done is

n/2

n

100

500

1000

5000

n/2

50

250

500

2500

Binary search

growth rate is O(log2 n)

average number of

comparisons done is

2((log2 n) -1)

2((log2 n)-1)

12

16

18

24

26