Chapter 16.pptx

Chapter 16

• Sorting and algorithm analysis

Chapter 16 part 1

Some Sort Algorithms

• Bubble Sort

• Selection Sort

• Insertion Sort

• Quicksort

"Seeing" as an algorithm "sees" data

• When we "look" at a data set, we tend to

"see" the data set in parallel, "all at once".

• We need to train ourselves to "see" as a

computer sees things, pretty much "one item

at a time".

• It takes a little discipline to answer the

question "what would I do next, if I had only a

computer's limited vision?"

Bubble Sort

• for (int maxE = a.length - 1; maxE >= 0; maxE--) {
      for (int i = 0; i < maxE; i++) {
          // Compare neighbours; swap them if they are out of order.
          if (a[i] > a[i + 1]) swap(a, i, i + 1);
      }
  }

  static void swap(int[] a, int i, int j) {
      int temp = a[i];
      a[i] = a[j];
      a[j] = temp;
  }

• Note: each pass of inner loop "bubbles" the largest to

the end.

Selection Sort

• A key concept: the "currently biggest (or smallest)

one".

• When we are starting out, looking at the first

item, it is the "currently biggest one".

• We examine every other element, one at a time,

and ask the question: Is it bigger than the

currently biggest one?

– If it is, it becomes the "currently biggest one".

• Since we have to examine every item in the list, it

is easy to see that finding the biggest one is "order n"

(more on that later).
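
The "currently biggest one" scan described above can be sketched in Java as follows. The class and method names (LargestSketch, indexOfLargest) are made up for illustration, and the sketch assumes a non-empty array.

```java
class LargestSketch {
    // Returns the index of the largest element, looking at one item at a time.
    // Assumes a.length >= 1.
    static int indexOfLargest(int[] a) {
        int locMax = 0; // the first item starts out as the "currently biggest one"
        for (int j = 1; j < a.length; j++) {
            // Is this item bigger than the currently biggest one?
            if (a[j] > a[locMax]) {
                locMax = j; // if so, it becomes the currently biggest one
            }
        }
        return locMax;
    }
}
```

The loop body runs once for every element after the first, which is where the "order n" cost comes from.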

Selection Sort

• for (int i = 0; i < a.length - 1; i++) {
      int locMin = i;
      for (int j = i + 1; j < a.length; j++) {
          // Compare element values, not an element against an index.
          if (a[j] < a[locMin]) locMin = j;
      }
      if (locMin != i) swap(a, i, locMin);
  }

• Fewer swaps than bubble sort.

Insertion Sort

• This is how you pick up a hand of cards.

• Appropriate data structures make it easy to

implement.

• With an inappropriate data structure, this may

involve a lot of data-moving.

• Conceptually, create an (empty) "sorted subset".

Then, one by one, add elements from the unsorted

array, adding each one in the right place so that

the "sorted subset" remains sorted.
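
A minimal in-place sketch of this idea in Java, assuming an int array. Instead of a separate, initially empty "sorted subset", it treats the front of the array, a[0..i-1], as the sorted subset; the shifting loop is the "data-moving" mentioned above. The names InsertionSortSketch and insertionSort are illustrative only.

```java
class InsertionSortSketch {
    static void insertionSort(int[] a) {
        // a[0..i-1] is the sorted subset; a[i] is the next element to add.
        for (int i = 1; i < a.length; i++) {
            int next = a[i];
            int j = i - 1;
            // Shift larger elements one place right to open a gap.
            while (j >= 0 && a[j] > next) {
                a[j + 1] = a[j];
                j--;
            }
            a[j + 1] = next; // drop the element into the right place
        }
    }
}
```

With an array, each insertion may shift many elements; a linked structure would avoid the shifting but makes finding the right place slower.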

Quicksort

• Invented in 1960

• First widely-used algorithm to break the n²

barrier

• It is a fundamentally recursive algorithm

• The partition method is at the heart of the

algorithm

Partition method of Quicksort

• Find some arbitrary member of the array.

• Call it the pivot value

• Rearrange the array so that the array is

partitioned by the pivot value

– All members lower than the pivot are before it.

– All members higher than the pivot are after it.

A partitioned array

• After partitioning, an array is three sub-arrays:

– A sub-array where all the members are lower than

the pivot (note: this sub-array is not initially

sorted)

– A single-member array (the pivot itself)

– A sub-array where all members are higher than (or

equal to) the pivot
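
One possible partition method is sketched below in Java. The slides do not say how the pivot is chosen or how the rearranging is done, so this sketch assumes the last element of the range as the pivot and a single left-to-right pass; the names PartitionSketch, partition, and swap are illustrative.

```java
class PartitionSketch {
    // Partitions a[lo..hi] (inclusive) around the pivot a[hi].
    // Returns the pivot's final index p, so that
    // a[lo..p-1] < pivot and a[p+1..hi] >= pivot.
    static int partition(int[] a, int lo, int hi) {
        int pivot = a[hi];
        int boundary = lo; // everything left of boundary is lower than the pivot
        for (int j = lo; j < hi; j++) {
            if (a[j] < pivot) {
                swap(a, boundary, j);
                boundary++;
            }
        }
        swap(a, boundary, hi); // put the pivot between the two sub-arrays
        return boundary;
    }

    static void swap(int[] a, int i, int j) {
        int temp = a[i];
        a[i] = a[j];
        a[j] = temp;
    }
}
```

For example, partitioning {7, 2, 9, 4, 5} over the whole range leaves {2, 4, 5, 7, 9} and returns 2; the two outer sub-arrays happen to be sorted here, but in general they are not.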

Completing the Quicksort

• After the array is partitioned, all we have to do is

re-arrange the first sub-array and last sub-array

so they are both sorted.

• A recursive call on each of these does this.

• For randomly mixed data, Quicksort performs at

n log(n) speed, though it can be as bad as n² when the

pivots are chosen badly, for example on partially-sorted input.

• Many other sorting algorithms are variations of

Quicksort. They strive to keep themselves

n log(n).
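
The whole algorithm can be sketched in a few lines of Java. This is one possible version, not necessarily the one the slides have in mind: it assumes the last element of each range as the pivot, and it carries its own partition helper so the block stands alone. The class and method names are illustrative.

```java
class QuicksortSketch {
    static void quicksort(int[] a) {
        quicksort(a, 0, a.length - 1);
    }

    // Sorts a[lo..hi] (inclusive).
    static void quicksort(int[] a, int lo, int hi) {
        if (lo >= hi) return;          // zero or one element: already sorted
        int p = partition(a, lo, hi);  // pivot lands at its final position p
        quicksort(a, lo, p - 1);       // recursive call on the first sub-array
        quicksort(a, p + 1, hi);       // recursive call on the last sub-array
    }

    // Assumed pivot choice: the last element of the range.
    private static int partition(int[] a, int lo, int hi) {
        int pivot = a[hi];
        int boundary = lo;
        for (int j = lo; j < hi; j++) {
            if (a[j] < pivot) {
                swap(a, boundary, j);
                boundary++;
            }
        }
        swap(a, boundary, hi);
        return boundary;
    }

    private static void swap(int[] a, int i, int j) {
        int temp = a[i];
        a[i] = a[j];
        a[j] = temp;
    }
}
```

With this pivot choice, an already-sorted array is a bad case: every partition peels off only one element, which gives the n² behaviour.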

Sequential Searching

• Look at each item, one at a time, and ask: "Is

this the one we are looking for?"

• On average, we look at n/2 items before we

find the one we are looking for.

• If our target is at the end of the list, or not

there at all, we "look at" all n items.

• There is no expectation about the order of the

items in which we are searching.
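
A minimal Java sketch of a sequential search over an int array. Returning -1 for "not there at all" is a convention assumed here, and the names are illustrative.

```java
class SequentialSearchSketch {
    // Returns the index of the first occurrence of target, or -1 if absent.
    static int sequentialSearch(int[] a, int target) {
        for (int i = 0; i < a.length; i++) {
            // "Is this the one we are looking for?"
            if (a[i] == target) {
                return i;
            }
        }
        return -1; // looked at all n items without finding it
    }
}
```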

The Binary Search

• This is for searching into a sorted data structure.

• It is essentially the "game of 20 questions".

– Let's play the game.

– One person generates a random integer in the range

0-1,000,000

– The other attempts to guess the number in 20 "yes/no" questions

– begin with "Is it greater than 500,000?"…

• You can always win because 1,000,000 < 2^20 (= 1,048,576)
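
The game can be simulated to check the claim. The Java sketch below plays the guesser for a given secret number in the range 0 to 1,000,000 and counts the questions asked; the names TwentyQuestionsSketch and questionsNeeded are made up for illustration.

```java
class TwentyQuestionsSketch {
    // Counts the yes/no questions needed to pin down secret,
    // assuming 0 <= secret <= 1_000_000.
    static int questionsNeeded(int secret) {
        int lo = 0;
        int hi = 1_000_000;
        int questions = 0;
        while (lo < hi) {
            int mid = lo + (hi - lo) / 2; // first question uses mid = 500,000
            questions++;
            if (secret > mid) {           // "Is it greater than mid?"
                lo = mid + 1;             // yes: discard the lower half
            } else {
                hi = mid;                 // no: discard the upper half
            }
        }
        return questions;
    }
}
```

Each question halves the remaining range, so a range of at most 2^20 values shrinks to a single value after at most 20 questions.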

Binary Search

• Just apply the "20 questions" strategy to a

search.

• Iteratively (or recursively) divide the data

structure into halves.
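
An iterative version might look like the following Java sketch. It assumes an int array already sorted in ascending order and returns -1 when the target is absent; the class and method names are illustrative.

```java
class BinarySearchSketch {
    // Returns an index of target in the ascending-sorted array, or -1 if absent.
    static int binarySearch(int[] sorted, int target) {
        int lo = 0;
        int hi = sorted.length - 1;
        while (lo <= hi) {
            int mid = lo + (hi - lo) / 2; // middle of the remaining half
            if (sorted[mid] == target) {
                return mid;
            } else if (sorted[mid] < target) {
                lo = mid + 1;             // target can only be in the upper half
            } else {
                hi = mid - 1;             // target can only be in the lower half
            }
        }
        return -1;
    }
}
```

Writing the midpoint as lo + (hi - lo) / 2 avoids integer overflow for very large arrays. In practice, the standard library's java.util.Arrays.binarySearch does the same job.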

Chapter 16 part 3

Analysis of Algorithms

• Experiment vs. analysis

• Limits of experiment

– measures programs vs algorithms

– depends on language, compiler, operating system.

– depends on specific input

Computational Problems & Basic Steps

• A computational problem is a problem to be

solved using an algorithm.

– a collection of instances.

– Size of an instance refers to the memory required

– A basic step can be executed in time bounded by a

constant, regardless of the size of the input (e.g.,

swapping two integers).

– Contrast this with finding the largest element in an array.

This is not basic; it depends on the size of the array.

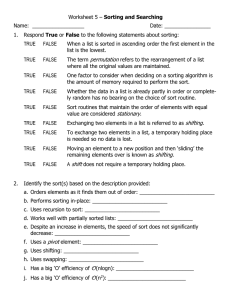

Complexity of Algorithms

• Consider a sequential search.

• Worst case complexity.

– sequential search: n

– Binary search: k*log(n)

– Selection sort: 1 + 2 + 3 + … + (n-1) = (n-1)*n/2 ≈ n²/2

• Average-case complexity can be used if the

relative frequencies (distribution) of the

different inputs likely to occur in practice

are known.
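
The selection-sort count can be checked by instrumenting the algorithm. The Java sketch below sorts an int array with selection sort and returns how many element comparisons it made; the counter and the names are additions for illustration.

```java
class ComparisonCountSketch {
    // Selection-sorts a in place and returns the number of comparisons made.
    static long selectionSortComparisons(int[] a) {
        long comparisons = 0;
        for (int i = 0; i < a.length - 1; i++) {
            int locMin = i;
            for (int j = i + 1; j < a.length; j++) {
                comparisons++; // one basic step per inner-loop iteration
                if (a[j] < a[locMin]) {
                    locMin = j;
                }
            }
            if (locMin != i) {
                int temp = a[i];
                a[i] = a[locMin];
                a[locMin] = temp;
            }
        }
        return comparisons;
    }
}
```

For an array of n elements the count is always (n-1)*n/2, whatever the input order: 45 for n = 10 and 4,950 for n = 100.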

Asymptotic Complexity

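$$\lim_{n\to\infty}\frac{f(n)}{g(n)} = \lim_{n\to\infty}\frac{3n^2+5n}{n^2} = \lim_{n\to\infty}\frac{3n^2\cdot\frac{1}{n^2}+5n\cdot\frac{1}{n^2}}{n^2\cdot\frac{1}{n^2}} = \lim_{n\to\infty}\left(3+\frac{5}{n}\right) = 3$$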
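
The limit can also be checked numerically. The Java sketch below assumes the slide's example is f(n) = 3n² + 5n compared against g(n) = n² (a reading of the garbled formula, not something the slide states in words) and evaluates the ratio f(n)/g(n); the names are illustrative.

```java
class LimitSketch {
    // Ratio f(n)/g(n) for the assumed example f(n) = 3n^2 + 5n, g(n) = n^2.
    static double ratio(double n) {
        return (3 * n * n + 5 * n) / (n * n);
    }
}
```

The ratio equals 3 + 5/n, so it is 8 at n = 1 and approaches 3 as n grows. A finite, non-zero limit means f and g grow at the same rate.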

Big O notation

• f(n) is in O(g(n)) if f(n) ≤ K·g(n) for n ≥ 1 and some K.

• O(1): constant time

• O(log n): logarithmic time

• O(n): linear time

• O(n log n): "n log n" time

• O(n²): "n squared" time

• Union of O(n¹), O(n²), O(n³), …: polynomial time

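• NP – nondeterministic polynomial time (often misread as "non-polynomial"); it includes problems with no known polynomial-time algorithm

NP – Nondeterministic polynomial time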

• Travelling salesman problem

• Factor the product of two big primes

• These problems can form a class of "easy to

do, but very hard to undo" problems.

• Such situations are the basis of practical

cryptography.
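
The "easy to do, hard to undo" asymmetry can be illustrated with a toy Java sketch. Multiplying two primes is a single operation, while the naive way to undo it, trial division, may need about √n steps. The class and method names are made up, and real cryptography uses numbers far too large for a long, along with much better factoring algorithms.

```java
class FactoringSketch {
    // Returns the smallest factor of n greater than 1 (n itself if n is prime).
    // Assumes n >= 2. Takes up to about sqrt(n) loop iterations.
    static long smallestFactor(long n) {
        for (long d = 2; d * d <= n; d++) {
            if (n % d == 0) {
                return d;
            }
        }
        return n;
    }
}
```

For the product 101 * 103 = 10,403 this finds 101 after about a hundred trial divisions. Each extra digit in the primes multiplies that work by roughly ten, while the multiplication itself stays cheap.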
