Data Science Challenges for Homeland Security

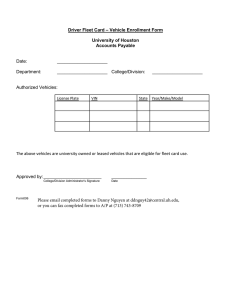

Fred S. Roberts
CCICADA Center, Rutgers University
Piscataway, NJ USA
froberts@dimacs.rutgers.edu
Founded 2009 as a DHS University Center of Excellence

The Data Challenge
• Virtually all of the activities in the homeland security enterprise require the ability to reach conclusions from massive flows of data
  – Arriving fast
  – From disparate sources
  – Subject to error/uncertainty
• Data Science:
  – Incomparably positioned to address this massive flow of data
  – Gain rapid situational awareness
  – Assist in identifying new threats
  – Make rapid risk assessments
  – Mitigate the effects of natural or man-made disasters
  – Allow homeland security community to work more efficiently
  – Protect the privacy of individuals

Data Science Application Areas
• Data Science (DS) methods are applicable to a wide variety of homeland security applications
• CCICADA researchers already heavily involved in these with homeland security practitioner partners, e.g.:
  – Intelligence analysis of text
  – Disease event detection
  – Port of entry inspection
  – Author identification
  – Rapid summarization of crime data
  – Bioterrorism sensor location (image: B-T sensor, Salt Lake City Olympics)
  – Nuclear detection using moving detectors
  – Stadium evacuation
  – Privacy-preserving data sharing
  – Predicting paths of plumes
  – Analyzing open source data
  – Thwarting attacks on cyberinfrastructure by detecting abnormalities in network traffic
  – Assessing risks in waterways
  – Planning for evacuations during heat events from climate change
  – Understanding economic impact of terrorist events and natural disasters

Outline
• Port Security: Risk Scoring from Ships’ Manifest Data
• Visualization of Manifest Data
• Making use of Uncertainty: Placing Nuclear Detectors in Randomly Moving Vehicles
• Crime Information Management: Enhancing Force Deployment in Law Enforcement and Counter-terrorism: Work with Port Authority of New York/New Jersey
• Biosurveillance: Early Warning from Entropy
• Privacy-preserving Data Analysis
• Data Analysis Challenges Arising from Climate Change

Port Security: Risk Scoring from Ships’ Manifest Data
Xueying Chen, Jerry Cheng and Minge Xie, Rutgers University
Project supported by Domestic Nuclear Detection Office

Risk Scoring: Manifest Data
• Manifest/bill of lading
• Data either text or numerical/categorical
• Increased emphasis by US Customs and Border Protection (CBP) on documents submitted prior to a shipping container reaching the US
• Data screened before ship’s arrival in US
• Identifying mislabeled or anomalous shipments may prove useful in finding nuclear materials

Data Description
• We obtained from CBP one month’s data of all cargo shipments to all US ports
• Jan 30, 2009 – Feb 28, 2009
• Description
  – Foreign port (origin)
  – Domestic port (destination)
  – Item description
  – Item count
(Slide annotations: Aggregation; Inconsistencies)

[Figure: Manifest Data: Sample]

[Figure: Clean/Readable Manifest Data]

Data Description
• Data has errors and ambiguities
• Does 150 waters mean 150 bottles of water or 150 cases of bottles of water?
• What does “household goods” mean?
• Still, there are things we can do with the data.

Manifest Data
• Manifest descriptions of products such as…
  – Soft drink concentrates
  – Ten knockdown empty cartons
  – Ikea home furnishing products
• …should match classifications of container types, ship types, or port of departure types.
• Anomalies may be discoverable when product descriptions are closely associated with container, ship, or port classifications.
• E.g., a shipment of IKEA products may have more in common with a specific container, ship, or port than a shipment containing airplane parts.

Some Stats of Manifests (1/30/09-2/28/09)
• # of manifests per day: 26,577
• # of ships per day: 307
Among the cargos coming to Long Beach, CA
• # of manifests per day: 3,648
• # of ships per day: 54
• # of originating countries: 16

Mining of Manifest Data
• Goal: Predict risk score for each container
  – Quantify the likelihood of need for inspection
  – Based on covariates/characteristics of a container’s manifest data.
• Methods:
  – We are developing machine learning algorithms to detect anomalies in manifest data.
  – Text mining on verbiage fields leads to useful characteristics.
  – Then regression based on the useful characteristics or “covariates”
  – “Penalized regression” using LASSO and Bayesian Binary Regression software developed by our group.

Mining of Manifest Data (continued)
• A complication is that we do not know the risk status of the containers in our data.
• We got around this by simulation and assuming risk scores of some containers in order to test our methods.
• Our approach used “penalized regression” with explanatory variables chosen from the covariates contained in the manifest data, e.g., voyage number, foreign port, contents of containers, etc.
• A first step was to do a correlation analysis to group covariates.
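The simulate-then-select idea can be sketched in a few lines. This is only an illustration under stated assumptions, not the group's software: the covariates are random numbers standing in for encoded manifest fields, the "true" risk scores are simulated from two of them, and the names `soft_threshold` and `lasso_coordinate_descent` and the penalty value `lam=0.2` are hypothetical. The LASSO is written in its penalized (Lagrangian) form and solved by cyclic coordinate descent.

```python
import random


def soft_threshold(z, gamma):
    """Shrink z toward zero by gamma (the LASSO proximal step)."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0


def lasso_coordinate_descent(X, y, lam, n_iter=100):
    """Minimize (1/(2n)) * ||y - X b||^2 + lam * ||b||_1 by cyclic coordinate descent."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    residual = list(y)  # residual = y - X beta, and beta starts at zero
    col_sq = [sum(X[i][j] ** 2 for i in range(n)) / n for j in range(p)]
    for _ in range(n_iter):
        for j in range(p):
            if col_sq[j] == 0.0:
                continue
            # Correlation of column j with the residual that excludes j's own contribution.
            rho = sum(X[i][j] * (residual[i] + X[i][j] * beta[j]) for i in range(n)) / n
            new_bj = soft_threshold(rho, lam) / col_sq[j]
            delta = new_bj - beta[j]
            if delta != 0.0:
                for i in range(n):
                    residual[i] -= X[i][j] * delta
                beta[j] = new_bj
    return beta


# Synthetic stand-in for manifest covariates (assumption: 8 numeric features).
rng = random.Random(0)
n, p = 200, 8
X = [[rng.gauss(0.0, 1.0) for _ in range(p)] for _ in range(n)]

# Simulated "true" risk scores: only covariates 0 and 3 matter (assumed for the test).
true_beta = [2.0, 0.0, 0.0, -1.5, 0.0, 0.0, 0.0, 0.0]
y = [sum(b * x for b, x in zip(true_beta, row)) + rng.gauss(0.0, 0.5) for row in X]

beta_hat = lasso_coordinate_descent(X, y, lam=0.2)
selected = [j for j, b in enumerate(beta_hat) if abs(b) > 1e-8]
print("estimated coefficients:", [round(b, 2) for b in beta_hat])
print("selected covariates:", selected)
```

Because the simulated truth is known, the selected set can be compared directly with the covariates that were used to generate the scores, which is the kind of model-selection check a simulation makes possible when real risk labels are unavailable.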
[Figure: Correlation Analysis of Variables Preliminary to “Risk Scoring” of Containers]

Penalized Regression
Model: y = Xβ + ε, subject to ρ(β) ≤ λ
y = vector corresponding to the risk score; X = matrix corresponding to the characteristics/contents of shipping containers; ε is the random error; β are model parameters; ρ is the penalty function and λ is a tuning parameter.
• Learning the correlation between risk score and the covariates can help determine the importance of different covariates

Examples
• Example 1: risk score = β1×(voyage number) + β2×(inbound entry type) + ε
• Example 2: risk score = β1×(voyage number) + β2×(dp of unlading) + β3×(foreign port) + β4×contents + ε
• More sophisticated analysis replaces individual variables by groups of closely correlated variables.

Real Data Analysis
• Simulation setting
  – Use the simulation to select potential covariates and simulate the risk scores for each shipment
  – Run penalized regression over p=216 variables, which are created from vessel country code, voyage number, dp of unlading, foreign port, inbound entry type, contents information, etc.; n=24,000 samples (manifests)
  – The method chooses the “model” (the explanatory covariates) that best predicts the risk score y.
• Goals:
  – Model selection accuracy: pick up the true influential covariates
  – Prediction: predict the risk score for future shipments

Real Data Analysis
[Figure: sample result for 373 predicted risk scores]

Real Data Analysis
[Figure: sample result, box plot of error = E(y) – estimated y; the middle 50% of errors are in the box]

Conclusion
• This method performs effectively in picking up important variables and removing unimportant variables.
• The selected model can identify the shipments with high risk scores while maintaining a low rate of false alarms

Visualization of Manifest Data
Work of James Abello, Tsvetan Asamov, Rutgers University
Sponsored by Domestic Nuclear Detection Office

Visualization of Manifest Data
• Visualizing data can give us insight into interconnections, patterns, and what is “normal” or “abnormal”
• Our visual analysis methods are based on tools originally developed at AT&T for detection of anomalies in telephone calling patterns – e.g., quick detection that someone has stolen your AT&T calling card.
• The visualizations are interactive so you can “zoom” in on areas of interest, get different ways to present the data, etc.

Visualization of Manifest Data
• For port p, a vector contents[p] gives the number of items of each kind of commodity shipped out of port p in a given time period.
• We devise similarity measures between ports p and q as a function of the dot product of their contents vectors.
• contents[p,q] gives the number of items of each kind of commodity shipped from port p to port q in a given time period.
• We represent such vectors using edge-weighted, labeled graphs that can be visualized using our software.

General View of Port to Port Traffic
[Figure] Color-coded connections represent number of shipments

Shanghai, LA, Newark, Singapore
[Figure] Vertex size encodes number of shipments

Zooming into Shanghai (gray)
[Figure] Zooming into a vertex gives more data about traffic

Contents To Port Pairs
[Figure] Vertices are KeyWords and Port Pairs (color coded by degree); edges encode number of containers (or shipments) with that keyword for the corresponding Port Pair

Contents To Port Pairs (cont.)
[Figure] Vertices color coded by WeightRatio

Temporal Evolution of Manifest Data
Fix a commodity. Each vertex represents all shipments from foreign to US ports on a given day. Cluster by similarity.
Notice how all Tuesdays and Wednesdays are well clustered

Can also Cluster by Ports
Note similarity, e.g., Cincinnati, OH and Brunswick, GA

Making use of Uncertainty/Randomness in Detection/Prevention Protocols
Placing Nuclear Detectors in Randomly Moving Vehicles
Work of Jerry Cheng, Fred Roberts, Minge Xie, Rutgers University
Supported by Domestic Nuclear Detection Office
• Goal of uncertainty/randomness: keep the adversary guessing and increase their potential cost.

Nuclear Detection using Taxicabs and/or Police Cars

Nuclear Detection Using Vehicles
• Distribute GPS tracking and nuclear detection devices to taxicabs or police cars in a metropolitan area.
  – Feasibility: New technologies are making devices portable, powerful, and cheaper.
  – Some police departments are already experimenting with nuclear detectors.
• Taxicabs are a good example because their movements are subject to considerable uncertainty.
• Send out signals if the vehicles are getting close to nuclear sources.
• Analyze the information (both locations and nuclear signals) to detect potential location of a source.

Nuclear Detection Using Vehicles
Issues of Concern in our Project:
• Our discussions with law enforcement suggest reluctance to depend on the private sector (e.g., taxicab drivers) in surveillance
• However, are there enough police cars to get sufficient “coverage” in a region?
• How many vehicles are needed for sufficient coverage?
• How does the answer depend upon:
  – Routes vehicles take?
  – Range of the detectors?
  – False positive and false negative rates of detectors?

Detectors in Vehicles – Model Components
• Source Signal Model
  – Definition: random variable S, the indicator of nuclear signal from a source
  – Values 1 (existence of source) or 0
  – The closer to the source, the higher the probability P(S=1)
  – Key parameter: maximum detection range
• Source Detection Model
  – Random variable D: values 1 (the sensor detects the source) or 0
  – Model parameter: False positive rate P(D=1|S=0), the probability of detecting a nonexistent signal
  – Model parameter: False negative rate P(D=0|S=1), the probability of not detecting a true signal

Detectors in Vehicles – Model Components
• In our early work, we did not have a specific model of vehicle movement.
• We assumed that vehicles are randomly moved to new locations in the region being monitored each time period.
• If there are many vehicles with sufficiently random movements, this is a reasonable first approximation.
• It is probably ok for taxicabs, less so for police cars.

Vehicles – Clustering of Events
• Definition of Clusters:
  – Unusually large number of events/patterns clumping within a small region of time, space or location in a sequence
  – A cluster of alarms suggests there is a source
• Use statistical methodology:
  – Formal tests: provide statistical significance against random chance.
• Traditional statistical method is via Scan Statistics
  – Scan entire study area and seek to locate region(s) with unusually high likelihood of incidence
  – E.g., use:
      Maximum number of cases in a fixed-size moving window
      Diameter of the smallest window that contains a fixed number of cases

Nuclear Detection using Taxicabs
[Figure: map of Manhattan, New York City, with dots marking taxicabs, each carrying a GPS tracking device and a nuclear sensor device; one location is marked “dirty bomb?”]
A simulation of taxicab locations at morning rush hour

Taxicabs - Simulation
• First stage of work
• Generated data in Manhattan and did a simulation, applying the clustering approach with success
• Used spatclus package in R: software package to detect clusters
• In the simulations, we have considered both moving and stationary sources.
• Our emphasis then turned to comparing taxicab “coverage” to police car “coverage” using our simulations.

Number of Vehicles Needed
• The required number of vehicles in the surveillance network can be determined by statistical power analysis
  – The larger the # of vehicles, the higher the power of detection
• An illustrative example:
  – A surveillance network covers an area 4000 ft by 10000 ft, roughly equal to the area of the roads and sidewalks of Mid/Downtown Manhattan
  – N vehicles are randomly moving around in the area
  – Fix key parameters:
      Effective range of a working detector
      False positive & false negative rates for detectors
  – The ranges and rates we used are not realistic, but we wanted to test general methods, & not be tied to today’s technology
  – A fixed nuclear source randomly placed in the area

Number of Vehicles Needed: First Model
• Effective range of detector: 150 ft.
• False positive rate 2%
• False negative rate 5%
• Varied number of vehicles (= number of sensors) and ran at least 50 simulations for each number of vehicles.
• For each, measure the power = P(D=1|S=1) = probability of detection of a source.

Number of Vehicles (Sensors) Needed
• Sensor range=150 feet, false positive=2%, false negative=5%.
[Figure: Detection Power; Power versus Number of Sensors]
Conclusion: Need 4000 vehicles to even get 75% power.

Number of Vehicles Needed
• NYPD has 3000+ vehicles in 76 precincts in 5 boroughs. Perhaps 500 to 750 are in streets of Mid/Downtown Manhattan at one time.
• Preliminary conclusion: The number of police cars in Manhattan would not be sufficient to even give 30% power.
• So, if we want to use vehicles, we need a larger fleet, as in taxicabs.

Modified Model
• What if we have a better detector, say with an effective range of 250 ft.?
• Don’t change assumptions about false positive and false negative rates.

Number of Vehicles (Sensors) Needed
• Sensor range=250 feet, false positive=2%, false negative=5%.
[Figure: Detection Power; Power versus Number of Sensors]
Conclusion: 2000 vehicles already give 93% power.

Number of Vehicles Needed
• There are not enough police cars to accomplish this kind of coverage.
• Taxicabs could do it.
• There are other problems with our model as it relates to police cars:
  – Police cars tend to remain in their own region/precinct.
  – Police cars don’t move around as randomly or as frequently as taxicabs

Police Car Coverage
• There are roughly 20 police precincts in Manhattan (actual number = 22)
• Suppose we divide the region into 20 equal-sized and shaped subregions.
• We place k police cars in each subregion and at each time period move them to a random spot in the subregion.
• This may be a bit more realistic than placing them randomly in the entire region.
• We place a fixed nuclear source randomly in the whole region.

Police Car Coverage
• Assume number of police vehicles in use in each subregion is 25.
• A total of 500 police vehicles.
• Assume each has a detector with effective range 250 ft. and false positive, false negative rates of 2% and 5%, respectively.
• We again ran simulations.
• The power is 35%.
• Not very good.

Police Car Coverage
• Note: using 500 taxicabs and allowing them to range through the whole region gives about the same power.
• It is not yet clear whether the power will generally differ significantly if we have a fixed number of vehicles, but in one case allow them to range only through subregions, and in another allow them to range through the whole region.
• This is a research issue.

Hybrid Model: Police Cars + Taxicabs
• Keeping detectors with effective range of 250 ft., false positive and false negative rates of 2% and 5%, respectively.
• Use 500 police cars split into 25 in each of 20 regions.
• In addition, use 2000 taxicabs ranging through the whole region.
• Now get 98% power.

Effect of False Positive, False Negative Rates: Modified Model
• We also experimented with change of false positive and false negative rates.

Different Error Rates
• Compare (false positive=5%, false negative=10%) vs. (2%,5%)
• Sensor range=250 feet
[Figure: Detection Power; Power versus Number of Sensors, with curves for errors 5%,10% and errors 2%,5%]

Next Step: Add a Random Movement Model
• Adding a movement model makes the analysis more realistic.
• We take a street network.
• We assume that vehicles move along until they hit an intersection.
• At each intersection, they continue straight or turn left or right according to a random process.

Screen Shot of Simulation Tool for Street Grids & Traffic Movements
[Figure: “Arena” simulation software]

Detectors in Vehicles – Simulation
• Take a 25 by 25 block region
  – Roughly the lower Manhattan/Wall Street area.
  – Use our simulator tool with vehicle movements

Detectors in Vehicles - Simulation
• Sensor range: 75 ft. to 250 ft. (.75 units to 2.50 units)
• False positive (FPR) and false negative (FNR) rates: (2%, 2%) and (5%, 5%)
• One stationary nuclear source in the study region.
• Vary # of sensors (vehicles) from 500 to 3,000 with increments of 500.
  – Repeat each setting 500 times and compute the percentage of correctly detecting the true source within a certain period of time.
  Criteria:
    – The detected cluster covers the true source location.
    – The cluster is statistically significant.

Detection Power: error rates - (5%,5%)
Power = 1 – Type II error = 1 – probability of missing detection = P(D=1|S=1)
Note: power = 70% when number of sensors (vehicles) is 1500 with mid-ranged sensors.
[Figure: Power versus Number of Sensors, with curves for r=0.75, r=1.00, r=1.25]

Detection Power: error rates - (2%,2%)
Note: power = 91% when number of sensors is 1500 with mid-ranged sensors (and almost 100% with higher-ranged sensors)
[Figure: Power versus Number of Sensors, with curves for r=0.75, r=1.00, r=1.25]

Comparing to Earlier Results
• Note: We saw that power = 91% when number of sensors is 1500 with mid-ranged sensors (range 100 ft.) (and almost 100% with higher-ranged sensors, range 125 ft.) with error rates (2%,2%)
• Is this inconsistent with our earlier work (without movement model), where we concluded that with a 150 ft. range and error rates (2%,5%), 4000 vehicles are needed to even get 75% power?
• No: earlier work had a much larger area being covered.

Detection Power: error rates - (2%,2%) (Police Car Scenario: Higher Range)
Power < 30% with 300-400 sensors (200 ft or 250 ft, 2% error rate)
You would need 500 sensors to reach 70% power with 250 ft. range
# of Police Patrol Cars in each precinct is about 25 to 50
The study region is roughly a precinct (25 by 25 blocks)
Therefore police cars alone are not enough for detection even with more powerful detectors.
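The power estimates in this section come from simulation, and the general recipe can be sketched as follows. This is a simplified stand-in, not the project's simulator (which used the spatclus package and a street-grid movement model). Assumptions: vehicles are re-placed uniformly at random, the signal is a step function of distance (S=1 only within the detector range), the scan statistic is the largest alarm count in a grid of non-overlapping 500 ft cells, and a detection is counted only when that count exceeds a simulated no-source threshold and the top cell is adjacent to the source. The names `max_cell_count`, `estimate_power`, and `CELL` are hypothetical, and the numbers it prints are not expected to reproduce the slides.

```python
import random
from collections import Counter

WIDTH, HEIGHT = 4000.0, 10000.0  # study area in feet (the illustrative example's size)
CELL = 500.0                     # assumed scan-window size (hypothetical choice)


def max_cell_count(n_sensors, rng, det_range, fpr, fnr, source=None):
    """Simulate one time period; return (largest alarm count in any cell, that cell)."""
    counts = Counter()
    for _ in range(n_sensors):
        x, y = rng.uniform(0, WIDTH), rng.uniform(0, HEIGHT)
        in_range = (source is not None and
                    (x - source[0]) ** 2 + (y - source[1]) ** 2 <= det_range ** 2)
        # In range: the detector alarms unless it misses (probability fnr).
        # Out of range: it alarms only on a false positive (probability fpr).
        if rng.random() < (1.0 - fnr if in_range else fpr):
            counts[(int(x // CELL), int(y // CELL))] += 1
    if not counts:
        return 0, None
    cell, count = counts.most_common(1)[0]
    return count, cell


def estimate_power(n_sensors, det_range=250.0, fpr=0.02, fnr=0.05,
                   trials=100, alpha=0.05, seed=0):
    """Monte Carlo estimate of the probability of detecting a randomly placed source."""
    rng = random.Random(seed)
    # Null distribution of the scan statistic: no source present.
    null_stats = sorted(max_cell_count(n_sensors, rng, det_range, fpr, fnr)[0]
                        for _ in range(trials))
    threshold = null_stats[min(trials - 1, int((1 - alpha) * trials))]
    hits = 0
    for _ in range(trials):
        source = (rng.uniform(0, WIDTH), rng.uniform(0, HEIGHT))
        stat, cell = max_cell_count(n_sensors, rng, det_range, fpr, fnr, source)
        src_cell = (int(source[0] // CELL), int(source[1] // CELL))
        near_source = (cell is not None and
                       abs(cell[0] - src_cell[0]) <= 1 and
                       abs(cell[1] - src_cell[1]) <= 1)
        # A detection needs a significant cluster that also covers the source.
        if stat > threshold and near_source:
            hits += 1
    return hits / trials


for n in (500, 2000):
    print(n, "sensors -> estimated power", estimate_power(n))
```

Varying `n_sensors`, `det_range`, `fpr`, and `fnr` in this sketch mirrors the way the slides vary fleet size, detector range, and error rates, although a moving-window scan and a realistic movement model would change the numbers.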
[Figure: Power versus Number of Sensors (300 to 1500), with curves for r=200 and r=250]

Additional Work on Vehicles
• There are many more explorations we are working on:
  – Modifying the key parameters of effective range and false positive and false negative rates
  – Exploring hybrid models with some taxis and some police cars
  – Exploring a hybrid model that includes some stationary detectors
  – Inserting more than one source
  – Bringing in moving sources
  – Exploring reports over x successive time periods for varying x
  – Exploring different decision rules for reports over x time periods.

Detectors in Cell Phones
• Similar ideas for placing sensors in cell phones have been proposed and tested by the Radiation Laboratory at Purdue University and at Lawrence Livermore.
• At a meeting with the NYC Police Department, where we presented our taxicab and police car work, we were encouraged to explore applying our methods to the cell phone idea.

Crime Information Management: Enhancing Force Deployment in Law Enforcement and Counter-Terrorism Using Data & Visual Analytics
Work of Bill Pottenger and colleagues at CCICADA, PNNL, START, INTUIDEX
Sponsored by DHS
In collaboration with the Port Authority of NY and NJ

A Joint Project Of:
DHS Center for the Study of Terrorism and Responses to Terrorism, University of Maryland

PANYNJ Project
• We have been working with the Port Authority of New York and New Jersey to bring them enhanced analytics to support risk and threat assessment from their existing data.
• Goal: an integrated, real-time, crime information management system.

Motivation
• Information is critical in Law Enforcement today
  – Potential: better informed decisions on threat and risk assessment, force deployment, officer safety, crime and terrorism prevention, ...
  – Challenges: volume, quality, access, distribution, formats
• Marry law enforcement and counter-terrorism initiatives to aid in decision making
  – Data Collection
  – Secure Sharing
  – Data Analysis
• Build on “CompStat” system in use by PANYNJ police & other PDs.

CompStat Next Generation
• CompStat NG™ (Next Generation)
• Provides crime and counter-terrorism information in an enterprise architecture (web-based)
• Employs advanced visual and data analytics for crime analysis and counterterrorism for use in force deployment
• Enables real-time situational awareness on any networked device (Assessment Wall, PC, smart phone)
  – Integrates Key S&T Technologies
  – Aimed at enabling dramatic improvements in fighting crime and in counter-terrorism

Step 1: Existing IT and Data Access
• Database on old legacy VAX, separate network
• Migrate data from old database to workstation network
• Data cleansing
  – Recognize anomalies and notify PANYNJ staff
  – Cleaned on future extractions
• Host in central Data Warehouse
  – Parallel with production system

CompStat NG™ Data Warehouse
• Incorporate information and support real-time updating from
  – Global Terrorism Database @ START
  – PAPD Access/SQL/Oracle DBs, etc.
• Integrate multiple sources of information
• Integrate information extraction capability from police incident data and the GTDB

Step 2: Determine R&D Technology to Deploy
• Most agencies have enormous quantities of unprocessed, inaccessible textual data
• Information extraction technology is needed in the law enforcement community
• Data is distributed in a “System of Systems” (David Boyd, DHS OIC)
  – Continuously growing amount of data
  – 75% Untapped
  – Network sophistication of offenders is growing (globalization, interjurisdictional crime / terrorism)
  – Consolidation & coordination of data is needed

Entity Extraction Technology
• Intuidex’s IxEEE™ engine
• Extracts textual named entities from virtually any data source and puts them to work as useful data
• Bridges the gap between unstructured & structured data
• Puts formerly “buried” data to work

Entity Extraction from Law Enforcement Data
• Data Entry Validation for PAPD Incident Report Data Entry Application
• Automatic Database Population
• Extraction from Police Narratives, GTDB News Sources

CompStat NG™ Visual Analytics
• PNNL Law Enforcement Information Framework (LEIF)
• PNNL IN-SPIRE Assessment Wall

[Figure: Assessment Wall]

Step 3: Develop On and Off-Site
• On-Site
  – Deployments and Integration of System
• Off-Site
  – Commanding Officer’s Application (COs App)
      Integration of Vital Information
      Summary Crime Statistics
      Recap reports on demand

[Figure: CompStat NG™ COs App]

Selected Project Results
• Deployments at PANYNJ
  – CompStat NG™ Data Warehouse
  – Commanding Officer’s Application
  – Law Enforcement Information Framework
  – IN-SPIRE Assessment Wall
• Ongoing research at Rutgers
  – Knowledge Discovery – using Higher Order Naïve Bayes machine learning methods
• Have Developed New Capabilities
  – On-demand statistics reports
  – Real-time Web-based analytics tools
  – Automatic data entry validation

R&D Next Steps: Example: MO Search
• Leverage technology for more helpful modus operandi (MO) search
/ matching in LEIF
  – Modus operandi: a particular way or method of doing something (e.g., perpetrating a crime)
  – Very common law enforcement activity
  – Criminals tend to follow same MO
• Based on prior NIJ funded BPD_MO project
• Able to be invoked from LEIF (browser; service)

Closing Comment
• PANYNJ has adopted this vision as part of their strategic long-term goals
• PA Executive Director Tony Shorris’ Goal: A “national model” for counter-terrorism and crime prevention and analysis!
• Similar methods under discussion with police departments nationwide

Biosurveillance: Early Warning from Entropy
Initiated as a DHS MSI Funded Summer Program through DyDAn
Continuing under DHS-DyDAn-CCICADA Support
Work of Nina Fefferman, Abdul-Aziz Yakubu, Asamoah Nkwanta, Ashley Crump, Devroy McFarlane, Anthony Ogbuka, Nakeya Williams

A New Possibility For Biosurveillance: Entropy
• Early detection of disease outbreaks is critical for public health.
• Entropy quantifies the amount of information communicated within a signal
• Signal strength may change when an outbreak starts
• We are hoping to detect changes in signal strength early into the onset of an outbreak
[Image: smallpox]

Our Ultimate Goal: Effective Biosurveillance
Shannon’s Entropy Formula:
$$H(X) = E(I(X)) = -\sum_{i=1}^{n} p(x_i) \log_2 p(x_i)$$
I(X) is the information content of X
p(x_i) = Pr(X = x_i) is the probability mass function of X
We want to be able to take incoming disease data and, as early as possible, notice when an outbreak is starting
[Figure: Incidence versus Week]

Biosurveillance Methods
• Current methods of outbreak detection are often hit or miss.
• A frequently used method: CuSum
  – Compares current cumulative summed incidence to average
  – Needs a lot of historical “non-outbreak” data (bad for newly emerging threats)
  – Has to be manually “reset”
• Other methods have similar problems

Biosurveillance Using Entropy
• We apply 3 preprocessing steps to the reported incidence data
• We stream the processed data through our entropy calculation
[Figure: Reported Incidence Data; Incidence versus Week]
[Figure: Entropy Outcome; Entropy versus Iteration]

Biosurveillance Using Entropy: The 3 Preprocessing Steps
1. Binning the Incidence Data: Number of categories
2. Analyzing within a Temporal Window: Number of time points lumped into one observation
3. Moving the temporal window according to different Step Sizes

Binning
• Assign each “count” to a bin or category.
• Binning lets us try to focus on biologically meaningful differences.
Weekly Disease Incidence (Number of Cases)
Data:        3, 2, 4, 5, 8, 10, 12, 40, 35, 17, 37, 20, 23, 25, 4,…
Binned Data: 1, 1, 1, 1, 2, 2, 2, 4, 4, 3, 4, 3, 3, 3, 1
(Bins are labeled Bin 1 to Bin 4.)

Method of Binning can Really Change the Outcome
[Figure: Method 1, number of bins = 2; Entropy versus Iteration]
[Figure: Method 2, number of bins = 14; Entropy versus Iteration]

Window Size
The window for the number of data points to look at at one time should be large enough to detect when a change has happened (some data from “before” and some from “after” the outbreak starts), but small enough that it can’t entirely contain a rapid peak.
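The binning step from the Binning slide can be sketched with a simple threshold lookup. The slide does not state the bin boundaries, so the cut points 7, 15, and 30 below are assumptions chosen only because they reproduce the slide's binned sequence; the function name `bin_counts` is likewise hypothetical.

```python
from bisect import bisect_right

# Assumed upper cut points between Bin 1/2, Bin 2/3 and Bin 3/4 (not given on the slide).
BIN_EDGES = [7, 15, 30]


def bin_counts(counts, edges=BIN_EDGES):
    """Map each weekly case count to a 1-based bin label."""
    return [bisect_right(edges, c) + 1 for c in counts]


weekly_cases = [3, 2, 4, 5, 8, 10, 12, 40, 35, 17, 37, 20, 23, 25, 4]
binned = bin_counts(weekly_cases)
print(binned)  # [1, 1, 1, 1, 2, 2, 2, 4, 4, 3, 4, 3, 3, 3, 1]
```

Changing the number or position of the cut points changes the symbol alphabet that the entropy is computed over, which is one reason the choice of binning method affects the outcome so strongly.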
Window Size = 7, Step Size = 1
Incidence Data: 3, 2, 4, 5, 8, 10, 12, 40, 35, 17, 37, 20, 23, 25, 4,…
Calculate Entropy: E(1)
[Figure: Disease Incidence versus Weeks]

Window Size can Also Have a Huge Impact
[Figure: Method 1, window size = 3; Entropy versus Iteration]
[Figure: Method 2, window size = 14; Entropy versus Iteration]

Step Size
We allow windows to overlap. The window might need to ‘walk along’ the data, not just expand to always include more and more history. Step size tells us how continuous the process is (e.g., how much overlap with the last window).
Window Size = 7, Step Size = 1
Incidence Data: 3, 2, 4, 5, 8, 10, 12, 40, 35, 17, 37, 20, 23, 25, 4,…
Calculate Entropy: E(1), E(2), etc. for all the data

Step Size
Adjusting step size can eliminate glitches like weekends and holidays in daily datasets.
[Figure: Entropy versus Iteration for step size = 1 and for step size = 5]

Computing an Entropy Output
We produce a new data stream by doing this over again, walking the window along the binned data, using our step size.
Window Size = 6, Step Size = 1
Incidence Data: 3, 2, 4, 5, 8, 10, 12, 40, 35, 17, 37, 20, 23, 25, 4,…
Calculate Entropy: E(1), E(2), E(3), E(4), E(5), E(6), E(7), E(8), E(9), …
(E(9) comes from the 9th window, since step size is 1.)

Biosurveillance using Entropy
• Our preliminary results show this method can work.
• Favorable when compared to CuSum and other methods.
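The window-and-step procedure can be sketched as below. One assumption is made explicit: the probabilities in the entropy are taken to be the empirical frequencies of the bin labels inside each window, which the slides do not spell out. The function names `shannon_entropy` and `entropy_stream` are hypothetical, and the input is the binned example sequence from the Binning slide.

```python
import math
from collections import Counter


def shannon_entropy(symbols):
    """Entropy in bits of the empirical distribution of the symbols."""
    n = len(symbols)
    # sum of p * log2(1/p), written with n/c so every term is non-negative
    return sum((c / n) * math.log2(n / c) for c in Counter(symbols).values())


def entropy_stream(binned, window_size, step_size):
    """Walk a fixed-size window along the binned data; return E(1), E(2), ..."""
    last_start = len(binned) - window_size
    return [shannon_entropy(binned[start:start + window_size])
            for start in range(0, last_start + 1, step_size)]


binned = [1, 1, 1, 1, 2, 2, 2, 4, 4, 3, 4, 3, 3, 3, 1]
stream = entropy_stream(binned, window_size=6, step_size=1)
for k, value in enumerate(stream, start=1):
    print(f"E({k}) = {value:.3f}")
```

With 15 binned points, a window of 6 and a step of 1, the sketch yields 10 entropy values; raising `step_size` to 5 leaves only the windows starting at positions 0 and 5, which shows how the step size controls the overlap between successive windows.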
[Figure: Incidence versus Week (top) and Entropy versus Window Count (bottom)]
We need more work to test it to make sure it’s sensitive and specific enough

Biosurveillance using Entropy
Next step: Make selection of preprocessing parameters automatic
• Right now, all parameters (number of bins, how to bin, how large the window size, how long the step size) are determined manually by trial and error
• To make this useful for actual surveillance, we are working to design algorithms to select optimal parameters for these three preprocessing steps based on small samples of training data and known outbreak definitions

Privacy Preserving Data Analysis
Work of Rebecca Wright and colleagues at Rutgers and elsewhere.
Partner Institutions: Rutgers, Yale, Stanford, University of New Mexico, NYU
Sponsors: National Science Foundation; DHS through DyDAn/CCICADA

Data Privacy
• Privacy-preserving methods of data handling seek to provide sufficient privacy as well as sufficient utility.
• Complications:
  – Multiple data sources
  – Ability to infer information by combining multiple sources
  – Difficult to understand extent to which limited personal identifiers still allow identification
      Sweeney: 87% of the US population can be uniquely identified by their date of birth, 5-digit zip code, and gender.
  – Privacy means different things to different people and different societies and in different situations

Advantages of Privacy Protection
• Protection of personal information: protects individuals and helps maintain their trust
• Protection of proprietary or government-sensitive information
• Enables collaboration between different data holders (since they may be more willing or able to collaborate if they need not reveal their information)
• Compliance with legislative policies (e.g., HIPAA, EU privacy directives)

Secrecy vs.
Privacy
Encryption works reasonably well to protect secrecy of data in transit and in storage.
Alice encrypts message m: $c = E_K(m)$. Bob decrypts c to obtain m.
In contrast, privacy is about what Bob can and will do with m.

Some of Our Privacy Preserving Data Analysis Work
Our work uses a distributed cryptographic approach.
• Privacy-preserving construction of Bayesian networks from vertically partitioned data.
• Privacy-preserving frequency mining in the fully distributed model (enables naïve Bayes classification, decision trees, and association rule mining).
• An experimental platform for PPDA.
• Privacy-preserving clustering: k-means clustering for arbitrarily partitioned data and a divide-and-merge clustering algorithm for horizontally partitioned data.
• Privacy-preserving reinforcement learning, partitioned by observation or by time.

Sample Future Direction
• Incorporate differential privacy [Dwork, et al.] into graph analysis
• Differential privacy is a measure of the increased risk to privacy incurred by participating in a statistical database
• The literature provides methods for achieving any desired degree of privacy under this measure.

Climate Change
An Area with Vast Homeland Security Challenges
Work of Nina Fefferman, Endre Boros, Melike Gursoy
Sponsored by Dept. of Defense

Climate Change
Concerns about global warming.
Resulting impact on health
– Of people
– Of animals
– Of plants
– Of ecosystems

Climate Change
• Some early warning signs:
– 1995 extreme heat event in Chicago: 514 heat-related deaths, 3300 excess emergency admissions
– 2003 heat wave in Europe: 35,000 deaths
– Food spoilage on Antarctica expeditions: not cold enough to store food in the ice

Climate Change
• Some early warning signs:
– Malaria in the African Highlands
– Dengue epidemics
– Floods, hurricanes

Climate Change is a Homeland Security Problem
• Potential for future conflicts over shortages of
– Potable water and water for agriculture
– Land due to flooding
– Food due to different growing seasons or unavailability of land
• Potential for spread of disease to new places
• Potential for civil unrest
[Images: Dengue fever; habitat change for mosquitoes]

SEES: Science, Engineering, and Education for Sustainability
• New US National Science Foundation Initiative
• SEES integrates issues of environment, energy, and economics, with an emphasis on climate change.
• SEES is concerned with the 2-way interaction of human activity with environmental processes.
• Combining existing and new monies, the SEES budget is $660M in FY10 and est. $765M in FY11.
• Major data science challenges in SEES

SEES: Science, Engineering, and Education for Sustainability
“The two-way interaction of human activity with environmental processes now defines the challenges to human survival and wellbeing. Human activity is changing the climate and the ecosystems that support human life and livelihoods.”
[Image: Dust storm in Mali]

SEES: Science, Engineering, and Education for Sustainability
“Reliable and affordable energy is essential to meet basic human needs and to fuel economic growth, but many environmental problems arise from the harvesting, generation, transport, processing, conversion, and storage of energy.”

SEES: Science, Engineering, and Education for Sustainability
“Climate change is a pressing anthropogenic stressor, but it is not the only one.
The growing challenges associated with climate change, water and energy availability, emerging infectious diseases, invasive species, and other effects linked to anthropogenic change are causing increasing hardship and instability in natural and social ecologies throughout the world.”

Sustainability
• A key is to define “sustainability” and to develop metrics of our progress toward a sustainable lifestyle
• There are a great many data science challenges in climate change science and in sustainability science

Climate Change
• Some of the key areas where data science challenges arise:
– Dimensions of Biodiversity
– Water Sustainability and Climate
– Ocean Acidification
– Decadal and Regional Climate Prediction Using Earth System Models

Climate Change
• Data science is relevant to all of these areas.

Sample Research Area: Extreme Events due to Global Warming
Similar interests at CDC’s new math modeling program
We anticipate an increase in the number and severity of extreme events due to global warming.
• More heat waves.
• More floods, hurricanes.

Problem 1: Evacuations during Extreme Heat Events
One response to such events: evacuation of the most vulnerable individuals to climate-controlled environments.
Modeling challenges:
• Where to locate the evacuation centers?
• Whom to send where?
• Goals include minimizing travel time and keeping facilities within their maximum capacity

Problem 2: Rolling Blackouts during Extreme Heat Events
A side effect of such events: extremes in energy use lead to the need for rolling blackouts.
Modeling challenges:
• Understanding health impacts of blackouts and bringing them into models
• Designing efficient rolling blackouts while minimizing impact on health:
– Lack of air conditioning
– Elevators out of service: over-exertion by vulnerable people
– Food spoilage
• Minimizing impact on the most vulnerable populations

Problem 3: Pesticide Applications after a Flood
• Pesticide applications are often called for after a flood
• Chemicals used for pesticidal and larvicidal control of mosquito disease vectors are themselves harmful to humans
• Maximize insect control while minimizing health effects

Problem 4: Emergency Rescue Vehicle Routing to Avoid Rising Flood Waters
Route emergency rescue vehicles to avoid rising flood waters while still minimizing delay in the provision of medical attention and still getting afflicted people to available hospital facilities

Optimal Locations for Shelters in Extreme Heat Events
• Work based in Newark, NJ
• Data include locations of potential shelters, travel distance from each city block to potential shelters, and population size and demographic distribution on each city block.
• Determined “at risk” age groups and the likely levels of healthcare they need to avoid serious problems
• Computing optimal routing plans for the at-risk population to minimize adverse health outcomes and travel time
• Using techniques of probabilistic mixed integer programming and aspects of location theory constrained by shelter capacity (based on predictions of duration, onset time, and severity of heat events)

Pesticides after Floods: Maximal Insect Control, Minimal Chemical Exposure
• We are working to compute optimal application patterns for control chemicals
• Minimizing:
– Disease risk from vector-borne diseases
– Adverse health outcomes from human exposure to control chemicals
• Using techniques of:
– Control Theory
– Stochastic Simulation

Thank you!