Sketching and Streaming Entropy via Approximation Theory

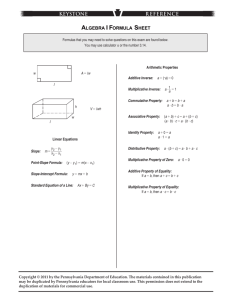


Nick Harvey (MSR/Waterloo), Jelani Nelson (MIT), Krzysztof Onak (MIT)

Streaming Model
[Figure: a stream of $m$ updates modifies a vector $x \in \mathbb{Z}^n$, which is fed to the Algorithm]
• Goal: Compute statistics, e.g. $\|x\|_1$, $\|x\|_2$, …
• Trivial solution: Store $x$ (or store all updates), using $O(n \cdot \log m)$ space
• Goal: Compute using $O(\mathrm{polylog}(nm))$ space

Streaming Algorithms (a very brief introduction)
• Fact: [Alon-Matias-Szegedy ’99], [Bar-Yossef et al. ’02], [Indyk-Woodruff ’05], [Bhuvanagiri et al. ’06], [Indyk ’06], [Li ’08], [Li ’09]
  Can compute $(1 \pm \varepsilon)\|x\|_p^p = (1 \pm \varepsilon)F_p$ using
  – $O(\varepsilon^{-2} \log^c n)$ bits of space (if $0 \le p \le 2$)
  – $O(\varepsilon^{-O(1)}\, n^{1-2/p} \cdot \log^{O(1)} n)$ bits (if $2 < p$)
• Another Fact: Mostly optimal: [Alon-Matias-Szegedy ’99], [Bar-Yossef et al. ’02], [Saks-Sun ’02], [Chakrabarti-Khot-Sun ’03], [Indyk-Woodruff ’03], [Woodruff ’04]
  – Proofs using communication complexity and information theory

Practical Motivation
• General goal: Dealing with massive data sets
  – Internet traffic, large databases, …
• Network monitoring & anomaly detection
  – Stream consists of internet packets
  – $x_i$ = # packets sent to port $i$
  – Under typical conditions, $x$ is very concentrated
  – Under “port scan attack”, $x$ is less concentrated
  – Can detect by estimating empirical entropy [Lakhina et al. ’05], [Xu et al. ’05], [Zhao et al. ’07]

Entropy
• Probability distribution $a = (a_1, a_2, \ldots, a_n)$
• Entropy $H(a) = -\sum_i a_i \lg(a_i)$
• Examples:
  – $a = (1/n, 1/n, \ldots, 1/n)$ : $H(a) = \lg(n)$
  – $a = (0, \ldots, 0, 1, 0, \ldots, 0)$ : $H(a) = 0$
• small when concentrated, LARGE when not

Streaming Algorithms for Entropy
• How much space to estimate H(x)?
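  – [Guha-McGregor-Venkatasubramanian ’06], [Chakrabarti-Do Ba-Muthu ’06], [Bhuvanagiri-Ganguly ’06]
  – [Chakrabarti-Cormode-McGregor ’07]:
    multiplicative $(1 \pm \varepsilon)$ approx: $O(\varepsilon^{-2} \log^2 m)$ bits
    additive $\varepsilon$ approx: $O(\varepsilon^{-2} \log^4 m)$ bits
    $\tilde{\Omega}(\varepsilon^{-2})$ lower bound for both
• Our contributions:
  – Additive $\varepsilon$ or multiplicative $(1 \pm \varepsilon)$ approximation
  – $\tilde{O}(\varepsilon^{-2} \log^3 m)$ bits, and can handle deletions
  – Can sketch entropy in the same space

First Idea
• If you can estimate $F_p$ for $p \approx 1$, then you can estimate $H(x)$
• Why? Rényi entropy

Review of Rényi
• Definition: $H_p(x) = \frac{1}{1-p} \log\left( \|x\|_p^p \,/\, \|x\|_1^p \right)$
[Figure: plot of $H_p(x)$ against $p$ (axis marks 0, 1, 2, …); portraits of Claude Shannon and Alfréd Rényi]
• Convergence to Shannon: $\lim_{p \to 1} H_p(x) = H(x)$

Overview / Analysis of Algorithm
• Set $p = 1.01$ and let $\tilde{x} = x / \|x\|_1$
• Compute $y = (1 \pm \varepsilon)\|\tilde{x}\|_p^p$ (using Li’s “compressed counting”)
• Set $\tilde{H} = \frac{1}{1-p}\log(y) = \frac{\log(\|\tilde{x}\|_p^p)}{1-p} + \frac{\log(1 \pm \varepsilon)}{1-p}$
• So $\tilde{H} = H_{1.01}(\tilde{x}) \pm 100\varepsilon \approx H(\tilde{x}) \pm 100\varepsilon$
• As $p \to 1$, the approximation $H_p(\tilde{x}) \approx H(\tilde{x})$ gets better, but the error term $\varepsilon / |1-p|$ gets worse!

Making the tradeoff
• How quickly does $H_p(x)$ converge to $H(x)$?
• Theorem: Let $\tilde{x}$ be a distribution with $\min_i \tilde{x}_i \ge 1/m$.
  – Multiplicative Approximation: Let $1 < p \le 1 + O\left(\frac{\varepsilon}{\log m}\right)$. Then $1 \le \frac{H(\tilde{x})}{H_p(\tilde{x})} \le 1 + \varepsilon$
  – Additive Approximation: Let $1 < p \le 1 + O\left(\frac{\varepsilon}{\log^2 m}\right)$. Then $0 \le H(\tilde{x}) - H_p(\tilde{x}) \le \varepsilon$
• Plugging in: $O(\varepsilon^{-3} \log^4 m)$ bits of space suffice for additive $\varepsilon$ approximation

Proof: A trick worth remembering
• Let $f : \mathbb{R} \to \mathbb{R}$ and $g : \mathbb{R} \to \mathbb{R}$ be such that $\lim_{p \to 1} f(p) = 0$, $\lim_{p \to 1} g(p) = 0$, and $\lim_{p \to 1} \frac{f'(p)}{g'(p)} = L$
• L’Hôpital’s rule says that $\lim_{p \to 1} \frac{f(p)}{g(p)} = L$
• It actually says more! It says $\frac{f(p)}{g(p)}$ converges to $L$ at least as fast as $\frac{f'(p)}{g'(p)}$ does.

Improvements
• Status: additive $\varepsilon$ approx using $O(\varepsilon^{-3} \log^4 m)$ bits
• How to reduce space further?
  – Interpolate with multiple points: $H_{p_1}(x)$, $H_{p_2}(x)$, ...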
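The convergence of Rényi entropy to Shannon entropy, which the single-point estimate relies on, can be checked numerically. The following is a minimal sketch, not the streaming algorithm itself: it assumes the frequency vector is held in memory and computes the moments exactly, whereas the slides obtain a $(1 \pm \varepsilon)$ estimate of the moment from a sketch. The function names and the example vector are illustrative assumptions.

```python
import math


def shannon_entropy(freqs):
    """Exact Shannon entropy (in bits) of the empirical distribution x / ||x||_1."""
    total = sum(freqs)
    return -sum((f / total) * math.log2(f / total) for f in freqs if f > 0)


def renyi_entropy(freqs, p):
    """Exact Renyi entropy H_p (in bits): log(||x||_p^p / ||x||_1^p) / (1 - p)."""
    if p == 1:
        raise ValueError("H_p is defined for p != 1; use shannon_entropy for the limit")
    total = sum(freqs)
    # Moment of the normalized vector, i.e. ||x||_p^p / ||x||_1^p.
    moment = sum((f / total) ** p for f in freqs if f > 0)
    return math.log2(moment) / (1 - p)


if __name__ == "__main__":
    x = [9, 2, 5, 1, 12]  # hypothetical frequency vector
    print("Shannon entropy:", shannon_entropy(x))
    for p in (2.0, 1.5, 1.1, 1.01, 1.001):
        # H_p increases toward H(x) as p decreases toward 1.
        print("p =", p, "Renyi entropy:", renyi_entropy(x, p))
```

With exact moments, choosing $p$ closer to 1 only helps. The tradeoff on the slides appears once the moment is known only up to a $(1 \pm \varepsilon)$ factor, because the error is then divided by $|1 - p|$.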
[Figure: plot of $H_p(x)$ against $p$ (axis marks 0, 1, 2, …); legend: Shannon, Single Rényi, Multiple Rényis]

Analyzing Interpolation
[Figure: plot of $H_p(x)$ against $p$ (axis marks 0, 1, 2)]
• Let $f(z)$ be a $C^{k+1}$ function
• Interpolate $f$ with polynomial $q$ with $q(z_i) = f(z_i)$, $0 \le i \le k$
• Fact: $|f(y) - q(y)| \le (b-a)^{k+1} \cdot \sup_{z \in [a,b]} |f^{(k+1)}(z)|$, where $y, z_i \in [a,b]$
• Our case: Set $f(z) = H_{1+z}(x)$
• Goal: Analyze $f^{(k+1)}(z)$ …

Bounding Derivatives
• Rényi derivatives are messy to analyze
• Switch to Tsallis entropy: $f(z) = S_{1+z}(\tilde{x})$, where $S_p(\tilde{x}) = \frac{1 - \|\tilde{x}\|_p^p}{p-1}$
• Can prove Tsallis also converges to Shannon
• Define: $G_k(z) = \sum_{i=1}^{n} \tilde{x}_i^{1+z} \log^k(\tilde{x}_i)$
• Then $f^{(k)}(z) = \frac{(-1)^k\, k!\, (1 - G_0(z))}{z^{k+1}} - \sum_{j=1}^{k} \frac{(-1)^{k-j}\, k!\, G_j(z)}{z^{k-j+1}\, j!}$
• Fact: $\sup_{z \in [a,b]} |f^{(k+1)}(z)| = O\left(H(x) \log^{k+1} m\right)$ (when $a = -O(1/(k \cdot \log m))$, $b = 0$)
• So we can set $k = \log(1/\varepsilon) + \log\log m$

Key Ingredient: Noisy Interpolation
• We don’t have $f(z_i)$, we have $f(z_i) \pm \varepsilon$
• How to interpolate in presence of noise?
• Idea: we pick our $z_i$ very carefully

Chebyshev Polynomials
• $T_k(x) = \cos(k \arccos(x))$
• Rogosinski’s Theorem: $q(x)$ of degree $k$ and $|q(\beta_j)| \le 1$ ($0 \le j \le k$) $\Rightarrow$ $|q(x)| \le |T_k(x)|$ for $|x| > 1$, where $\beta_j = \cos(j\pi/k)$ are the extrema of $T_k$ on $[-1,1]$
• Map $[-1,1]$ onto interpolation interval $[z_0, z_k]$
• Choose $z_j$ to be image of $\beta_j$, $j = 0, \ldots, k$
• Let $\tilde{q}(z)$ interpolate $f(z_j) \pm \varepsilon$ and $q(z)$ interpolate $f(z_j)$
• $r(z) = (\tilde{q}(z) - q(z)) / \varepsilon$ satisfies Rogosinski’s conditions!

Tradeoff in Choosing $z_k$
[Figure: $T_k$ grows quickly once leaving $[z_0, z_k]$; axis marks $z_0$, $z_k$, 0]
• $z_k$ close to 0 $\Rightarrow$ $|T_k(\mathrm{preimage}(0))|$ still small
• …but $z_k$ close to 0 $\Rightarrow$ high space complexity
• Just how close do we need 0 and $z_k$ to be?

The Magic of Chebyshev
• [Paturi ’92]: $T_k(1 + 1/k^c) \le e^{4k^{1-(c/2)}}$. Set $c = 2$.
• Suffices to set $z_k = -O(1/(k^3 \log m))$
• Translates to $\tilde{O}(\varepsilon^{-2} \log^3 m)$ space

The Final Algorithm (additive approximation)
• Set $k = \lg(1/\varepsilon) + \lg\lg(m)$, $z_j = \frac{k^2 \cos(j\pi/k) - (k^2+1)}{9k^3 \lg(m)}$ ($0 \le j \le k$)
• Estimate $\tilde{S}_{1+z_j} = \left(1 - \tilde{F}_{1+z_j} / (\tilde{F}_1)^{1+z_j}\right) / z_j$ for $0 \le j \le k$
• Interpolate degree-$k$ polynomial $\tilde{q}(z_j) = \tilde{S}_{1+z_j}$
• Output $\tilde{q}(0)$

Multiplicative Approximation
• How to get multiplicative approximation?
  – Additive approximation is multiplicative, unless $H(x)$ is small
  – $H(x)$ small $\Rightarrow$ $\|x\|_\infty$ large [CCM ’07]
• Suppose $x_{i^*} = \|x\|_\infty$ and define $RF_p = \sum_{i \ne i^*} x_i^p$
• We combine $(1 \pm \varepsilon)RF_1$ and $(1 \pm \varepsilon)RF_{1+z_j}$ to get $(1 \pm \varepsilon)f(z_j)$
• Question: How do we get $(1 \pm \varepsilon)RF_p$?
• Two different approaches:
  – A general approach (for any $p$, and negative frequencies)
  – An approach exploiting $p \approx 1$, only for nonnegative freqs (better by $\log(m)$)

Questions / Thoughts
• For what other problems can we use this “generalize-then-interpolate” strategy?
  – Some non-streaming problems too?
• The power of moments?
• The power of residual moments?
  – CountMin (CM ’05) + CountSketch (CCF ’02); HSS (Ganguly et al.)
• WANTED: Faster moment estimation (some progress in [Cormode-Ganguly ’07])
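
As a closing illustration, the steps of the final algorithm (additive approximation) can be sketched offline. This is a minimal sketch under stated assumptions, not the authors' implementation: the moments $F_{1+z_j}$ and $F_1$ are computed exactly from an in-memory vector rather than estimated from a sketch, $m$ is taken to be $\|x\|_1$ (as for an insertion-only stream of unit updates), $k$ is rounded up to an integer, and all function names are hypothetical. Because Tsallis entropy converges to Shannon entropy measured with the natural logarithm, the output is in nats.

```python
import math


def shannon_entropy_nats(freqs):
    """Exact Shannon entropy (natural log) of x / ||x||_1, used as a reference."""
    total = sum(freqs)
    return -sum((f / total) * math.log(f / total) for f in freqs if f > 0)


def interpolation_nodes(k, m):
    """Nodes z_j = (k^2 cos(j*pi/k) - (k^2 + 1)) / (9 k^3 lg m), for 0 <= j <= k."""
    denom = 9 * k ** 3 * math.log2(m)
    return [(k * k * math.cos(j * math.pi / k) - (k * k + 1)) / denom
            for j in range(k + 1)]


def tsallis_from_moments(freqs, z):
    """S_{1+z} = (1 - F_{1+z} / F_1^{1+z}) / z, here with exact moments (assumption)."""
    f1 = sum(freqs)
    fp = sum(f ** (1 + z) for f in freqs if f > 0)
    return (1 - fp / f1 ** (1 + z)) / z


def lagrange_at_zero(nodes, values):
    """Evaluate at 0 the unique polynomial of degree <= k through (nodes, values)."""
    result = 0.0
    for j, (zj, vj) in enumerate(zip(nodes, values)):
        weight = 1.0
        for i, zi in enumerate(nodes):
            if i != j:
                weight *= (0.0 - zi) / (zj - zi)
        result += vj * weight
    return result


def estimate_entropy(freqs, eps):
    """Interpolate Tsallis entropies at the nodes and extrapolate to z = 0."""
    m = sum(freqs)  # assumption: m = ||x||_1
    if m < 2:
        raise ValueError("need m >= 2 so that lg lg m is defined")
    k = max(1, math.ceil(math.log2(1 / eps) + math.log2(math.log2(m))))
    nodes = interpolation_nodes(k, m)
    values = [tsallis_from_moments(freqs, z) for z in nodes]
    return lagrange_at_zero(nodes, values)


if __name__ == "__main__":
    x = [9, 2, 5, 1, 12]  # hypothetical frequency vector
    print("interpolated:", estimate_entropy(x, 0.01))
    print("exact (nats):", shannon_entropy_nats(x))
```

With exact moments the interpolated value matches the exact entropy almost to machine precision. In the streaming setting each $\tilde{S}_{1+z_j}$ carries noise, and the Chebyshev-based choice of nodes is what keeps that noise from being amplified when extrapolating to 0.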