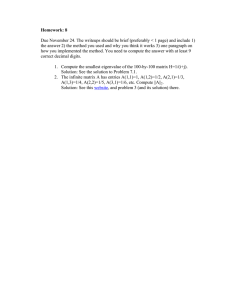

Approximating Edit Distance in Near-Linear Time

Alexandr Andoni

(MIT)

Joint work with Krzysztof Onak (MIT)


Edit Distance

For two strings x, y ∈ Σ^n:

ed(x,y) = minimum number of edit operations to transform x into y

Edit operations = insertion/deletion/substitution

Example:

ed(0101010, 1010101) = 2

Important in: computational biology, text processing, etc.
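The definition above can be checked with the textbook quadratic dynamic program. This is a minimal sketch for illustration only; the function name `edit_distance` is a placeholder, and this is the exact baseline, not the near-linear algorithm of the talk.

```python
def edit_distance(x: str, y: str) -> int:
    """Textbook dynamic program: O(len(x) * len(y)) time, O(len(y)) space."""
    # prev[j] = distance between the empty prefix of x and y[:j]
    prev = list(range(len(y) + 1))
    for i, cx in enumerate(x, start=1):
        curr = [i]  # turning x[:i] into the empty string takes i deletions
        for j, cy in enumerate(y, start=1):
            curr.append(min(
                prev[j] + 1,               # delete cx
                curr[j - 1] + 1,           # insert cy
                prev[j - 1] + (cx != cy),  # substitute (free if characters match)
            ))
        prev = curr
    return prev[-1]


print(edit_distance("0101010", "1010101"))  # 2: delete the leading 0, append a 1
```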


Computing Edit Distance

Problem: compute ed(x,y) for given x, y ∈ {0,1}^n

Exactly:

O(n^2) [Levenshtein’65]

O(n^2/log^2 n) for |Σ| = O(1) [Masek-Paterson’80]

Approximately in n^{1+o(1)} time:

n^{1/3+o(1)} approximation [Batu-Ergun-Sahinalp’06], improving over [Myers’86, BarYossef-Jayram-Krauthgamer-Kumar’04]

Sublinear time:

distinguishing ed(x,y) ≤ n^{1-ε} vs ≥ n/100 in n^{1-2ε} time [Batu-Ergun-Kilian-Magen-Raskhodnikova-Rubinfeld-Sami’03]


Computing via embedding into ℓ1

Embedding: f: {0,1}^n → ℓ_1

such that ed(x,y) ≈ ||f(x) - f(y)||_1

up to some distortion (= approximation)

Can compute ed(x,y) in the time it takes to compute f(x)

Best embedding by [Ostrovsky-Rabani’05]:

distortion = 2^{Õ(√log n)}

Computation time: ~n^2, randomized (and similar dimension)

Helps for nearest neighbor search, sketching, but not computation…


Our result

Theorem: Can compute ed(x,y) in n·2^{Õ(√log n)} time with 2^{Õ(√log n)} approximation

While it uses some ideas from the [OR’05] embedding, it is not an algorithm for computing the [OR’05] embedding


Sketcher’s hat

2 examples of “sketches” from embeddings…

[Johnson-Lindenstrauss]: pick a random k-subspace of R^n; then for any q_1, …, q_n ∈ R^n, if q̃_i is the projection of q_i, then, w.h.p., ||q_i - q_j||_2 ≈ ||q̃_i - q̃_j||_2 up to O(1) distortion, for k = O(log n).

[Bourgain]: given n vectors q_i, can construct n vectors q̃_i of dimension k = O(log^2 n) such that ||q_i - q_j||_1 ≈ ||q̃_i - q̃_j||_1 up to O(log n) distortion.
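The first example can be illustrated with a small experiment. Note the substitution: instead of projecting onto a random k-subspace, this sketch multiplies by a random Gaussian matrix scaled by 1/√k, a common stand-in with the same kind of guarantee. The names `random_projection` and `l2`, the seeds, and the sizes (8 points, dimension 200, k = 128) are arbitrary choices for the demo.

```python
import math
import random


def random_projection(points, k, seed=0):
    """Map each point to k dimensions using a scaled random Gaussian matrix."""
    rng = random.Random(seed)
    dim = len(points[0])
    scale = 1.0 / math.sqrt(k)
    matrix = [[rng.gauss(0.0, 1.0) * scale for _ in range(dim)] for _ in range(k)]
    return [[sum(a * x for a, x in zip(row, p)) for row in matrix] for p in points]


def l2(u, v):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


rng = random.Random(1)
points = [[rng.uniform(-1.0, 1.0) for _ in range(200)] for _ in range(8)]
sketches = random_projection(points, k=128)

# Ratio of sketched distance to true distance, for every pair of points.
ratios = [
    l2(sketches[i], sketches[j]) / l2(points[i], points[j])
    for i in range(len(points))
    for j in range(i)
]
print(min(ratios), max(ratios))  # both should be close to 1
```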


Our Algorithm

z = x∘y (the concatenation of x and y); z[i:i+m] denotes the length-m substring of z starting at position i

For each length m in some fixed set L ⊆ [n], compute vectors v_i^m ∈ ℓ_1 such that

||v_i^m - v_j^m||_1 ≈ ed( z[i:i+m], z[j:j+m] )

Dimension of v_i^m is only O(log^2 n)

Vectors {v_i^m} are computed recursively from {v_i^k} corresponding to shorter substrings (smaller k ∈ L)

Output: ed(x,y) ≈ ||v_1^{n/2} - v_{n/2+1}^{n/2}||_1 (i.e., for m = n/2 = |x| = |y|)


Idea: intuition

||v_i^m - v_j^m||_1 ≈ ed( z[i:i+m], z[j:j+m] )

How to compute {v_i^m} from {v_i^k} for k << m?

[OR] show how to compute some {w_i^m} with the same property, but which have very high dimension (~m)

Can apply [Bourgain] to vectors {w_i^m}

Obtain vectors {v_i^m} of polylogarithmic dimension

Incurs “only” O(log n) distortion at this step of recursion (which turns out to be ok).

Challenge: how to do this in Õ(n) time?!


Key step: from embeddings of shorter substrings to embeddings of longer substrings*

Main Lemma: fix n vectors v_i ∈ ℓ_1^k, of dimension k = O(log^2 n).

Let s < n. Define A_i = {v_i, v_{i+1}, …, v_{i+s-1}}.

Then we can compute vectors q_i ∈ ℓ_1^k such that

||q_i - q_j||_1 ≈ EMD(A_i, A_j) up to distortion log^{O(1)} n

Computing the q_i’s takes Õ(n) time.

EMD(A,B) = min-cost bipartite matching*

* cheating…
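To make the EMD definition concrete, here is a brute-force sketch that tries every perfect matching between two equal-size point sets under the ℓ_1 cost. It assumes unnormalized matching cost (the slide itself flags its definition as simplified), and the names `l1` and `emd` are placeholders. It is exponential in the set size, so it only suits tiny examples.

```python
from itertools import permutations


def l1(u, v):
    """l_1 distance between two vectors."""
    return sum(abs(a - b) for a, b in zip(u, v))


def emd(A, B):
    """Cost of the cheapest perfect matching between equal-size lists A and B."""
    assert len(A) == len(B)
    return min(
        sum(l1(a, B[j]) for a, j in zip(A, perm))
        for perm in permutations(range(len(B)))
    )


A = [(0, 0), (1, 0), (5, 5)]
B = [(5, 4), (0, 1), (1, 1)]
print(emd(A, B))  # 3: each point of A is matched to a point of B at distance 1
```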


Proof of Main Lemma

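Chain of embeddings (each step is labeled with its distortion):

EMD over n sets A_i

→ min_low ℓ_1^high, distortion O(log^2 n)

→ min_low ℓ_1^low, distortion O(1)

→ min_low tree-metric, distortion O(log n)

→ sparse graph-metric, distortion O(log^3 n)

→ ℓ_1^low, distortion O(log n) [Bourgain] (efficient)

“low” = log^{O(1)} n

Graph-metric: shortest path on a weighted graph

Sparse: Õ(n) edges

min_k M is a semi-metric on M^k with “distance” d_{min,M}(x,y) = min_{i=1..k} d_M(x_i, y_i)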
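The min-product “distance” is easy to state in code, and a three-point example shows why it is only a semi-metric. This is an illustrative sketch; `d_min` and `l1` are placeholder names, and the base space here is the real line (one-dimensional ℓ_1) with k = 2 coordinates.

```python
def l1(u, v):
    """l_1 distance between two vectors."""
    return sum(abs(a - b) for a, b in zip(u, v))


def d_min(x, y, d=l1):
    """Min-product distance: x and y are k-tuples of points of the base space."""
    return min(d(xi, yi) for xi, yi in zip(x, y))


# Three points of the min-product of two copies of the real line.
x = ((0,), (0,))
y = ((0,), (10,))
z = ((10,), (10,))

print(d_min(x, y))  # 0  (the first coordinates agree)
print(d_min(y, z))  # 0  (the second coordinates agree)
print(d_min(x, z))  # 10 (larger than 0 + 0, so the triangle inequality fails)
```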


Step 1

EMD over n sets A_i → min_low ℓ_1^high, with distortion O(log^2 n)

q.e.d.


Step 2

min_low ℓ_1^high → min_low ℓ_1^low, with distortion O(1)

Lemma 2: can embed an n-point set from ℓ_1^H into min_{O(log n)} ℓ_1^k, for k = log^3 n, with O(1) distortion.

Use weak dimensionality reduction in ℓ_1

Thm [Indyk’06]: Let A be a random* matrix of size H by k = log^3 n. Then for any x, y, letting x̃ = Ax, ỹ = Ay:

no contraction: ||x̃ - ỹ||_1 ≥ ||x - y||_1 (w.h.p.)

5-expansion: ||x̃ - ỹ||_1 ≤ 5·||x - y||_1 (with 0.01 probability)

Just use O(log n) of such embeddings

Their min is an O(1) approximation to ||x - y||_1, w.h.p.
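The last two lines follow from a short amplification argument, sketched below. It assumes t independent matrices A_1, …, A_t (names introduced here for illustration), and the constant 300 is one convenient choice rather than the talk's.

```latex
\Pr\Bigl[\min_{1\le r\le t}\|A_r x - A_r y\|_1 > 5\,\|x-y\|_1\Bigr]
  \;\le\; (1-0.01)^t \;\le\; e^{-t/100},
\qquad
t = 300\ln n \;\Longrightarrow\; e^{-t/100} = n^{-3}.
```

A union bound over the fewer than n^2 pairs of points then bounds the probability that any pair lacks a 5-expanding copy by 1/n. Since no copy contracts (w.h.p.), the minimum over the t copies lies between ||x - y||_1 and 5·||x - y||_1 for all pairs at once.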


Efficiency of Step 1+2

From steps 1+2, we get some embedding f() of sets A_i = {v_i, v_{i+1}, …, v_{i+s-1}} into min_low ℓ_1^low

Naively, it would take Ω(n·s) time to compute all f(A_i), which is Ω(n^2) when s = Θ(n)

Save using linearity of sketches:

f() is linear: f(A) = ∑_{a∈A} f(a)

Then f(A_i) = f(A_{i-1}) - f(v_{i-1}) + f(v_{i+s-1})

Compute f(A_i) in order, for a total of Õ(n) time
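The sliding-window update can be sketched as follows. The per-vector map `sketch` is a made-up linear function standing in for the real embedding, and indices are 0-based here (the slide uses 1-based indices). Only the update rule f(A_i) = f(A_{i-1}) - f(v_{i-1}) + f(v_{i+s-1}) is the point of the example.

```python
def sketch(v):
    """Hypothetical per-vector sketch f(v); a stand-in for the real embedding."""
    return [3 * v[0] + v[1], v[0] - 2 * v[1]]


def window_sketches(vectors, s):
    """f(A_i) for every window A_i = vectors[i:i+s], with O(1) sketch updates each."""
    per_vector = [sketch(v) for v in vectors]
    dim = len(per_vector[0])
    # The first window is summed directly.
    current = [sum(f[c] for f in per_vector[:s]) for c in range(dim)]
    out = [current]
    for i in range(1, len(vectors) - s + 1):
        leaving, entering = per_vector[i - 1], per_vector[i + s - 1]
        current = [c - l + e for c, l, e in zip(current, leaving, entering)]
        out.append(current)
    return out


def naive_window_sketches(vectors, s):
    """Reference version: re-sum every window from scratch, Theta(n * s) work."""
    return [
        [sum(col) for col in zip(*(sketch(v) for v in vectors[i:i + s]))]
        for i in range(len(vectors) - s + 1)
    ]


vectors = [(1, 2), (0, 5), (4, 4), (7, 1), (3, 3)]
print(window_sketches(vectors, 3) == naive_window_sketches(vectors, 3))  # True
```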


Step 3

min_low ℓ_1^low → min_low tree-metric, with distortion O(log n)

Lemma 3: can embed ℓ_1 over {0..M}^p into min_low tree-metric, with O(log n) distortion.

For each Δ = a power of 2, take O(log n) random grids. Each grid gives a min-coordinate

[Figure labels: ∞, Δ]
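The slide does not spell out the construction, so the following is only one possible reading of “random grids”, under loudly stated assumptions: each grid of cell size Δ is shifted by a random integer offset, two points sharing a cell get distance p·Δ (p = number of coordinates), points in different cells get distance ∞, and the estimate is the minimum over all grids. All function names are hypothetical. The sketch shows only that such an estimate never underestimates the ℓ_1 distance; it does not demonstrate the O(log n) distortion bound.

```python
import math
import random


def cell(point, delta, shift):
    """Index of the grid cell (side delta, integer offset shift) containing point."""
    return tuple((x + s) // delta for x, s in zip(point, shift))


def make_grids(dims, max_coord, grids_per_scale, seed=0):
    """For each power-of-two cell size, draw a few independently shifted grids."""
    rng = random.Random(seed)
    grids = []
    delta = 1
    while delta <= 2 * (max_coord + 1):
        for _ in range(grids_per_scale):
            grids.append((delta, [rng.randrange(delta) for _ in range(dims)]))
        delta *= 2
    return grids


def grid_estimate(p, q, grids):
    """Assumed per-grid distance: dims * delta if p, q share a cell, else infinity."""
    best = math.inf
    for delta, shift in grids:
        if cell(p, delta, shift) == cell(q, delta, shift):
            best = min(best, len(p) * delta)
    return best


def l1(u, v):
    """l_1 distance between two vectors."""
    return sum(abs(a - b) for a, b in zip(u, v))


grids = make_grids(dims=3, max_coord=15, grids_per_scale=4)
p, q = (1, 2, 3), (2, 2, 5)
print(l1(p, q), grid_estimate(p, q, grids))  # the estimate is never below the l_1 distance
```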


Step 4

min_low tree-metric → sparse graph-metric, with distortion O(log^3 n)

Lemma 4: suppose we have n points in min_low tree-metric, which approximates a metric up to distortion D. Can embed into a graph-metric of size Õ(n) with distortion D.


Step 5

sparse graph-metric → ℓ_1^low, with distortion O(log n)

Lemma 5: Given a graph with m edges, can embed the graph-metric into ℓ_1^low with O(log n) distortion in Õ(m) time.

Just implement [Bourgain]’s embedding:

Choose O(log^2 n) sets B_i

Need to compute the distance from each node to each B_i

For each B_i, can compute its distance to each node using Dijkstra’s algorithm in Õ(m) time
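The distance computation in the last bullet can be sketched with a multi-source Dijkstra run per set: start with every node of B_i at distance 0, and the result is the distance from each node to B_i. In this illustration the sets are fixed by hand, whereas Bourgain's construction samples them at random and rescales the coordinates; the function names and the toy path graph are assumptions made for the demo.

```python
import heapq


def distances_to_set(adj, sources):
    """Shortest-path distance from every node to the nearest node in sources."""
    dist = {v: float("inf") for v in adj}
    heap = []
    for s in sources:
        dist[s] = 0
        heap.append((0, s))
    heapq.heapify(heap)
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue  # stale heap entry
        for v, w in adj[u]:
            if d + w < dist[v]:
                dist[v] = d + w
                heapq.heappush(heap, (d + w, v))
    return dist


def set_distance_embedding(adj, sets):
    """One coordinate per set B_i: node v maps to (d(v, B_1), d(v, B_2), ...)."""
    per_set = [distances_to_set(adj, B) for B in sets]
    return {v: [d[v] for d in per_set] for v in adj}


# Undirected path a -1- b -2- c -3- d, stored as adjacency lists.
adj = {
    "a": [("b", 1)],
    "b": [("a", 1), ("c", 2)],
    "c": [("b", 2), ("d", 3)],
    "d": [("c", 3)],
}
embedding = set_distance_embedding(adj, [["a"], ["b", "d"]])
print(embedding["c"])  # [3, 2]: distance 3 to {a}, distance 2 to {b, d}
```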


Summary of Main Lemma

EMD over n sets A_i

→ min_low ℓ_1^high, distortion O(log^2 n)

→ min_low ℓ_1^low, distortion O(1)

→ min_low tree-metric, distortion O(log n)

→ sparse graph-metric, distortion O(log^3 n)

→ ℓ_1^low, distortion O(log n)

Diagram labels: “oblivious” beside the earlier steps, “non-oblivious” beside the later steps

Min-product helps to get low dimension (~small-size sketch); bypasses impossibility of dim-reduction in ℓ_1

Ok that it is not a metric, as long as it is close to a metric


Conclusion

Theorem: can compute ed(x,y) in n·2^{Õ(√log n)} time with 2^{Õ(√log n)} approximation
