

CMPU-240 • Language Theory and Automata • Spring 2016

Context Free Grammars

Not him!

…spells name wrong!

Context-Free Grammars

• We have seen finite automata and regular expressions as equivalent means to describe languages
– Simple languages only
– Regular langs vs. nonregular langs, e.g., {a^n b^n}
• Context-free grammars are a more powerful way to describe languages
– Can describe recursive structure
– Can use regex to describe strings (tokens)

CFGs

• First used to describe human languages
– Natural recursion among things like nouns, verbs, prepositions, and their phrases, because e.g. noun phrases can appear inside verb phrases and prepositional phrases, and vice versa
NP The cat
PP in
NP the hat
PP with
NP a feather …
• Important application of CFGs: specification and compilation of programming languages
– Parser describes and analyzes syntax of a program
– Algorithms exist to construct parser from CFG

Context-free Languages and Pushdown Automata

• CFLs are described by CFGs
• Include the regular languages plus others
• Pushdown automata are to CFLs as finite automata are to RLs

– Equivalent: recognize same languages

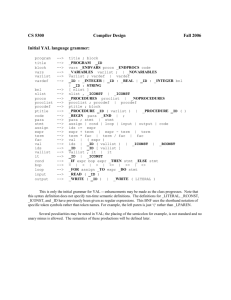

Example of a CFG

A → aAb
A → B
B → ε

• Production rules: substitutions
• Non-terminals: variables that can have substitutions
• Terminals: symbols that are part of the alphabet; no substitutions
• Start variable: left side of top-most rule

Generating strings

• Use a grammar to describe a language by generating each string as follows:
1. Write down the start variable
2. (a) Find a variable that is written down and a rule that starts with that variable (i.e., the variable is on the left of the arrow)
   (b) Replace the variable with the right side of that rule
3. Repeat step 2 until no variables remain
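The three steps above can be sketched in a few lines of Python. This is only an illustration under assumptions that are not in the slides: the grammar is stored as a dictionary, the empty string stands for ε, the leftmost variable is always the one chosen in step 2(a), and the rule is picked at random from a seeded generator.

```python
import random

# Grammar from the example slide: A -> aAb | B, B -> ε.
# Dictionary keys are the variables; every other character is a terminal.
GRAMMAR = {
    "A": ["aAb", "B"],
    "B": [""],          # the empty string stands for ε
}

def generate(grammar, start, rng):
    """Follow steps 1-3: rewrite variables until none remain."""
    form = start                      # step 1: write down the start variable
    while True:
        # step 2(a): find a variable that is written down (here: the leftmost)
        position = next((i for i, sym in enumerate(form) if sym in grammar), None)
        if position is None:          # step 3: stop once no variables remain
            return form
        body = rng.choice(grammar[form[position]])
        # step 2(b): replace the variable with the right side of the chosen rule
        form = form[:position] + body + form[position + 1:]

rng = random.Random(0)                # fixed seed, only to make runs repeatable
samples = [generate(GRAMMAR, "A", rng) for _ in range(5)]
print(samples)
```

Whatever rules happen to be chosen, every generated string consists of some number of a's followed by the same number of b's.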

Example Derivation

• Sequence of substitutions is called a derivation
• Derivation of aaabbb from grammar G:
A ⇒ aAb ⇒ aaAbb ⇒ aaaAbbb ⇒ aaaBbbb ⇒ aaaεbbb = aaabbb

G:
A → aAb
A → B
B → ε

What language is this? Hint: it is not Klingon…

Why is it not Klingon?

• Headline: Paramount Claims Crowdfunded 'Star Trek' Film Infringes Copyright to Klingon Language
www.hollywoodreporter.com/thr-esq/paramount-claimscrowdfunded-star-trek-874985
• “The lawsuit by Paramount Pictures/CBS over Axanar, a fan-funded Star Trek film, is boldly going places where no man — or Klingon — has gone before.”
• Besides this geek reference, there is… another geek reference:
– The copyrightable nature of Klingon actually became a subject in a dispute between Oracle and Google.
– Oracle looked to protect its Java API code from Google's attempt to use it in Android software. A federal appeals court ruled in May 2014 that one could copyright an API (Round n winner: Oracle).
– Round n+1: Google hasn't lost yet: the case returned to the District Court to consider a Fair Use defense.
– The author notes Charles Duan… “found a metaphor in the language of Klingon… copyrighting an API is akin to copyrighting a language, and what Oracle was doing was an attempt to stop Google from speaking a language.”
See: www.fastcompany.com/3048218/app-economy/heres-the-scariest-thing-about-theoracle-google-software-copyright-battle
https://en.wikipedia.org/wiki/Clean_room_design
www.slate.com/articles/technology/future_tense/2015/06/oracle_v_google_klingon_and_copyrighting_language.single.html

Why is it not Klingon? (part 2)

• From the Slate article (& to complete the connection) by Charles Duan:
Copyright allows an author to stop anyone else from retyping, printing, or otherwise copying the protected material. But exactly what material is Oracle claiming protection in? The declaring code: a collection of words—a vocabulary—attached to certain contextual rules as to how those words are to be used—a grammar. A vocabulary plus a grammar equals a language, “a body of words and the systems for their use.”

We now return to our regularly scheduled presentation…

CFG for English

<sentence>    → <noun-phrase> <verb-phrase>
<noun-phrase> → <cmplx-noun> | <cmplx-noun> <prep-phrase>
<verb-phrase> → <cmplx-verb> | <cmplx-verb> <prep-phrase>
<prep-phrase> → <prep> <cmplx-noun>
<cmplx-noun>  → <article> <noun>
<cmplx-verb>  → <verb> | <verb> <noun-phrase>
<article>     → a | the
<noun>        → boy | girl | flower
<verb>        → touches | likes | sees
<prep>        → with

Non-terminals in brackets <>

Terminals in lower case

| means “or”

Derivation

<sentence>
⇒ <noun-phrase> <verb-phrase>
⇒ <cmplx-noun> <prep-phrase> <verb-phrase>
⇒ <article> <noun> <prep-phrase> <verb-phrase>
⇒ the <noun> <prep-phrase> <verb-phrase>
⇒ the boy <prep-phrase> <verb-phrase>
⇒ the boy <prep> <cmplx-noun> <verb-phrase>
⇒ the boy with <cmplx-noun> <verb-phrase>
⇒ the boy with <article> <noun> <verb-phrase>
⇒ the boy with the <noun> <verb-phrase>
⇒ the boy with the girl <verb-phrase>
⇒ the boy with the girl <cmplx-verb>
⇒ the boy with the girl <verb> <noun-phrase>
⇒ the boy with the girl sees <noun-phrase>
⇒ the boy with the girl sees <article> <noun>
⇒ the boy with the girl sees the <noun>
⇒ the boy with the girl sees the flower


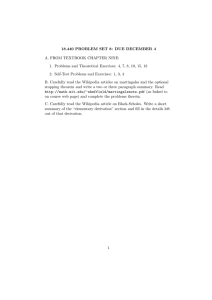

Formal CFG Definition

• Productions = rules of the form head → body
– head is a variable
– body is a string of zero or more variables and/or terminals
• Start Symbol = variable that represents "the language"
• Notation: G = (V, Σ, P, S)
– V = variables
– Σ = terminals
– P = productions
– S = start symbol

Derivations, or, The Substitution Game

• αAβ ⇒ αγβ whenever there is a production A → γ
– (Subscript with name of grammar, e.g., ⇒_G, if necessary to distinguish)
– Example: abbAS ⇒ abbaAbS
• α ⇒* β means string α can become β in zero or more derivation steps
– Examples:
• abbAS ⇒* abbAS (zero steps)
• abbAS ⇒* abbaAbS (one step)
• abbAS ⇒* abbaabb (three steps)
Greek letters stand for strings of terminals and non-terminals

Language of a CFG

• L(G) = set of terminal strings w such that S ⇒*_G w, where S is the start symbol.
• Notation
– a, b, … are terminals; …, y, z are strings of terminals
– Greek letters are strings of non-terminals (variables) and/or terminals, often called sentential forms
– A, B, … are non-terminals (variables)
– S is typically the start symbol

Leftmost/Rightmost Derivations

• We have a choice of variable to replace at each step
– Derivations may appear different only because we make the same replacements in a different order
– To avoid such differences, we may restrict the choice
• A leftmost derivation always replaces the leftmost variable in a sentential form
– Yields left-sentential forms
• Rightmost derivation defined analogously
• ⇒_lm, ⇒_rm, etc., used to indicate derivations are leftmost or rightmost

Example

• A simple example generates strings of a's and b's such that each block of a's is followed by at least as many b's
S → AS | ε
A → aAb | Ab | ab
• Note: vertical bar separates different bodies for the same head

Example

• Leftmost derivation:
S ⇒ AS ⇒ AbS ⇒ abbS ⇒ abbAS ⇒ abbaAbS ⇒ abbaabbS ⇒ abbaabb
• Rightmost derivation:
S ⇒ AS ⇒ AAS ⇒ AA ⇒ AaAb ⇒ Aaabb ⇒ Abaabb ⇒ abbaabb
Note: we derived the same string
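Both derivations can be replayed mechanically. The sketch below assumes a hypothetical helper `derive` (not from the slides) that takes a list of (head, body) productions and always rewrites either the leftmost or the rightmost variable; the empty string stands for ε.

```python
VARIABLES = {"S", "A"}   # grammar: S -> AS | ε, A -> aAb | Ab | ab

def derive(start, steps, leftmost=True):
    """Apply (head, body) productions to the leftmost or rightmost variable."""
    form = start
    forms = [form]
    for head, body in steps:
        positions = [i for i, sym in enumerate(form) if sym in VARIABLES]
        i = positions[0] if leftmost else positions[-1]
        if form[i] != head:
            raise ValueError(f"expected {head} at position {i} of {form}")
        form = form[:i] + body + form[i + 1:]
        forms.append(form)
    return forms

# The rule choices behind the two derivations on the slide.
LEFTMOST_STEPS = [("S", "AS"), ("A", "Ab"), ("A", "ab"), ("S", "AS"),
                  ("A", "aAb"), ("A", "ab"), ("S", "")]
RIGHTMOST_STEPS = [("S", "AS"), ("S", "AS"), ("S", ""), ("A", "aAb"),
                   ("A", "ab"), ("A", "Ab"), ("A", "ab")]

lm = derive("S", LEFTMOST_STEPS, leftmost=True)
rm = derive("S", RIGHTMOST_STEPS, leftmost=False)
print(" => ".join(lm))
print(" => ".join(rm))
```

The two runs use the same productions the same number of times; only the order in which variables are rewritten differs.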

Derivation Trees

• Nodes = variables, terminals, or ε
– Variables label interior nodes; terminals and ε label leaves
– A leaf can be ε only if it is the only child of its parent
• A node and its children, from the left, must form the head and body of a production

Example

S → AS | ε
A → aAb | Ab | ab
[Figure: a derivation tree for this grammar, rooted at S]

Ambiguous Grammars

• A CFG is ambiguous if one or more terminal strings have multiple leftmost derivations from the start symbol
– Equivalently: multiple rightmost derivations, or multiple parse trees
• Example
– Consider S → AS | ε, A → Ab | aAb | ab
– The string aabbb has the following two leftmost derivations from S:
1. S ⇒ AS ⇒ aAbS ⇒ aAbbS ⇒ aabbbS ⇒ aabbb
2. S ⇒ AS ⇒ AbS ⇒ aAbbS ⇒ aabbbS ⇒ aabbb
Intuitively, we can use A → Ab first or second to generate the extra b
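The claim that aabbb has exactly two leftmost derivations can be checked by brute force. The sketch below is an assumption-laden illustration, not a general ambiguity test: `count_leftmost_derivations` is a hypothetical helper, and its pruning (give up once a form has more terminals than the target, or once its terminal prefix disagrees with the target) is only known to terminate for grammars like this one, where repeated rewriting keeps adding terminals.

```python
# S -> AS | ε, A -> Ab | aAb | ab; the empty string stands for ε.
GRAMMAR = {
    "S": ["AS", ""],
    "A": ["Ab", "aAb", "ab"],
}

def count_leftmost_derivations(grammar, start, target):
    """Count the distinct leftmost derivations of target from start."""
    def count(form):
        # Locate the leftmost variable, if any.
        index = next((i for i, sym in enumerate(form) if sym in grammar), None)
        if index is None:
            return 1 if form == target else 0
        # Terminals never disappear, so too many terminals is a dead end,
        # and everything left of the leftmost variable is already final.
        terminals = sum(1 for sym in form if sym not in grammar)
        if terminals > len(target) or not target.startswith(form[:index]):
            return 0
        return sum(count(form[:index] + body + form[index + 1:])
                   for body in grammar[form[index]])
    return count(start)

print(count_leftmost_derivations(GRAMMAR, "S", "aabbb"))
print(count_leftmost_derivations(GRAMMAR, "S", "aabb"))
```

A count above 1 for any single string is enough to show the grammar is ambiguous; a count of 1 for a few strings proves nothing about the grammar as a whole.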

Two Parse Trees

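1. S ⇒ AS ⇒ aAbS ⇒ aAbbS ⇒ aabbbS ⇒ aabbb
[Figure: parse tree in which the top A uses A → aAb and its child A uses A → Ab]
2. S ⇒ AS ⇒ AbS ⇒ aAbbS ⇒ aabbbS ⇒ aabbb
[Figure: parse tree in which the top A uses A → Ab and its child A uses A → aAb]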

Inherently Ambiguous

Languages

• A CFL L is inherently ambiguous if every CFG for L is ambiguous
– Such things exist, see Sipser book
• The language of our example grammar is not inherently ambiguous, even though the grammar is ambiguous
• Change the grammar to force the extra b's to be generated last:
– Add a new variable and use it to force all a-b pairs to be generated before generating additional (optional) b's
• Example
– Before:
S → AS | ε
A → aAb | Ab | ab
– After:
S → AS | ε
A → aAb | B
B → Bb | ε

One Parse Tree

S → AS | ε
A → aAb | B
B → Bb | ε

S ⇒ AS ⇒ aAbS ⇒ aaAbbS ⇒ aaBbbS ⇒ aaBbbbS ⇒ aabbbS ⇒ aabbb
[Figure: the parse tree for aabbb, using A → aAb twice, then A → B, B → Bb, and B → ε]

Why Care?

• Ambiguity of the grammar implies that at least some strings in its language have different structures (parse trees)
• Thus, such a grammar is unlikely to be useful for a programming language, because two structures for the same string (program) imply two different meanings (executable equivalent programs) for this program
• Common example: the easiest grammars for arithmetic expressions are ambiguous and need to be replaced by more complex, unambiguous grammars
• An inherently ambiguous language would be absolutely unsuitable as a programming language, because we would not have any way of finding a unique structure for all its programs

Expression Grammar

E → E + E | E * E | a

String: a + a * a has two possible derivations (parse trees):
1. Result of addition is operand to multiplication operator: (a + a) * a
2. Result of multiplication is operand to addition operator: a + (a * a)
[Figure: the two parse trees for a + a * a]

Removal of Ambiguity

1. Enforce higher precedence for * by forcing it to occur later in the derivation
E → E + E | T
T → T * T | a
2. Eliminate right recursion for E → E + E and T → T * T to avoid arbitrary choice of rule to apply
E → E + T | T
T → T * a | a

Ambiguity removed from expression grammar

E → E + T | T
T → T * a | a

String: a + a * a has only one possible derivation (parse tree)
[Figure: the parse tree, with a * a grouped under a single T as the right operand of +]
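To see the single structure that the unambiguous grammar imposes, here is a small hand-written parser sketch. It is an illustration under assumptions, not a parser-construction algorithm from the course: the left-recursive rules are unrolled into loops, the function name `parse_expression` is made up, and trees are encoded as nested tuples of the form (operator, left, right).

```python
def parse_expression(text):
    """Parse with E -> E + T | T and T -> T * a | a; return a nested tuple."""
    tokens = text.replace(" ", "")
    pos = 0

    def expect(symbol):
        nonlocal pos
        if pos >= len(tokens) or tokens[pos] != symbol:
            raise SyntaxError(f"expected {symbol!r} at position {pos}")
        pos += 1

    def parse_t():
        # T -> T * a | a is left-recursive, so it is unrolled into a loop:
        # one 'a', then any number of '* a', grouped to the left.
        nonlocal pos
        expect("a")
        tree = "a"
        while pos < len(tokens) and tokens[pos] == "*":
            pos += 1
            expect("a")
            tree = ("*", tree, "a")
        return tree

    def parse_e():
        # E -> E + T | T, unrolled the same way.
        nonlocal pos
        tree = parse_t()
        while pos < len(tokens) and tokens[pos] == "+":
            pos += 1
            tree = ("+", tree, parse_t())
        return tree

    tree = parse_e()
    if pos != len(tokens):
        raise SyntaxError(f"unexpected {tokens[pos]!r} at position {pos}")
    return tree

print(parse_expression("a + a * a"))
```

For a + a * a the multiplication ends up nested inside the addition, which is the "higher precedence for *" that the rewritten grammar enforces.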

Another example

• The notorious dangling else:
<stmt> → if <expr> then <stmt>
       | if <expr> then <stmt> else <stmt>
       | <other-stmt>
• Two derivations for
if <expr> then if <expr> then <stmt> else <stmt>
1. Else matches 2nd then:
<stmt>
⇒ if <expr> then <stmt>
⇒ if <expr> then if <expr> then <stmt> else <stmt>
2. Else matches 1st then:
<stmt>
⇒ if <expr> then <stmt> else <stmt>
⇒ if <expr> then if <expr> then <stmt> else <stmt>

Preventing the Dangling Else

<stmt> → if <expr> then <stmt>
       | if <expr> then <stmt> else <stmt>
       | <other-stmt>
• How do we remove the ambiguity?
– Introduce new non-terminals to distinguish matched and unmatched then/else
<stmt> → <matched-stmt> | <unmatched-stmt>
<matched-stmt> → if <expr> then <matched-stmt> else <matched-stmt>
               | <other-stmt>
<unmatched-stmt> → if <expr> then <stmt>
                 | if <expr> then <matched-stmt> else <unmatched-stmt>

• One derivation for
if <expr> then if <expr> then <stmt> else <stmt>
<stmt>
⇒ <unmatched-stmt>
⇒ if <expr> then <stmt>
⇒ if <expr> then <matched-stmt>
⇒ if <expr> then if <expr> then <matched-stmt> else <matched-stmt>
Else matches 2nd then

Pushdown Automata

• …adds a stack to a FA

• Typically non-deterministic

• An automaton equivalent to CFGs

Pushdown Automata In Use

• Consider this Java sample code
public class Unchecked_Demo {
    public static void main(String args[]) {
        int num[] = {1, 2, 3, 4};
        System.out.println(num[5]);  // index 5 is outside the 4-element array
    }
}
• What happens when it runs?
Exception in thread "main" java.lang.ArrayIndexOutOfBoundsException: 5
    at Exceptions.Unchecked_Demo.main(Unchecked_Demo.java:8)
• The process is referred to as “unwinding, or popping, the stack”

Schematic of a Finite Automaton

• Finite control
• Input: a a b a c

Schematic of a Pushdown Automaton

• Finite control
• Input: b c c a a
• Stack: x y z

Notation

Transition from p to q labeled x, y → z:
If at state p with next input symbol x and top of stack is y:
– go to state q and replace y by z on stack
• x = ε: ignore input, don’t read
• y = ε: ignore top of stack and push z
• z = ε: pop y

Formal PDA

P = (Q, Σ, Γ, δ, q0, F)
• Q, Σ, q0, and F have their meanings from FA
• Γ = stack alphabet
• δ = transition function
δ : Q × Σε × Γε → P(Q × Γε), where Σε = Σ ∪ {ε} and Γε = Γ ∪ {ε}
Takes a state, input symbol (or ε) and a stack symbol and gives you a finite number of choices of:
1. A new state (possibly the same)
2. A string of stack symbols (or ε) to replace the top stack symbol

PDAs à la Sipser

• No intrinsic way to test for an empty stack
– Get the same effect by initially putting a special symbol $ on the stack
• Machine knows stack is empty if it sees $ during computation
• No intrinsic way to know when end of input string is reached
– PDA achieves that effect because the accept state takes effect only when the machine is at the end of the input
N.B.: Different textbook authors use different conventions and notations

For a^n b^n:
q1 → q2: ε, ε → $
q2 → q2: a, ε → a
q2 → q3: b, a → ε
q3 → q3: b, a → ε
q3 → q4: ε, $ → ε
$ is a special “bottom of stack” symbol
• q1 = starting to see a group of a's and b's
• q2 = reading a's and pushing a's onto the stack
• q3 = reading b's and popping a's until the a's are all popped
• q4 = no more input and empty stack; accept
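The machine described above can be simulated directly. The following is a minimal sketch with several assumptions that are not in the slides: the transition table is written as a Python dictionary, only q4 is treated as accepting (so the empty string is rejected), the stack is a string with its top on the left, and the search is a breadth-first exploration of configurations that would not terminate for a PDA whose ε-moves can push forever.

```python
from collections import deque

EPS = ""  # stands for ε

# (state, input symbol or ε, popped symbol or ε) -> {(new state, pushed symbol or ε)}
DELTA = {
    ("q1", EPS, EPS): {("q2", "$")},
    ("q2", "a", EPS): {("q2", "a")},
    ("q2", "b", "a"): {("q3", EPS)},
    ("q3", "b", "a"): {("q3", EPS)},
    ("q3", EPS, "$"): {("q4", EPS)},
}
ACCEPTING = {"q4"}  # assumption: q4 is the only accept state

def accepts(word, delta=DELTA, start="q1", accepting=ACCEPTING):
    """Explore every reachable configuration (state, input position, stack)."""
    start_config = (start, 0, "")
    seen = {start_config}
    queue = deque([start_config])
    while queue:
        state, pos, stack = queue.popleft()
        if pos == len(word) and state in accepting:
            return True
        for (src, read, pop), moves in delta.items():
            if src != state:
                continue
            if read != EPS and (pos >= len(word) or word[pos] != read):
                continue            # the next input symbol does not match
            if pop != EPS and not stack.startswith(pop):
                continue            # the required symbol is not on top of the stack
            for new_state, push in moves:
                config = (new_state, pos + len(read), push + stack[len(pop):])
                if config not in seen:
                    seen.add(config)
                    queue.append(config)
    return False

for word in ["ab", "aabb", "aab", "abb", "ba"]:
    print(word, accepts(word))
```

Because the search keeps every reachable configuration, the same function also handles genuinely non-deterministic transition tables, as long as the set of reachable configurations is finite.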

Instantaneous Descriptions

(IDs)

• For a FA, the only thing of interest is its state
• For a PDA, we want to know its state and the entire contents of its stack
• Represented by an ID (q, w, α), where
– q = state
– w = waiting input
– α = stack [top on left]

Moves of the PDA

• If δ(q, a, X) contains (p, α), then (q, aw, Xβ) ⊢ (p, w, αβ)
– Extend ⊢ to ⊢* to represent 0, 1, or many moves
– Subscript by name of the PDA, if necessary
– Input string w is accepted if (q0, w, $) ⊢* (p, ε, ε) for any accepting state p
– L(P) = set of strings accepted by P

Example (the a^n b^n PDA on input aabb):
(q1, aabb, ε) ⊢ (q2, aabb, $) ⊢ (q2, abb, a$) ⊢ (q2, bb, aa$) ⊢ (q3, b, a$) ⊢ (q3, ε, $) ⊢ (q4, ε, ε)
[Figure: the a^n b^n PDA with states q1 to q4]

Palindromes

Input: aaabcbaaa

Transitions, in the order they are used:
• Start: ε, ε → $
• Push loop: a, ε → a and b, ε → b
• Middle marker: c, ε → ε
• Pop loop: a, a → ε and b, b → ε
• Finish: ε, $ → ε

Stack contents after each step (top on left):
Input read so far    Stack
(start)              (empty)
ε                    $
a                    a $
aa                   a a $
aaa                  a a a $
aaab                 b a a a $
aaabc                b a a a $
aaabcb               a a a $
aaabcba              a a $
aaabcbaa             a $
aaabcbaaa            $
aaabcbaaa            (empty)  ACCEPT!
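The palindrome walk-through can be mirrored with an explicit stack. This is a sketch under assumptions: the function name `accepts_marked_palindrome` is made up, the alphabet is taken to be {a, b} with c as the middle marker (as in the sample input), and a Python list plays the role of the stack with its top at the end of the list.

```python
def accepts_marked_palindrome(word):
    """Accept strings of the form w c reverse(w), with w over {a, b}."""
    stack = ["$"]                                 # ε, ε → $
    i = 0
    # Push phase: a, ε → a and b, ε → b
    while i < len(word) and word[i] in "ab":
        stack.append(word[i])
        i += 1
    # Middle marker: c, ε → ε
    if i == len(word) or word[i] != "c":
        return False
    i += 1
    # Pop phase: a, a → ε and b, b → ε
    while i < len(word) and word[i] in "ab" and stack[-1] == word[i]:
        stack.pop()
        i += 1
    # Finish: ε, $ → ε is possible only if $ is on top and the input is used up
    return i == len(word) and stack == ["$"]

print(accepts_marked_palindrome("aaabcbaaa"))
print(accepts_marked_palindrome("aaabcbaa"))
```

The marker c is what lets this version run without guessing; without it, a PDA has to guess non-deterministically where the middle of the input is.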

PDA Exercise

Draw the PDA acceptor for

L = { x ∈ {a,b}* | n_a(x) = 2·n_b(x) }

NOTE: The empty string is in L

PDA Exercise

• Main idea: use stack to keep count of # of a’s and/or b’s needed to get a valid string. 3 cases:
1. baa (scan b first, scanned zero a’s so far)
– Push 2 a’s onto stack as reminder we need to scan two a’s.
2. aab (scan two a’s first, scanned zero b’s so far)
– Push 4 (!) A’s onto stack as reminder we have read 2 a’s and need to scan one b. The capital A’s (2 of them together) connote “negative a” or, we’ve seen an a already.
3. aba (scan one a first, combination of first 2 cases)
– Push 2 A’s onto stack as part of case 2 above. (greenish(?) ab)
– Then, push 1 a onto stack as part of case 1 above. (periwinkle ba)
(dark blue b: color combination of periwinkle and greenish. My interpretation. Just go with it :-)

[Figure: PDA with states 1 to 6 for L; the loops are annotated “(read a, pop a)*”, “(read b: push an a)*”, “(read a: push 2 A’s)*” and “read b: (pop 2 A’s)*”]

Case 1: baabaa
Pattern: scan b first! Need to scan two a’s in future
(1, baabaa, ε) ⊢ (2, baabaa, $) ⊢ (5, aabaa, a$) ⊢ (2, aabaa, aa$) ⊢ (2, abaa, a$) ⊢ (2, baa, $) ⊢ (5, aa, a$) ⊢ (2, aa, aa$) ⊢ (2, a, a$) ⊢ (2, ε, $) ⊢ (6, ε, ε) accept!

Case 2: aab
Pattern: scan a first! Need to push two A’s on stack. Together, stack reminds us to scan b
(1, aab, ε) ⊢ (2, aab, $) ⊢ (3, ab, A$) ⊢ (2, ab, AA$) ⊢ (3, b, AAA$) ⊢ (2, b, AAAA$) ⊢ (4, ε, AAA$) ⊢ (4, ε, AA$) ⊢ (4, ε, A$) ⊢ (2, ε, $) ⊢ (6, ε, ε) accept!

Case 3: aba
Pattern: scan a first! Need to push two A’s on stack
Pattern: scan b next. Need to ack 1 a scanned (pop A’s) & 1 a to scan in future: push a
(1, aba, ε) ⊢ (2, aba, $) ⊢ (3, ba, A$) ⊢ (2, ba, AA$) ⊢ (4, a, A$) ⊢ (5, a, $) ⊢ (2, a, a$) ⊢ (2, ε, $) ⊢ (6, ε, ε) accept!

Now we can scan complex strings (!): bbaaaa, abbaaaaab, aababbaaaa
Well, maybe not the last one… (why?)

Another PDA Exercise

Draw the PDA acceptor for
L = { a^i b^j c^k | i = j + k }
• How do we approach this one?
– Consider L = { a^i b^i } then go on from there…
• See earlier charts for details
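One possible way to think about this exercise, sketched in Python rather than as a state diagram: push one symbol per a, then pop one symbol for every b and every c. This is only an illustration of the stack discipline, under the assumption that the intended language requires all a's first, then all b's, then all c's; the function name `accepts_aibjck` and the `phase` variable (standing in for the PDA's states) are made up.

```python
def accepts_aibjck(word):
    """Accept a^i b^j c^k with i = j + k, using one stack symbol per a."""
    stack = []
    phase = "a"                       # which block of the input we are in
    for symbol in word:
        if symbol == "a" and phase == "a":
            stack.append("a")         # push one a for every a read
        elif symbol == "b" and phase in ("a", "b"):
            phase = "b"
            if not stack:
                return False          # more b's and c's than a's
            stack.pop()               # each b cancels one a
        elif symbol == "c":
            phase = "c"
            if not stack:
                return False
            stack.pop()               # each c cancels one a
        else:
            return False              # symbol out of order or not in {a, b, c}
    return not stack                  # every a must have been cancelled

for word in ["aabc", "aaabcc", "ab", "ac", "abc", "aab"]:
    print(word, accepts_aibjck(word))
```

This is the a^i b^i idea with a second popping phase added, which matches the hint on the slide.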

Deterministic PDAs

• The PDAs we are dealing with are invariably non-deterministic
• A DPDA never has a choice of move
– δ(q, a, Z) has at most one member for any q, a, Z (including a = ε).
– If δ(q, ε, Z) is nonempty, then δ(q, a, Z) must be empty for all input symbols a
• Why Care?
– Parsers are DPDAs
– Thus, the question of what languages a DPDA can accept is really the question of what programming language syntax can be parsed conveniently
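The two DPDA conditions are easy to check on a transition table. The sketch below assumes a hypothetical encoding that is not in the slides: δ is a dictionary from (state, input symbol or ε, stack symbol) to a set of (new state, replacement string) pairs, and the empty string stands for ε. Both example tables are made up for illustration.

```python
EPS = ""  # stands for ε

def is_deterministic(delta, input_alphabet):
    """Check the two DPDA conditions on a transition table."""
    for (state, symbol, top), moves in delta.items():
        if len(moves) > 1:
            return False    # δ(q, a, Z) has more than one member
        if symbol == EPS and moves:
            # δ(q, ε, Z) is nonempty, so δ(q, a, Z) must be empty for every a
            if any(delta.get((state, a, top)) for a in input_alphabet):
                return False
    return True

# A deterministic table for a^n b^n (n >= 1), with $ as the bottom marker.
DPDA = {
    ("q", "a", "$"): {("q", "a$")},
    ("q", "a", "a"): {("q", "aa")},
    ("q", "b", "a"): {("p", EPS)},
    ("p", "b", "a"): {("p", EPS)},
    ("p", EPS, "$"): {("f", "$")},
}

# A table that has to guess: on a with a on top, either push or start popping.
GUESSING_PDA = {
    ("q", "a", "$"): {("q", "a$")},
    ("q", "a", "a"): {("q", "aa"), ("p", EPS)},
    ("p", "a", "a"): {("p", EPS)},
}

print(is_deterministic(DPDA, {"a", "b"}))
print(is_deterministic(GUESSING_PDA, {"a", "b"}))
```

The check only looks at the table; it says nothing about whether some other, deterministic table exists for the same language.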

Non-deterministic PDAs

• A non-deterministic PDA allows non-deterministic transitions (i.e., it may define multiple possible moves for a given configuration)
• Non-deterministic PDAs are strictly stronger than deterministic PDAs
• In this respect, the situation is not similar to the situation of DFAs and NFAs
• Non-deterministic PDAs are equivalent to CFGs: they recognize exactly the CFLs

Real compilers

• However, unambiguous, deterministic CFGs are complicated and too restricted
• Real parsers ‘cheat’ by looking ahead one token
– This places certain restrictions on the grammar, but not as many
– Some ambiguities (e.g. dangling else) are easily handled with one token lookahead
• STAY TUNED: Learn about LL(1) and LR(1) grammars in CMPU 331

APPENDIX


What? Another Digression?

Or… I want to be a real compiler

• Recall node traversal algorithms from data structures…
– In-order traversal yields: 1 + 2 * 3 (but what does that mean? Is the result 9? 7?)
– Pre-order traversal yields: + 1 * 2 3 (perhaps more clear)
– Post-order traversal yields: 1 2 3 * + (Reverse Polish Notation)
• We discussed CFGs and derivations. In relation to LL/LR parsers…
– LL(1) == Left to right, Leftmost derivation, 1 token look ahead. LR(1) == Left to right, Rightmost derivation (in reverse), 1 token look ahead.
– LL parser outputs a pre-order traversal of the parse tree
– LR parser outputs a post-order traversal
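The three traversals quoted above can be reproduced on a small expression tree. The tuple encoding (operator, left, right) and the function names are assumptions made for this sketch; the tree is the one whose pre-order form is + 1 * 2 3.

```python
TREE = ("+", 1, ("*", 2, 3))   # hypothetical encoding: (operator, left, right)

def in_order(node):
    if not isinstance(node, tuple):
        return [str(node)]
    op, left, right = node
    return in_order(left) + [op] + in_order(right)

def pre_order(node):
    if not isinstance(node, tuple):
        return [str(node)]
    op, left, right = node
    return [op] + pre_order(left) + pre_order(right)

def post_order(node):
    if not isinstance(node, tuple):
        return [str(node)]
    op, left, right = node
    return post_order(left) + post_order(right) + [op]

def evaluate_rpn(tokens):
    """Evaluate a post-order (Reverse Polish) token list with a stack."""
    stack = []
    for token in tokens:
        if token in ("+", "*"):
            right = stack.pop()
            left = stack.pop()
            stack.append(left + right if token == "+" else left * right)
        else:
            stack.append(int(token))
    return stack.pop()

print(" ".join(in_order(TREE)))
print(" ".join(pre_order(TREE)))
print(" ".join(post_order(TREE)))
print(evaluate_rpn(post_order(TREE)))
```

The post-order form needs no parentheses or precedence rules: a single stack is enough to evaluate it, which is why the tree structure settles the "9 or 7" question in favour of 7 here.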

Equivalence of Parse Trees,

Leftmost, and Rightmost Derivations

• The following about a grammar G = (V, Σ, P, S) and a terminal string w are all equivalent:
1. S ⇒* w (i.e., w is in L(G)).
2. S ⇒*_lm w
3. S ⇒*_rm w
4. There is a parse tree for G with root S and yield (labels of leaves, from the left) w.
• Obviously (2) and (3) each imply (1).

Parse Tree Implies LM/RM

Derivations

• Generalize all statements to talk about an arbitrary variable A in place of S.
– Except now (1) no longer means w is in L(G).
• Induction on the height of the parse tree.
• Basis: Height 1: Tree is root A and leaves w = a1, a2, …, ak.
• A → w must be a production, so A ⇒*_lm w and A ⇒*_rm w.
• Induction: Height > 1: Tree is root A with children X1, X2, …, Xk.
• Those Xi's that are variables are roots of shorter trees.
– Thus, the IH says that they have LM derivations of their yields.
• Construct a LM derivation of w from A by starting with A ⇒_lm X1X2…Xk, then using LM derivations from each Xi that is a variable, in order from the left.
• RM derivation analogous.

Derivations to Parse Trees

• Induction on length of the derivation.
• Basis: One step. There is an obvious parse tree.
• Induction: More than one step.
– Let the first step be A ⇒ X1X2…Xk.
– Subsequent changes can be reordered so that all changes to X1 and the sentential forms that replace it are done first, then those for X2, and so on (i.e., we can rewrite the derivation as a LM derivation).
– The derivations from those Xi's that are variables are all shorter than the given derivation, so the IH applies.
– By the IH, there are parse trees for each of these derivations.
– Make the roots of these trees be children of a new root labeled A.

Example

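• Consider derivation S ⇒ AS ⇒ AAS ⇒ AA ⇒ A1A ⇒ A10A1 ⇒ 0110A1 ⇒ 0110011 (the earlier example grammar, written with 0 and 1 in place of a and b: S → AS | ε, A → A1 | 0A1 | 01)
• Sub-derivation from A is: A ⇒ A1 ⇒ 011
• Sub-derivation from S is: S ⇒ AS ⇒ A ⇒ 0A1 ⇒ 0011
• Each has a parse tree, put them together with new root S.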

Only-If Proof (i.e., Grammar ⇒ PDA)

• Prove by induction on the number of steps in the leftmost derivation S ⇒* α that for any x, (q, wx, S) ⊢* (q, x, β), where
1. wβ = α
2. β is the suffix of α that begins at the leftmost variable (β = ε if there is no variable).
• Also prove the converse, that if (q, wx, S) ⊢* (q, x, β) then S ⇒* wβ.
• Inductive proofs in book.
• As a consequence, if y is a terminal string, then S ⇒* y iff (q, y, S) ⊢* (q, ε, ε), i.e., y is in L(G) iff y is in N(A).