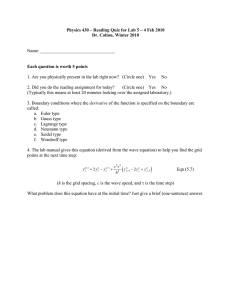

Fleming_PDEs.ppt

advertisement

Monte Carlo Methods in Partial Differential Equations Random Walk Methods • Based on diffusion algorithms • Applicable to elliptic and parabolic eqn’s • Variations: – Random walks on grids – Brownian motion simulation – Walk on spheres – Green’s first passage Laplace’s Equation with Dirichlet Boundary Conditions u 0 on G 2 u f ( x) on G Finite Difference Approx. • For the 2D problem via centered difference: u j ,k 14 [u j 1,k u j 1,k u j ,k 1 u j ,k 1 ] uj-1,k uj,k-1 uj,k Uj+1,k Uj,k+1 Finite Difference Approx. • How does it work? – Start at a boundary an iterate over the grid points until you reach the desired accuracy • How can we build a Monte Carlo algorithm from this? – Notice that each grid point is the average of its neighbors….. Randomizing the algorithm • Notice that the value at each grid point is the average its neighbors, which are in turn the average of their neighbors, until a boundary point is found • Consider the value at one point in the grid. – Randomly pick a neighbor with probability 0.25. We don’t know it’s value, but we know it is also the average of its neighbors, so pick one of those. – Continue this until a boundary point is picked. The value at this boundary point is now an estimate of the value of the previous point, which is an estimate of the previous point, etc, etc, all the way back to the original point. – Repeat this N times, add the estimates, then divide by N. – This is the random walk on the grid method Markov Chain • The walk on the grid is a Markov Chain process and satisfies three conditions: – The probability of moving from one grid point to another depends only on the current grid point, not on any previous moves (Markov property) – In order to converge it must satisfy ergodicity: • The chain can reach any point in the grid from any other point (irreducibility) • The chain eventually terminates at a boundary point (aperiodicity) – P(x)T(x,y) = P(y)T(y,x) where P(x) is the stationary distribution of the chain and T(x,y) is the transition probability of going from x to y. (detailed balance) Laplace’s Equation with Variable Coefficients • Consider the problem: u xx sin( x)u yy 0 on G u f ( x) on G This is slightly more complicated, due to the variable coefficient Finite Difference Approx. • The central difference approximation for this is: ui , j ui , j 1 ui , j 1 sin x j (ui 1, j ui 1, j ) 2(1 sin x j ) • Notice that the coefficients of the u’s are all positive and sum to 1 • The value at point is now the weighted average of its neighbors • We can do the same thing we did before, except we now choose each neighbor with probability equal to its weight Can we do better? • Consider what happens as we let the grid spacing go to zero – For Laplace’s equation this becomes simple Brownian motion – The value of u(x0) becomes the expected value of f at the boundary point where the particle exits the domain • We can solve Laplace’s equation by directly simulating Brownian motion but this is slow. • What else can we do? Walk on Spheres • Consider a sphere centered around x0 – At some future time the particle has an equal chance to land at any point on the surface of the sphere. – If the process is ergodic, it must eventually end up on the surface of the sphere • So why simulate Brownian directly? – Instead, directly simulate the jump from the center to the surface. – At each step, we now make much larger time steps Walk on Spheres • How large can our sphere be? – As large as possible. Find the closest boundary point and make that distance the radius of the sphere • How do we reach the boundary? – Introduce an epsilon layer just inside the boundary. The particle reaches the boundary when it’s inside this epsilon layer – The epsilon layer introduces a bias into the estimate, but we can control the size of the bias by the size of epsilon Walk on other shapes • We are not constrained to walking on spheres – Spheres are nice in this case because of the uniform distribution of exit points • We can walk instead on any surface that we can write p(x0,x) for in a convenient way. – Usually this is the intersection of simple shapes Green’s Function First Passage • The transition probability for a surface is related to the Green’s function for the operator and surface under consideration p( x0 , x) n G( x0 , x) • If we know the Green’s function for the domain, we can directly simulate the transition from the starting point to the boundary • If the domain can be broken into sub domains where we know the Green’s function for the sub domain, we can directly simulate the transition from the starting point to either the boundary or another sub domain • For example – a union of spheres Walk on the Boundary • The idea: – Rewrite the PDE as an integral equation – Using the integral representation, write a Monte Carlo estimator of the solution that involves a Markov chain of random walks on the boundary An Example • Consider the exterior Dirichlet problem: • The solution to this can be written in integral equation form as: Example con’t • Assume no charges outside of G • The charge density satisfies: Monte Carlo Estimate • We can solve this with a walk on the boundary. • Our transition probability is uniform in solid angle: • The charge density can now be estimated as the expected value of this walk on the boundary. • An estimator for the potential can also be written directly. Why use Monte Carlo methods? • • • • • No “curse of dimensionality” Very fast estimates of point values Able to handle complex boundaries No discretization errors Simple to parallelize My Current Research • We’ve seen how we can derive a Monte Carlo method that estimates a finite difference solution • Can we do this with other deterministic techniques? – Finite elements – Boundary elements • Can we combine Monte Carlo methods with deterministic techniques to leverage the strengths of both? – Split domain into sub domains – Solve in each sub domain with the appropriate method – Join the solutions using domain decomposition techniques • What is the fundamental relationship between deterministic and Monte Carlo techniques for PDE’s?