Computation Spreading: Employing Hardware Migration to Specialize CMP Cores On-the-fly Koushik Chakraborty

advertisement

Computation Spreading: Employing

Hardware Migration to Specialize CMP

Cores On-the-fly

Koushik Chakraborty Philip Wells

Gurindar Sohi

{kchak,pwells,sohi}@cs.wisc.edu

1

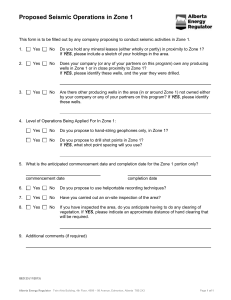

Paper Overview

Multiprocessor Code Reuse

Poor resource utilization

Computation Spreading

New model for assigning computation within

a program on CMP cores in H/W

Case Study: OS and User computation

Investigate performance characteristics

Chakraborty, Wells, and Sohi

ASPLOS 2006

2

Talk Outline

Motivation

Computation Spreading (CSP)

Case study: OS and User compution

Implementation

Results

Related Work and Summary

Chakraborty, Wells, and Sohi

ASPLOS 2006

3

Homogeneous CMP

Many existing systems are homogeneous

Sun Niagara, IBM Power 5, Intel Xeon MP

Multithreaded server application

Composed of server threads

Typically each thread handles a client request

OS assigns software threads to cores

• Entire computation from one thread execute on

a single core (barring migration)

Chakraborty, Wells, and Sohi

ASPLOS 2006

4

Code Reuse

Many client requests are similar

Similar service across multiple threads

Same code path traversed in multiple cores

Instruction footprint classification

Exclusive – single core access

Common – many cores access

Universal – all cores access

Chakraborty, Wells, and Sohi

ASPLOS 2006

5

Multiprocessor Code Reuse

Chakraborty, Wells, and Sohi

ASPLOS 2006

6

Implications

Lack of instruction stream specialization

Redundancy in predictive structures

• Poor capacity utilization

Destructive interference

No synergy among multiple cores

Lost opportunity for co-operation

Exploit core proximity in CMP

Chakraborty, Wells, and Sohi

ASPLOS 2006

7

Talk Outline

Motivation

Computation Spreading (CSP)

Case study: OS and User compution

Implementation

Results

Related Work and Summary

Chakraborty, Wells, and Sohi

ASPLOS 2006

8

Computation Spreading (CSP)

Computation fragment = dynamic instruction

stream portion

Collocate similar computation fragments from

multiple threads

Enhance constructive interference

Distribute dissimilar computation fragments

from a single thread

Reduce destructive interference

Reassignment is the key

Chakraborty, Wells, and Sohi

ASPLOS 2006

9

Example

time

T1 T2 T3

A1 B2 C3

B1 C2 A3

C1 A2 B3

C

A

N

O

N

I

C

A

L

A1 B2 C3

B1 C2 A3

C1 A2 B3

P1 P2 P3

A1 B2 C3

C

S

P

A3 B1 C2

A2 B3 C1

Chakraborty, Wells, and Sohi

ASPLOS 2006

10

Key Aspects

Dynamic Specialization

Homogeneous multicore acquires specialization via

retaining mutually exclusive predictive state

Data Locality

Data dependencies between different computation

fragments

Careful fragment selection to avoid loss of data

locality

Chakraborty, Wells, and Sohi

ASPLOS 2006

11

Selecting Fragments

Server workloads characteristics

Large data and instruction footprint

Significant OS computation

User Computation and OS Computation

A natural separation

Exclusive instruction footprints

Relatively independent data footprint

Chakraborty, Wells, and Sohi

ASPLOS 2006

12

Data Communication

Core 1

Core 2

T1-User

T2-User

T1

T2

T1-OS

T2-OS

Chakraborty, Wells, and Sohi

ASPLOS 2006

13

Relative Inter-core Data

Communication

Apache

OLTP

OS-User Communication is limited

Chakraborty, Wells, and Sohi

ASPLOS 2006

14

Talk Outline

Motivation

Computation Spreading (CSP)

Case study: OS and User compution

Implementation

Results

Related Work and Summary

Chakraborty, Wells, and Sohi

ASPLOS 2006

15

Implementation

Migrating Computation

Transfer state through the memory subsystem

• ~2KB of register state in SPARC V9

• Memory state through coherence

Lightweight Virtual Machine Monitor

Migrates computation as dictated by the CSP Policy

Implemented in hardware/firmware

Chakraborty, Wells, and Sohi

ASPLOS 2006

16

Threads

Implementation cont

Software

Stack

Virtual CPUs

OS Comp

User Comp

Physical

Cores

User Cores

Chakraborty, Wells, and Sohi

Baseline

OS Cores

ASPLOS 2006

17

Threads

Implementation cont

Software

Stack

Virtual CPUs

Physical

Cores

User Cores

Chakraborty, Wells, and Sohi

OS Cores

ASPLOS 2006

18

CSP Policy

Policy dictates computation assignment

Thread Assignment Policy (TAP)

Maintains affinity between VCPUs and

physical cores

Syscall Assignment Policy (SAP)

OS computation assigned based on system

calls

TAP and SAP use identical assignment for

user computation

Chakraborty, Wells, and Sohi

ASPLOS 2006

19

Talk Outline

Motivation

Computation Spreading (CSP)

Case study: OS and User compution

Implementation

Results

Related Work and Summary

Chakraborty, Wells, and Sohi

ASPLOS 2006

20

Simulation Methodology

Virtutech SIMICS MAI running Solaris 9

CMP system: 8 out-of-order processors

2 wide, 8 stages, 128 entry ROB, 3GHz

3 level memory hierarchy

Private L1 and L2

Directory base MOSI

L3: Shared, Exclusive 8MB (16w) (75 cycle load-to-use)

Point to point ordered interconnect (25 cycle latency)

Main Memory 255 cycle load to use, 40GB/s

Measure impact on predictive structures

Chakraborty, Wells, and Sohi

ASPLOS 2006

21

L2 Instruction Reference

Chakraborty, Wells, and Sohi

ASPLOS 2006

22

Result Summary

Branch predictors

9-25% reduction in mis-predictions

L2 data references

0-19% reduction in load misses

Moderate increase in store misses

Interconnect messages

Moderate reduction (after accounting extra

messages for migration)

Chakraborty, Wells, and Sohi

ASPLOS 2006

23

Performance Potential

Migration Overhead

Chakraborty, Wells, and Sohi

ASPLOS 2006

24

Talk Outline

Motivation

Computation Spreading (CSP)

Case study: OS and User compution

Implementation

Results

Related Work and Summary

Chakraborty, Wells, and Sohi

ASPLOS 2006

25

Related Work

Software re-design: staged execution

Cohort Scheduling [Larus and Parkes 01], STEPS

[Ailamaki 04], SEDA [Welsh 01], LARD [Pai 98]

CSP: similar execution in hardware

OS and User Interference [several]

Structural separation to avoid interference

CSP avoids interference and exploits synergy

Chakraborty, Wells, and Sohi

ASPLOS 2006

26

Summary

Extensive code reuse in CMPs

45-66% instruction blocks universally accessed in

server workloads

Computation Spreading

Localize similar computation and separate

dissimilar computation

Exploits core proximity in CMPs

Case Study: OS and User computation

Demonstrate substantial performance potential

Chakraborty, Wells, and Sohi

ASPLOS 2006

27

Thank You!

Chakraborty, Wells, and Sohi

ASPLOS 2006

28

Backup Slides

Chakraborty, Wells, and Sohi

ASPLOS 2006

29

L2 Data Reference

L2 load miss comparable, slight to moderate increase

in L2 store miss

Chakraborty, Wells, and Sohi

ASPLOS 2006

30

Multiprocessor Code Reuse

Chakraborty, Wells, and Sohi

ASPLOS 2006

31

Performance Potential

Chakraborty, Wells, and Sohi

ASPLOS 2006

32