torrey.ecml05.ppt

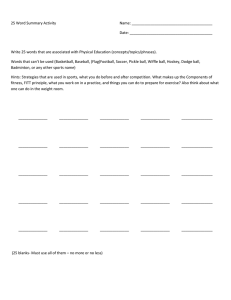

Using Advice to Transfer Knowledge Acquired in One Reinforcement Learning Task to Another

Lisa Torrey, Trevor Walker, Jude Shavlik (University of Wisconsin-Madison, USA)
Richard Maclin (University of Minnesota-Duluth, USA)

Our Goal
- Transfer knowledge…
- … between reinforcement learning tasks
- … employing SVM function approximators
- … using advice

Transfer
- Exploit previously learned models
- Learn first task → knowledge acquired → learn related task
- Improve learning of new tasks
- [Figure: performance versus experience, with transfer and without transfer]

Reinforcement Learning
- [Diagram: example rewards +2, 0, -1]
- state … action … reward … new state
- Q-function: value of taking action from state
- Policy: take action with max $Q_{action}(state)$

Advice for Transfer
- Task A Solution: "Based on what worked in Task A, I suggest…"
- Task B Learner: "I'll try it, but if it doesn't work I'll do something else."
- Advice improves RL performance
- Advice can be refined or even discarded

Transfer Process
- Task A experience → Task A Q-functions (advice from user, optional)
- Mapping from user: Task A → Task B
- Transfer advice
- Task B experience → Task B Q-functions (advice from user, optional)

RoboCup Soccer Tasks
- KeepAway: keep ball from opponents [Stone & Sutton, ICML 2001]
- BreakAway: score a goal [Maclin et al., AAAI 2005]

RL in RoboCup Tasks
- Features: (time left)
- Actions: KeepAway: Pass, Hold; BreakAway: Pass, Move, Shoot
- Rewards: KeepAway: +1 each time step; BreakAway: +2, 0, or -1 at end

Approximating Q-Functions
- Given examples: state features $S_i = \langle f_1, \ldots, f_n \rangle$ and estimated values $y \approx Q_{action}(S_i)$
- Learn linear coefficients: $y = w_1 f_1 + \ldots + w_n f_n + b$
- Non-linearity from Boolean tile features: $tile_{i,lower,upper} = 1$ if $lower \le f_i < upper$

Support Vector Regression
- [Figure: Q-estimate $y$ versus state $S$]
- Linear program: minimize $\|w\|_1 + |b| + C\|k\|_1$ such that $y - k \le Sw + b \le y + k$

Advice Example
- if distance_to_goal ≤ 10 and shot_angle ≥ 30 then prefer shoot over all other actions
- Need only follow advice approximately
- Add soft constraints to linear program

Incorporating Advice [Maclin et al., AAAI 2005]
- if $v_{11} f_1 + \ldots + v_{1n} f_n \le d_1$ and … and $v_{m1} f_1 + \ldots + v_{mn} f_n \le d_m$ then $Q_{shoot} > Q_{other}$ for all other actions
- Advice and Q-functions have same language: linear expressions of features

Expressing Policy with Advice
- Old Q-functions: $Q_{hold\_ball}(s)$, $Q_{pass\_near}(s)$, $Q_{pass\_far}(s)$
- Advice expressing policy: if $Q_{hold\_ball}(s) > Q_{pass\_near}(s)$ and $Q_{hold\_ball}(s) > Q_{pass\_far}(s)$ then prefer hold_ball over all other actions

Mapping Actions
- Old Q-functions: $Q_{hold\_ball}(s)$, $Q_{pass\_near}(s)$, $Q_{pass\_far}(s)$
- Mapping from user: hold_ball → move, pass_near → pass_near, pass_far → pass_far
- Mapped policy: if $Q_{hold\_ball}(s) > Q_{pass\_near}(s)$ and $Q_{hold\_ball}(s) > Q_{pass\_far}(s)$ then prefer move over all other actions

Mapping Features
- Mapping from user
- Q-function mapping: $Q_{hold\_ball}(s) = w_1(dist\_keeper1) + w_2(dist\_taker2) + \ldots$ becomes $Q'_{hold\_ball}(s) = w_1(dist\_attacker1) + w_2(MAX\_DIST) + \ldots$

Transfer Example
- Old model: $Q_x = w_{x1} f_1 + w_{x2} f_2 + b_x$, $Q_y = w_{y1} f_1 + b_y$, $Q_z = w_{z2} f_2 + b_z$
- Mapped model: $Q'_x = w_{x1} f'_1 + w_{x2} f'_2 + b_x$, $Q'_y = w_{y1} f'_1 + b_y$, $Q'_z = w_{z2} f'_2 + b_z$
- Advice: if $Q'_x > Q'_y$ and $Q'_x > Q'_z$ then prefer $x'$
- Advice (expanded): if $w_{x1} f'_1 + w_{x2} f'_2 + b_x > w_{y1} f'_1 + b_y$ and $w_{x1} f'_1 + w_{x2} f'_2 + b_x > w_{z2} f'_2 + b_z$ then prefer $x'$ to all other actions

Transfer Experiment
- Between RoboCup subtasks: from 3-on-2 KeepAway to 2-on-1 BreakAway
- Two simultaneous mappings: transfer passing skills; map passing skills to shooting

Experiment Mappings
- Play a moving KeepAway game: Pass → Pass, Hold → Move
- Pretend teammate is standing in the goal: Pass → Shoot (imaginary teammate)

Experimental Methodology
- Averaged over 10 BreakAway runs
- Transfer: advice from one KeepAway model
- Control: runs without advice

Results
- [Figure: Probability (Score Goal), 0 to 0.8, versus Games Played, 0 to 10000]

Analysis
- Transfer advice helps BreakAway learners: 7% more likely to score a goal after learning
- Improvement is delayed: advantage begins after 2500 games
- Some advice rules apply rarely: preconditions for shoot advice not often met

Related Work: Transfer
- Remember action subsequences [Singh, ML 1992]
- Restrict action choices [Sherstov & Stone, AAAI 2005]
- Transfer Q-values directly in KeepAway [Taylor & Stone, AAMAS 2005]

Related Work: Advice
- "Take action A now" [Clouse & Utgoff, ICML 1992]
- "In situations S, action A has value X" [Maclin & Shavlik, ML 1996]
- "In situations S, prefer action A over B" [Maclin et al., AAAI 2005]

Future Work
- Increase speed of linear-program solving
- Decrease sensitivity to imperfect advice
- Extract advice from kernel-based models
- Help user map actions and features

Conclusions
- Transfer exploits previously learned models to improve learning of new tasks
- Advice is an appealing way to transfer
- Linear regression approach incorporates advice straightforwardly
- Transferring a policy accommodates different reward structures

Acknowledgements
- DARPA grant HR0011-04-1-0007
- United States Naval Research Laboratory grant N00173-04-1-G026
- Michael Ferris, Olvi Mangasarian, Ted Wild
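
The mapping and advice-expansion steps from the "Mapping Features" and "Transfer Example" slides can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the weight values, and the value chosen for `MAX_DIST` are all hypothetical, picked only to make the arithmetic easy to follow. The sketch rewrites each Task A linear Q-function in Task B features, builds one "prefer this action" rule per action from the pairwise differences of the mapped Q-functions, and checks which rules have their preconditions met in a given state. It does not add the rules as soft constraints to the linear program, which is how the slides say the advice is actually used.

```python
# Illustrative sketch only: names and numbers are assumptions, not from the slides.
MAX_DIST = 20.0  # assumed constant for a Task A feature with no Task B counterpart


def map_q_function(weights, bias, feature_map):
    """Rewrite a linear Q-function over Task A features in Task B terms.

    feature_map sends each Task A feature name to a Task B feature name (str)
    or to a numeric constant, whose contribution is folded into the bias.
    """
    new_weights, new_bias = {}, bias
    for feature, w in weights.items():
        target = feature_map[feature]
        if isinstance(target, str):
            new_weights[target] = new_weights.get(target, 0.0) + w
        else:
            new_bias += w * target
    return new_weights, new_bias


def subtract(q_a, q_b):
    """Return the linear expression Q_a - Q_b as (weights, bias)."""
    (wa, ba), (wb, bb) = q_a, q_b
    diff = {f: wa.get(f, 0.0) - wb.get(f, 0.0) for f in set(wa) | set(wb)}
    return diff, ba - bb


def build_transfer_advice(task_a_q, feature_map, action_map):
    """One rule per Task A action: if Q'_a - Q'_other > 0 for every other
    action, prefer the Task B action that the user mapped a to."""
    mapped = {a: map_q_function(w, b, feature_map) for a, (w, b) in task_a_q.items()}
    rules = []
    for action, q in mapped.items():
        conditions = [subtract(q, other_q)
                      for other, other_q in mapped.items() if other != action]
        rules.append({"prefer": action_map[action], "conditions": conditions})
    return rules


def advised_actions(rules, state):
    """List the actions whose advice preconditions all hold in this state."""
    def value(weights, bias):
        return sum(w * state[f] for f, w in weights.items()) + bias
    return [r["prefer"] for r in rules
            if all(value(w, b) > 0 for w, b in r["conditions"])]


# Made-up Task A (KeepAway-style) models: action -> (feature weights, bias).
TASK_A_Q = {
    "hold_ball": ({"dist_keeper1": 0.5, "dist_taker2": 1.0}, 1.0),
    "pass_near": ({"dist_keeper1": 2.0}, 0.0),
    "pass_far": ({"dist_taker2": 1.0}, -2.0),
}
FEATURE_MAP = {"dist_keeper1": "dist_attacker1", "dist_taker2": MAX_DIST}
ACTION_MAP = {"hold_ball": "move", "pass_near": "pass_near", "pass_far": "pass_far"}
RULES = build_transfer_advice(TASK_A_Q, FEATURE_MAP, ACTION_MAP)

if __name__ == "__main__":
    print(advised_actions(RULES, {"dist_attacker1": 10.0}))
    print(advised_actions(RULES, {"dist_attacker1": 20.0}))
```

With these made-up weights, the mapped hold_ball model dominates when `dist_attacker1` is 10, so the sketch advises `move`; at 20 the mapped pass_near model dominates instead. Each condition is a linear expression of Task B features, which matches the slides' point that advice and Q-functions share the same language.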