Incorporating N-gram Statistics in the Normalization of Clinical Notes

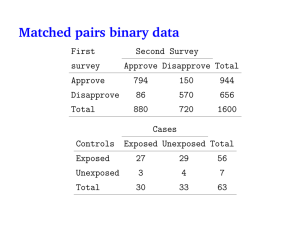

By Bridget Thomson McInnes

Overview
• N-grams
• N-gram statistics for spelling correction
• Spelling correction
• N-gram statistics for multi-term identification
• Multi-term identification

N-grams
Example sentence: "Her dobutamine stress echo showed mild aortic stenosis with a subaortic gradient."

Bigrams: her dobutamine, dobutamine stress, stress echo, echo showed, showed mild, mild aortic, aortic stenosis, stenosis with, with a, a subaortic, subaortic gradient

Trigrams: her dobutamine stress, dobutamine stress echo, stress echo showed, echo showed mild, showed mild aortic, mild aortic stenosis, aortic stenosis with, stenosis with a, with a subaortic, a subaortic gradient

Contingency Tables

|         | Word 2 | !Word 2 |     |
|---------|--------|---------|-----|
| Word 1  | n11    | n12     | n1p |
| !Word 1 | n21    | n22     | n2p |
|         | np1    | np2     | npp |

• n11 = the joint frequency of word 1 and word 2 (the number of times the bigram "word1 word2" occurs)
• n12 = the number of bigrams in which word 1 is the first word and word 2 is not the second
• n21 = the number of bigrams in which word 2 is the second word and word 1 is not the first
• n22 = the number of bigrams in which neither word 1 is the first word nor word 2 the second
• npp = the total number of n-grams
• n1p, n2p, np1 and np2 are the marginal counts (row and column totals)

Contingency Tables: Example
Each of the 11 bigrams listed above occurs once in the example sentence, so npp = 11. For the bigram "stress echo":

|         | echo | !echo |    |
|---------|------|-------|----|
| stress  | 1    | 0     | 1  |
| !stress | 0    | 10    | 10 |
|         | 1    | 10    | 11 |

Contingency Tables: Expected Values
If word 1 and word 2 are independent, the expected cell values are:
• m11 = (np1 * n1p) / npp
• m12 = (np2 * n1p) / npp
• m21 = (np1 * n2p) / npp
• m22 = (np2 * n2p) / npp

For the "stress echo" table:
• m11 = (1 * 1) / 11 ≈ 0.09
• m12 = (10 * 1) / 11 ≈ 0.91
• m21 = (1 * 10) / 11 ≈ 0.91
• m22 = (10 * 10) / 11 ≈ 9.09

What is this telling you? "stress echo" occurs once in the example, but if "stress" and "echo" were independent we would expect it to occur only about 0.09 times (m11).

N-gram Statistics: Measures of Association
• Log likelihood ratio
• Chi-squared test
• Odds ratio
• Phi coefficient
• T-score
• Dice coefficient
• True mutual information

Log Likelihood Ratio
Log likelihood = 2 * ∑ nij * log(nij / mij)
The log likelihood ratio measures the difference between the observed and expected values: each observed count is multiplied by the log of the ratio of the observed to the expected value, and these terms are summed over the four cells (a cell with nij = 0 contributes 0).

Chi-Squared Test
X2 = ∑ (nij - mij)^2 / mij
The chi-squared test also measures the difference between the observed and expected values: it is the sum, over the four cells, of the squared difference between the observed and expected values divided by the expected value.

Odds Ratio
Odds ratio = (n11 * n22) / (n21 * n12)
The odds of an event are the number of times it takes place divided by the number of times it does not. The odds ratio divides the odds that word 2 follows word 1 (n11 / n12) by the odds that word 2 follows any other word (n21 / n22). It is the cross-product ratio of the 2x2 contingency table and measures the magnitude of association between the two words.

Phi Coefficient
Phi = ((n11 * n22) - (n21 * n12)) / sqrt(np1 * n1p * n2p * np2)
The two words of a bigram are considered positively associated if most of the data lies along the diagonal (that is, if n11 and n22 are larger than n12 and n21) and negatively associated if most of the data falls off the diagonal.

T-Score
T-score = (n11 - m11) / sqrt(n11)
The t-score tests whether there is some non-random association between the two words. It is the difference between the observed and expected joint frequencies divided by the square root of the observed joint frequency.
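The measures above need only the four cell counts of a bigram's contingency table. Below is a minimal Python sketch, not the original statistics package. The function names are hypothetical. Zero cells are skipped in the log-likelihood sum (0 * log 0 is taken as 0). The t-score assumes the bigram was observed (n11 > 0). The odds ratio simply returns infinity when n12 or n21 is zero instead of applying any smoothing. Logarithms are natural unless another `log` function is passed in.

```python
import math
from collections import Counter


def contingency(bigram_counts, w1, w2):
    """Return the cells (n11, n12, n21, n22) for the bigram "w1 w2"."""
    npp = sum(bigram_counts.values())
    n11 = bigram_counts[(w1, w2)]
    # Row total n1p: bigrams whose first word is w1.
    n1p = sum(c for (a, _), c in bigram_counts.items() if a == w1)
    # Column total np1: bigrams whose second word is w2.
    np1 = sum(c for (_, b), c in bigram_counts.items() if b == w2)
    return n11, n1p - n11, np1 - n11, npp - n1p - np1 + n11


def expected(n11, n12, n21, n22):
    """Expected cell values (m11, m12, m21, m22) if the two words are independent."""
    n1p, n2p = n11 + n12, n21 + n22
    np1, np2 = n11 + n21, n12 + n22
    npp = n1p + n2p
    return (np1 * n1p / npp, np2 * n1p / npp, np1 * n2p / npp, np2 * n2p / npp)


def log_likelihood(table, log=math.log):
    # 2 * sum of nij * log(nij / mij); empty cells contribute nothing.
    return 2 * sum(n * log(n / m) for n, m in zip(table, expected(*table)) if n > 0)


def chi_squared(table):
    return sum((n - m) ** 2 / m for n, m in zip(table, expected(*table)))


def odds_ratio(table):
    n11, n12, n21, n22 = table
    # No smoothing in this sketch: a zero off-diagonal cell gives infinity.
    return math.inf if n21 * n12 == 0 else (n11 * n22) / (n21 * n12)


def phi(table):
    n11, n12, n21, n22 = table
    n1p, n2p, np1, np2 = n11 + n12, n21 + n22, n11 + n21, n12 + n22
    return (n11 * n22 - n21 * n12) / math.sqrt(np1 * n1p * n2p * np2)


def t_score(table):
    n11 = table[0]
    m11 = expected(*table)[0]
    return (n11 - m11) / math.sqrt(n11)


sentence = "her dobutamine stress echo showed mild aortic stenosis with a subaortic gradient"
tokens = sentence.split()
counts = Counter(zip(tokens, tokens[1:]))  # 11 bigrams, each seen once
table = contingency(counts, "stress", "echo")
print(table)  # (1, 0, 0, 10)
print([round(m, 2) for m in expected(*table)])  # [0.09, 0.91, 0.91, 9.09]
for measure in (log_likelihood, chi_squared, odds_ratio, phi, t_score):
    print(measure.__name__, round(measure(table), 4))
```

On the "stress echo" table the sketch gives phi = 1 and X2 = 11 = npp. This is the largest value possible for that table, because all of the data lies on the diagonal. The "Example of Problem" slide later in the deck gives a "follow"/"hip" table. For n11 = 11 its cells are 11, 88951, 65729 and 69783140. Passing `log=math.log2` to `log_likelihood` on that table gives about 145.36, and n11 = 190 gives about 143.43. Both match the reported values, which suggests those figures were computed with base-2 logarithms.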
Dice Coefficient
Dice coefficient = 2 * n11 / (np1 + n1p)
The Dice coefficient depends on how often the two words occur together (n11) relative to their individual frequencies (np1 and n1p).

True Mutual Information
TMI = ∑ (nij / npp) * log(nij / mij)
True mutual information measures the extent to which the observed frequencies differ from the expected frequencies; each cell's log ratio is weighted by that cell's share of the total count.

Spelling Correction
Context-sensitive information from bigrams is used to rank a given set of possible spelling corrections for a misspelled word.
Given:
• the first content word before the misspelled word
• the first content word after the misspelled word
• a list of possible spelling corrections

Spelling Correction Example
Example sentence: "Her dobutamine stress echo showed mild aurtic stenosis with a subaortic gradient."
Possible corrections: artic, aortic
Basic idea: substitute each possible correction (POS) for the misspelling and score the bigrams it forms with the neighboring content words:
dobutamine stress echo showed mild POS stenosis with subaortic gradient

Spelling Correction Statistics

| Possibility 1    | Score | Possibility 2    | Score |
|------------------|-------|------------------|-------|
| mild artic       | 0.40  | mild aortic      | 0.66  |
| artic stenosis   | 0.03  | aortic stenosis  | 0.30  |
| Weighted average | 0.215 | Weighted average | 0.48  |

• Scoring both bigrams takes into account the content word before the misspelling as well as the one after it.
• Each possible correction is then returned with its score, so "aortic" is ranked above "artic".

Types of Results
• Gspell only
• Context sensitive only
• Hybrid of Gspell and context sensitive: the average of the Gspell and context-sensitive scores. Note: this turns into a backoff method when no statistical data is found for any of the possibilities.
• Backoff method: use only the context-sensitive score unless it does not exist, in which case revert to the Gspell score.

Preliminary Test Set
• Test set: partially scrubbed clinical notes
• Size: 854 words
• Number of misspellings: 82
• Includes abbreviations

Preliminary Results
Gspell results:

| Method | Precision | Recall | F-measure |
|--------|-----------|--------|-----------|
| GSPELL | 0.5357    | 0.7317 | 0.6186    |

Context-sensitive results:

| Measure of association | Precision | Recall | F-measure |
|------------------------|-----------|--------|-----------|
| PHI                    | 0.6161    | 0.8415 | 0.7113    |
| LL                     | 0.6071    | 0.8293 | 0.7010    |
| TMI                    | 0.6071    | 0.8293 | 0.7010    |
| ODDS                   | 0.6071    | 0.8293 | 0.7010    |
| X2                     | 0.6161    | 0.8415 | 0.7113    |
| TSCORE                 | 0.5625    | 0.7683 | 0.6495    |
| DICE                   | 0.6339    | 0.8659 | 0.7320    |

Preliminary Results (continued)
Hybrid method results:

| Measure of association | Precision | Recall | F-measure |
|------------------------|-----------|--------|-----------|
| PHI                    | 0.6607    | 0.9024 | 0.7629    |
| LL                     | 0.6339    | 0.8659 | 0.7320    |
| TMI                    | 0.6607    | 0.9024 | 0.7629    |
| ODDS                   | 0.6250    | 0.8537 | 0.7216    |
| X2                     | 0.6339    | 0.8659 | 0.7320    |
| TSCORE                 | 0.6071    | 0.8293 | 0.7010    |
| DICE                   | 0.6696    | 0.9146 | 0.7732    |

Notes on Log Likelihood
• Log likelihood is used quite often for context-sensitive spelling correction.
• It has a problem with large sample sizes: the marginal values are very large, which increases the expected values, so the actual values are commonly much lower than the expected values.
• As a result, very independent and very dependent n-grams end up with nearly the same value.
• Similar behavior was noticed with true mutual information.

Example of Problem

|         | hip    | !hip     |          |
|---------|--------|----------|----------|
| follow  | n11    | 88951    | 88962    |
| !follow | 65729  | 69783140 | 69848869 |
|         | 65740  | 69872091 | 69937831 |

| n11 | Log likelihood |
|-----|----------------|
| 11  | 145.3647       |
| 190 | 143.4268       |
| 86  | 0.09864        |

The other cells shown correspond to n11 = 11. The marginal totals stay fixed as n11 varies. The expected value is m11 = (65740 * 88962) / 69937831 ≈ 83.6. So n11 = 11 is far below expectation and n11 = 190 is far above it, yet both receive almost the same log likelihood score. In contrast, n11 = 86, which is close to the expected value, scores near 0.

Conclusions with Preliminary Results
• The Dice coefficient returns the best results.
• The phi coefficient returns the second-best results.
• Log likelihood and true mutual information should not be used.
• The program now needs to be tested on a more extensive test bed, which is in the process of being created.

N-gram Statistics for Multi-Term Identification
• The previous statistics package cannot be used: there are memory constraints due to the amount of data, and we would like to look for longer n-grams.
• Alternative: suffix arrays (Church and Yamamoto), which reduce the amount of memory and allow n-grams up to the size of the corpus to be found.
• Two arrays: one contains the corpus; the other contains identifiers for the n-grams (suffixes) in the corpus.
• Two stacks: one contains the longest common prefix; the other contains the document frequency.

Suffix Arrays
Corpus: "to be or not to be"
Each array element is considered the start of a suffix. Each suffix is the n-gram running from that element to the end of the array:
[0] to be or not to be
[1] be or not to be
[2] or not to be
[3] not to be
[4] to be
[5] be

Suffix Arrays (sorted)
Sorting the suffixes gives:
[0] = 5 => be
[1] = 1 => be or not to be
[2] = 3 => not to be
[3] = 2 => or not to be
[4] = 4 => to be
[5] = 0 => to be or not to be
Actual suffix array: 5 1 3 2 4 0

Term Frequency
Term frequency (tf) is the number of times an n-gram occurs in the corpus. To determine the tf of an n-gram:
• Sort the suffix array.
• Find i, the first position in the sorted array whose suffix begins with the n-gram, and j, the last such position.
• tf = j - i + 1
For example, the suffixes at sorted positions [4] and [5] both begin with "to be", so tf("to be") = 5 - 4 + 1 = 2.

Measures of Association
Residual inverse document frequency (RIDF), where df is the document frequency of the term and D is the number of documents:
RIDF = -log(df / D) + log(1 - exp(-tf / D))
RIDF compares the distribution of a term over documents with what would be expected for a random term.

Mutual information (MI), where x and z are the first and last words of the n-gram and Y is the sequence between them:
MI(xYz) = log( (tf(xYz) * tf(Y)) / (tf(xY) * tf(Yz)) )
MI compares the frequency of the whole n-gram with the frequency of its parts.

Present Work
• Calculated MI and RIDF on the clinical notes separately for each section: CC, CM, IP, HPI, PSH, SH and DX.
• Retrieved the text under each section heading.
• Calculated RIDF and MI for every n-gram with a term frequency greater than 10 in each section's text.
• Noticed that different multi-term expressions appear in different sections.

Conclusions
N-gram statistics can be applied directly and indirectly to various problems:
• Directly: spelling correction, compound word identification, term extraction, name identification
• Indirectly: part-of-speech tagging, information retrieval, data mining

Packages
Two statistical packages:
• Contingency table approach. Measures for bigrams: log likelihood, true mutual information, chi-squared test, odds ratio, phi coefficient, t-score and Dice coefficient. Measures for trigrams: log likelihood and true mutual information.
• Suffix array approach. Measures for n-grams of all lengths: residual inverse document frequency and mutual information.
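The following is a minimal Python sketch of the context-sensitive ranking from the spelling-correction slides, not the original program. It makes several assumptions:

• Each candidate's context score is the plain, equally weighted average of its bigram scores with the preceding and following content words. This reproduces the 0.215 in the example.
• A candidate with no bigram data gets no context score.
• The hybrid method averages the context score with a Gspell score and falls back to Gspell alone when no candidate has data.

The `rank` helper, its `method` names and the Gspell scores are hypothetical, and the sketch does not call Gspell. The Dice coefficient and true mutual information functions follow the formulas given earlier and could serve as the bigram scorer.

```python
import math


def expected(n11, n12, n21, n22):
    n1p, n2p, np1, np2 = n11 + n12, n21 + n22, n11 + n21, n12 + n22
    npp = n1p + n2p
    return (np1 * n1p / npp, np2 * n1p / npp, np1 * n2p / npp, np2 * n2p / npp)


def dice(table):
    n11, n12, n21, _ = table
    # 2 * n11 / (np1 + n1p)
    return 2 * n11 / ((n11 + n21) + (n11 + n12))


def tmi(table, log=math.log):
    npp = sum(table)
    # Sum over cells of (nij / npp) * log(nij / mij); empty cells contribute 0.
    return sum(n / npp * log(n / m) for n, m in zip(table, expected(*table)) if n > 0)


def context_score(prev, cand, nxt, bigram_score):
    """Average the scores of "prev cand" and "cand nxt"; None if neither has data."""
    found = [s for s in (bigram_score(prev, cand), bigram_score(cand, nxt)) if s is not None]
    return sum(found) / len(found) if found else None


def rank(candidates, prev, nxt, bigram_score, gspell_score, method="hybrid"):
    """Hypothetical ranking helper; method is "gspell", "context", "backoff" or "hybrid"."""
    ctx = {c: context_score(prev, c, nxt, bigram_score) for c in candidates}
    any_data = any(s is not None for s in ctx.values())

    def final(c):
        if method == "gspell":
            return gspell_score[c]
        if method == "context":
            return ctx[c] if ctx[c] is not None else 0.0
        if method == "backoff":
            return ctx[c] if ctx[c] is not None else gspell_score[c]
        # "hybrid": average both scores, or Gspell alone if no candidate has data.
        if not any_data:
            return gspell_score[c]
        return (gspell_score[c] + (ctx[c] or 0.0)) / 2

    return sorted(((c, final(c)) for c in candidates), key=lambda cs: cs[1], reverse=True)


# Bigram scores from the "mild aurtic stenosis" example.
example_scores = {("mild", "artic"): 0.40, ("artic", "stenosis"): 0.03,
                  ("mild", "aortic"): 0.66, ("aortic", "stenosis"): 0.30}


def lookup(a, b):
    return example_scores.get((a, b))


gspell = {"artic": 0.6, "aortic": 0.5}  # made-up Gspell scores for illustration

for method in ("gspell", "context", "backoff", "hybrid"):
    print(method, rank(["artic", "aortic"], "mild", "stenosis", lookup, gspell, method))
```

With the example's bigram scores, the context-only ranking puts "aortic" (0.48) ahead of "artic" (0.215). The made-up Gspell scores on their own would prefer "artic". The hybrid average again prefers "aortic" (0.49 against 0.4075).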
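The suffix-array approach can be illustrated with a small Python sketch on the "to be or not to be" corpus. It makes the following assumptions:

• The word-level suffix array is built by plain sorting, not by the Church and Yamamoto construction, and without their longest-common-prefix and document-frequency stacks.
• Term frequency is the size of the block of sorted suffixes that start with the n-gram, i.e. j - i + 1.
• `mutual_information` only handles n-grams of three or more words (x, a non-empty Y, z).
• MI and RIDF use base-2 logarithms.
• The function names are hypothetical.

```python
import math
from bisect import bisect_left, bisect_right


def suffix_array(tokens):
    # Sort suffix start positions by the word sequence that follows each one.
    return sorted(range(len(tokens)), key=lambda i: tokens[i:])


def term_frequency(tokens, sa, ngram):
    k = len(ngram)
    # Truncating every sorted suffix to k words keeps the list sorted,
    # so all suffixes that begin with `ngram` form one contiguous block.
    prefixes = [tuple(tokens[i:i + k]) for i in sa]
    i = bisect_left(prefixes, tuple(ngram))       # first position of the block
    j = bisect_right(prefixes, tuple(ngram)) - 1  # last position of the block
    return j - i + 1  # 0 when the n-gram does not occur


def mutual_information(tokens, sa, ngram):
    """MI(xYz) = log2(tf(xYz) * tf(Y) / (tf(xY) * tf(Yz))) for an n-gram of 3+ words."""
    if len(ngram) < 3:
        raise ValueError("this sketch needs x, a non-empty Y, and z")

    def tf(g):
        return term_frequency(tokens, sa, g)

    whole = tf(ngram)
    if whole == 0:
        raise ValueError("the n-gram does not occur in the corpus")
    return math.log2(whole * tf(ngram[1:-1]) / (tf(ngram[:-1]) * tf(ngram[1:])))


def ridf(tf, df, num_docs):
    """Observed IDF minus the IDF predicted for a Poisson-distributed (random) term."""
    return -math.log2(df / num_docs) + math.log2(1 - math.exp(-tf / num_docs))


tokens = "to be or not to be".split()
sa = suffix_array(tokens)
print(sa)  # [5, 1, 3, 2, 4, 0]
print(term_frequency(tokens, sa, ["to", "be"]))  # 2
print(mutual_information(tokens, sa, ["to", "be", "or"]))  # 0.0
print(ridf(tf=10, df=2, num_docs=100))
```

The resulting array matches the "Actual suffix array: 5 1 3 2 4 0" slide. Sorting with `tokens[i:]` as the key copies every suffix, so this construction needs quadratic memory in the worst case. That is fine for illustration but not for a corpus of clinical notes.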