Academic Assessment Handbook


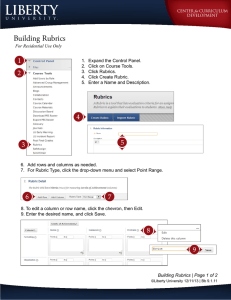

Handbook for the Assessment of Student Learning
STATE UNIVERSITY OF NEW YORK AT DELHI
JUNE 2011

What is assessment?

Academic assessment is the process of gathering data to improve teaching and learning. It begins with clear, measurable statements describing the expected outcomes of student learning, coupled with learning opportunities that enable students to achieve those outcomes. To measure learning, institutions develop measurement tools aligned with the student learning outcomes and systematically gather data with those tools. The results can then be interpreted to determine how well student learning matches our curricular expectations, and they help to direct change. After change is implemented, the process begins again and continues cyclically so that teaching and learning continually improve.

[Figure: The assessment cycle. Labels: provide learning opportunities; exams, labs, projects, papers, etc.; rubrics; standards & benchmarks; change outcomes, change assessment tools, change goals, change rubrics.]

Why do assessment?

SUNY Delhi faculty are dedicated teachers who want their students to learn. The assessment process provides feedback on teaching and learning while opening lines of communication between faculty and staff. With data, faculty and staff can determine what is and is not working, make informed decisions about changes to their courses and programs, and spend resources wisely. For students, the assessment process helps them learn more effectively by setting clear expectations for what they should know and be able to do.

What are the components of assessment?

- Program Goals
- Student Learning Outcomes
- Curriculum Mapping
- Assessment Tools
- Rubrics
- Setting Standards or Benchmarks
- Gathering Evidence
- Closing the Loop

Goals

Goals are what you, your program, or your college want to achieve.
For example, SUNY Delhi wants its transfer students to succeed at their four-year institution of choice; the culinary department wants to offer a quality, state-of-the-art educational program; Professor Burger wants her students to appreciate Shakespeare.

Student Learning Outcomes

Student learning outcomes are goals describing what students take with them from a program or a course. Learning outcomes typically explain not only what students will know or be able to do, but why. Saying that students in your public speaking course will submit an outline for their introductory speech may be a course objective or course requirement, but the learning outcome is that students understand the importance of organization when speaking in public. (So all student learning outcomes are goals, but not all goals are student learning outcomes.)

Not all learning outcomes are course-based. Some are programmatic and may appear in more than one course outline in a program. In theory, each of a program's student learning outcomes should appear at least once across the course outlines/syllabi for the program's required courses.

What is the difference between program student learning outcomes and course student learning outcomes?

Program student learning outcomes describe the overarching learning that occurs in multiple courses across the curriculum. They are broad descriptions of what students should know, how they should think about the discipline, or what they should be able to do when they finish the program. When writing them, also consider the institutional goals and how the program student learning outcomes support those goals. As with all student learning outcomes, they should be written in measurable terms. In contrast, course student learning outcomes are more specific and describe what students should know, think, or be able to do when they finish the course.
They should be more detailed than program student learning outcomes and should be related to the course content, tests, and other graded work of the course.

Excellent guidelines and examples for writing student learning outcomes may be found at the University of Connecticut Assessment website: http://assessment.uconn.edu/docs/HowToWriteObjectivesOutcomes.pdf

Bloom's Taxonomy is a multi-tiered classification of thinking and learning skills into six levels of complexity, ranging from basic knowledge acquisition to the more complex levels of creating and synthesizing (Bloom & Krathwohl, 1956). In the 1990s, Bloom's Taxonomy was revised to reflect the emphasis on student learning outcomes: the classifications became action verbs rather than nouns (Anderson & Krathwohl, 2001). Bloom's Taxonomy can help in writing student learning outcomes that use action verbs and encourage higher-order thinking and learning.

Bloom's Taxonomy (New Version)

- Remembering: Can the student recall or remember the information? (define, duplicate, list, memorize, recall, repeat, reproduce, state)
- Understanding: Can the student explain ideas or concepts? (classify, describe, discuss, explain, identify, locate, recognize, report, select, translate, paraphrase)
- Applying: Can the student use the information in a new way? (choose, demonstrate, dramatize, employ, illustrate, interpret, operate, schedule, sketch, solve, use, write)
- Analyzing: Can the student distinguish between the different parts? (appraise, compare, contrast, criticize, differentiate, discriminate, distinguish, examine, experiment, question, test)
- Evaluating: Can the student justify a stand or decision? (appraise, argue, defend, judge, select, support, value, evaluate)
- Creating: Can the student create a new product or point of view? (assemble, construct, create, design, develop, formulate, write)
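To show how the verb lists above might be used when drafting outcomes, here is a minimal Python sketch (Python is an illustrative choice; the handbook prescribes no software) that reports which level(s) of the revised taxonomy an outcome's leading verb belongs to. The names `BLOOM_VERBS` and `bloom_levels` are hypothetical, and the sketch assumes each outcome statement begins with its action verb. Because a few verbs (appraise, select, write) appear at more than one level, the function returns a list.

```python
from __future__ import annotations

# Hypothetical lookup built from the verb lists in this handbook.
# Some verbs appear at more than one level.
BLOOM_VERBS = {
    "Remembering": ["define", "duplicate", "list", "memorize", "recall",
                    "repeat", "reproduce", "state"],
    "Understanding": ["classify", "describe", "discuss", "explain", "identify",
                      "locate", "recognize", "report", "select", "translate",
                      "paraphrase"],
    "Applying": ["choose", "demonstrate", "dramatize", "employ", "illustrate",
                 "interpret", "operate", "schedule", "sketch", "solve", "use",
                 "write"],
    "Analyzing": ["appraise", "compare", "contrast", "criticize", "differentiate",
                  "discriminate", "distinguish", "examine", "experiment",
                  "question", "test"],
    "Evaluating": ["appraise", "argue", "defend", "judge", "select", "support",
                   "value", "evaluate"],
    "Creating": ["assemble", "construct", "create", "design", "develop",
                 "formulate", "write"],
}


def bloom_levels(outcome: str) -> list[str]:
    """Return the levels whose verb lists contain the outcome's first word.

    ASSUMPTION: the outcome statement starts with its action verb.
    Returns an empty list if the verb is not in the table above.
    """
    words = outcome.strip().split()
    if not words:
        return []
    verb = words[0].lower().strip(".,;:")
    return [level for level, verbs in BLOOM_VERBS.items() if verb in verbs]


print(bloom_levels("Design a balanced menu for a banquet"))
print(bloom_levels("Appraise the argument in a published editorial"))
```

An empty result (for example, for an outcome beginning with "Understand") is a hint to consider one of the action verbs listed above instead.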
Objectives

Objectives describe the details of goals or student learning outcomes, or the tasks that must be done to achieve a goal: they are the means, and the learning outcome is the end itself. A course objective may be to have students complete 20 hours of community service. The goal isn't to have students complete the task; the goal is that students understand the value of working as a team and develop a sense of responsibility to their communities.

What is the difference between goals and objectives?

Goals state intended outcomes in general terms; objectives state them in specific terms.

What is the difference between objectives and outcomes?

Objectives are the intended results of instruction, while outcomes are the achieved results of what was learned. Objectives are more teacher-centered and are written in terms of what the teacher intends to do and the subject matter the teacher intends to cover. Outcomes are more student-centered in that they describe what the student should learn and take away from the course or program.

Curriculum Mapping

Once program-level and course-level student learning outcomes have been written, a curriculum map can be created to examine how they fit together. Curriculum mapping is the process of linking particular content and skills to particular courses. A curriculum map can show where learning outcomes overlap, where they are missing, and where they need improvement. To design a curriculum map, create a grid with the broad program student learning outcomes down the left side and the courses and internships across the top, then check off the learning outcomes addressed in each course.

[Example grid: Student Learning Outcomes 1 to 3 and Courses 1 to 4, with an x marking each outcome a course addresses.]

Excellent guidelines and examples of curriculum maps may be found at the University of West Florida Center for University Teaching, Learning, and Assessment website: http://uwf.edu/cutla/curriculum_maps.cfm
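The grid check described above can be sketched in a few lines of Python. This is a minimal illustration, not a SUNY Delhi tool: the function names and the sample map (Course 1 to Course 4, SLO 1 to SLO 3) are hypothetical. Each course maps to the set of program outcomes it addresses; the sketch prints the grid with outcomes down the left side and courses across the top, then lists outcomes no course addresses (gaps) and outcomes addressed by more than one course (overlaps).

```python
from __future__ import annotations


def outcome_coverage(curriculum_map: dict[str, set[str]],
                     outcomes: list[str]) -> dict[str, list[str]]:
    """For each outcome, list the courses that address it."""
    return {o: [course for course, addressed in curriculum_map.items()
                if o in addressed]
            for o in outcomes}


def print_grid(curriculum_map: dict[str, set[str]], outcomes: list[str]) -> None:
    """Print outcomes down the left and courses across the top, x per match."""
    courses = list(curriculum_map)
    width = max(len(o) for o in outcomes)
    print(" " * width + " | " + " | ".join(courses))
    for o in outcomes:
        cells = [("x" if o in curriculum_map[c] else "").center(len(c))
                 for c in courses]
        print(o.ljust(width) + " | " + " | ".join(cells))


# Hypothetical example data, not taken from any SUNY Delhi program.
outcomes = ["SLO 1", "SLO 2", "SLO 3"]
curriculum_map = {
    "Course 1": {"SLO 1"},
    "Course 2": {"SLO 1", "SLO 2"},
    "Course 3": set(),
    "Course 4": {"SLO 2"},
}

coverage = outcome_coverage(curriculum_map, outcomes)
missing = [o for o, courses in coverage.items() if not courses]
overlapping = {o: courses for o, courses in coverage.items() if len(courses) > 1}

print_grid(curriculum_map, outcomes)
print("Missing:", missing)
print("Overlapping:", overlapping)
```

In this sample, SLO 3 is a gap that no course addresses, while SLO 1 and SLO 2 each appear in two courses; whether an overlap is healthy reinforcement or redundancy is a judgment the program faculty would still need to make.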
Assessment Tools

Student learning can be assessed both directly and indirectly. Direct assessment is tangible, self-explanatory evidence of exactly what students have and have not learned, and it can be measured by tools such as tests, papers, presentations, and projects. Indirect assessment is less clear and less convincing than direct assessment because it only signals that students are probably learning. Indirect assessment includes measures of student perceptions such as surveys (alumni, student, employer) and evaluations.

Questions to ask about student learning outcomes and assessment tools:

- Is it possible to assess this outcome?
- What types of activities (tests, quizzes, projects, essays) will best assess this outcome?
- How important is this outcome? What percentage of the final grade should be based on it?
- Are the activities in this course actively measuring the outcomes?
- Are the outcomes student-centered or course-centered?
- Do the questions on my exams directly measure the outcomes?
- Do my assignments help students achieve the outcomes?
- Is the time students will spend on the assignment measuring this outcome worth it? (Is the time you'll spend grading the assignment worth it?)
- Are my outcomes too broad? Too specific?
- Why am I asking students to do the assignments I am requiring? (The answers ought to be the learning outcomes.)

When creating the assessment tool to measure a student learning outcome, word the question or assignment so that it clearly indicates the product students should produce. Without a clearly written question, students may complete the assignment without learning what we want them to learn.

While research papers, essays, and tests can be used to assess student learning outcomes, students can demonstrate their learning in many ways beyond the typical assignment. The following shows examples of assignments that can be used to address higher-order thinking according to Bloom's Taxonomy.
Rubrics

A rubric is a scoring guide that evaluates a student's performance based on the sum of a full range of criteria rather than a single numerical score.

Why Should I Use Rubrics?

- Rubrics improve student performance by clearly showing students how you will evaluate their work and what you expect.
- Rubrics help students better judge the quality of their own work.
- Rubrics make assessment more objective and consistent, reducing student complaints and arguments about grades.
- Rubrics force the teacher to clarify criteria in specific terms, eliminating vague or fuzzy goals.
- Rubrics reduce the time you spend evaluating student work.
- Rubrics make students more confident in their peer evaluations.
- Rubrics provide useful feedback on how effective your teaching has been.
- Rubrics give students specific, structured feedback about their strengths and weaknesses.
- Rubrics are easy to use and easy to explain.

Types of Rubrics

- Rating scale rubric: uses checkboxes with a rating scale showing the degree to which required items appear in a completed assignment.
- Descriptive rubric: like a rating scale rubric, except that the checkboxes are replaced by descriptions of what must be done to earn each possible rating.

Steps to Creating an Effective Rubric

1. Look for models: someone else may already have designed a rubric that could be modified for your use.
2. List the things you are looking for in the completed assignment.
3. Leave wiggle room: base some percentage of the assignment on "originality" or "effort," or you risk seeing neither in the final product.
4. Create a rating scale with at least three levels.
5. Label levels with descriptive names, not numbers.
6. Fill in the boxes (for descriptive rubrics).
7. Try it out, and modify it if it isn't giving you the results you want.
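Because a rubric scores performance as the sum of several criteria, the arithmetic is easy to automate. The Python sketch below is illustrative only: it assumes the four levels used in this handbook's example rubrics (Beginning = 1, Developing = 2, Accomplished = 3, Exemplary = 4), and the `Level` enum, the `rubric_total` and `weakest_criteria` functions, and the sample ratings are hypothetical. It returns the total alongside the maximum possible score and names the lowest-rated criteria, so the feedback to the student stays specific.

```python
from __future__ import annotations

from enum import IntEnum


class Level(IntEnum):
    """Rating levels from this handbook's example descriptive rubrics."""
    BEGINNING = 1
    DEVELOPING = 2
    ACCOMPLISHED = 3
    EXEMPLARY = 4


def rubric_total(ratings: dict[str, Level]) -> tuple[int, int]:
    """Return (total score, maximum possible score) for one student's ratings."""
    total = sum(int(level) for level in ratings.values())
    maximum = len(ratings) * int(max(Level))
    return total, maximum


def weakest_criteria(ratings: dict[str, Level]) -> list[str]:
    """Return the criteria that received the lowest rating."""
    lowest = min(ratings.values())
    return [criterion for criterion, level in ratings.items() if level == lowest]


# Hypothetical ratings for one science research paper.
ratings = {
    "Introduction": Level.ACCOMPLISHED,
    "Research": Level.DEVELOPING,
    "Procedure": Level.EXEMPLARY,
    "Data & Results": Level.ACCOMPLISHED,
    "Conclusion": Level.ACCOMPLISHED,
    "Grammar & Spelling": Level.EXEMPLARY,
    "Timeliness": Level.EXEMPLARY,
}

print(rubric_total(ratings))      # (23, 28)
print(weakest_criteria(ratings))  # ['Research']
```

Keeping the level names in the enum follows the advice to label levels with descriptive names, while the integer values still allow a total to be computed.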
Example Rubrics

Descriptive Rubric for a Science Research Paper

| Criterion | Beginning (1) | Developing (2) | Accomplished (3) | Exemplary (4) |
|---|---|---|---|---|
| Introduction | Does not give any information about what to expect in the report. | Gives very little information. | Gives too much information; more like a summary. | Presents a concise lead-in to the report. |
| Research | Does not answer any questions suggested in the template. | Answers some questions. | Answers some questions and includes a few other interesting facts. | Answers most questions and includes many other interesting facts. |
| Procedure | Not sequential; most steps are missing or confusing. | Some of the steps are understandable; most are confusing and lack detail. | Most of the steps are understandable; some lack detail or are confusing. | Presents easy-to-follow steps that are logical and adequately detailed. |
| Data & Results | Data table and/or graph are missing information and are inaccurate. | Both complete; minor inaccuracies and/or illegible characters. | Both accurate; some ill-formed characters. | Data table and graph neatly completed and totally accurate. |
| Conclusion | Presents an illogical explanation for findings and does not address any of the questions suggested in the template. | Presents an illogical explanation for findings and addresses few questions. | Presents a logical explanation for findings and addresses some of the questions. | Presents a logical explanation for findings and addresses most of the questions. |
| Grammar & Spelling | Very frequent grammar and/or spelling errors. | More than two errors. | Only one or two errors. | All grammar and spelling are correct. |
| Timeliness | Report handed in more than one week late. | Up to one week late. | Up to two days late. | Report handed in on time. |
| Total Score | | | | |

Checklist Rubric for Art Problem Solving Assignment

| Stage | Behavior | Not often | Usually | Always |
|---|---|---|---|---|
| PROBLEM FINDING (Task definition) | The student makes a plan or draws a preliminary sketch. | | | |
| | Comments: | | | |
| FACT FINDING (Information seeking and locating resources) | The student brainstorms ideas in order to have several solutions from which to choose. | | | |
| | Comments: | | | |
| SOLUTION FINDING (Synthesis: putting all the information together) | The student is willing to try new things and make changes in his/her art. | | | |
| | The student asks questions when he/she does not understand. | | | |
| | The student listens to the teacher's suggestions for improvement. | | | |
| | The student works hard to finish the project or task. | | | |
| | Comments: | | | |
| EVALUATING | The student looks for things he/she can improve. | | | |
| | Comments: | | | |

Checklist Rubric for Automotive Program (courtesy Steve Tucker)

Note to instructor: Prepare the vehicle by setting the fan on any speed except high, the temperature anywhere but full cold, and the A/C control off. Award 1 point for each "yes." NO SAFETY GLASSES and/or failure to follow safety rules = minus 5 points.

Part 1: Performance Test

| Item | Yes | No |
|---|---|---|
| The student properly prepared the vehicle for the performance test (fan on high, full cold, normal A/C). | | |
| Installed the thermometer in the correct location. | | |
| Correctly interpreted the pressure gauge reading on the low side. | | |
| Correctly interpreted the pressure gauge reading on the high side. | | |
| Correctly interpreted performance test results or faulty readings. | | |

Part 2: Leak Detection

| Item | Yes | No |
|---|---|---|
| Probe is used in the correct position (below a line), ¼" away. | | |
| Circles fittings and connections in lines/hoses. | | |
| Moves detector probe at the proper rate (no faster than 1-2"/second). | | |
| Knows the minimum PSI required for the test (minimum of 50). | | |
| Knows how the detector will react when a leak exists. | | |

Overall performance:
- Excellent (all "yes" with a high level of expertise): 5 points
- Satisfactory (two or fewer "no," acceptable expertise): 3.5 points
- More preparation needed (three or more "no"): 2.5 points

Total points: ______

Comments:

Rating Scale Rubric for Automotive Program (courtesy Steve Tucker)

Rating Scale and Recommendation: Automotive Heating and Air Conditioning

Name: ____________________________ Date: ________

Rating scale:
- 1: No knowledge of subject matter; unable to perform a minimal amount of the task; considerable preparation required.
- 2: Inadequate knowledge/skill; more preparation needed.
- 3: Basic understanding, minimal knowledge and skills; requires close supervision; significant preparation required.
- 4: Moderate understanding and skills; requires some guidance; more preparation required; exceeded allocated time limit.
- 5: Entry level; demonstrates excellent knowledge and skills; requires very little guidance; within allocated time limit.

Based on classroom knowledge and practical and live work performance, for each of the following the student demonstrated:

| Area | 5 | 4 | 3 | 2 | 1 |
|---|---|---|---|---|---|
| A/C System Diagnosis and Repair | | | | | |
| Refrigeration System Component Diagnosis and Repair | | | | | |
| Heating/Ventilation Diagnosis and Repair | | | | | |
| Cooling System Diagnosis and Repair | | | | | |
| Control System Diagnosis and Repair | | | | | |
| Refrigerant Recovery, Recycling and Handling | | | | | |

Instructor: ____________________________ Date: __________

Rubric Template

| Criterion | Beginning (1) | Developing (2) | Accomplished (3) | Exemplary (4) | Score |
|---|---|---|---|---|---|
| | | | | | |

In the left-hand column, list the aspects of the assignment you are looking for. In the boxes to the right, describe the performances that merit each rating. Use as many rows as you need.

More excellent examples of rubrics by subject area may be found at the Teacher Planet website: www.rubrics4teachers.com
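The point arithmetic on the automotive checklist can be sketched in Python as well. The checklist does not spell out how its parts combine into "Total points," so this sketch assumes (and it is only an assumption) that the total is one point per "yes," plus the overall performance points, minus 5 for a safety violation. It also assumes that an all-"yes" sheet without a high level of expertise falls in the satisfactory band. `ChecklistResult`, `overall_performance_points`, and `total_points` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ChecklistResult:
    answers: list[bool]       # one entry per checklist item; True means "yes"
    high_expertise: bool      # instructor's judgment, needed for "excellent"
    safety_violation: bool    # no safety glasses and/or safety rules not followed


def overall_performance_points(no_count: int, high_expertise: bool) -> float:
    """Map the number of "no" answers to the overall performance bands."""
    if no_count == 0 and high_expertise:
        return 5.0   # excellent
    if no_count <= 2:
        return 3.5   # satisfactory (ASSUMPTION: includes all "yes" without high expertise)
    return 2.5       # more preparation needed


def total_points(result: ChecklistResult) -> float:
    """ASSUMPTION: 1 point per "yes" + overall points - 5 for a safety violation."""
    yes_count = sum(result.answers)
    no_count = len(result.answers) - yes_count
    total = yes_count + overall_performance_points(no_count, result.high_expertise)
    if result.safety_violation:
        total -= 5
    return total


# Ten items (five performance-test items, five leak-detection items); one "no".
student = ChecklistResult(answers=[True] * 9 + [False],
                          high_expertise=False, safety_violation=False)
print(total_points(student))  # 12.5
```

Under these assumptions a perfect sheet with a high level of expertise earns 15 points; if the program intends a different combination, only `total_points` would need to change.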
Setting Benchmarks or Standards

Benchmarks or standards should be set before you begin collecting assessment results. Standards may be local (set by a program at SUNY Delhi), external (set by an external accrediting agency or other organization), or historic (improving over time). To set appropriate benchmarks, research what programs at other colleges are doing, discuss ideas with faculty members in the program, and use examples of previous student work to inform your decision.

Competencies/Proficiencies: Student learning outcomes that focus on skills rather than knowledge. "Students must be able to type 90 words per minute" is a proficiency outcome.

Standards/Benchmarks: Specific scores used to measure success in achieving outcomes. A department might set a goal of having 75% of its students pass LITR 260 with a C or better; the "C" is the standard against which students are measured.

Measurement Criteria: The standards used to measure student success in meeting course objectives. Competencies and standards or benchmarks are common measurement criteria in skills-based courses. Measurement criteria generally define quality in the course and specify how the instructor will determine students' level of understanding. Every student learning outcome should be measured, and the method used to measure it should appear in the measurement criteria.

Course information documents at SUNY Delhi (commonly called "syllabi" or "course outlines") must include measurable student learning outcomes and measurement criteria as categories. Course outlines and syllabi also, by necessity, contain other things: some outlines list benchmarks and proficiencies under "measurement criteria"; some list objectives in the "measurable student learning outcomes" section. Course outlines and syllabi often contain objectives and goals that are not measurable; not everything of value in academics can be set against a yardstick.
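A benchmark like the LITR 260 example above (75% of students earning a C or better) reduces to a share-and-compare check. The Python sketch below is a minimal illustration under stated assumptions: grades are plain letters A, B, C, D, or F with no plus/minus variants, and `meets_benchmark` and the sample grades are hypothetical.

```python
from __future__ import annotations

# ASSUMPTION: simple letter grades, lowest to highest, with no +/- variants.
GRADE_ORDER = ["F", "D", "C", "B", "A"]


def meets_benchmark(grades: list[str], standard: str = "C",
                    target_share: float = 0.75) -> tuple[float, bool]:
    """Return the share of students at or above the standard, and whether
    that share reaches the target."""
    if not grades:
        raise ValueError("no grades to evaluate")
    threshold = GRADE_ORDER.index(standard)
    at_or_above = sum(1 for g in grades if GRADE_ORDER.index(g.upper()) >= threshold)
    share = at_or_above / len(grades)
    return share, share >= target_share


# Hypothetical section of eight students.
grades = ["A", "B", "C", "D", "B", "C", "F", "A"]
share, met = meets_benchmark(grades)
print(f"{share:.0%} earned a C or better; benchmark met: {met}")
```

Keeping `standard` and `target_share` as separate parameters mirrors the distinction drawn above: the "C" is the standard, and 75% is the department's goal for how many students reach it.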
Gathering Evidence

The procedure for gathering assessment data can be overwhelming, so it is important to develop a system that is both manageable and meaningful. The following suggestions come from other SUNY Delhi faculty members:

1. Don't reinvent the wheel: Re-examine the assessment tools you are currently using; many of them probably already assess the student learning outcomes of the course. If not, how can they be adapted to measure the student learning outcomes of your course?
2. Take a representative sample: In courses with multiple sections, choose a representative sample of student work to assess rather than assessing every student in every section.
3. Break it up: Rather than collecting data for every student learning outcome each semester, choose two or three student learning outcomes to assess each semester. Complete the assessment of all student learning outcomes on a two- or three-year cycle.
4. Collect student work and assess it at the end of the semester: Keep all assignments used for assessment purposes rather than handing them back to students. At the end of the semester, sit down with a group of fellow faculty members and rate each piece of student work using the grading rubric.

Typically, results are reported as percentages. For example, 10% of students exceeded the standard, 40% met the standard, 20% approached the standard, and 30% did not meet the standard.

Reporting the Results

Results should be reported on the assessment website once it is fully implemented. In the meantime, report results to your division dean, who will in turn share them with the Document Repository in the Library, the Deans' Council, and the Provost's Office.
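Turning the faculty group's ratings into the percentages described under Gathering Evidence takes only a tally. In this Python sketch, the four category labels mirror the handbook's example; the function name `report_percentages` and the 20 sample ratings are hypothetical, chosen so the output reproduces the handbook's illustrative 10/40/20/30 split.

```python
from __future__ import annotations

from collections import Counter

CATEGORIES = ["Exceeded", "Met", "Approached", "Did not meet"]


def report_percentages(ratings: list[str]) -> dict[str, float]:
    """Return the percentage of rated work in each category, rounded to 0.1."""
    if not ratings:
        raise ValueError("no ratings to report")
    counts = Counter(ratings)
    unknown = set(counts) - set(CATEGORIES)
    if unknown:
        raise ValueError(f"unrecognized categories: {sorted(unknown)}")
    return {cat: round(100 * counts[cat] / len(ratings), 1) for cat in CATEGORIES}


# Hypothetical ratings for 20 pieces of student work.
ratings = (["Exceeded"] * 2 + ["Met"] * 8 + ["Approached"] * 4
           + ["Did not meet"] * 6)
for category, pct in report_percentages(ratings).items():
    print(f"{category}: {pct}%")
```

With other sample sizes the rounded percentages may not add up to exactly 100, so it can help to report the number of pieces of work rated alongside them.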
Closing the Loop

One of the most difficult parts of the assessment process is using the results to improve teaching and learning, or "closing the loop." Yet for assessment to be effective, this is the most important part of the process. Once you have gathered and analyzed the data:

1. Re-examine the instruction: Is the student learning outcome being addressed completely in the class? How can the instruction be improved to address it? How can course materials such as handouts, textbooks, PowerPoints, and assignments be improved?
2. Re-examine the student learning outcomes and goals: Are they appropriate for this course, or should they be covered in another course?
3. Re-examine the rubric: Did the rubric accurately measure the assignment? In other words, did the grade assigned by the rubric match your "gut feeling" about what the grade should be? If not, how can it be adapted to better measure the assignment?
4. Re-examine the assessment tool: Did the assessment tool measure the student learning outcome? If not, can it be reworded so that students produce what is intended?
5. Re-examine the assessment process: Could this student learning outcome be assessed in a better way?

References

Anderson, L. W., & Krathwohl, D. R. (2001). A taxonomy for learning, teaching, and assessing: A revision of Bloom's taxonomy of educational objectives. New York: Longman.

Bloom, B. S., & Krathwohl, D. R. (1956). Taxonomy of educational objectives: The classification of educational goals by a committee of college and university examiners. Handbook I: Cognitive domain. New York: Longmans, Green.

Center for University Teaching, Learning, and Assessment. (2011). Retrieved from http://www.uwf.edu/cutla/index.cfm

Suskie, L. (2009). Assessing student learning: A common sense guide. San Francisco, CA: Jossey-Bass.

University of Connecticut Assessment Primer. (n.d.). Retrieved from http://assessment.uconn.edu/primer/index.html