
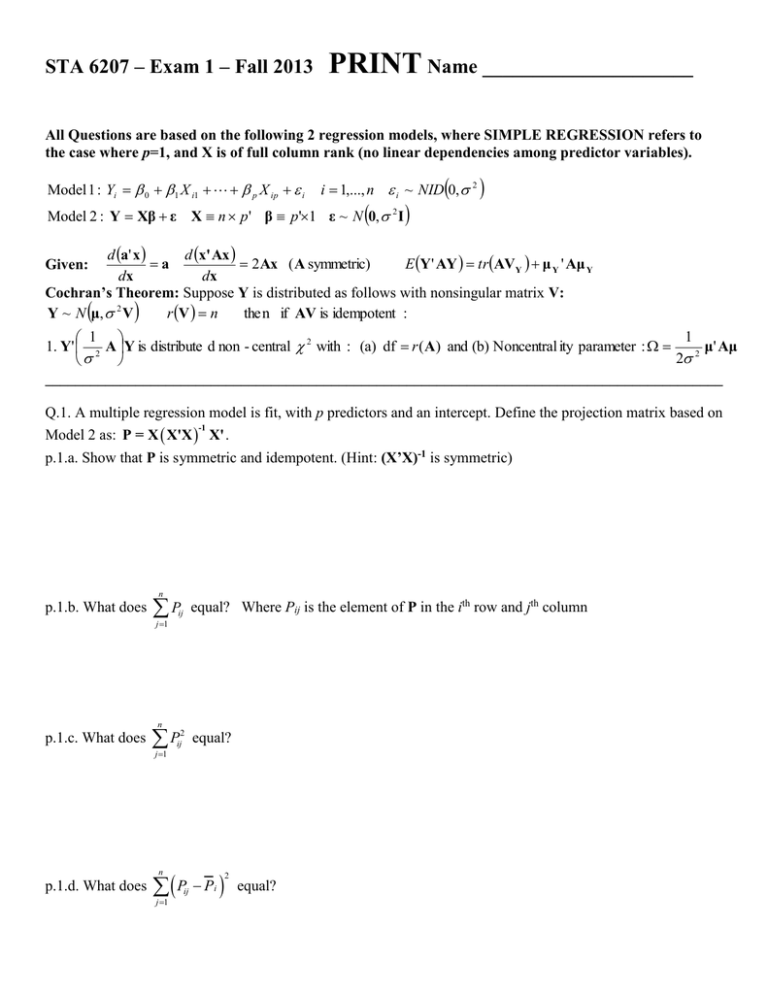

STA 6207 – Exam 1 – Fall 2013

PRINT Name _____________________

All Questions are based on the following 2 regression models, where SIMPLE REGRESSION refers to the case where p=1, and X is of full column rank (no linear dependencies among predictor variables).

Model 1: $Y_i = \beta_0 + \beta_1 X_{i1} + \cdots + \beta_p X_{ip} + \varepsilon_i \qquad i = 1,\ldots,n \qquad \varepsilon_i \sim NID(0, \sigma^2)$

Model 2: $Y = X\beta + \varepsilon \qquad X \equiv n \times p' \qquad \beta \equiv p' \times 1 \qquad \varepsilon \sim N(0, \sigma^2 I)$

Given:

$$\frac{d\,a'x}{dx} = a \qquad \frac{d\,x'Ax}{dx} = 2Ax \ (A \text{ symmetric}) \qquad E[Y'AY] = tr(AV_Y) + \mu_Y' A \mu_Y$$

Cochran’s Theorem: Suppose Y is distributed as follows with nonsingular matrix V: $Y \sim N(\mu, \sigma^2 V)$, $r(V) = n$. Then, if AV is idempotent:

1. $Y'\left(\frac{1}{\sigma^2}A\right)Y$ is distributed non-central $\chi^2$ with: (a) df $= r(A)$ and (b) Noncentrality parameter: $\frac{\mu' A \mu}{2\sigma^2}$

__________________________________________________________________________________________

Q.1. A multiple regression model is fit, with p predictors and an intercept. Define the projection matrix based on Model 2 as: $P = X(X'X)^{-1}X'$.

p.1.a. Show that P is symmetric and idempotent. (Hint: $(X'X)^{-1}$ is symmetric)

p.1.b. What does $\sum_{j=1}^{n} P_{ij}$ equal? Where $P_{ij}$ is the element of P in the ith row and jth column.

p.1.c. What does $\sum_{j=1}^{n} P_{ij}^2$ equal?

p.1.d. What does $\sum_{j=1}^{n} \left(P_{ij} - \bar{P}_{i}\right)^2$ equal?

Q.2. A multiple linear regression model is fit, relating height (Y, mm) to hand length (X1, mm) and foot length (X2, mm), for a sample of n = 20 adult males. The following partial computer output is obtained, for model 1 with 2 predictors.

| ANOVA | df | SS | MS | F | F(0.05) |
|---|---|---|---|---|---|
| Regression | | 37497 | | | |
| Residual | | 19772 | | #N/A | #N/A |
| Total | | 57269 | #N/A | #N/A | #N/A |

| | Coefficients | Standard Error | t Stat | P-value |
|---|---|---|---|---|
| Intercept | 1055.78 | 132.86 | 7.95 | 0.0000 |
| Hand | 1.26 | 0.55 | 2.28 | 0.0357 |
| Foot | 1.71 | 0.39 | 4.42 | 0.0004 |

p.2.a. Complete the table. Do you reject the null hypothesis $H_0: \beta_1 = \beta_2 = 0$? Yes or No

p.2.b. Give the predicted height of a man with a hand length of 210 mm and a foot length of 260 mm. Just give the point estimate, not a confidence interval for the mean or a prediction interval.

p.2.c. Give an unbiased estimate of the error variance $\sigma^2$.

p.2.d. The coefficient of determination represents the proportion of variation in heights “explained” by the model with hand and foot length as predictors. What is the proportion explained for this model?

Q.3. The total salaries (X, in millions of pounds) and the number of points earned (Y) for the n = 20 English Premier League teams in 1995/6 are used to fit a simple linear regression model (Model 2, with p = 1). For this problem, we will treat this as a sample from a population of all possible league teams.

| Team | Int | WageK | Points(Y) |
|---|---|---|---|
| Arsenal | 1 | 10.1 | 63 |
| Aston Villa | 1 | 7.7 | 63 |
| Blackburn | 1 | 10.8 | 61 |
| Bolton | 1 | 3.3 | 29 |
| Chelsea | 1 | 9.2 | 50 |
| Coventry | 1 | 5.8 | 38 |
| Everton | 1 | 10.1 | 61 |
| Leeds | 1 | 10.1 | 43 |
| Liverpool | 1 | 13.2 | 71 |
| Manchester City | 1 | 6.4 | 38 |
| Manchester United | 1 | 13.3 | 82 |
| Middlesbrough | 1 | 6.5 | 43 |
| Newcastle | 1 | 19.7 | 78 |
| Nottingham Forest | 1 | 8.5 | 58 |
| Queens Park | 1 | 5 | 33 |
| Sheffield | 1 | 6.4 | 40 |
| Southampton | 1 | 4.1 | 38 |
| Tottenham | 1 | 9.8 | 61 |
| West Ham United | 1 | 6.2 | 51 |
| Wimbledon | 1 | 4.7 | 41 |

$$X'X = \begin{bmatrix} 20 & 170.9 \\ 170.9 & 1745.15 \end{bmatrix} \qquad X'Y = \begin{bmatrix} 1042 \\ 9870.3 \end{bmatrix}$$

p.3.a. Compute $(X'X)^{-1}$ and $\hat{\beta}$.

p.3.b. Compute the fitted value and residual for Arsenal.

p.3.c. Given $\bar{Y} = 52.1$ and $\sum_{i=1}^{20} (Y_i - \bar{Y})^2 = 4367.8$, compute SSRegression and SSResidual.

p.3.d. Obtain a 95% Confidence Interval for $\beta_1$.

Q.4. For the simple regression model (scalar form): $Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i \qquad i = 1,\ldots,n \qquad \varepsilon_i \sim NID(0, \sigma^2)$

we get:

$$\hat{\beta}_1 = \sum_{i=1}^{n} \frac{(X_i - \bar{X})}{S_{XX}} Y_i, \qquad \bar{Y} = \sum_{i=1}^{n} \frac{1}{n} Y_i, \qquad \hat{\beta}_0 = \bar{Y} - \hat{\beta}_1 \bar{X}$$

p.4.a. Derive $E[\hat{\beta}_1]$, $E[\bar{Y}]$, $E[\hat{\beta}_0]$.

p.4.b. Derive $V[\hat{\beta}_1]$, $V[\bar{Y}]$, $Cov[\hat{\beta}_1, \bar{Y}]$, $V[\hat{\beta}_0]$, $Cov[\hat{\beta}_1, \hat{\beta}_0]$.

Q.5. For the Analysis of Variance in model 2, with n observations and p predictors, complete the following parts.

p.5.a. Write the Regression and Residual sums of squares as quadratic forms.

p.5.b. Derive the distributions of SSRegression/$\sigma^2$ and SSResidual/$\sigma^2$.

p.5.c. Show that SSRegression/$\sigma^2$ and SSResidual/$\sigma^2$ are independent.

p.5.d. What is the sampling distribution of MSRegression/MSResidual when p = 0?
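The arithmetic asked for in Q.3 can be checked numerically from the printed X'X and X'Y. The following is a minimal sketch, not an official solution: the helper `inverse_2x2` and all variable names are hypothetical, and the critical value 2.101 is assumed to be the t.025 entry for n − 2 = 18 degrees of freedom.

```python
# Sketch: solve the normal equations for Q.3 using only the printed summaries.
n = 20
xtx = [[20.0, 170.9], [170.9, 1745.15]]  # X'X as printed in Q.3
xty = [1042.0, 9870.3]                   # X'Y as printed in Q.3
ss_total = 4367.8                        # sum of (Y_i - Ybar)^2 from p.3.c


def inverse_2x2(m):
    """Invert a 2x2 matrix with the adjugate formula (hypothetical helper)."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


xtx_inv = inverse_2x2(xtx)

# beta_hat = (X'X)^{-1} X'Y, giving [intercept, slope]
beta_hat = [sum(xtx_inv[r][k] * xty[k] for k in range(2)) for r in range(2)]

# Fitted value and residual for Arsenal (X = 10.1, Y = 63)
fitted_arsenal = beta_hat[0] + beta_hat[1] * 10.1
residual_arsenal = 63.0 - fitted_arsenal

# SSRegression = beta_hat' X'Y - n * Ybar^2; SSResidual by subtraction
y_bar = xty[0] / n
ss_reg = beta_hat[0] * xty[0] + beta_hat[1] * xty[1] - n * y_bar ** 2
ss_res = ss_total - ss_reg

# 95% CI for the slope: estimate +/- t(.025, n-2) * sqrt(MSE * [(X'X)^{-1}]_22)
mse = ss_res / (n - 2)
se_slope = (mse * xtx_inv[1][1]) ** 0.5
t_crit = 2.101  # assumed table value: t.025 with 18 df
ci_slope = (beta_hat[1] - t_crit * se_slope, beta_hat[1] + t_crit * se_slope)

print("beta_hat:", [round(b, 4) for b in beta_hat])
print("Arsenal fitted, residual:", round(fitted_arsenal, 2), round(residual_arsenal, 2))
print("SSReg, SSRes:", round(ss_reg, 1), round(ss_res, 1))
print("95% CI for slope:", tuple(round(v, 3) for v in ci_slope))
```

Working from X'X and X'Y rather than the raw data mirrors how the question is posed; the same quantities could equally be computed from the 20 data rows.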
| df | $t_{.05}$ | $t_{.025}$ | $\chi^2_{.05}$ | $F_{.05,1}$ | $F_{.05,2}$ | $F_{.05,3}$ | $F_{.05,4}$ | $F_{.05,5}$ | $F_{.05,6}$ | $F_{.05,7}$ | $F_{.05,8}$ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 6.314 | 12.706 | 3.841 | 161.448 | 199.500 | 215.707 | 224.583 | 230.162 | 233.986 | 236.768 | 238.883 |
| 2 | 2.920 | 4.303 | 5.991 | 18.513 | 19.000 | 19.164 | 19.247 | 19.296 | 19.330 | 19.353 | 19.371 |
| 3 | 2.353 | 3.182 | 7.815 | 10.128 | 9.552 | 9.277 | 9.117 | 9.013 | 8.941 | 8.887 | 8.845 |
| 4 | 2.132 | 2.776 | 9.488 | 7.709 | 6.944 | 6.591 | 6.388 | 6.256 | 6.163 | 6.094 | 6.041 |
| 5 | 2.015 | 2.571 | 11.070 | 6.608 | 5.786 | 5.409 | 5.192 | 5.050 | 4.950 | 4.876 | 4.818 |
| 6 | 1.943 | 2.447 | 12.592 | 5.987 | 5.143 | 4.757 | 4.534 | 4.387 | 4.284 | 4.207 | 4.147 |
| 7 | 1.895 | 2.365 | 14.067 | 5.591 | 4.737 | 4.347 | 4.120 | 3.972 | 3.866 | 3.787 | 3.726 |
| 8 | 1.860 | 2.306 | 15.507 | 5.318 | 4.459 | 4.066 | 3.838 | 3.687 | 3.581 | 3.500 | 3.438 |
| 9 | 1.833 | 2.262 | 16.919 | 5.117 | 4.256 | 3.863 | 3.633 | 3.482 | 3.374 | 3.293 | 3.230 |
| 10 | 1.812 | 2.228 | 18.307 | 4.965 | 4.103 | 3.708 | 3.478 | 3.326 | 3.217 | 3.135 | 3.072 |
| 11 | 1.796 | 2.201 | 19.675 | 4.844 | 3.982 | 3.587 | 3.357 | 3.204 | 3.095 | 3.012 | 2.948 |
| 12 | 1.782 | 2.179 | 21.026 | 4.747 | 3.885 | 3.490 | 3.259 | 3.106 | 2.996 | 2.913 | 2.849 |
| 13 | 1.771 | 2.160 | 22.362 | 4.667 | 3.806 | 3.411 | 3.179 | 3.025 | 2.915 | 2.832 | 2.767 |
| 14 | 1.761 | 2.145 | 23.685 | 4.600 | 3.739 | 3.344 | 3.112 | 2.958 | 2.848 | 2.764 | 2.699 |
| 15 | 1.753 | 2.131 | 24.996 | 4.543 | 3.682 | 3.287 | 3.056 | 2.901 | 2.790 | 2.707 | 2.641 |
| 16 | 1.746 | 2.120 | 26.296 | 4.494 | 3.634 | 3.239 | 3.007 | 2.852 | 2.741 | 2.657 | 2.591 |
| 17 | 1.740 | 2.110 | 27.587 | 4.451 | 3.592 | 3.197 | 2.965 | 2.810 | 2.699 | 2.614 | 2.548 |
| 18 | 1.734 | 2.101 | 28.869 | 4.414 | 3.555 | 3.160 | 2.928 | 2.773 | 2.661 | 2.577 | 2.510 |
| 19 | 1.729 | 2.093 | 30.144 | 4.381 | 3.522 | 3.127 | 2.895 | 2.740 | 2.628 | 2.544 | 2.477 |
| 20 | 1.725 | 2.086 | 31.410 | 4.351 | 3.493 | 3.098 | 2.866 | 2.711 | 2.599 | 2.514 | 2.447 |
| 21 | 1.721 | 2.080 | 32.671 | 4.325 | 3.467 | 3.072 | 2.840 | 2.685 | 2.573 | 2.488 | 2.420 |
| 22 | 1.717 | 2.074 | 33.924 | 4.301 | 3.443 | 3.049 | 2.817 | 2.661 | 2.549 | 2.464 | 2.397 |
| 23 | 1.714 | 2.069 | 35.172 | 4.279 | 3.422 | 3.028 | 2.796 | 2.640 | 2.528 | 2.442 | 2.375 |
| 24 | 1.711 | 2.064 | 36.415 | 4.260 | 3.403 | 3.009 | 2.776 | 2.621 | 2.508 | 2.423 | 2.355 |
| 25 | 1.708 | 2.060 | 37.652 | 4.242 | 3.385 | 2.991 | 2.759 | 2.603 | 2.490 | 2.405 | 2.337 |
| 26 | 1.706 | 2.056 | 38.885 | 4.225 | 3.369 | 2.975 | 2.743 | 2.587 | 2.474 | 2.388 | 2.321 |
| 27 | 1.703 | 2.052 | 40.113 | 4.210 | 3.354 | 2.960 | 2.728 | 2.572 | 2.459 | 2.373 | 2.305 |
| 28 | 1.701 | 2.048 | 41.337 | 4.196 | 3.340 | 2.947 | 2.714 | 2.558 | 2.445 | 2.359 | 2.291 |
| 29 | 1.699 | 2.045 | 42.557 | 4.183 | 3.328 | 2.934 | 2.701 | 2.545 | 2.432 | 2.346 | 2.278 |
| 30 | 1.697 | 2.042 | 43.773 | 4.171 | 3.316 | 2.922 | 2.690 | 2.534 | 2.421 | 2.334 | 2.266 |
| 40 | 1.684 | 2.021 | 55.758 | 4.085 | 3.232 | 2.839 | 2.606 | 2.449 | 2.336 | 2.249 | 2.180 |
| 50 | 1.676 | 2.009 | 67.505 | 4.034 | 3.183 | 2.790 | 2.557 | 2.400 | 2.286 | 2.199 | 2.130 |
| 60 | 1.671 | 2.000 | 79.082 | 4.001 | 3.150 | 2.758 | 2.525 | 2.368 | 2.254 | 2.167 | 2.097 |
| 70 | 1.667 | 1.994 | 90.531 | 3.978 | 3.128 | 2.736 | 2.503 | 2.346 | 2.231 | 2.143 | 2.074 |
| 80 | 1.664 | 1.990 | 101.879 | 3.960 | 3.111 | 2.719 | 2.486 | 2.329 | 2.214 | 2.126 | 2.056 |
| 90 | 1.662 | 1.987 | 113.145 | 3.947 | 3.098 | 2.706 | 2.473 | 2.316 | 2.201 | 2.113 | 2.043 |
| 100 | 1.660 | 1.984 | 124.342 | 3.936 | 3.087 | 2.696 | 2.463 | 2.305 | 2.191 | 2.103 | 2.032 |
| 110 | 1.659 | 1.982 | 135.480 | 3.927 | 3.079 | 2.687 | 2.454 | 2.297 | 2.182 | 2.094 | 2.024 |
| 120 | 1.658 | 1.980 | 146.567 | 3.920 | 3.072 | 2.680 | 2.447 | 2.290 | 2.175 | 2.087 | 2.016 |
| 130 | 1.657 | 1.978 | 157.610 | 3.914 | 3.066 | 2.674 | 2.441 | 2.284 | 2.169 | 2.081 | 2.010 |
| 140 | 1.656 | 1.977 | 168.613 | 3.909 | 3.061 | 2.669 | 2.436 | 2.279 | 2.164 | 2.076 | 2.005 |
| 150 | 1.655 | 1.976 | 179.581 | 3.904 | 3.056 | 2.665 | 2.432 | 2.274 | 2.160 | 2.071 | 2.001 |
| 160 | 1.654 | 1.975 | 190.516 | 3.900 | 3.053 | 2.661 | 2.428 | 2.271 | 2.156 | 2.067 | 1.997 |
| 170 | 1.654 | 1.974 | 201.423 | 3.897 | 3.049 | 2.658 | 2.425 | 2.267 | 2.152 | 2.064 | 1.993 |
| 180 | 1.653 | 1.973 | 212.304 | 3.894 | 3.046 | 2.655 | 2.422 | 2.264 | 2.149 | 2.061 | 1.990 |
| 190 | 1.653 | 1.973 | 223.160 | 3.891 | 3.043 | 2.652 | 2.419 | 2.262 | 2.147 | 2.058 | 1.987 |
| 200 | 1.653 | 1.972 | 233.994 | 3.888 | 3.041 | 2.650 | 2.417 | 2.259 | 2.144 | 2.056 | 1.985 |
| ∞ | 1.645 | 1.960 | --- | 3.841 | 2.995 | 2.605 | 2.372 | 2.214 | 2.099 | 2.010 | 1.938 |
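As an illustration of how the critical values above are used, the sketch below completes the Q.2 ANOVA quantities and compares the F statistic with the tabled value. It is a hedged example rather than an answer key: the variable names are hypothetical, and the critical value 3.592 is assumed to be the F.05,2 entry for 17 denominator degrees of freedom (n − p − 1 with n = 20 and p = 2).

```python
# Sketch: fill in the Q.2 ANOVA table from the printed sums of squares.
n, p = 20, 2
ss_reg, ss_res, ss_total = 37497.0, 19772.0, 57269.0  # SS column from Q.2

df_reg = p              # regression degrees of freedom
df_res = n - p - 1      # residual degrees of freedom
df_total = n - 1        # total degrees of freedom

ms_reg = ss_reg / df_reg
ms_res = ss_res / df_res   # unbiased estimate of the error variance
f_stat = ms_reg / ms_res

f_crit = 3.592  # assumed table value: F.05 with 2 and 17 df
reject_h0 = f_stat > f_crit

# Proportion of variation explained (coefficient of determination)
r_squared = ss_reg / ss_total

# Point prediction for hand = 210 mm, foot = 260 mm, using the printed coefficients
predicted_height = 1055.78 + 1.26 * 210 + 1.71 * 260

print("df:", df_reg, df_res, df_total)
print("MS:", round(ms_reg, 1), round(ms_res, 2))
print("F:", round(f_stat, 2), "reject H0:", reject_h0)
print("R^2:", round(r_squared, 4))
print("Predicted height (mm):", round(predicted_height, 2))
```

The residual mean square doubles as the estimate requested in p.2.c, which is why it is computed once and reused for the F ratio.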