Probability (PPT)

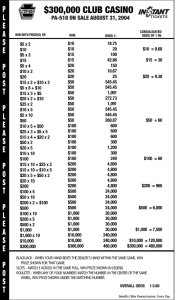

Probability

• Randomness: long-run (limiting) behavior of a chance (nondeterministic) process
• Relative frequency: fraction of time a particular outcome occurs
• In some cases the structure is known (e.g. tossing a coin, rolling a die)
• Often the structure is unknown and the process must be simulated
• Probability of an event E (where n is the number of trials):

$$P(E) = \lim_{n \to \infty} \frac{\text{Number of times } E \text{ occurs}}{n}$$

Set Notation

• $S$: a set of interest
• Subset: $A \subseteq S$, A is contained in S
• Union: $A \cup B$, set of all elements in A or B or both
• Intersection: $AB$, set of all elements in both A and B
• Complement: $\bar{A}$, set of all elements not in A

$$A \cup \bar{A} = S \qquad A(B \cup C) = AB \cup AC \qquad A \cup (BC) = (A \cup B)(A \cup C)$$

DeMorgan's Laws:

$$\overline{A \cup B} = \bar{A}\,\bar{B} \qquad \overline{\bigcup_{i=1}^{n} A_i} = \bigcap_{i=1}^{n} \bar{A}_i$$

$$\overline{AB} = \bar{A} \cup \bar{B} \qquad \overline{\bigcap_{i=1}^{n} A_i} = \bigcup_{i=1}^{n} \bar{A}_i$$

Probability

• Sample space (S): set of all possible outcomes of a random experiment, listed in mutually exclusive and exhaustive form
• Event: any subset of a sample space
• Probability of an event A (P(A)):
1. $P(A) \ge 0$
2. $P(S) = 1$
3. If $A_1, A_2, \ldots$ is a sequence of mutually exclusive events ($A_i A_j = \emptyset$ for $i \ne j$):

$$P\left(\bigcup_{i=1}^{\infty} A_i\right) = \sum_{i=1}^{\infty} P(A_i)$$

Counting Rules for Probability (I)

• Multiplicative rule: brute-force method of defining the number of elements in S
– Experiment consists of k stages
– The jth stage has $n_j$ possible outcomes
– Total number of outcomes = $n_1 \cdots n_k$
– Tabular structure for k = 2:

| Stage 1 \ Stage 2 | 1 | 2 | … | $n_2$ |
|---|---|---|---|---|
| 1 | 1 | 2 | … | $n_2$ |
| 2 | $n_2+1$ | $n_2+2$ | … | $2n_2$ |
| … | … | … | … | … |
| $n_1$ | $(n_1-1)n_2+1$ | $(n_1-1)n_2+2$ | … | $n_1 n_2$ |

Counting Rules for Probability (II)

• Permutations: ordered arrangements of r objects selected from n distinct objects without replacement (r ≤ n)
– Stage 1: n possible objects
– Stage 2: n-1 remaining possibilities
– Stage r: n-r+1 remaining possibilities

$$P^n_r = n(n-1)\cdots(n-r+1) = \frac{n!}{(n-r)!}$$

Counting Rules for Probability (III)

• Combinations: unordered arrangements of r objects selected from n distinct objects without replacement (r ≤ n)
– The number of distinct orderings among a set of r objects is r!
– Number of combinations where order does not matter = number of permutations divided by r!

$$\binom{n}{r} = \frac{P^n_r}{r!} = \frac{n!}{r!\,(n-r)!}$$

Counting Rules for Probability (IV)

• Partitions: unordered arrangements of n objects partitioned into k groups of size $n_1, \ldots, n_k$ (where $n_1 + \cdots + n_k = n$)
– Stage 1: number of combinations of $n_1$ elements from n objects
– Stage 2: number of combinations of $n_2$ elements from $n - n_1$ objects
– Stage k: number of combinations of $n_k$ elements from the $n_k$ objects that remain

$$\binom{n}{n_1}\binom{n-n_1}{n_2}\cdots\binom{n-n_1-\cdots-n_{k-1}}{n_k} = \frac{n!}{n_1!\,(n-n_1)!}\cdot\frac{(n-n_1)!}{n_2!\,(n-n_1-n_2)!}\cdots\frac{(n-n_1-\cdots-n_{k-1})!}{n_k!\,(n-n_1-\cdots-n_{k-1}-n_k)!} = \frac{n!}{n_1!\cdots n_k!}$$

Counting Rules for Probability (V)

• Runs of binary outcomes (string of m+n trials)
– Observe n Successes (S)
– Observe m Failures (F)
• k = minimum # of "runs" of S or F in the ordered outcomes of the m+n trials

Case 1: Equal runs of S and F (series begins with one, ends with the other):

$$P(2k \text{ runs}) = \frac{2\binom{m-1}{k-1}\binom{n-1}{k-1}}{\binom{m+n}{m}}$$

Case 2: One more run of S or F (series begins and ends with the same one):

$$P(2k+1 \text{ runs}) = \frac{\binom{m-1}{k}\binom{n-1}{k-1} + \binom{m-1}{k-1}\binom{n-1}{k}}{\binom{m+n}{m}}$$

Runs Examples - 2006/7 UF NCAA Champs

Football: n = 14 W, m = 1 L. Wins: 2 runs, Losses: 1 run, so $3 = 2k+1 \Rightarrow k = 1$.

Note: there are 15 ways to have 1 loss (Game 1, Game 2, ..., Game 15) and 2 ways to have 2 runs (Game 1 loss or Game 15 loss).

$$P(3 \text{ runs}) = 1 - P(2 \text{ runs}) = 1 - \frac{2}{15} = \frac{13}{15}$$

Basketball: n = 35 W, m = 5 L. Wins: 5 runs, Losses: 4 runs, so $9 = 2k+1 \Rightarrow k = 4$.

$$P(9 \text{ runs}) = \frac{\binom{5-1}{4}\binom{35-1}{4-1} + \binom{5-1}{4-1}\binom{35-1}{4}}{\binom{40}{5}} = \frac{5984 + 185504}{658008} = .2910$$

Conditional Probability and Independence

• In many situations one event in a multi-stage experiment occurs temporally before a second event
• Suppose event A can occur prior to event B
• We can consider the probability that B occurs given A has occurred (or vice versa)
• For B to occur given A has occurred:
– At the first stage, A has to have occurred
– At the second stage, B has to occur (along with A)
• Assuming P(A), P(B) > 0, we can obtain "Probability of B Given A" and "Probability of A Given B" as follows:

$$P(B \mid A) = \frac{P(AB)}{P(A)} \qquad P(A \mid B) = \frac{P(AB)}{P(B)}$$

If P(B|A) = P(B) and P(A|B) = P(A), A and B are said to be INDEPENDENT.

Rules of Probability

Complementary events: $P(\bar{A}) = 1 - P(A)$

Additive rule: $P(A \cup B) = P(A) + P(B) - P(AB)$

If A and B are mutually exclusive: $P(A \cup B) = P(A) + P(B)$

Multiplicative rule: $P(AB) = P(A)\,P(B \mid A) = P(B)\,P(A \mid B)$

If A and B are independent: $P(AB) = P(A)\,P(B)$

Bayes' Rule - Updating Probabilities

• Let $A_1, \ldots, A_k$ be a set of events that partition a sample space (mutually exclusive and exhaustive) such that:
– each set has known $P(A_i) > 0$ (each event can occur)
– for any 2 sets $A_i$ and $A_j$, $P(A_i \text{ and } A_j) = 0$ (events are disjoint)
– $P(A_1) + \cdots + P(A_k) = 1$ (each outcome belongs to one of the events)
• If C is an event such that
– 0 < P(C) < 1 (C can occur, but will not necessarily occur)
– We know the probability C will occur given each event $A_i$: $P(C \mid A_i)$
• Then we can compute the probability of $A_i$ given that C occurred:

$$P(C) = P(C \mid A_1)P(A_1) + \cdots + P(C \mid A_k)P(A_k)$$

$$P(A_i \mid C) = \frac{P(A_i \text{ and } C)}{P(C)} = \frac{P(C \mid A_i)P(A_i)}{P(C \mid A_1)P(A_1) + \cdots + P(C \mid A_k)P(A_k)}$$

Example - OJ Simpson Trial

• Given information on blood test (T+/T-)
– Sensitivity: P(T+|Guilty) = 1
– Specificity: P(T-|Innocent) = .9957, so P(T+|I) = .0043
• Suppose you have a prior belief of guilt: P(G) = p*
• What is the "posterior" probability of guilt after seeing evidence that the blood matches: P(G|T+)?

$$P(T^+) = P(T^+ \cap G) + P(T^+ \cap I) = P(G)P(T^+ \mid G) + P(I)P(T^+ \mid I) = p^*(1) + (1-p^*)(.0043)$$

$$P(G \mid T^+) = \frac{P(T^+ \cap G)}{P(T^+)} = \frac{P(G)P(T^+ \mid G)}{P(T^+)} = \frac{p^*(1)}{p^*(1) + (1-p^*)(.0043)} = \frac{p^*}{.9957\,p^* + .0043}$$

Source: B. Forst (1996). "Evidence, Probabilities and Legal Standards for Determination of Guilt: Beyond the OJ Trial", in Representing OJ: Murder, Criminal Justice, and the Mass Culture, ed. G. Barak, pp. 22-28. Harrow and Heston, Guilderland, NY.

OJ Simpson Posterior (to Positive Test) Probabilities

Prior probability of guilt: P(G) = .10

$$P(G \mid T^+) = \frac{.10(1)}{.10(1) + .90(.0043)} = \frac{.10}{.10387} = .9627$$

[Figure: P(G|T+) as a function of P(G); x-axis P(G), y-axis P(G|T+)]

Northern Army at Battle of Gettysburg

| Regiment | Label | Initial # | Casualties | $P(A_i)$ | $P(C \mid A_i)$ | $P(C \mid A_i) \cdot P(A_i)$ | $P(A_i \mid C)$ |
|---|---|---|---|---|---|---|---|
| I Corps | A1 | 10022 | 6059 | 0.1051 | 0.6046 | 0.0635 | 0.2630 |
| II Corps | A2 | 12884 | 4369 | 0.1351 | 0.3391 | 0.0458 | 0.1896 |
| III Corps | A3 | 11924 | 4211 | 0.1250 | 0.3532 | 0.0442 | 0.1828 |
| V Corps | A4 | 12509 | 2187 | 0.1312 | 0.1748 | 0.0229 | 0.0949 |
| VI Corps | A5 | 15555 | 242 | 0.1631 | 0.0156 | 0.0025 | 0.0105 |
| XI Corps | A6 | 9839 | 3801 | 0.1032 | 0.3863 | 0.0399 | 0.1650 |
| XII Corps | A7 | 8589 | 1082 | 0.0901 | 0.1260 | 0.0113 | 0.0470 |
| Cav Corps | A8 | 11501 | 852 | 0.1206 | 0.0741 | 0.0089 | 0.0370 |
| Arty Reserve | A9 | 2546 | 242 | 0.0267 | 0.0951 | 0.0025 | 0.0105 |
| Sum | | 95369 | 23045 | 1 | | 0.2416 = P(C) | 1.0002 |

• Regiments: partition of soldiers (A1,…,A9). Casualty: event C
• P(Ai) = (size of regiment) / (total soldiers) = (Column 3)/95369
• P(C|Ai) = (# casualties) / (regiment size) = (Col 4)/(Col 3)
• P(C|Ai) P(Ai) = P(Ai and C) = (Col 5)*(Col 6)
• P(C) = sum(Col 7)
• P(Ai|C) = P(Ai and C) / P(C) = (Col 7)/.2416

CRAPS

• Player rolls 2 dice ("come out roll"):
– 2, 3, 12: Lose (Miss Out)
– 7, 11: Win (Pass)
– 4, 5, 6, 8, 9, 10: Makes point. Roll until point (Win) or 7 (Lose)
– Probability distribution for first (any) roll:

| Roll | Probability | Outcome |
|---|---|---|
| 2 | 1/36 | Lose |
| 3 | 2/36 | Lose |
| 4 | 3/36 | Point |
| 5 | 4/36 | Point |
| 6 | 5/36 | Point |
| 7 | 6/36 | Win |
| 8 | 5/36 | Point |
| 9 | 4/36 | Point |
| 10 | 3/36 | Point |
| 11 | 2/36 | Win |
| 12 | 1/36 | Lose |

After first roll:
• P(Win|2) = P(Win|3) = P(Win|12) = 0
• P(Win|7) = P(Win|11) = 1
• What about the other conditional probabilities, if the player makes a point?

CRAPS

• Suppose you make a point (4, 5, 6, 8, 9, 10):
– You win if your point occurs before 7, lose otherwise, and stop
– Let P mean you make your point on a roll
– Let C mean you continue rolling (neither point nor 7)
– You win for any of the mutually exclusive events: P, CP, CCP, …, CC…CP, …
• If your point is 4 or 10, P(P) = 3/36 and P(C) = 27/36
• By the independence, multiplicative, and additive rules:

$$P(CP) = \frac{27}{36}\cdot\frac{3}{36} \qquad P(\underbrace{CC\cdots C}_{k}P) = \left(\frac{27}{36}\right)^{k}\frac{3}{36}$$

$$\text{Win} = P \cup CP \cup CCP \cup \cdots \cup \underbrace{CC\cdots C}_{k}P \cup \cdots \qquad P(P) = \frac{3}{36}$$

$$P(\text{Win}) = \frac{3}{36} + \frac{27}{36}\cdot\frac{3}{36} + \cdots + \left(\frac{27}{36}\right)^{k}\frac{3}{36} + \cdots = \frac{3}{36}\sum_{i=0}^{\infty}\left(\frac{27}{36}\right)^{i} = \frac{3}{36}\cdot\frac{1}{1 - 27/36} = \frac{1}{12}(4) = \frac{1}{3}$$

CRAPS

• Similar patterns arise for points 5, 6, 8, and 9:
– For 5 and 9: P(P) = 4/36, P(C) = 26/36
– For 6 and 8: P(P) = 5/36, P(C) = 25/36

$$\text{Points 5 and 9: } P(\text{Win}) = \frac{4}{36}\sum_{i=0}^{\infty}\left(\frac{26}{36}\right)^{i} = \frac{4}{36}\cdot\frac{1}{1 - 26/36} = \frac{4}{36}\cdot\frac{36}{10} = \frac{2}{5}$$

$$\text{Points 6 and 8: } P(\text{Win}) = \frac{5}{36}\sum_{i=0}^{\infty}\left(\frac{25}{36}\right)^{i} = \frac{5}{36}\cdot\frac{1}{1 - 25/36} = \frac{5}{36}\cdot\frac{36}{11} = \frac{5}{11}$$

Finally, we can obtain the player's probability of winning:

CRAPS - P(Winning)

| Come Out Roll | P(Roll) | $P(\text{Win} \mid \text{Roll})$ | P(Roll&Win) | P(Roll&Win) (decimal) | $P(\text{Roll} \mid \text{Win})$ |
|---|---|---|---|---|---|
| 2 | 1/36 | 0 | 0 | 0 | 0 |
| 3 | 2/36 | 0 | 0 | 0 | 0 |
| 4 | 3/36 | 1/3 | 1/36 | 0.02777778 | 0.05635246 |
| 5 | 4/36 | 2/5 | 2/45 | 0.04444444 | 0.09016393 |
| 6 | 5/36 | 5/11 | 25/396 | 0.06313131 | 0.12807377 |
| 7 | 6/36 | 1 | 1/6 | 0.16666667 | 0.33811475 |
| 8 | 5/36 | 5/11 | 25/396 | 0.06313131 | 0.12807377 |
| 9 | 4/36 | 2/5 | 2/45 | 0.04444444 | 0.09016393 |
| 10 | 3/36 | 1/3 | 1/36 | 0.02777778 | 0.05635246 |
| 11 | 2/36 | 1 | 1/18 | 0.05555556 | 0.11270492 |
| 12 | 1/36 | 0 | 0 | 0 | 0 |
| Sum | 1 | | | 0.49292929 = P(Win) | 1 |

Note: in the previous slides we derived P(Win|Roll). We multiply those by P(Roll) to obtain P(Roll&Win), and sum those for P(Win). The last column gives the probability of each come out roll given that we won, P(Roll|Win) = P(Roll&Win)/P(Win).

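
As a cross-check on the craps tables above, the same calculation can be sketched with exact fractions in Python. This is a minimal illustration rather than part of the original slides: the function names `roll_distribution` and `win_given_roll` are hypothetical, and the geometric series is replaced by its closed form P(P) / (1 - P(C)).

```python
from fractions import Fraction
from itertools import product


def roll_distribution():
    """Exact distribution of the sum of two fair six-sided dice."""
    dist = {}
    for a, b in product(range(1, 7), repeat=2):
        dist[a + b] = dist.get(a + b, Fraction(0)) + Fraction(1, 36)
    return dist


def win_given_roll(roll, dist):
    """P(Win | come out roll) under the rules stated in the slides."""
    if roll in (7, 11):
        return Fraction(1)
    if roll in (2, 3, 12):
        return Fraction(0)
    p_point = dist[roll]            # P(P): the point is rolled
    p_cont = 1 - p_point - dist[7]  # P(C): neither the point nor 7
    # Closed form of the geometric series P(P) * sum_i P(C)^i
    return p_point / (1 - p_cont)


dist = roll_distribution()

# P(Roll & Win) = P(Roll) * P(Win | Roll), summed over rolls to give P(Win)
p_roll_and_win = {r: dist[r] * win_given_roll(r, dist) for r in dist}
p_win = sum(p_roll_and_win.values())

# Bayes' rule: P(Roll | Win) = P(Roll & Win) / P(Win)
p_roll_given_win = {r: p_roll_and_win[r] / p_win for r in dist}

print(p_win, float(p_win))
```

Using `Fraction` keeps every intermediate value exact, so the results can be compared directly with the fractional column of the table before converting to decimals.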
Odds, Odds Ratios, Relative Risk

• Odds: probability an event occurs divided by the probability it does not occur, odds(A) = P(A)/P(Ā)
– Many gambling establishments and lotteries post odds against an event occurring
• Odds Ratio (OR): odds of A occurring for one group, divided by odds of A for a second group
• Relative Risk (RR): probability of A occurring for one group, divided by probability of A for a second group

$$\text{odds}(A) = \frac{P(A)}{P(\bar{A})}$$

$$OR(\text{Group 1/Group 2}) = \frac{\text{odds}(A \mid \text{Group 1})}{\text{odds}(A \mid \text{Group 2})} \qquad RR(\text{Group 1/Group 2}) = \frac{P(A \mid \text{Group 1})}{P(A \mid \text{Group 2})}$$

Example - John Snow Cholera Data

• 2 water providers: Southwark & Vauxhall (S&V) and Lambeth (L)

| Provider \ Status | Cholera Death | No Cholera Death | Total |
|---|---|---|---|
| S&V | 3706 | 263919 | 267625 |
| Lambeth | 411 | 171117 | 171528 |
| Total | 4117 | 435036 | 439153 |

$$P(D \mid S\&V) = \frac{3706}{267625} = .013848 \qquad \text{odds}(D \mid S\&V) = \frac{.013848}{1 - .013848} = .014042$$

$$P(D \mid L) = \frac{411}{171528} = .002396 \qquad \text{odds}(D \mid L) = \frac{.002396}{1 - .002396} = .002402$$

$$RR = \frac{.013848}{.002396} = 5.78 \qquad OR = \frac{.014042}{.002402} = 5.85$$
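
The cholera calculation can be reproduced with a few lines of Python. This is a minimal sketch using the counts from the table; the helper `odds` and the variable names are illustrative choices, not part of the original slides.

```python
def odds(p):
    """Odds of an event with probability p: P(A) / P(not A)."""
    return p / (1 - p)


# Counts from the John Snow table: cholera deaths and customers per provider
deaths = {"S&V": 3706, "Lambeth": 411}
totals = {"S&V": 267625, "Lambeth": 171528}

# P(D | provider) = deaths / total customers
p_death = {g: deaths[g] / totals[g] for g in deaths}

# Relative risk and odds ratio of S&V relative to Lambeth
relative_risk = p_death["S&V"] / p_death["Lambeth"]
odds_ratio = odds(p_death["S&V"]) / odds(p_death["Lambeth"])

print(round(relative_risk, 2), round(odds_ratio, 2))
```

Because cholera death is rare for both providers, 1 - P(D) is close to 1 in each group, which is why the odds ratio and the relative risk come out close to each other here.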