2015 0722 Minutes


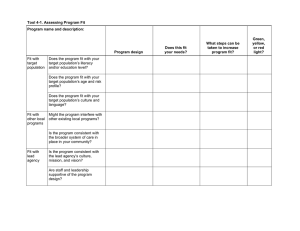

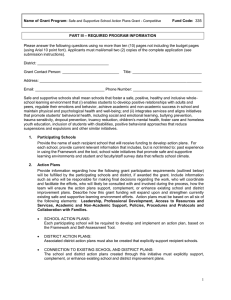

Safe and Supportive Schools Commission
Summer Retreat at Harvard Law School, Cambridge
July 22, 2015 – 10:00am-3:30pm
Minutes

Members Present: Rachelle Engler Bennett (Co-Chair), Susan Cole (Co-Chair), Donna M. Brown, Sara Burd, Bill Diehl, John Doherty, Katherine Lipper, Anne Silver, Judith Styer

Others Attending/Participating: Colleen Armstrong, Shawn Connelly, Cathy Cummins, Shella Dennery, Anne Eisner, Anne Gilligan, Anne Berry Goodfellow, Michael Gregory, Lisa Harney, Joe Ristuccia, Rebecca Shor, Margot Tracy

I. Welcome and Agenda Overview

Commission members were welcomed by both Co-Chairs, and Susan Cole shared an overview of the day’s agenda.

Safe and Supportive Schools Line Item – Susan then shared with the Commission the language of Line Item 7061-9612 for Safe and Supportive Schools, as signed by Gov. Baker (see the orange sheet in the Retreat Packet). This line item was funded at $500,000 to the Department of Elementary and Secondary Education (DESE) for fiscal year 2015-2016 (FY16), with a minimum of $400,000 to be used for Safe and Supportive Schools Grants and up to $100,000 for DESE to use on technical assistance, conferences (which grantees shall attend), an evaluation of the grant program, and work related to the safe and supportive schools framework and tool [per M.G.L. Ch. 69, S.1P (f)]. This funding is a major victory for Safe and Supportive Schools and represents an important opportunity for the Commission’s work to have a significant impact across the Commonwealth.

The Retreat Packets also contained minutes (all on goldenrod paper) from the Commission Meeting on May 7, 2015, and from two small group meetings (the Access to Services/Collaboration with Families small group and the Professional Development small group). Additionally, the packets contained an updated Safe and Supportive Schools Commission Member Contact List (attached to these minutes).

II. Diving Deeper into the Safe and Supportive Schools Framework and Self-Assessment Tool

In order for the Commission to propose improvements to what will become the Safe and Supportive Schools Framework and Self-Assessment Tool (currently called the Behavioral Health and Public Schools Framework and Self-Assessment Tool), each member first needs to understand what the Framework and Tool are and how they work. Therefore, during this part of the retreat, Commission members divided into three small groups to gain first-hand working knowledge of the Framework and Self-Assessment Tool.

Superintendent John Doherty distributed a fictional (but realistic) scenario describing a regional school in Western MA (see the light blue sheet in the Retreat Packet). Using the information and data about this school as described in the scenario, each of the three small groups completed the online Self-Assessment Tool as if it were a team of educators at this fictitious school. The goal was to use the tool to generate a set of recommendations about what the school should do to solve some of the problems identified in the scenario. While working through the scenario, groups were also instructed to keep track (on post-it notes) of thoughts and suggestions for improving both the content and the usability of the Framework and the Tool. (These thoughts and suggestions formed the basis of further small group discussions later in the day and are included in an attachment to these minutes.)

Prior to separating into groups, Joe Ristuccia offered some important perspectives on the Self-Assessment Tool based on his work with dozens of schools as they have completed it:
o Before beginning to complete the tool, it is very useful for school-based teams to start by having a conversation about the urgencies and priority needs in their school, and then to keep these urgencies in mind while they fill out the tool.
o The Self-Assessment Tool has two main functions: a diagnostic function and an implementation function. It is important for the Commission to figure out how the tool can be optimally designed to help schools with both of these functions without confusing the two.

III. Proposed Additions/Edits to the Framework and/or Self-Assessment Tool

After the morning small group discussions, all participants gathered in the main room and attached their post-it notes, containing ideas and questions about improving the Framework and Self-Assessment Tool, to flip chart paper on the walls. Beginning categories were offered as a starting place, and participants were asked to add and arrange/rearrange the notes into clusters, adding or editing category names along the way. The group ended up with four main themes: 1) Overarching Comments; 2) Content of the Framework/Tool; 3) Updating the Framework/Tool to Reflect Current Legislation/Requirements/Initiatives; and 4) Usability of the Tool (Mechanics as well as Guides/Resources).

After each theme was discussed briefly in the large group, everyone in the room was given three stickers (green, blue, and yellow) and asked to place them on the three themes or ideas they would be most interested in discussing during the next segment of the agenda. Participants then divided into four small groups (one for each of the four themes above) according to their choices. Each group took the flip chart paper for its theme, with all of the post-it notes attached. Each small group tried to organize the ideas and questions on the post-its and then chose one or two of them as a starting point for brainstorming more concrete and specific suggestions. (See the attached document “Flip-Chart Notes on Suggested Tool Improvements.”)

IV. Large Group Discussion

Each of the four small groups reported back to the full Commission and shared some of their thoughts, questions, and proposed ideas for moving forward.

Group 1 – Overarching Comments

This group reported that it found it more helpful to address the ideas generated in this category by framing questions for the whole Commission to think through. Here are some of the questions posed by the group:
o Can we more clearly describe what the purpose of the self-assessment tool is?
o Is it an assessment tool? A planning tool? Both?
o What is the theory of change underlying the tool?

In approaching these questions, it is good to go back to the law and see what it says as a jumping-off point for discussion. (NOTE: See the Next Steps attachment for some helpful references to the law.)

In defining the purpose of the tool, it is important that the Commission seize opportunities to connect this tool/initiative to other tools/initiatives that already exist or are being developed. The small group feels strongly that work on safe and supportive schools should not be siloed away from the rest of the work that happens in schools. We should be asking ourselves, “In the field, how can we make educators more likely to incorporate this tool into their overall school improvement planning, rather than seeing it as something separate?”

It would be very helpful to develop a visual representation of all the related efforts at DESE and/or other state agencies. This would help us see how the Commission can align with or connect to these other efforts.

Timing – How does the need to rework the tool line up with the Safe and Supportive Schools grants going out this fall? How do we coordinate the timing of these things? Should there be waves of improvements, with some quick ones for the next grantees, followed by a second wave informed by their input?
(This led to a discussion about the RFP process for the next round of grants, which is summarized in the “Grant Program” section below.)

Group 2 – Improved Content

This small group chose to discuss “crisis management” as a content area that is inadequately addressed in the current version of the tool. It decided to look at each of the six operational elements of the Framework/Tool and think about what would need to change or be added in order to weave in crisis management. The group discovered that changing just a few words here and there could have an enormous positive effect on the tool. In particular, the group found how important it is to separate out multiple concepts combined in a single question. For example, one question in the tool asks about both “crisis plans” and “safety plans.” These are not the same thing, and they need to be disaggregated. Once you disaggregate them in one place, you realize this has implications and ripple effects for all the other areas of the tool. The group also discovered that crisis management cuts across all six elements of the Framework. In other words, for a school to work on its crisis management, it would need to take actions in each of the six areas of the Framework, and the tool is helpful for facilitating this type of planning. The group thought there is huge potential for the tool to include links to other resources/guides so that users can go deeper on topics they identify as priorities for action. For example, Boston Children’s Hospital has a webpage with many examples of different types of safety plans, and the tool could link to this page. (After the retreat, Shella Dennery provided a link to this webpage for future reference: http://www.childrenshospital.org/centers-and-services/programs/a-_e/boston-childrens-hospital-neighborhood-partnerships-program/resources.)
Group 3 – Updating the Framework/Tool to Reflect Current Legislation/Initiatives

This group struggled with the fact that everyone defines key terms (e.g., Social Emotional Learning) differently. The same is true of Safe and Supportive Schools. So it is really important that the definition of safe and supportive schools in the law be clarified, so that everyone knows what we are talking about and so that this work is inclusive of other relevant initiatives. Our goal in drafting a working definition should be to find the clearest, most accessible, and most flexible way of aligning all of the legislation, mandates, requirements, and initiatives, so that ideally it means the same thing to everyone, whoever they are, and everyone can jump into the work from wherever they are. This group’s discussion was closely linked to that of the Overarching Comments small group: if we can get clarity on the first group’s question about the purpose of the tool, then we can figure out how to make the tool responsive to all the initiatives. It would be helpful to have some kind of “crosswalk” between all the laws, regulations, and initiatives so we can see how they overlap and relate to each other.

Next Step – The group proposed forming a subcommittee to look at everything that connects to or overlaps with Safe and Supportive Schools, in order to digest and summarize the landscape. It is important to look not just at legislation but also at initiatives and trends in the field. One member expressed the view that we can’t just align initiatives; we have to do better than that. We need to cut duplication, streamline, and integrate. So far it feels like we have only been looking at the relationships between initiatives in a two-dimensional way; instead, we need to be looking at them in a three-dimensional way.
Another member suggested that the grant RFP include, as a criterion, a question about how the school’s proposed effort will be streamlined with or integrated into what is already going on at the school, rather than implemented as a siloed initiative. (Again, see also the discussion of the Grant Program below.)

Group 4 – Usability of the Tool

This group reported that the post-it notes on its flip chart ranged from very concrete recommendations about how to improve the tool to much larger suggestions or questions about the tool’s purpose and overall structure. The group looked at all of the post-it notes, tried to discern the categories they clustered into, and reported out an overview of these categories (without going into detail about every single recommendation on the post-it notes). The categories are outlined below (Answer Options; Structure of the Tool; Simplify the Tool/Action Steps/Improved Navigation; Data; Resources; and TA/Guidance).

Answer Options – One concrete recommendation was to reconsider the answer options for each of the assessment items in the tool. For example, there is no “we are not doing this at all” answer choice, so schools that are not currently engaged in an assessment item waste a lot of energy figuring out which answer to pick.

Structure of the Tool – There were several suggestions about how to improve the structure of the tool. One that generated a great deal of consensus is that the “assessment” function and the “planning” function of the tool need to be disaggregated from each other. The current tool asks schools to prioritize each individual item for action as they go through the tool, when it makes much more sense for a school to complete the full assessment first before choosing what to prioritize.
(One group member developed a diagram to represent visually how the tool might work, and the group found this diagram helpful. See the diagram attached to the minutes.)

Simplify the Tool / Action Steps / Improved Navigation – There were many suggestions about simplifying the tool visually. The consensus was that the current version of the tool is very cluttered visually, which contributes to its feeling overwhelming to educators. In addition, the assessment items (which should be renamed from “action steps”) should be simplified by disaggregating multiple concepts combined in the same item.

Recommendation – The group strongly recommends that DESE engage an expert in tool design and self-assessment science to help with the structure and visual design of the tool. There are people who know how to do this well, and we shouldn’t spend our energy trying to become experts in tool design ourselves when there are already experts who would know better what to do and how to do it. It is understood that this kind of expertise and work would likely entail some cost.

Data – One of the great things about the tool is that it generates a lot of data, both at the individual school level and at the aggregate district and statewide levels. However, it is not necessarily clear what data will be most helpful at each of these levels and, particularly at the school level, how to actually use the data once a team has completed the tool. The Commission should get feedback on which data are most helpful at each level and then make sure there is guidance for users on what to do with the data. Members of this group also felt the tool should generate visual representations of the data that are easy to analyze and understand, such as a data “dashboard.”
Resources – There was a strong consensus that the tool should link users to helpful resources and information, and there were many suggestions for specific resources to include. The group thought that the best place to link to resources is the “planning” portion of the tool, which schools would use after completing the assessment and choosing their priorities for action.

Guidance – There was essentially unanimous consensus that the online tool needs to include more guidance to users about what it is, the theory of change behind it, and how to use it. There should also be guidance about how to use the data, as mentioned above. There was also consensus that the form and content of this guidance will need to flow from decisions made about the purpose of the tool and its revised content.

Safe and Supportive Schools Grant Program

Woven throughout the “report-backs” from the small groups was discussion about the Safe and Supportive Schools Grant Program and the relationship between the new round of grantees and the revision of the Framework and Self-Assessment Tool. A summary of that discussion is included here.

DESE reported that the goal is to get the RFP for the grant program out as quickly as possible. The tentative plan is that the grants (as in prior years) will continue to be in the amount of $10,000, which would fund 40 schools from the $400,000 minimum set aside for grants in the line item. There was discussion about timing: it would be very difficult to complete a full revision of the tool and make it available before the next round (2015-2016) of grantees is named and begins its work, so this year’s grantees are likely to use the current version of the tool. However, the new RFP is expected to include a requirement that grantees provide feedback on the tool as part of the evaluation process, which can inform recommendations for improvements. Many Commission members expressed the desire to see and give feedback to DESE on the RFP.
DESE staff will look into what may be possible without compromising the eligibility of any potential applicants.

There was a question about whether collaboratives can apply for grants. DESE answered that, while collaboratives cannot serve as the fiscal agent for the grants under the line-item language that will fund them, they could participate by supporting a consortium of their districts in applying.

The recommendation was made, and strongly supported, that DESE provide technical assistance (T/A) to prospective grantees to generate interest and to help them understand the purpose of the grant, the tool, and the action planning process. Forms of T/A could include webinars, bidders’ conferences, etc.

A question was also raised about the evaluation of the grant program, which is provided for in the FY16 line item. A comment was made that it would be helpful for the Commission to figure out, before the RFPs go out and the grants are awarded, what information we want to get back from grantees at the end of the year. Grantees would benefit from knowing what to expect, and if we want any “pre” and “post” comparisons, we need to decide what information to collect on the front end. The point was also made that, even though we should plan what data and information we will want to gather from all grantees, we also need to recognize some unpredictability, because the grant program is also about helping schools/districts identify the data they want to collect, which we cannot know ahead of time.

When the RFP is posted, DESE staff will send the Commission a link, so all can help spread the word to everyone eligible to apply.

V. Next Steps

The full Commission charted out its next steps on the two overarching tasks required by the law: 1) proposing to the Board of Elementary and Secondary Education (BESE) a process for “updating, improving and refining” the Safe and Supportive Schools Framework and Self-Assessment Tool, and 2) preparing our first annual report to the Governor and the Legislature. (See the attached document “Next Steps for Safe and Supportive Schools Commission.”)

Upcoming Commission Meetings
o October 2, 2015: 1-4pm at DESE in Malden
o November 18, 2015: 10am-1pm at Newton Public Schools

Attachments
o Next Steps for Safe and Supportive Schools Commission
o Flip-Chart Notes on Suggested Tool Improvements
o Safe and Supportive Schools Commission Member Contact List (last updated July 2015)
o Diagram created in a small group discussion