Part VII

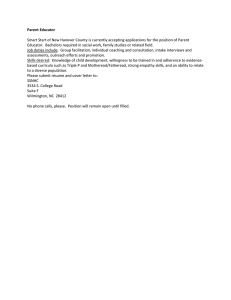
