Research Agenda External version January 2016


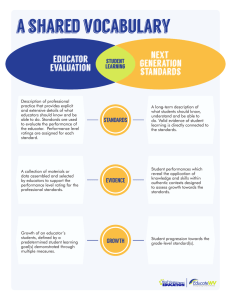

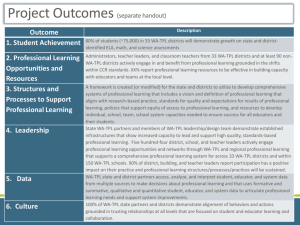

ESE’s Goal and Strategies

ESE’s goal is to prepare all students for success in the world that awaits them after high school. We are implementing five strategies aimed at reaching that goal:

- Strengthening standards, curriculum, instruction, and assessment
- Developing all educators
- Turning around the lowest performing schools and districts
- Using data and technology to support teaching and learning
- Supporting students’ social and emotional health

More information on the strategies and the specific programs and initiatives that support them is available at http://www.doe.mass.edu/commissioner/.

ESE has defined a research agenda related to the agency’s goals and strategies so that we can improve their implementation and measure their outcomes. This document describes the specific research projects currently underway in each priority area, whether conducted by internal staff or external partners, and also identifies questions for which we need additional support. In addition, the agency is interested in identifying ways for districts to use their staff, time, and fiscal resources more effectively to accomplish these objectives, so we have identified additional research questions related to that issue.

We welcome proposals from independent, non-partisan researchers interested in conducting work in the areas identified in the research agenda, particularly those where we have an interest but have not yet identified a resource. Please contact Carrie Conaway at cconaway@doe.mass.edu with questions.

ESE Research Agenda (external version) – updated 1/28/16

Each question below is listed by its ID, followed in parentheses by how the work is being carried out: internally by ESE staff, externally by a research partner, or "Support needed" where no resource has yet been identified.

1: Success after high school

How should we measure college and career readiness? What impacts do state policies and programs have on college and career readiness?

1a Rigorous high school program of study (MassCore)
1a.1 How many students have completed MassCore? How many are on track to complete MassCore? How do the data reported in SIMS compare to those reported in SCS? (Internal)
1a.2 What are the current graduation requirements in the state’s high schools, and how similar are they to the requirements of MassCore? (Internal)

1b High school graduation and supports
1b.1 What is the impact of the programs supported through the state’s Academic Support grant program? How does it vary by instructional focus and by age/gradespan of the students served? What are the estimated short- and long-term economic costs and benefits of the program to the Commonwealth? (External)
1b.2 What is the impact of the state’s MassGrad initiative, comparing participating schools to similar non-participating schools? (External)
1b.3 What is the quality of implementation and the impact of the state’s EWIS implementation pilot? How does the EWIS intervention affect student outcomes? How does EWIS implementation vary across sites/schools? What resources and supports are needed for effective implementation? (External)
1b.4 What is the impact of the Success Boston initiative on student higher education outcomes? (External)

1c Career development
1c.1 What are we learning from demonstration sites about expansion, replication, and sustainability of integrating college and career readiness services for all students? What outcomes are associated with ICCR activities, and how do they vary by context and implementation? (External)
1c.2 To what extent are schools and districts implementing career development education opportunities for students, and what is the quality of those opportunities? What specific programs are offered? What is the impact of these programs on student outcomes? (Offerings: Internal; quality/impact: Support needed)
1c.3 What is the impact of summer employment on student outcomes? (External)

1d Advanced coursework
1d.1 What is the impact of the state’s Advancing STEM AP program, particularly on economically disadvantaged students? Are skills taught to teachers through this program being transferred to classroom instruction? Why or why not? (External)
1d.2 What is the impact of performing at the Advanced level on the MCAS on later course-taking and college enrollment? (External)

1e Charter schools and school choice
1e.1 What is the impact of the state’s charter schools on student outcomes? How does the impact vary for students with disabilities and English language learners? (External)
1e.2 Is there an association between student discipline practices and later attrition or dropout from charter schools? (External)
1e.3 Can centralized assignment mechanisms help measure school quality? (External)
1e.4 How do the impacts of Massachusetts charter schools on student outcomes compare to those in other states? (External)
1e.5 What is the impact of the METCO program on student outcomes? (External)
1e.6 What is the impact of the state’s Expanded Learning Time program on student outcomes? Has it changed since the previous evaluation concluded in 2012? (Support needed)
1e.7 What is the impact of the state’s Innovation Schools program on student outcomes? (Support needed)

1f Time to postsecondary degree
1f.1 How long does it take MA public college students to complete postsecondary programs? How does this vary for students coming from the MA public K–12 system vs. other students? What factors from the K–12 and higher education systems influence time to degree? (Internal)
1f.2 What factors predict a student’s likelihood of enrolling in developmental education in college and their success in those courses if enrolled? (Internal)
1f.3 How many public K–12 students participate in dual enrollment, and which types of programs do they participate in? What predicts participation and success both in the program and in later postsecondary opportunities? How does participation in dual enrollment influence time to degree? (Internal)
1f.4 How does participation in a Chapter 74 vocational-technical program with articulated credits influence time to postsecondary degree? (Internal)

1g Other
1g.1 How do students’ perceptions of their college and career readiness relate to their performance on statewide assessments? (Internal)
1g.2 How does student performance on the grade 12 NAEP correlate with academic preparedness for college? (External)
1g.3 What is the impact of the Massachusetts Comprehensive School Counseling Program on college readiness, access, and success? (External)
1g.4 How do students’ stated plans after high school correlate with what they actually do? How do they change from grade 8 to grade 10? (External)
1g.5 To what extent do educators offer opportunities for students to cultivate and exhibit creativity and innovation in their learning? (External)
1g.6 What are the eventual labor market outcomes for the state’s students? (Internal for now; anticipate additional research needs in future years)
1g.7 How will student enrollment and demographic composition change over the next ten years, statewide and by region? (Support needed)

2: Strengthen standards, curriculum, instruction, and assessment

How have districts progressed in terms of implementing the 2011 curriculum frameworks? What supports are still needed for effective curriculum framework implementation? What are the academic outcomes for special populations of students, and how can we strengthen them? How can we improve state and local assessment practices?

2a Curriculum framework implementation
2a.1 How are districts progressing in implementing the 2011 curriculum frameworks in English language arts and mathematics (and other frameworks once updated)? What are their perceptions of the state’s implementation supports? (Internal and external)
2a.2 Which strategies for implementing the 2011 curriculum frameworks are most strongly related to student achievement on state assessments? (External)
2a.3 How should we measure the alignment of standards, assessments, instruction, and curriculum materials? (External)

2b State curriculum programming
2b.1 What is the impact of the state’s literacy partnership grant program? How well is it being implemented by partners? (External)
2b.2 What is the impact of the Massachusetts Math and Science Partnerships program? How well is it being implemented by partners? (External)

2c English language learners
2c.1 What has been the impact of the state’s RETELL training program for core academic teachers on English language learner student assessment results? (Internal)
2c.2 To what extent are teachers trained in RETELL implementing the techniques learned in the RETELL courses? (Support needed)
2c.3 What is the impact of the Gateway Cities Summer Academies and enrichment programs on students, teachers, and families? (External)

2d Special education
2d.1 What is the impact on typically developing students of being educated in classrooms with students with disabilities? (External)

2e Assessment
2e.1 Psychometrics and validity of MCAS 2.0: How should we link 2015, 2016, and 2017 assessment data? What are appropriate and inappropriate uses of 2015, 2016, and 2017 assessment data? How does performance on PARCC correlate with other measures of college and career readiness (e.g., SAT/AP, high school graduation, college enrollment)? How stable are measures of teacher impact as the assessment system changes? (Internal and external)
2e.2 Mode effect in PARCC: How large is the mode effect? Does it vary by grade, subject, or student characteristics? How should we account for the mode effect when using PARCC data for reporting? For research? What are the options for Massachusetts to reduce the mode effect, and what impact and implications would they have? (Internal)
2e.3 Policy issues associated with the assessment transition: How should we use the data from the 2015 and 2016 test administrations for 2016 accountability determinations? For future-year determinations? What are the competencies required for high school graduation? What would be the impact if Massachusetts were to adapt the scales or standards of PARCC for our own state definitions of college and career readiness? How should we set the performance level required to reach the competency determination when the high school assessment changes? (Internal)
2e.4 Do state assessment science items work effectively for measuring English language learners’ science knowledge and skills? (External)
2e.5 How valid are growth measures from statewide standards-based summative assessments for students with disabilities? (External)
2e.6 How do student growth percentiles correlate with value-added measures? With ACCESS performance levels? (Internal and external)

3: Develop all educators

Do educator effectiveness initiatives have observable impacts? Do all students, and particularly high-needs students, have equitable access to effective educators? Is the field implementing state initiatives with fidelity? What is the field’s perception of state educator initiatives? How valid are the measures we have developed to support increased educator effectiveness?

3a Impact of educator effectiveness initiatives
3a.1 How much do student outcomes vary across licensure pathways? (External)
3a.2 Does the educator evaluation framework improve student outcomes? Are lower-rated educators improving? Are exemplary educators staying? Is student achievement or growth increasing? (Internal)
3a.3 Does the educator preparation formal review process improve candidate performance in the workforce? (Support needed)
3a.4 Is the Performance Assessment for Leaders (PAL) a useful measure of principals’ impact? What would be the impact of changing the PAL cut score? (Support needed)
3a.5 Do the data dashboard for the educator evaluation system or the data collected in the ELAR and MTEL systems provide meaningful measures of our impact? (Internal)

3b Equitable access to effective educators
3b.1 What access do students have to effective educators? How does it vary across subgroups, schools, districts, etc.? (Internal and external)
3b.2 What strategies implemented by districts and/or the state (e.g., implementation supports for educator evaluation, educator preparation candidate review process, licensure) increase access to effective educators? (Support needed)

3c Fidelity of implementation
3c.1 Are stakeholders implementing the summative performance and student impact ratings with fidelity? (External)
3c.2 Are stakeholders implementing student and staff feedback surveys with fidelity? (External)
3c.3 Are stakeholders implementing the Candidate Assessment of Performance with fidelity? (Internal)

3d Educator perceptions
3d.1 What is the field’s perception of the implementation of the educator evaluation framework and its component parts? (Westat)
3d.2 What is the field’s perception of the resources produced by ESE to support implementation? (Internal)
3d.3 What is the field’s perception of the Candidate Assessment of Performance? (Internal)
3d.4 What is the field’s perception of the licensure system? (Internal)
3d.5 What is the field’s perception of the implementation of the formal review process for educator preparation programs? (Internal)
3d.6 Do educator preparation programs find the Edwin tools useful? (Internal)

3e Validity of educator effectiveness measures
3e.1 Does the educator preparation formal review process measure educator preparation program quality? (External)
3e.2 Are the student and staff feedback surveys valid measures? (External)
3e.3 Do ESE’s model rubrics for educator evaluation measure educator effectiveness? (Internal; additional support may be needed)
3e.4 Does the Candidate Assessment of Performance improve candidate performance in the workforce? Does it measure candidates’ readiness to enter the workforce? (External, pending grant funding)
3e.5 How should educator evaluation, SGP, employment, and MTEL data be used in the educator preparation formal review process? (Internal)
3e.6 Is there evidence of bias in educator evaluation? (External)

Other educator issues
3e.1 How many licenses of what types are issued each year, overall, by field, and by grade level? What types of licenses do candidates of color hold, and how were they prepared? Are teachers with preliminary licenses different from other teachers in terms of job placement and retention? (Internal)
3e.2 Are there meaningful differences in quality between educator preparation programs/institutions? Are certain programs particularly likely to produce teachers at the extremes of the educator effectiveness distribution? To place teachers in shortage areas? To produce teachers who are effective with certain subgroups? To boost teacher retention? (External)
3e.3 How much variation in educator effectiveness is there across licensure pathways? (External)
3e.4 What pre-service teacher education experiences predict the probability that a prospective teacher enters the state’s public teaching force? Teachers’ effectiveness once they enter teaching? Their career paths and attrition? (External, pending grant funding)
3e.3 How do working conditions affect teachers’ job choice decisions? (External)

4: Turn around the lowest performing schools and districts

Are turnaround schools and districts improving student outcomes at a faster rate than other schools or districts? What factors are correlated with successful turnaround? What role does the statewide system of support play in improving outcomes?

4a State accountability and support system
4a.1 How does identification of persistently lowest performing schools vary when alternative weights, measures, statistical models, time periods, and cut points are used? (External)
4a.2 What factors contribute to variations in implementation and impact of the School Redesign Grants across districts and schools and over time? How are specific strategies intended to support turnaround employed at schools? (External)
4a.3 What strategies are the Holyoke, Lawrence, and Southbridge Public Schools implementing as part of their receiverships? How well implemented are they, and what impact have they had on student, educator, and other outcomes? In general, what assistance, supports, and strategies help districts turn around? (External; additional support needed, particularly for Southbridge)
4a.4 How do the data collected from the CDSA classroom observation tool vary across low, typical, and high performing districts? (Internal and external)

4b Strategies for turnaround in districts without autonomies
4b.1 What strategies are Springfield’s middle schools implementing as part of the Springfield Empowerment Zone? How well implemented are they, and what impact have they had? (Support needed)
4b.2 Are there schools that have a similar demographic profile to our Level 4 and 5 schools but are not underperforming? If so, what strategies have they implemented, and what impact have they had? (External)

5: Use data and technology to support teaching and learning

Which data and technology tools are districts using to support teaching and learning? What impact do data and technology tools have on improving student outcomes?

5a Digital and personalized learning
5a.1 How many districts are implementing personalized learning plans? Flexible learning environments? Competency-based credit accumulation? (External; additional support may be needed)
5a.2 How many districts are incorporating digital content and instructional tools as a core feature of instructional time? (External; additional support may be needed)
5a.3 What is the impact of participation in competency-based online courses for credit recovery and acceleration on students’ preparation for postsecondary education? (External)
5a.4 What is the impact of a public-private consortium on the adoption of blended and personalized learning models in K–12 schools in the Commonwealth? (Support needed)
5a.5 Which students are being served by virtual schools? For which students and under what conditions is a virtual school environment effective? What does a virtual school education look like from a teacher’s and a student’s perspective? (Support needed)

5b ESE data tools and supports
5b.1 How many people are using the embedded supports in Profiles and Edwin Analytics from Data in Action? How are they using them? (Support needed)

5c District technology capacity
5c.1 What are districts’ and schools’ current technology capacities? How do they compare to the requirements for administering online assessments and for moving to greater integration of technology in the classroom? Has the state IT bond for education increased district capacity? (External)

6: Support students’ social and emotional needs

Which social and emotional supports are effective in improving student outcomes?
6a Interventions and supports
6a.1 What were the existing conditions in schools participating in The Partnership Project (TPP) before the program was introduced? How were the TPP professional development courses planned and implemented? What is the impact of TPP PD on educator practice? Under what conditions is the program sustainable? (External)
6a.2 What is the impact of physical activity interventions in underperforming schools on student outcomes? (External)
6a.3 What is the impact of full-day kindergarten on cognitive and non-cognitive outcomes among students with and without disabilities? (External)

6b Bullying and discipline
6b.1 How should we measure bullying for the purpose of the required student survey on bullying practices? (Internal)
6b.2 How is bullying related to student achievement? School climate? Student discipline reports? (Internal)
6b.3 How should we identify schools with significant discrepancies between subgroups in their discipline practices? (Internal)

Special topic: Resource use

How are staff, time, and fiscal resources distributed across districts, schools, classrooms, and students?

- Are there differences in spending and staff assignment between schools in our largest districts, and if so, why? What additional data do we need to better answer this question?
- What are current trends in in-district and out-of-district spending on special education? Contracted services? Transportation? What is the potential for cost savings? What additional data do we need to better answer this question?
- How should return on investment be measured in an education context? Which state policies and programs have a high rate of return, and which do not?

Other external research projects

- University of Michigan: What is the impact of the Boston Public Schools’ pre-kindergarten program?
- UMass Donahue Institute: What is the impact of the state’s financial literacy pilot program?
- National Opinion Research Center: Are there data-masking techniques that can meet confidentiality requirements while still maintaining individual-level, longitudinal data?