SDN + Storage

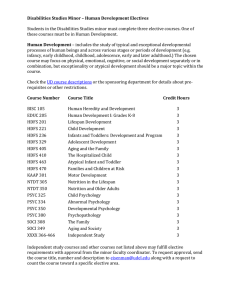

Outline

• Measurement of storage traffic

• Network aware placement

• Control of resources

• SDN + Resource allocation

– Predicting resource utilization

– Bringing it all together

HDFS Storage Patterns

– Maps read from HDFS

• Local read versus Non-local read

• Rack locality or not

Locality: 80%

Cross-rack traffic: 80%

HDFS Storage Patterns

– Reducers write to HDFS

• 3 copies of file written to HDFS

• 2 rack local and 1 non-rack local

• Fault tolerance and good performance

There must be cross-rack traffic

Ideal goal: minimize congestion

Real Life Traces

• Analyze Facebook traces:

– 33% of time spent in network

– Network links are highly utilized; why?

– Determine cause of network traffic

1. Job output

2. Job input

3. Pre-processing

Current Ways to Improve HDFS Transfers

• Change Network Paths

– Hedera, MicroTE, c-Through, Helios

• Change Network Rates

– Orchestra, D3

• Increase Network Capacity

– VL2, PortLand (Fat-Tree)

The case for Flexible Endpoints

• Traffic Matrix limits benefits

– of techniques that change paths

– of techniques that change network rates

• Ability to Change Matrix is important

[Figure labels: 90%, 20%, 80%, 90%]

Flexible Endpoints in HDFS

• Recall: Constraint placed by HDFS

– 3 replicas

– 2 fault domains

– Doesn’t matter where, as long as the constraints are met

• The source of the transfer is fixed!

– However, the destination (the location of the 3 replicas) is not fixed
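The two HDFS constraints above can be written down as a small check. This is a minimal sketch, not HDFS code: the node names, the `RACK_OF` map, and both function names are hypothetical, and a "fault domain" is assumed to be a rack.

```python
from itertools import combinations

# Hypothetical cluster layout: node name -> rack (fault domain).
RACK_OF = {"n1": "rackA", "n2": "rackA", "n5": "rackA",
           "n3": "rackB", "n4": "rackB"}


def is_valid_placement(nodes, rack_of, n_replicas=3, min_fault_domains=2):
    """Return True if `nodes` holds n_replicas distinct nodes that span
    at least min_fault_domains racks."""
    if len(nodes) != n_replicas or len(set(nodes)) != n_replicas:
        return False
    racks = {rack_of[node] for node in nodes}
    return len(racks) >= min_fault_domains


def valid_placements(rack_of, n_replicas=3, min_fault_domains=2):
    """Enumerate every destination set that satisfies the constraints."""
    return [combo for combo in combinations(sorted(rack_of), n_replicas)
            if is_valid_placement(combo, rack_of, n_replicas, min_fault_domains)]


if __name__ == "__main__":
    for placement in valid_placements(RACK_OF):
        print(placement)
```

Even in this five-node toy cluster most destination sets are legal, which is the freedom that flexible endpoints exploit.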

Sinbad

• Determine placement for block replica

– Place replicas to avoid hotspots

– Constraints:

• 3 copies

• Spread across 2 fault domains

• Benefits

– Faster writes

– Faster transfers

Sinbad: Ideal Algorithm

• Input:

– Blocks of different sizes

– Links of different capacities

• Objective:

– Minimize write time (transfer time)

• Challenges: Lack of future knowledge

– Location & duration of hotspots

– Size and arrival times of new replicas

Sinbad Heuristic

• Assumptions

– Link utilizations are stable

• True for 5-10 seconds

– All blocks have the same size

• Fixed-size large blocks

• Heuristic:

– Pick least-loaded link/path

– Send block from file with least amount to send
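The two heuristic rules can be sketched as follows. This is an illustration under the slide's assumptions (stable link utilizations, equal-size blocks), not the actual Sinbad implementation; the function names, the utilization values, and the rack map are all hypothetical.

```python
def pick_next_file(remaining_blocks):
    """Choose the file with the fewest blocks still to send.
    remaining_blocks: dict of file name -> number of unsent blocks."""
    pending = {f: n for f, n in remaining_blocks.items() if n > 0}
    if not pending:
        return None
    return min(pending, key=lambda f: (pending[f], f))


def pick_replica_nodes(link_util, rack_of, n_replicas=3, min_racks=2):
    """Greedily pick the least-loaded destinations while keeping the
    replicas spread over at least min_racks racks."""
    ranked = sorted(link_util, key=lambda n: (link_util[n], n))
    chosen = []
    for node in ranked:
        used_racks = {rack_of[c] for c in chosen}
        slots_left = n_replicas - len(chosen)
        racks_needed = min_racks - len(used_racks)
        # If every remaining replica must land on a new rack,
        # skip nodes in racks that are already used.
        if racks_needed >= slots_left and rack_of[node] in used_racks:
            continue
        chosen.append(node)
        if len(chosen) == n_replicas:
            return chosen
    raise ValueError("not enough nodes or racks to satisfy the constraints")


# Hypothetical snapshot of downlink utilization (0.0 to 1.0) per node.
LINK_UTIL = {"n1": 0.9, "n2": 0.2, "n3": 0.1, "n4": 0.8, "n5": 0.3}
RACKS = {"n1": "rackB", "n2": "rackA", "n3": "rackA",
         "n4": "rackB", "n5": "rackA"}

if __name__ == "__main__":
    print(pick_next_file({"fileA": 5, "fileB": 2, "fileC": 0}))
    print(pick_replica_nodes(LINK_UTIL, RACKS))
```

In this snapshot the three least-loaded nodes all sit in rackA, so the sketch skips `n5` and takes the least-loaded node in another rack instead. This shows how the fault-domain constraint can override a purely load-based choice.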

Sinbad Architecture

• Recall: the original DFS has a master-slave architecture

• Sinbad has a similar architecture

Orchestrating the Entire Cluster

• How to control Compute, Network, Storage?

• Challenges from Sinbad

– How to determine future replica demands?

• You can’t control job arrival

• You can control task scheduling

• If you predict job characteristics, you can determine future replica demands

– How to determine future hotspots?

• Control all network traffic (SDN)

• Use future

Ideal Centralized Entity

• Controls:

– Storage, CPU, N/W

• Determines:

– Which task to run

– Where to run the task

– When to start Network transfer

• What rate to transfer at

• Which network path

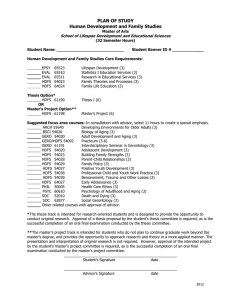

Predicting Job Characteristics

• To predict the resources that a job needs to complete, what do you need?

– Job’s DAG (from the job’s trace history)

– Computation time for each node

– Data transfer size between nodes

– Transfer time between nodes

Things you absolutely know!

• Input data

– Size of input data

– Location of all replicas

– Split of input data

• Job’s DAG

– # of Map

– # of Reduce

[Figure: HDFS (200 GB) → 3 Mappers → 2 Reducers → HDFS]

Approaches to Prediction: Input/Intermediate/Output Data

• Assumption:

– Map & Reduce run the same code over and over

– Code gives the same ratio of reduction

• E.g. 50% reduction from Map input to intermediate

• E.g. 90% reduction from intermediate to output

• Implications:

– Given the size of the input, you can determine the size of future transfers

• Problems:

– Not always true!!!

[Figure: HDFS (200 GB) → Map ×3 → 100 GB → Reduce ×2 → HDFS (10 GB)]
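Under the fixed-ratio assumption, the sizes in the figure follow from simple arithmetic. The sketch below is illustrative only: the function names are hypothetical, and the ratios are expressed as the fraction of data that survives each stage (0.5 after Map, 0.1 after Reduce), matching the 50% and 90% reductions in the example.

```python
def estimate_ratio(history):
    """Estimate an output/input ratio from past runs of the same code.
    history: list of (input_gb, output_gb) pairs."""
    total_in = sum(inp for inp, _ in history)
    total_out = sum(out for _, out in history)
    if total_in <= 0:
        raise ValueError("history must contain a positive amount of input")
    return total_out / total_in


def predict_sizes(input_gb, map_ratio=0.5, reduce_ratio=0.1):
    """Return (intermediate_gb, output_gb) for a job with the given input."""
    intermediate_gb = input_gb * map_ratio
    output_gb = intermediate_gb * reduce_ratio
    return intermediate_gb, output_gb


if __name__ == "__main__":
    intermediate, output = predict_sizes(200)
    print(f"shuffle: {intermediate:.0f} GB, HDFS write: {output:.0f} GB")
```

The estimate is only as good as the assumption. A job whose selectivity depends on the data (for example, a filter over skewed input) breaks it, which is the "not always true" problem on the slide.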

Approaches to Prediction: Task Run Time

• Assumption:

– Task is dominated by reading input

– Time to run a task is essentially the time to read its input

• If local: time to read from disk

• If non-local: time to read across the network

• Implication:

– If you can model read time, you can determine task run time

• Problems:

– How do you model disk I/O?

– How do you model I/O interrupt contention?

[Figure: HDFS (200 GB) → Map ×3 → 100 GB → Reduce ×2 → HDFS (10 GB)]
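The read-dominated assumption reduces task run time to size divided by bandwidth. A minimal sketch, assuming constant and uncontended disk and network bandwidth; the function name and the default bandwidth values are hypothetical placeholders, not measurements.

```python
def predict_task_time_s(split_mb, is_local,
                        disk_mb_per_s=100.0, net_mb_per_s=50.0):
    """Approximate task run time as the time to read its input split.

    Local tasks read from disk; non-local tasks read across the network.
    Both bandwidths are assumed constant, which ignores contention.
    """
    if split_mb < 0:
        raise ValueError("split size must be non-negative")
    bandwidth = disk_mb_per_s if is_local else net_mb_per_s
    if bandwidth <= 0:
        raise ValueError("bandwidth must be positive")
    return split_mb / bandwidth


if __name__ == "__main__":
    for local in (True, False):
        seconds = predict_task_time_s(10_000, is_local=local)
        print(f"local={local}: {seconds:.0f} s")
```

The constant-bandwidth model is what the listed problems challenge: real disk I/O and interrupt contention make the effective bandwidth vary with whatever else is running on the server.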

Predict Job Runs

• Given:

– Predictions of tasks, transfers, and the DAG

• Can you predict job completion time?

– How do you account for interleaving between jobs?

– How do you determine the optimal # of slots?

– How do you determine the optimal network bandwidth?

[Figure: job timeline; HDFS (200 GB) → Map ×3 → 100 GB → Reduce ×2 → HDFS (10 GB); time markers at 0 sec, 10 sec, and 40 sec]

• Really easy, right?

– But what happens if the network only has 2 slots?

• You can’t run all the maps in parallel

[Figure: job timeline; HDFS (200 GB) → Map ×3 → 100 GB → Reduce ×2 → HDFS (10 GB); time markers at 0 sec, 3 sec, 13 sec, and 33 sec]

• Which tasks to run in which order?

• How many slots to assign?
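Both questions can be explored with a tiny slot simulator. This is a sketch under simplifying assumptions (independent tasks, known durations, no network transfers); the function name and the task durations are hypothetical and are not the numbers from the figures.

```python
import heapq


def makespan(task_durations, n_slots):
    """Run tasks in the given order; each task starts on whichever slot
    frees up first. Returns the time at which the last task finishes."""
    if n_slots < 1:
        raise ValueError("need at least one slot")
    free_at = [0.0] * n_slots  # min-heap of the times each slot becomes free
    heapq.heapify(free_at)
    finish = 0.0
    for duration in task_durations:
        start = heapq.heappop(free_at)
        end = start + duration
        heapq.heappush(free_at, end)
        finish = max(finish, end)
    return finish


if __name__ == "__main__":
    maps = [10.0, 10.0, 10.0]
    print(makespan(maps, n_slots=3))  # all maps run in parallel
    print(makespan(maps, n_slots=2))  # the third map waits for a free slot
    # The same tasks in a different order give a different finish time.
    print(makespan([10.0, 10.0, 20.0], n_slots=2))
    print(makespan([20.0, 10.0, 10.0], n_slots=2))
```

With two slots, running the longest task first finishes sooner than running it last, so both the task order and the slot count affect the predicted completion time.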

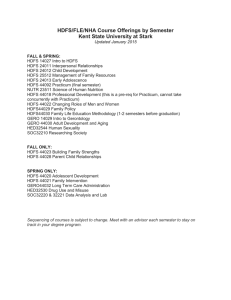

Approaches to Predicting Job Run Times

• Assumption:

– Job runtime = Function(# slots)

• Implication:

– Given N slots, I can predict completion time

• Jockey Approach [EuroSys’12]

– Track job progress: fraction of completed tasks

– Build a map of [{% done, # of slots} → time to complete]

– Use a simulator to build the map

– Iterate through all possible combinations of # of slots and % done

• Problems:

– Ignores network transfers: Network congestion

– Cross job contention on server can impact completion time

– Not all tasks are equal: # of tasks done isn’t a good representation of progress
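The table-building step can be sketched as below. This is not Jockey's simulator: `simulate_remaining_time` is a hypothetical stand-in that assumes equal-length tasks run in waves, and all names and parameter values are illustrative.

```python
import math


def simulate_remaining_time(frac_done, n_slots, total_tasks=100, task_time_s=10.0):
    """Toy simulator: remaining tasks run in waves of n_slots equal tasks."""
    remaining = total_tasks - round(frac_done * total_tasks)
    waves = math.ceil(remaining / n_slots)
    return waves * task_time_s


def build_table(slot_options, steps=10, **sim_kwargs):
    """Map (percent done, # of slots) -> predicted time to complete."""
    table = {}
    for n_slots in slot_options:
        for i in range(steps + 1):
            pct_done = i * 100 // steps
            table[(pct_done, n_slots)] = simulate_remaining_time(
                pct_done / 100, n_slots, **sim_kwargs)
    return table


def min_slots_for_deadline(table, pct_done, time_left_s):
    """Smallest slot count predicted to finish within time_left_s, or None."""
    candidates = [slots for (pct, slots), t in table.items()
                  if pct == pct_done and t <= time_left_s]
    return min(candidates) if candidates else None


if __name__ == "__main__":
    table = build_table(slot_options=[5, 10, 20])
    print(table[(50, 10)])
    print(min_slots_for_deadline(table, pct_done=50, time_left_s=60.0))
```

The toy simulator has the same weaknesses as the approach it illustrates: it counts tasks rather than work, and it ignores network transfers and cross-job contention.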

Open Questions

• What about background traffic?

– Control messages

– Other bulk transfers

• What about unexpected events?

– Failures?

– Loss of data?

• What about protocol inefficiencies?

– Hadoop scheduling

– TCP inefficiencies

– Server scheduling