WildFire: A Scalable Path for SMPs
Erik Hagersten and Michael Koster

advertisement

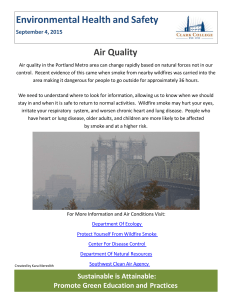

Presented by Andrew Waterman
ECE259, Spring 2008

Insight and Motivation
• SMPs were abandoned in favor of more scalable cc-NUMA designs
• But SMP bandwidth has scaled faster than CPU speed
• cc-NUMA is scalable but more complicated
  – Program/OS specialization is necessary
  – Communication with remote memory is slow
  – May not be optimal for real access patterns
• SMP (UMA) is simpler
  – More straightforward programming model
  – Simpler scheduling and memory management
  – No slow remote memory accesses
• Why not leverage SMP to the extent that it scales?

Multiple SMP (MSMP)
• Connect a few large SMPs (nodes) together
• Distributed shared memory
  – Weren't we just NUMA-bashing?
• Several CPUs per node => many local memory references
• Few nodes => an unscalable coherence protocol is OK

WildFire Hardware
• MSMP with 112 UltraSPARCs
  – Four unmodified Sun E6000 SMPs
• GigaPlane bus (3.2 GB/s within a node)
• 16 cards per node, each a 2-CPU board or an I/O board
• WildFire Interface (WFI) is just another I/O board (cool!)
  – SMPs (UMA) connected via WFIs == cc-NUMA (!)
• But this is OK... few, large nodes
• Full cache coherence, both intra- and inter-node

WildFire from 30,000 ft
[Figure: system overview, with emphasis on a single SMP node]

WildFire Software
• ABI-compatible with Sun SMPs
  – It's the software, stupid!
• Slightly (allegedly) modified Solaris 2.6
• Threads in the same process are grouped onto the same node
• Hierarchical Affinity Scheduler (HAS)
• Coherent Memory Replication (CMR)
  – OK, so this isn't purely a software technique

Coherent Memory Replication (CMR)
• S-COMA with a fixed home location for each block
  – For those keeping score, that means it's not COMA
• Local physical pages “shadow” remote physical pages
  – Keep frequently read pages close: lower average latency
• Implementation: hardware counters
  – CMR page allocation handled within the OS
  – Coherence still maintained in hardware at block granularity
• Enabled/disabled at page granularity
• CMR memory allocation adjusts with memory pressure

Hierarchical Affinity Scheduling (HAS)
• Exploit locality by scheduling a process on the last node on which it executed
• Only reschedule onto another node when the load imbalance exceeds a threshold
• Works particularly well when combined with CMR
  – Frequently accessed remote pages are still shadowed locally after a context switch
• Lagniappe locality

WildFire Implementation
[Figure: A single Sun E6000 with WFI. Recall: the WildFire Interface is just one of 16 standard cards on the GigaPlane bus.]

WildFire Implementation
• WFI = Network Interface Address Controller (NIAC) + Network Interface Data Controllers (NIDCs)
• The NIAC interfaces with the GigaPlane bus and handles internode coherence
• Four NIDCs talk to the point-to-point interconnect between nodes
  – Three ports per NIDC (one for each remote node)
  – 800 MB/s in each direction with each remote node

WildFire Cache Coherence
• Intra-node coherence: bus-based snooping
• Inter-node (global) coherence: directory
  – Directory state kept at a block's home node
    • Directory cache (SRAM) backed by memory
  – Home node determined by high-order address bits
  – MOSI
  – Nothing fancy, since scalability is not an issue
    • Blocking directory + three-stage writeback => no corner cases
• The NIAC sits on the bus and asserts an “ignore” signal for requests that global coherence must attend to
  – The NIAC intervenes if the block's local state is inadequate or the block resides in remote memory

WildFire Cache Coherence
• Coherent Memory Replication complicates matters
  – A local shadow page has a different physical address from its corresponding remote page
  – If a block's state is insufficient, its global address must be looked up so the WFI can issue the remote request
    • Stored in the LPA2GA SRAM
    • The reverse lookup (GA2LPA) is also cached

Coherence Example
[Figure: coherence example]

WildFire Memory Latency
• WildFire compared to SGI Origin (2x R10K per node) and Sequent NUMA-Q (4x Xeon per node)
• WF's remote memory latency is mediocre (2.5x Origin's, similar to NUMA-Q's), but it matters less because remote accesses are less frequent (1/14 as many as on Origin, 1/7 as many as on NUMA-Q)

Evaluating WildFire
• Clever performance evaluation methodology: isolate NUMA effects on WildFire itself by comparing a single-node, 16-CPU system with a two-node, 8-CPU/node system
  – Pure SMP vs. WildFire
• Also compare with NUMA-fat
  – Basically WF with no OS support, i.e., no CMR, no HAS, no locality-aware memory allocation, no kernel replication
• And compare with NUMA-thin
  – NUMA-fat but with small (2-CPU) nodes
• Finally, turn off HAS and CMR to evaluate their contribution to WF's performance

Evaluating WildFire
• WF with HAS+CMR comes within 13% of pure SMP
• Speedup(HAS+CMR) >> Speedup(HAS) × Speedup(CMR)
• Locality-aware allocation and large nodes are important

Evaluating WildFire
• Performance trends correlate with locality of reference
• HAS + CMR + kernel replication + initial allocation improve locality of access from 50% (i.e., uniform distribution between two nodes) to 87%

Summary
• WildFire = a few large SMP nodes + directory-based coherence between nodes + fast point-to-point interconnect + clever scheduling and replication techniques
• Pretty good performance (unfortunately, no numbers for 112 CPUs)
• Good idea?
  – I think so, but I doubt there is much room for scalability
  – Then again, that wasn't the point
• Criticisms?
  – The authors are very proud of their slow directory protocol
  – The kernel modifications may not be so slight

Questions?
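The numbers on the WildFire Hardware slide are consistent with one another. The following is simple arithmetic on the stated figures (four nodes, 112 CPUs, 16 cards per node, 2 CPUs per CPU board), assuming every CPU board is fully populated:

```latex
\frac{112\ \text{CPUs}}{4\ \text{nodes}} = 28\ \tfrac{\text{CPUs}}{\text{node}}
  = 14\ \tfrac{\text{two-CPU boards}}{\text{node}},
\qquad
16 - 14 = 2\ \tfrac{\text{cards}}{\text{node}}\ \text{left over, one of which is the WFI}
```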
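The node-level policy on the Hierarchical Affinity Scheduling slide is: stay on the last node unless the load imbalance exceeds a threshold. It can be sketched as below.

This is a minimal illustration, not Solaris code. `choose_node`, the per-node run-queue lengths in `load`, and `threshold` are all hypothetical, and only the node level of the hierarchy is modeled.

```python
from typing import Optional, Sequence


def choose_node(last_node: Optional[int], load: Sequence[int], threshold: int) -> int:
    """Pick a node for a thread under a simple affinity rule (hypothetical model of HAS).

    last_node: node the thread last ran on, or None for a new thread.
    load:      runnable-thread count per node (assumed load metric).
    threshold: imbalance tolerated before migrating (assumed tunable).
    """
    # Least-loaded node; min() returns the first such node on ties.
    least_loaded = min(range(len(load)), key=lambda n: load[n])
    if last_node is None:
        return least_loaded
    # Stay put (keeping caches and CMR-shadowed pages useful) unless imbalance is too large.
    if load[last_node] - load[least_loaded] <= threshold:
        return last_node
    return least_loaded


print(choose_node(0, [5, 4, 6, 5], threshold=2))  # 0: imbalance 1 <= 2, stays on node 0
print(choose_node(2, [5, 1, 6, 5], threshold=2))  # 1: imbalance 5 > 2, migrates to node 1
```

A larger threshold trades load balance for locality. That is the same trade the slide describes, and it is why HAS pairs well with CMR.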
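On the WildFire Cache Coherence slide, a block's home node is determined by its high-order physical address bits. Here is a minimal sketch of that rule.

It assumes a 41-bit physical address and two node-ID bits for four nodes. Both widths are illustrative assumptions, not figures from the slides.

```python
PHYS_ADDR_BITS = 41  # assumed physical address width
NODE_BITS = 2        # assumed: 2 bits are enough to name 4 nodes
NODE_SHIFT = PHYS_ADDR_BITS - NODE_BITS


def home_node(phys_addr: int) -> int:
    """Return the home node encoded in the top NODE_BITS of a physical address."""
    return (phys_addr >> NODE_SHIFT) & ((1 << NODE_BITS) - 1)


# Under this layout each node's memory is one contiguous 2**NODE_SHIFT-byte region.
addr = (3 << NODE_SHIFT) | 0x1000
print(home_node(addr))  # 3
```

Because the mapping is a fixed bit-field extraction, any node can locate a block's directory entry without a lookup table. This matches the fixed home locations mentioned on the CMR slide.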
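The NIAC's decision to assert “ignore” on a bus request can be modeled as a predicate. The rule from the WildFire Cache Coherence slide is: the block resides in remote memory, or the local state is inadequate for the request.

This is a simplified, hypothetical sketch. The three-level `Access` encoding and the name `must_ignore` are assumptions, and real hardware state is richer than this.

```python
from enum import Enum


class Access(Enum):
    """Hypothetical per-block access rights held by this node."""
    NONE = 0        # no valid local copy
    READ_ONLY = 1   # may satisfy reads locally
    READ_WRITE = 2  # may satisfy reads and writes locally


def must_ignore(home: int, this_node: int, access: Access, is_write: bool) -> bool:
    """True if the NIAC should assert "ignore" and hand the request to global coherence."""
    if home != this_node:
        # The block resides in remote memory. A CMR shadow page has a local physical
        # address, so its blocks fall through to the state check below instead.
        return True
    needed = Access.READ_WRITE if is_write else Access.READ_ONLY
    # Local state is inadequate if it grants fewer rights than the request needs.
    return access.value < needed.value


print(must_ignore(home=0, this_node=0, access=Access.READ_ONLY, is_write=True))  # True
```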
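The LPA2GA table and its GA2LPA reverse cache amount to a pair of page-granularity maps. Below is a minimal sketch that uses Python dicts in place of SRAM. The 8 KB page size and the `ShadowMap` class are illustrative assumptions.

```python
from typing import Dict, Optional

PAGE_BYTES = 8192  # assumed page size


class ShadowMap:
    """Hypothetical model of the LPA2GA SRAM plus its GA2LPA reverse cache."""

    def __init__(self) -> None:
        self.lpa2ga: Dict[int, int] = {}  # local shadow page -> global (remote) page
        self.ga2lpa: Dict[int, int] = {}  # global page -> local shadow page

    def map_page(self, local_page: int, global_page: int) -> None:
        """Record that local_page now shadows global_page (the OS does this under CMR)."""
        self.lpa2ga[local_page] = global_page
        self.ga2lpa[global_page] = local_page

    def unmap_page(self, local_page: int) -> None:
        """Drop a shadow page, e.g. when CMR memory shrinks under memory pressure."""
        global_page = self.lpa2ga.pop(local_page)
        del self.ga2lpa[global_page]

    def to_global(self, local_addr: int) -> Optional[int]:
        """Translate a shadow-page address to the global address used for remote requests."""
        page, offset = divmod(local_addr, PAGE_BYTES)
        global_page = self.lpa2ga.get(page)
        return None if global_page is None else global_page * PAGE_BYTES + offset

    def to_local(self, global_addr: int) -> Optional[int]:
        """Reverse lookup: find the local shadow copy of a global address, if any."""
        page, offset = divmod(global_addr, PAGE_BYTES)
        local_page = self.ga2lpa.get(page)
        return None if local_page is None else local_page * PAGE_BYTES + offset


shadows = ShadowMap()
shadows.map_page(local_page=5, global_page=1000)
print(shadows.to_global(5 * PAGE_BYTES + 0x40))  # 8192064 (= 1000 * PAGE_BYTES + 0x40)
```

The forward map serves outgoing requests, where a local miss must name the remote block. The reverse map presumably serves incoming coherence traffic, which names global addresses and must be matched to a local shadow page.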
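The argument on the WildFire Memory Latency slide can be made concrete with a simplification. Treat remote-memory stall time T as N × L, where N is the number of remote accesses and L is the remote latency; this ignores overlap and contention. Using only the slide's ratios:

```latex
\frac{T^{\text{WF}}}{T^{\text{Origin}}}
  = \frac{N^{\text{WF}}}{N^{\text{Origin}}} \cdot \frac{L^{\text{WF}}}{L^{\text{Origin}}}
  = \frac{1}{14} \cdot 2.5 \approx 0.18,
\qquad
\frac{T^{\text{WF}}}{T^{\text{NUMA-Q}}}
  = \frac{N^{\text{WF}}}{N^{\text{NUMA-Q}}} \cdot \frac{L^{\text{WF}}}{L^{\text{NUMA-Q}}}
  \approx \frac{1}{7} \cdot 1 \approx 0.14
```

Under this model, WildFire spends less than a fifth of Origin's remote stall time despite its slower remote path.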
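The locality improvement on the last Evaluating WildFire slide, from 50% to 87%, translates into remote-access fractions as follows. Here f is the fraction of accesses that are local and the bar over L denotes average memory latency. The local and remote latencies stay symbolic because the slides give no absolute values.

```latex
\bar{L} = f\,L_{\text{local}} + (1 - f)\,L_{\text{remote}},
\qquad
\frac{1 - f_{\text{before}}}{1 - f_{\text{after}}}
  = \frac{1 - 0.50}{1 - 0.87}
  = \frac{0.50}{0.13} \approx 3.8
```

For the same total number of accesses, the OS techniques cut remote accesses by a factor of about 3.8. This is consistent with the slide's point that performance tracks locality of reference.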