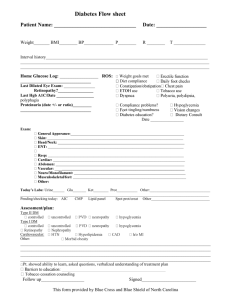

CPS110: Page replacement February 21, 2008 Landon Cox


Dynamic address translation

User process → virtual address → Translator (MMU) → physical address → Physical memory

Translator: just a data structure

Tradeoffs

Flexibility (sharing, growth, virtual memory)

Size of translation data

Speed of translation

2. Segmentation

| Segment # | Base | Bound | Contents |
|---|---|---|---|
| 0 | 4000 | 700 | Code segment |
| 1 | 0 | 500 | Data segment |
| 2 | Unused | Unused | |
| 3 | 2000 | 1000 | Stack segment |

Virtual memory (segment #, offset) → physical memory (all values hex):

Code: (0,0) … (0,6ff) → 4000 … 46ff

Data: (1,000) … (1,4ff) → 0 … 4ff

Stack: (3,000) … (3,fff) → 2000 … 2fff

Virtual addresses

VA={b31,b30,…,b12,b11,…,b1,b0}

High-order bits (b31…b12): segment number

Low-order bits (b11…b0): offset
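The segment table above can be exercised with a short C sketch. It assumes the base and bound values are hex and that the bound is the segment's length, so legal offsets run from 0 to bound-1 (consistent with (0,6ff) being the last code address). `struct segment` and `seg_translate` are hypothetical names, and returning `false` stands in for the trap real hardware would raise.

```c
#include <stdbool.h>
#include <stdint.h>

/* One row of the segment table (values are hex, as on the slide). */
struct segment {
    bool     valid;
    uint32_t base;
    uint32_t bound;   /* segment length: legal offsets are 0 .. bound-1 */
};

/* The example segment table; segment 2 is unused. */
static const struct segment seg_table[4] = {
    { true,  0x4000, 0x700  },  /* 0: code  */
    { true,  0x0000, 0x500  },  /* 1: data  */
    { false, 0,      0      },  /* 2: unused */
    { true,  0x2000, 0x1000 },  /* 3: stack */
};

/* Translate (segment #, offset) into a physical address.
 * Returns false where the hardware would trap to the kernel. */
bool seg_translate(uint32_t seg, uint32_t offset, uint32_t *phys)
{
    if (seg >= 4 || !seg_table[seg].valid || offset >= seg_table[seg].bound)
        return false;                    /* bad segment or offset past bound */
    *phys = seg_table[seg].base + offset;
    return true;
}
```

For example, (0,6ff) translates to 46ff and (3,fff) to 2fff, while (1,500) fails the bound check.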

3. Paging

Very similar to segmentation

Allocate memory in fixed-size chunks

Chunks are called pages

Virtual addresses

Virtual page # (e.g. upper 20 bits)

Offset within the page (e.g. low 12 bits)

3. Paging

Translation data is a page table

| Virtual page # | Physical page # |
|---|---|
| 0 | 10 |
| 1 | 15 |
| 2 | 20 |
| 3 | invalid |
| … | … |
| 1048574 | invalid |
| 1048575 | invalid |

3. Paging

Translation process

if (virtual page # is invalid) {

trap to kernel

} else {

physical page = pagetable[virtual page].physPageNum

physical address is {physical page}{offset}

}
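The translation pseudocode above can be made concrete in C. This sketch assumes the 20-bit page number / 12-bit offset split mentioned earlier and a flat array indexed by virtual page number; `struct pte` and `page_translate` are illustrative names, and returning `false` stands in for the trap to the kernel.

```c
#include <stdbool.h>
#include <stdint.h>

#define OFFSET_BITS 12              /* 4 KB pages: low 12 bits are the offset */
#define NUM_VPAGES  (1u << 20)      /* upper 20 bits: 2^20 virtual pages */

struct pte {
    bool     valid;
    uint32_t physPageNum;
};

/* Flat page table; static storage starts out all-invalid. */
static struct pte pagetable[NUM_VPAGES];

bool page_translate(uint32_t va, uint32_t *pa)
{
    uint32_t vpn    = va >> OFFSET_BITS;
    uint32_t offset = va & ((1u << OFFSET_BITS) - 1);

    if (!pagetable[vpn].valid)
        return false;               /* hardware would trap to the kernel */
    /* physical address is {physical page}{offset} */
    *pa = (pagetable[vpn].physPageNum << OFFSET_BITS) | offset;
    return true;
}
```

With the example table (page 1 → 15), virtual address 0x1234 becomes 0xF234, and anything in page 3 faults.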

Page size trade-offs

If page size is too small

Lots of page table entries

Big page table

If we use 4-byte pages with 4-byte PTEs (32-bit address space):

Num PTEs = 2^32 / 2^2 = 2^30 ≈ 1 billion

1 billion PTEs × 4 bytes/PTE = 4 GB

Would take up the entire address space!

Page size trade-offs

What if we use really big (1GB) pages?

Internal fragmentation

Wasted space within the page

Recall external fragmentation

(wasted space between pages/segments)
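Internal fragmentation can be quantified: a region is rounded up to whole pages, and the unused tail of the last page is wasted. A minimal C sketch, with `internal_frag` as a hypothetical helper and the region size chosen only for illustration:

```c
#include <stdint.h>

/* Bytes wasted inside the last page when a region of `size` bytes
 * is rounded up to whole pages of `page_size` bytes. */
uint64_t internal_frag(uint64_t size, uint64_t page_size)
{
    uint64_t pages = (size + page_size - 1) / page_size;   /* round up */
    return pages * page_size - size;
}
```

For a 5,000-byte region, 4 KB pages waste 3,192 bytes, while a single 1 GB page wastes almost the whole gigabyte.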

Page size trade-offs

Compromise between the two

x86 page size = 4 KB (32-bit processors)

SPARC page size = 8 KB

For 4 KB pages, how big is the page table?

2^32 / 2^12 = 2^20 ≈ 1 million page table entries

32 bits (4 bytes) per page table entry

4 MB per page table
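Both page-table-size calculations follow one formula: (address space size / page size) × PTE size. A minimal C sketch, assuming a flat (single-level) table and the hypothetical helper name `flat_table_bytes`:

```c
#include <stdint.h>

/* Size of a flat page table: one PTE for every virtual page. */
uint64_t flat_table_bytes(unsigned addr_bits, uint64_t page_size, uint64_t pte_size)
{
    uint64_t num_ptes = (1ull << addr_bits) / page_size;
    return num_ptes * pte_size;
}
```

`flat_table_bytes(32, 4, 4)` gives 2^32 bytes (the 4 GB case), and `flat_table_bytes(32, 4096, 4)` gives 4 MB.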

Paging pros and cons

+ Simple memory allocation

+ Can share lots of small pieces

(how would two processes share a page?)

(how would two processes share an address space?)

+ Easy to grow address space

– Large page tables (even with large invalid section)

Comparing translation schemes

Base and bounds

Unit of translation = entire address space

Segmentation

Unit of translation = segment

A few large variable-size segments/address space

Paging

Unit of translation = page

Lots of small fixed-size pages/address space

What we want

Efficient use of physical memory

Little external or internal fragmentation

Easy sharing between processes

Space-efficient data structure

How we’ll do this

Modify paging scheme

Course administration

Latest thread library score

Figure: Thread library scores by group number (groups 1 to 12; score axis 0 to 70)

1t: median = 41 (out of 64)

Total: median = 74% (last semester median = 98%)

Course administration

Latest thread library score

Figure: Thread library scores by initial submit date (2/10 to 2/19; score axis 0 to 70)

Course administration

Next week

I will be out of town

Mid-term exam on Tuesday

Amre will proctor exam

No discussion sections

Thursday lecture cancelled

Project 2 out on Thursday

Any questions about exam?

Course administration

Project 2

Posted on the web next Thursday (February 28th)

Write a virtual memory manager

Manage address spaces and page faults

Due 4 weeks from today (March 20th)

Comparison to Project 1

Less difficult concepts (no synchronization)

Lots of bookkeeping and corner cases

No solution to compare to (e.g. thread.o)

4. Multi-level translation

Standard page table

Just a flat array of page table entries

VA={b31,b30,…,b12,b11,…,b1,b0}

High-order bits (page number): used to index into the table

Low-order bits: offset

4. Multi-level translation

Multi-level page table

Use a tree instead of an array

VA={b31,b30,…,b22,b21,…,b12,b11,…,b1,b0}

Level 1 bits (b31…b22): used to index into table 1

Level 2 bits (b21…b12): used to index into table 2

Low-order bits (b11…b0): offset

What is stored in the level 1 page table? If valid? If invalid?


What is stored in the level 2 page table? If valid? If invalid?

Two-level tree

Level 1 table: entries 0, 1, …, 1023; each entry is either NULL or points to a level 2 table (?)

Level 2 tables: entries 0, 1, …, 1023; each entry holds PhysPage, Res, Prot

VA={b31,b30,…,b22,b21,…,b12,b11,…,b1,b0} = Level 1 index | Level 2 index | Offset
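A walk of the two-level tree above can be sketched in C, assuming the 10/10/12 bit split, 1024-entry tables, and Prot split into separate read and write flags. `level1`, `struct pte`, and `walk` are hypothetical names; returning `false` stands in for a fault.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Level 2 entry: PhysPage, Res, Prot (Prot modeled as two flags). */
struct pte {
    uint32_t physPage;
    bool     resident;
    bool     readable, writable;
};

/* Level 1: 1024 pointers to level 2 tables, NULL where none exists. */
struct pte *level1[1024];

bool walk(uint32_t va, bool is_write, uint32_t *pa)
{
    uint32_t i1     = va >> 22;            /* bits 31..22 index table 1 */
    uint32_t i2     = (va >> 12) & 0x3ff;  /* bits 21..12 index table 2 */
    uint32_t offset = va & 0xfff;          /* bits 11..0 are the offset */

    struct pte *level2 = level1[i1];
    if (level2 == NULL)
        return false;                      /* no level 2 table: whole 4 MB range unmapped */

    struct pte *e = &level2[i2];
    bool prot_ok = is_write ? e->writable : e->readable;
    if (!e->resident || !prot_ok)
        return false;                      /* fault */

    *pa = (e->physPage << 12) | offset;
    return true;
}
```

A NULL level 1 entry stands for 1024 unmapped pages at once, which is where the space savings come from.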


How does this save space?
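One way to answer the question is to count tables. This C sketch assumes 4-byte entries, 1024-entry tables (so each table is 4 KB), and 20-bit virtual page numbers; `two_level_bytes` is a hypothetical helper. It charges one level 1 table plus one level 2 table per distinct top-10-bit index among the mapped pages.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define TABLE_BYTES (1024 * 4)   /* 1024 entries x 4 bytes (assumed) */

/* vpns: virtual page numbers in use, each < 2^20. */
size_t two_level_bytes(const uint32_t *vpns, size_t n)
{
    bool has_l2[1024] = { false };
    size_t l2_tables = 0;

    for (size_t i = 0; i < n; i++) {
        uint32_t i1 = vpns[i] >> 10;   /* top 10 bits of the VPN pick the level 1 slot */
        if (!has_l2[i1]) {
            has_l2[i1] = true;         /* first page in this 4 MB range */
            l2_tables++;
        }
    }
    return (1 + l2_tables) * TABLE_BYTES;
}
```

A hypothetical process with two code pages at the bottom (pages 0 and 1) and one stack page at the top (page 0xFFFFF) needs 12 KB of tables, versus 4 MB for the flat table.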


What changes on a context switch?

Multi-level translation pros and cons

+ Space-efficient for sparse address spaces

+ Easy memory allocation

+ Easy sharing

– What is the downside?

Two extra lookups per reference

(read level 1 PT, then read level 2 PT)

(memory accesses just got really slow)

Translation look-aside buffer

Aka the “TLB”

Hardware cache from CPS 104

Maps virtual page #s to physical page #s

On cache hit, get PTE very quickly

On miss use page table, store mapping, restart instruction

What happens on a context switch?

Have to flush the TLB

Takes time to rewarm the cache

As in life, context switches may be expensive
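The TLB behavior above can be modeled in software. Real TLBs are hardware; this C sketch assumes a tiny fully associative TLB with 4 entries and round-robin replacement, and `tlb_lookup`, `tlb_insert`, and `tlb_flush` are hypothetical names. The flush models the context-switch case.

```c
#include <stdbool.h>
#include <stdint.h>

#define TLB_ENTRIES 4                /* tiny, for illustration */

struct tlb_entry {
    bool     valid;
    uint32_t vpn, ppn;
};

static struct tlb_entry tlb[TLB_ENTRIES];
static unsigned next_victim;         /* round-robin replacement (an assumption) */

/* Fully associative lookup: on a hit, fill *ppn and return true. */
bool tlb_lookup(uint32_t vpn, uint32_t *ppn)
{
    for (unsigned i = 0; i < TLB_ENTRIES; i++)
        if (tlb[i].valid && tlb[i].vpn == vpn) {
            *ppn = tlb[i].ppn;
            return true;
        }
    return false;
}

/* On a miss: after consulting the page table, store the mapping. */
void tlb_insert(uint32_t vpn, uint32_t ppn)
{
    tlb[next_victim] = (struct tlb_entry){ true, vpn, ppn };
    next_victim = (next_victim + 1) % TLB_ENTRIES;
}

/* On a context switch: the old process's mappings no longer apply. */
void tlb_flush(void)
{
    for (unsigned i = 0; i < TLB_ENTRIES; i++)
        tlb[i].valid = false;
}
```

After a flush every lookup misses until the cache rewarms, which is the cost the slide describes.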

Replacement

Think of physical memory as a cache

What happens on a cache miss?

Page fault

Must decide what to evict

Goal: reduce number of misses

Review of replacement algorithms

1. Random

Easy implementation, not great results

2. FIFO (first in, first out)

Replace page that came in longest ago

Popular pages often come in early

Problem: doesn’t consider last time used

3. OPT (optimal)

Replace the page that won’t be needed for longest time

Problem: requires knowledge of the future
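FIFO and OPT can be compared by counting page faults on a reference string. This C sketch assumes pages are small integers and that the frame count is between 1 and `MAX_FRAMES`; `fifo_faults`, `opt_faults`, and `slot_of` are hypothetical names. OPT is only computable here because the whole reference string is known in advance.

```c
#include <stddef.h>

#define MAX_FRAMES 16

/* Index of page p in frames[0..n), or -1 if it is not resident. */
static int slot_of(const int *frames, size_t n, int p)
{
    for (size_t i = 0; i < n; i++)
        if (frames[i] == p)
            return (int)i;
    return -1;
}

/* FIFO: replace the page that came in longest ago. */
int fifo_faults(const int *refs, size_t len, size_t nframes)
{
    int frames[MAX_FRAMES];
    size_t used = 0, oldest = 0;
    int faults = 0;
    for (size_t t = 0; t < len; t++) {
        if (slot_of(frames, used, refs[t]) >= 0)
            continue;                          /* hit */
        faults++;
        if (used < nframes) {
            frames[used++] = refs[t];          /* free frame */
        } else {
            frames[oldest] = refs[t];          /* evict oldest arrival */
            oldest = (oldest + 1) % nframes;
        }
    }
    return faults;
}

/* OPT: replace the page that won't be needed for the longest time. */
int opt_faults(const int *refs, size_t len, size_t nframes)
{
    int frames[MAX_FRAMES];
    size_t used = 0;
    int faults = 0;
    for (size_t t = 0; t < len; t++) {
        if (slot_of(frames, used, refs[t]) >= 0)
            continue;
        faults++;
        if (used < nframes) {
            frames[used++] = refs[t];
            continue;
        }
        size_t victim = 0, farthest = 0;
        for (size_t i = 0; i < nframes; i++) {
            size_t next = t + 1;               /* look ahead for frames[i] */
            while (next < len && refs[next] != frames[i])
                next++;                        /* next == len means "never again" */
            if (next > farthest) {
                farthest = next;
                victim = i;
            }
        }
        frames[victim] = refs[t];
    }
    return faults;
}
```

On the reference string 1 2 3 4 1 2 5 1 2 3 4 5 with three frames, FIFO takes 9 faults and OPT takes 7.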

Review of replacement algorithms

LRU (least-recently used)

Use past references to predict future

Exploit “temporal locality”

Problem: expensive to implement exactly

Why?

Either have to keep sorted list

Or maintain time stamps + scan on eviction

Update info on every access (ugh)
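The "time stamps + scan on eviction" variant of exact LRU looks like this in C. It is a sketch that assumes integer page numbers and 1 to `MAX_FRAMES` frames, with `lru_faults` as a hypothetical name; note that every hit still has to write a timestamp, which is the per-access cost the slide objects to.

```c
#include <stddef.h>

#define MAX_FRAMES 16

int lru_faults(const int *refs, size_t len, size_t nframes)
{
    int    page[MAX_FRAMES];
    size_t last_use[MAX_FRAMES];       /* time of most recent access per frame */
    size_t used = 0;
    int faults = 0;

    for (size_t t = 0; t < len; t++) {
        size_t i = 0;
        while (i < used && page[i] != refs[t])
            i++;
        if (i < used) {                /* hit: update info on every access */
            last_use[i] = t;
            continue;
        }
        faults++;
        if (used < nframes) {
            i = used++;                /* free frame */
        } else {
            i = 0;                     /* scan for the oldest timestamp */
            for (size_t j = 1; j < nframes; j++)
                if (last_use[j] < last_use[i])
                    i = j;
        }
        page[i] = refs[t];
        last_use[i] = t;
    }
    return faults;
}
```

On the reference string 1 2 3 4 1 2 5 1 2 3 4 5 with three frames, this counts 10 faults.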

LRU

LRU is just an approximation of OPT

Could try approximating LRU instead

Don’t have to replace oldest page

Just replace an old page

Clock

Approximates LRU

What can the hardware give us?

“Reference bit” for each PTE

Set each time page is accessed

Why is this done in hardware?

May be slow to do in software


What do “old” pages look like to OS?

Clear all bits

Check later to see which are set

Clock

Time 0: clear reference bit for page

…

Time t: examine reference bit

Splits pages into two classes

Those that have been touched lately

Those that haven’t been touched lately

Clearing all bits simultaneously is slow

Try to spread work out over time

Clock

Figure: physical pages 0 to 4 arranged in a circle, each holding one resident virtual page (A to E)

When you need to evict a page:

1) Check the physical page pointed to by the clock hand.

2) If reference=0, the page hasn't been touched in a while. Evict it.

3) If reference=1, the page has been accessed since the last sweep. What to do? Set reference=0, rotate the clock hand, and try the next page.

Clock

Does this cause an infinite loop?

No.

First sweep sets all to 0, evict on next sweep

What about new pages?

Put behind clock hand

Set reference bit to 1

Maximizes chance for page to stay in memory
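The eviction steps and the new-page rule above fit in a short C sketch. It assumes five frames (matching the figure), a reference bit stored per frame rather than per PTE, and hypothetical names `clock_evict` and `clock_page_in`; a real kernel would also write a dirty victim to disk before reusing its frame.

```c
#include <stdbool.h>
#include <stddef.h>

#define NFRAMES 5                 /* physical pages 0..4 */

struct frame {
    int  vpage;                   /* resident virtual page */
    bool referenced;              /* reference bit (set on each access) */
};

static struct frame frames[NFRAMES];
static size_t hand;               /* clock hand: next frame to examine */

/* Sweep until a frame with reference == 0 is found, clearing bits
 * along the way. Terminates within two sweeps. */
size_t clock_evict(void)
{
    for (;;) {
        struct frame *f = &frames[hand];
        if (!f->referenced)
            return hand;          /* not touched since last sweep: evict */
        f->referenced = false;    /* clear and give it another chance */
        hand = (hand + 1) % NFRAMES;
    }
}

/* Put the new page in the victim's frame with reference = 1, then move
 * the hand past it so the new page sits just behind the hand. */
size_t clock_page_in(int vpage)
{
    size_t victim = clock_evict();
    frames[victim].vpage = vpage;
    frames[victim].referenced = true;
    hand = (victim + 1) % NFRAMES;
    return victim;
}
```

If every frame is referenced, the first sweep clears all five bits and the hand evicts the frame it started at, so the loop cannot spin forever.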

Paging out

What can we do with evicted pages?

Write to disk

When don’t you need to write to disk?

Disk already has data (page is clean)

Can recompute page content (zero page)

Paging out

Why set the dirty bit in hardware?

If set on every store, too slow for software

Why not write to disk on each store?

Too slow

Better to defer work

You might not have to do it! (except in 110)

Paging out

When does work of writing to disk go away?

If you store to the page again

If the owning process exits before eviction

Project 2: other work you can defer

Initializing a page with zeroes

Taking faults

Paging out

Faulted-in page must wait for disk write

Can we avoid this work too?

Evict clean (non-dirty) pages first

Write out pages during idle periods

Project 2: don’t do either of these!

Hardware page table info

What should go in a PTE?

Physical page #: set by OS to control translation. Checked by MMU on each access.

Resident: set by OS. Checked by MMU on each access.

Protection (read/write): set by OS to control access. Checked by MMU on each access.

Dirty: set by MMU when page is modified. Used by OS to see if page is modified.

Reference: set by MMU when page is used. Used by OS to see if page has been referenced.

What bits does an MMU need to make access decisions?

MMU needs to know if resident, readable, or writable.

Do we really need a resident bit? No: if non-resident, set R=W=0 so every access faults.

MMU algorithm

if (VP # is invalid || non-resident || protected)

{

trap to OS fault handler

}

else

{

physical page = pageTable[virtual page].physPageNum

physical address = {physical page}{offset}

pageTable[virtual page].referenced = 1

if (access is write)

{

pageTable[virtual page].dirty = 1

}

}

Project 2: infrastructure performs MMU functions

Note: P2 page table entry definition has no dirty/reference bits
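The MMU algorithm above translates almost line for line into C. This sketch assumes a flat page table with 4 KB pages and a PTE layout invented for illustration (`struct pte`, `pageTable`, and `mmu_access` are hypothetical, and this is not the Project 2 definition, which has no dirty/reference bits). Returning `false` stands in for trapping to the OS fault handler.

```c
#include <stdbool.h>
#include <stdint.h>

#define OFFSET_BITS 12
#define NUM_VPAGES  (1u << 20)

struct pte {
    uint32_t physPageNum;
    bool     valid, resident, readable, writable;  /* set by OS */
    bool     referenced, dirty;                    /* set by MMU */
};

static struct pte pageTable[NUM_VPAGES];

bool mmu_access(uint32_t va, bool is_write, uint32_t *pa)
{
    uint32_t vp     = va >> OFFSET_BITS;
    uint32_t offset = va & ((1u << OFFSET_BITS) - 1);
    struct pte *e   = &pageTable[vp];

    bool prot_ok = is_write ? e->writable : e->readable;
    if (!e->valid || !e->resident || !prot_ok)
        return false;                    /* trap to OS fault handler */

    *pa = (e->physPageNum << OFFSET_BITS) | offset;
    e->referenced = true;                /* every successful access */
    if (is_write)
        e->dirty = true;                 /* only successful stores */
    return true;
}
```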

Hardware page table entries

Do PTEs need to store disk block nums?

No

Only the OS needs this (the MMU doesn’t)

What per-page info does the OS maintain?

Which virtual pages are valid

On-disk locations of virtual pages

Hardware page table entries

Do we really need a dirty bit?

Claim: OS can emulate at a reasonable overhead.

How can OS emulate the dirty bit?

Keep the page read-only

MMU will fault on a store

OS/you now know that the page is dirty

Do we need to fault on every store?

No. After first store, set page writable

When do we make it read-only again?

When it’s clean again (e.g. just written to disk, or just paged in)
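Dirty-bit emulation can be sketched as two OS routines. This C sketch assumes the hardware PTE has only read/write permission bits and that the OS keeps its own per-page record; `struct hw_pte`, `struct page_info`, `mark_clean`, and `handle_write_fault` are hypothetical names.

```c
#include <stdbool.h>

/* Hardware-visible permission bits (no dirty bit). */
struct hw_pte {
    bool readable, writable;
};

/* OS-private bookkeeping for the same page. */
struct page_info {
    bool may_write;   /* is the app allowed to write this page at all? */
    bool dirty;       /* emulated dirty bit */
};

/* Page just paged in or written back: it is clean, so make it
 * read-only and let the next store fault. */
void mark_clean(struct hw_pte *pte, struct page_info *info)
{
    info->dirty = false;
    pte->writable = false;
}

/* Store to a read-only page. Returns false for a genuine protection error. */
bool handle_write_fault(struct hw_pte *pte, struct page_info *info)
{
    if (!info->may_write)
        return false;          /* really illegal */
    info->dirty = true;        /* the OS now knows the page is dirty */
    pte->writable = true;      /* later stores proceed without faulting */
    return true;
}
```

Only the first store after each cleaning pays for a fault, which is why the overhead is claimed to be reasonable.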

Hardware page table entries

Do we really need a reference bit?

Claim: OS can emulate at a reasonable overhead.

How can OS emulate the reference bit?

Keep the page unreadable

MMU will fault on a load/store

OS/you now know that the page has been referenced

Do we need to fault on every load/store?

No. After first load/store, set page readable
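Reference-bit emulation follows the same pattern, with "clearing the bit" meaning "revoke all access". This C sketch assumes it coexists with the dirty-bit emulation from the previous slide, so a clean page is restored as read-only. `struct hw_pte`, `struct page_info`, `clear_reference`, and `handle_reference_fault` are hypothetical names.

```c
#include <stdbool.h>

struct hw_pte {
    bool readable, writable;
};

struct page_info {
    bool may_write;    /* protection the app is entitled to */
    bool dirty;        /* emulated dirty bit */
    bool referenced;   /* emulated reference bit */
};

/* Clear the reference bit (e.g. as the clock hand passes): make the page
 * unreadable so the next load or store faults. */
void clear_reference(struct hw_pte *pte, struct page_info *info)
{
    info->referenced = false;
    pte->readable = false;
    pte->writable = false;
}

/* Fault on an unreadable page: record the reference, restore access.
 * Returns false for a genuine protection error. */
bool handle_reference_fault(struct hw_pte *pte, struct page_info *info, bool is_write)
{
    if (is_write && !info->may_write)
        return false;                  /* store to a page the app may not write */
    info->referenced = true;           /* the OS now knows the page was used */
    pte->readable = true;
    if (is_write)
        info->dirty = true;
    /* Writable only once dirty, so a clean page still faults on its first store. */
    pte->writable = info->dirty;
    return true;
}
```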

Application’s perspective

VM system manages page permissions

Application is totally unaware of faults, etc

Most OSes allow apps to request page protections

E.g. make their code pages read-only

Project 2

App has no control over page protections

App assumes all pages are read/writable