CPS 214 “Live” Video and Audio Streaming •End System Multicast

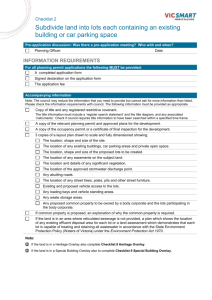

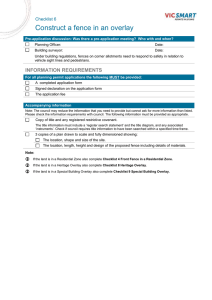

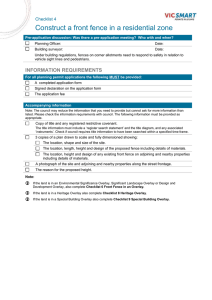

CPS 214 Computer Networks and Distributed Systems
“Live” Video and Audio Streaming
• End System Multicast
• Analysis of Akamai Workload

Presentations
• Monday, April 21
– Abhinav, Risi
– Bi, Jie
– Jason, Michael
– Martin, Matt
– Amre, Kareem
• Wednesday, April 23
– Ben, Kyle
– Jayan, Michael
– Kshipra, Peng
– Xuhan, Yang

The Feasibility of Supporting Large-Scale Live Streaming Applications with Dynamic Application End-Points
Kay Sripanidkulchai, Aditya Ganjam, Bruce Maggs*, and Hui Zhang
Carnegie Mellon University and *Akamai Technologies

Motivation
• Ubiquitous Internet broadcast
– Anyone can broadcast
– Anyone can tune in

Overlay multicast architectures
[Figure legend: router, source, application end-point]

Infrastructure-based architecture [Akamai]
+ Well-provisioned
[Figure legend: router, source, application end-point, infrastructure server]

Application end-point architecture [End System Multicast (ESM)]
+ Instantly deployable
+ Enables ubiquitous broadcast
[Figure legend: router, source, application end-point]

Waypoint architecture [ESM]
+ Waypoints as insurance
[Figure legend: router, source, application end-point, W = waypoint]

Sample ESM Broadcasts
http://esm.cs.cmu.edu

| Event | Duration (hours) | Unique Hosts | Peak Size |
|---|---|---|---|
| SIGCOMM ’02 | 25 | 338 | 83 |
| SIGCOMM ’03 | 72 | 705 | 101 |
| SOSP ’03 | 24 | 401 | 56 |
| DISC ’03 | 16 | 30 | 20 |
| Distinguished Lectures | 11 | 400 | 80 |
| AID Meeting | 14 | 43 | 14 |
| Buggy Race | 24 | 85 | 44 |
| Slashdot | 24 | 1609 | 160 |
| Grand Challenge | 6 | 2005 | 280 |

Feasibility of supporting large-scale groups with an application end-point architecture?
• Is the overlay stable enough despite dynamic participation?
• Is there enough upstream bandwidth?
• Are overlay structures efficient?

Large-scale groups
• Challenging to address these fundamental feasibility questions
– Little knowledge of what large-scale live streaming is like

Chicken and egg problem
Publishers with compelling content need proof that the system works.
System has not attracted large-scale groups due to lack of compelling content.

The focus of this paper
• Generate new insight on the feasibility of application end-point architectures for large-scale broadcast
• Our methodology to break the cycle
– Analysis and simulation
– Leverage an extensive set of real-world workloads from Akamai (infrastructure-based architecture)

Talk outline
• Akamai live streaming workload
• With an application end-point architecture
– Is the overlay stable enough despite dynamic participation?
– Is there enough upstream bandwidth?
– Are overlay structures efficient?
• Summary

Measurements used in this study
• Akamai live streaming traces
– Trace format for a request: [IP, Stream URL, Session start time, Session duration]
• Additional measurements collected
– Hosts’ upstream bandwidth

An Analysis of Live Streaming Workloads on the Internet
Kunwadee Sripanidkulchai, Bruce Maggs*, Hui Zhang
Carnegie Mellon University and *Akamai Technologies

Akamai live streaming infrastructure
[Figure: source feeding reflectors, which feed edge servers (A)]

Extensive traces
~ 1,000,000 daily requests
~ 200,000 daily client IP addresses from over 200 countries
~ 1,000 daily streams
~ 1,000 edge servers
~ Every day, over a 3-month period

Largest stream
75,000 x 250 kbps = 18 Gbps!

Highlight of findings
• Popularity of events [Bimodal Zipf]
• Session arrivals [Exponential for short time-scales, time-of-day and time-zone-correlated behavior, LOTS of flash crowds]
• Session durations [Heavy-tailed]
• Transport protocol usage [TCP rivals UDP]
• Client lifetime
• Client diversity

Request volume (daily)
[Figure: number of requests per day; annotations: weekdays, weekends, missing logs]

Audio vs. video
Most streams are audio.
• Audio 71%
• Video 7%
• Unknown 22%

Stream types
• Non-stop (76%) vs. short duration (24%)
– All video streams have short duration
• Smooth arrivals (50%) vs. flash crowds (50%)
– Flash crowds are common

Client lifetime
• Motivating questions
– Should servers maintain “persistent” state about clients (for content customization)?
– Should clients maintain server history (for server selection problems)?
• Want to know
– Are new clients tuning in to an event?
– What is the lifetime of a client?

Analysis methodology
• Windows media format
• Player ID field to identify distinct users
• Birth rate = (Number of new distinct users) / (Total number of distinct users)

Daily new client birth rate
[Figure: daily birth rate over time; annotations: weekends, weekdays, Xmas]
• New client birth rate is 10-100% across all events.
• For these 2 events, birth rate is 10-30%.

One-timers: tune in for only 1 day
In almost all events, 50% of clients are one-timers!

Client lifetime (excluding one-timers)
[Figure: reference lines y = 3x and y = x]
For most events, average client lifetime is at least 1/3 of the event duration.

Client lifetime
• Motivating questions
– Should servers maintain “persistent” state about clients (for content customization)?
Any state should time-out quickly because most clients are one-timers.
– Should clients maintain server history (for server selection problems)?
Yes, recurring clients tend to hang around for a while.

Where are clients from?
[Figure: number of IP addresses per country, y-axis from 0 to 1.6 million; countries shown: US, CN, DE, ES, FR, GB, CA, JP, PT, CH, BE, MX, NL, SE, KR, BR]
Clients are from over 200 countries.
Most clients are from the US and Europe.

Analysis methodology
• Map client IP to location using Akamai’s EdgeScape tool
• Definitions
– Diversity index = Number of distinct ‘locations’ that a stream reaches
– Large streams are streams that have a peak group size of more than 1,000 clients

Time zone diversity
Many small streams reach more than half the world!
Almost all large streams reach more than half the world.

Client diversity
• Motivating questions
– Where should streaming servers be placed in the network?
Clients are tuning in from many different locations.
– How should clients be mapped to servers?
For small streams which happen to have a diverse set of clients, it may be too wasteful for a CDN to map every client to the nearest server.

Summary
• Publishers are using the Internet to reach a wider audience than traditional radio and TV
• Interesting observations
– Lots of audio traffic
– Lots of flash crowds (content-driven behavior)
– Lots of one-timers
– Lots of diversity amongst clients, even for small streams

Nice pictures…see paper for details
[Figure: percentage of requests by transport protocol (udp, tcp, unknown) for Quicktime, Real, and Windows media; series: rtp, http, mms, rtsp]

Abandon all hope, ye who enter here.

Talk outline
• Akamai live streaming workload
• With an application end-point architecture
– Is the overlay stable enough despite dynamic participation?
– Is there enough upstream bandwidth?
– Are overlay structures efficient?
• Summary

When is a tree stable?
[Figure: trees ranging from not stable to more stable, with stable and less stable nodes marked; timeline of interruptions (X) when an ancestor leaves]
• Departing hosts have no descendants
• Stable nodes at the top of the tree

Extreme group dynamics
15% stay longer than 30 minutes (heavy-tailed)
45% stay less than 2 minutes!

Stability evaluation: simulation
• Hosts construct an overlay amongst themselves using a single-tree protocol
– Skeleton protocol of the one presented in the ESM Usenix ’04 paper
• Findings are applicable to many protocols
– Goal: construct a stable tree
• Parent selection is key
• Group dynamics from Akamai traces (join/leave)
• Honor upstream bandwidth constraints
– Assign degree based on bandwidth estimation

Join
[Figure: join step; labels: Join, IP1, IP2, ...]

Probe and select parent
[Figure: probe step; labels: IP1, IP2, ...]

Probe and select parent
Parent selection algorithms
• Oracle: pick a parent who will leave after me
• Random
• Minimum depth (select one out of 100 random)
• Longest-first (select one out of 100 random)

Parent leave
[Figure: a host leaves (marked X)]

Parent leave
Host leaves
All descendants are disconnected

Find new parent
Host leaves
All descendants are disconnected
All descendants probe to find new parents

Stability metrics
• Mean interval between ancestor change
• Number of descendants of a departing host
[Figure: timeline of interruptions (X) when an ancestor leaves]

Stability of largest stream
[Figure: curves for longest-first, random, min depth, and oracle]
Oracle: there is stability!

Is longest-first giving poor predictions?
Departing hosts with no descendants:
• Oracle, ~100%
• Longest-first, 91%
• Min depth, 82%
• Random, 72%

Stability of 50 large-scale streams
[Figure: percentage of sessions with interval between ancestor change < 5 minutes; curves for longest-first, random, min depth, and oracle]
There is stability!
Of the practical algorithms, min depth performs the best.

There is inherent stability
• Given future knowledge, stable trees can be constructed
• In many scenarios, practical algorithms can construct stable trees
– Minimum depth is robust
– Predicting stability (longest-first) is not always robust; when wrong, the penalty is severe
• Mechanisms to cope with interrupts are useful
– Multiple trees (see paper for details)

Talk outline
• Akamai live streaming workload
• With an application end-point architecture
– Is the overlay stable enough despite dynamic participation?
– Is there enough upstream bandwidth?
– Are overlay structures efficient?
• Summary

Is there enough upstream bandwidth to support all hosts?
[Figure: a 300 kbps video stream; DSL hosts with upstream bandwidth of only 128 kbps; saturated tree]
What if application end-points are all DSL?

Metric: Resource index
• Ratio of the supply to the demand of upstream bandwidth
• Resource index == 1 means the system is saturated
• Resource index == 2 means the system can support two times the current members in the system
Resource Index: (3+5)/3 = 2.7

Large-scale video streams
[Figure annotations]
• A few streams are in trouble or close.
• 1/3 of the streams are in trouble.
• Most streams have sufficient upstream bandwidth.
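
The four parent selection algorithms compared in the stability slides can be sketched as follows. This is an illustration only, not the ESM protocol code: the `Host` record, `sample_candidates`, `pick_parent`, and the example hosts are hypothetical names and values. Reading "longest-first" as "prefer the host that has been in the group longest" is an assumption, as is breaking ties for the oracle by minimum depth.

```python
import random
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    depth: int         # hops from the source in the current tree
    joined_at: float   # time (minutes) at which the host joined
    leaves_at: float   # departure time; only the oracle policy may read it


def sample_candidates(members, rng, k=100):
    """Probe up to k random members ('select one out of 100 random')."""
    return rng.sample(members, min(k, len(members)))


def pick_parent(candidates, policy, now, my_leave_time=None, rng=random):
    """Return the chosen parent, or None if no candidate qualifies."""
    if not candidates:
        return None
    if policy == "random":
        return rng.choice(candidates)
    if policy == "min_depth":
        return min(candidates, key=lambda h: h.depth)
    if policy == "longest_first":
        # Assumed reading: the oldest member is predicted to be most stable.
        return max(candidates, key=lambda h: now - h.joined_at)
    if policy == "oracle":
        # Uses future knowledge: keep only hosts that outlive the joiner.
        stable = [h for h in candidates if h.leaves_at > my_leave_time]
        return min(stable, key=lambda h: h.depth) if stable else None
    raise ValueError(f"unknown policy: {policy}")


# Hypothetical group: a joiner arrives at t=10 and will leave at t=30.
members = [
    Host("a", depth=1, joined_at=0.0, leaves_at=12.0),
    Host("b", depth=2, joined_at=5.0, leaves_at=90.0),
    Host("c", depth=3, joined_at=8.0, leaves_at=40.0),
]
rng = random.Random(0)
candidates = sample_candidates(members, rng)
chosen = {
    policy: pick_parent(candidates, policy, now=10.0, my_leave_time=30.0, rng=rng)
    for policy in ("random", "min_depth", "longest_first", "oracle")
}
```

In this made-up group, longest-first and minimum depth both pick host `a`, which departs before the joiner does and so causes an interruption, while the oracle picks `b`. That is the kind of misprediction the slides warn about for longest-first.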
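
The resource index defined above can be reproduced with a few lines of arithmetic. This is a minimal sketch under stated assumptions: the helpers `out_degree` and `resource_index` are hypothetical, a host's degree is assumed to be the number of full-rate streams its upstream link can carry, and the 900 kbps source uplink in the all-DSL case is an invented value. Only the 300 kbps stream rate, the 128 kbps DSL uplink, and the (3+5)/3 example come from the slides.

```python
def out_degree(upstream_kbps, stream_kbps):
    """Children a host can feed at full rate (assumed: floor of the ratio)."""
    return int(upstream_kbps // stream_kbps)


def resource_index(source_degree, receiver_degrees):
    """Supply of upstream slots divided by demand (one slot per receiver)."""
    if not receiver_degrees:
        raise ValueError("need at least one receiver")
    supply = source_degree + sum(receiver_degrees)
    demand = len(receiver_degrees)
    return supply / demand


# Slide example: a source with degree 3 and three receivers, one of which
# can forward to 5 children while the other two cannot forward at all.
slide_example = resource_index(3, [5, 0, 0])

# All-DSL case: 128 kbps upstream cannot carry one 300 kbps stream, so every
# receiver has degree 0 and only the (assumed) 900 kbps source supplies slots.
dsl_degree = out_degree(128, 300)
all_dsl = resource_index(out_degree(900, 300), [dsl_degree] * 10)
```

With these inputs `slide_example` evaluates to 8/3, which rounds to the 2.7 on the slide. `all_dsl` falls below 1, meaning the tree cannot support its own members.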

Talk outline
• Akamai live streaming workload
• With an application end-point architecture
– Is the overlay stable enough despite dynamic participation?
– Is there enough upstream bandwidth?
– Are overlay structures efficient?
• Summary

Relative Delay Penalty (RDP)
• How well does the overlay structure match the underlying network topology?
RDP = (Overlay distance) / (Direct unicast distance)
[Figure: example with hosts in the US and Europe; link delays of 50 ms and 20 ms]
Results are more promising than previous studies using synthetic workloads and topologies.

Summary
• Indications of the feasibility of application end-point architectures
– The overlay can be stable despite dynamic participation
– There often is enough upstream bandwidth
– Overlay structures can be efficient
• Our findings can be generalized to other protocols
• Future work: Validate through real deployment
– On-demand use of waypoints in End System Multicast
– Attract large groups

Thank you!
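
As a worked illustration of the Relative Delay Penalty defined in the talk, the sketch below computes RDP for hosts in a small overlay tree. The topology, host names, and helper functions are hypothetical. The 50 ms and 20 ms delays echo the values visible in the slide's figure, but how they are arranged here is an assumption.

```python
def overlay_delay(host, parent, delay_ms):
    """Sum link delays along the overlay path from the host up to the source."""
    total = 0.0
    while parent[host] is not None:
        total += delay_ms[frozenset((host, parent[host]))]
        host = parent[host]
    return total


def rdp(host, source, parent, delay_ms):
    """Relative Delay Penalty: overlay distance over direct unicast distance."""
    direct = delay_ms[frozenset((host, source))]
    return overlay_delay(host, parent, delay_ms) / direct


# Hypothetical tree: a US source feeds Europe host A, which feeds Europe host B.
parent = {"src": None, "A": "src", "B": "A"}
delay_ms = {
    frozenset(("src", "A")): 50,
    frozenset(("A", "B")): 20,
    frozenset(("src", "B")): 50,
}
rdp_a = rdp("A", "src", parent, delay_ms)  # direct child of the source
rdp_b = rdp("B", "src", parent, delay_ms)  # reached via A: (50 + 20) / 50
```

An RDP of 1 means the overlay path is as short as direct unicast. Here `B` pays a penalty of 1.4 because its data crosses the Atlantic link and then one extra 20 ms hop.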