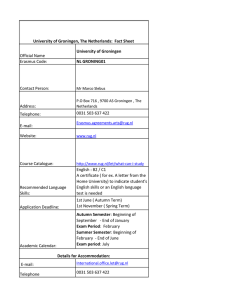

Software Visual Analytics

Tools and Techniques for Better Software Lifecycle Management

prof. dr. Alexandru (Alex) Telea

Department of Mathematics and Computer Science

University of Groningen, the Netherlands

www.cs.rug.nl/svcg

Introduction

Who am I?

• professor in computer science / visualization @ RuG (since 2007)

• chair/steering committee of ACM SOFTVIS / IEEE VISSOFT 2007-2013

• 7 PhD students, over 35 MSc students

• over 150 international publications in data / software visualization

www.cs.rug.nl/~alext

www.solidsourceit.com

Topics of this lecture

• program comprehension

• software maintenance and evolution

• software visual analytics

Slides: www.cs.rug.nl/~alext/SVA

Data Visualization: Principles and Practice

A. K. Peters, 2008

What you will see

• lots of tools, use cases, applications

• all shown techniques were applied in the real IT industry

source code

text duplication

code repositories

code quality

code dependencies

design and metrics

P2P networks

program behavior

stock exchange

evolution metrics

structure evolution

team analysis

History of Visual Data Analysis

1985

Scientific Visualization:

- engineering

- geosciences

- medicine

1995

Information Visualization:

- finance

- telecom

- business management

2000

Software Visualization:

- the software industry!

When is data visualization useful?

1. Too much data:

• do not have time to analyze it all (or read the analysis results)

• show an overview, discover which questions are relevant

• refine search

2. Qualitative / complex questions:

• cannot capture question compactly/exactly in a query

• question/goal is inherently qualitative: understand what is going on

3. Communication:

• transfer results to different (non technical) stakeholders

• learn about a new domain or problem

When is visualization NOT useful?

1. Queries:

• if a question can be answered by a compact, precise query, why visualize?

• “what is the largest value of a set”

2. Automatic decision-making:

• if a decision can be automated, why use a human in the loop?

• “how to optimize a numerical simulation”

Key thing to remember:

• visualization is mainly a cost vs benefits (or value vs waste) proposal

• cost: effort to create and interpret the images

• benefits: problem solved by interpreting the images

• discussion in software engineering: lean development [Poppendieck 2006]

✗ B. Lorensen, On the Death of Visualization, Proc. NIH/NSF Fall Workshop on Visualization Research Challenges, 2004

✗ S. Charters, N. Thomas, M. Munro, The end of the line for Software Visualisation? Proc. IEEE VISSOFT, 2003

✗ S. Reiss, The paradox of software visualization, Proc. IEEE VISSOFT, 2005

✓ J. J. van Wijk, The Value of Visualization, Proc. IEEE Visualization, 2005

M. Poppendieck, T. Poppendieck, Lean Software Development, Addison-Wesley, 2006

Software Visualization

Definition:

• “Software visualization is concerned with the static or animated 2D or 3D visual

representation of information about software systems based on their structure, history, or

behavior in order to help software engineering tasks” [Diehl, 2006]

For whom:

• developers

• testers

• architects

• managers

• business

Goals:

• understand large code bases quicker

• develop and refactor quicker

• create and manage large test suites quicker

• invest testing effort more efficiently

• understand very large software systems

• compare code with design documents

• reverse-engineer structure from code

• overview long-duration projects

• correlate quality with process decisions

• assess quality of process and product decisions

• support in/outsourcing activities

• communicate with technical stakeholders easily

• reduce cost/time, increase quality and productivity!

Software Visualization – Really needed?

Surveys:

• software industry forecast: 457 billion $ (2013), 50% larger than in 2008 [www.infoedge.com]

• comparison: total US health care spending 2.5 trillion $ (2009) [www.usatoday.com/news/health]

• 80% of development costs spent on maintenance [Standish’84, Corbi’99]

• 50% of this is spent for understanding the software!

Practice:

• 40% engineers find SoftVis indispensable, 42% find it not critical [Koschke ’02]

• visual tools or tool plugins become increasingly accepted in software engineering

• Visual Studio, Eclipse, Rational Rose, Together, JReal, DDD, …

• See new Visual Studio 2010 dependency visualization!

Research:

• Software visualization is now an established research area

• own events: ACM SoftVis, IEEE VISSOFT

• related events: InfoVis, VAST, ICSE, I(W|C)PC, WCRE, OOPSLA, SIGSOFT, ECOOP, …

A few billion lines of code later: Using static analysis to find bugs in the real world (A. Bessey et al.), CACM, 2010

Software visualization in software maintenance, reverse engineering, and re-engineering: a research survey (R. Koschke), JSME, 2003

Measuring the ROI of software process improvement (R. van Solingen), IEEE Software, 2004

The paradox of software visualization (S. Reiss), IEEE VISSOFT, 2005

The end of the line for Software Visualisation? (S. Charters, N. Thomas, M. Munro), IEEE VISSOFT, 2003

Software Visualization vs Visual Programming

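[Diagram: the user, the software, and the tools. Software visualization: the software is analyzed by a software visualization tool, whose output is examined by the user. Visual programming: the user creates a visual program that is read by a visual programming tool, which generates the software.]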

Software Visualization vs Visual Programming

Visual Programming

• usually for small programs

• mostly used in forward engineering (FE)

• usually at ‘component’ level

• is still not very popular

• complemented by ‘classical’ textual programming

• can work at several levels of detail

• quite well integrated in the engineering pipeline

Software Visualization

• for small…huge programs

• mostly used in reverse engineering (RE)

• at line-of-code…system level

• starts becoming quite popular

• complemented by ‘classical’ code text reading

• can work at several levels of detail

• not yet tightly integrated in the engineering pipeline

We’ll talk about software visualization, not visual programming

Software Visualization – Methods

Method, with datasets and techniques:

1. Multivariate visualization

• tables: table lenses, parallel coordinates

2. Relational visualization

• trees / hierarchies: hierarchical node-link layouts, treemaps

• general graphs: force-directed layouts, matrix plots

• compound digraphs: bundled layouts, graph splatting

3. Text visualization

• general documents, source code: dense pixel techniques

• diagrams: areas of interest

4. Interaction techniques

• context and focus, multiple views, semantic zooming

Will discuss all these with InfoVis and SoftVis examples…

Software Visualization Pipeline

Software analysis

• software engineering (design, development, testing, maintenance) → software data → data acquisition → internal data → data filtering → enriched data

• analyses involved: static analysis, dynamic analysis, debugging, evolution analysis, graph extraction, metric computation, dataflow analysis, control flow analysis

Software visualization

• enriched data → data mapping (graph layouts, attribute mapping) → visual representations → graphics rendering (graph drawing, texture mapping, antialiasing, interaction) → image → human interpretation → insight, decisions

The two sub-pipelines need to be strongly connected!

1. Software structure visualization

Goal:

• get insight in how a program is structured, from code lines up to modules and packages

• different levels of detail = different visualizations

• levels of increasing complexity: code lines, functions, classes, files, components, packages, applications

• containment relations (usually)

• can also be association relations (e.g. provides, requires, uses, calls, owns, …)

1.1. Code structure visualization

Source code

• show code in structured way, helps understanding purpose

• lexical highlighting: show code type

• indentation: show structure

lexical highlighting (Visual C++ 9.0)

syntax highlighting (Xcode 2.0)

• scalability: limited by font size

• expressivity: typically limited by complexity of lexical constructs

• implemented by most modern development tools

Source code structure

Syntax highlighting

• more structure:

• generalize lexical highlighting to full code syntax

• adapt shaded cushions idea from treemaps to code blocks

[Figure: source code → syntax tree + cushion texture; labels: cushion profile f(x), border size x]

G. Lommerse, F. Nossin, L. Voinea, A. Telea, The Visual Code Navigator: An Interactive Toolset for Source Code Investigation, IEEE InfoVis, 2005

Source code structure

• classical code editor

• indentation shows some structure

Images from CSV tool (www.cs.rug.nl/svcg/SoftVis/VCN)

Source code structure

• blend in structure cushions…

• color shows construct type (functions, loops, control statements, declarations, …)

Source code structure

• more structure cushions…

Source code structure

• cushions generalize syntax highlighting

[Figure: ‘flat’ syntax highlighting vs ‘cushioned’ syntax highlighting]

Source code structure

Brushing

• show details on demand as function of the mouse pointer

• widespread concept in InfoVis

move the mouse somewhere…

Source code structure

Brushing – spotlight cursor

• fade out cushions as function of distance to mouse pointer

• easy to do: blend a radial transparency texture

bring the text around in focus…

Source code structure

Brushing – structure cursor

• highlight (desaturate) cushion under mouse

• emphasizes structure

…or focus on a whole syntactic block

Source code structure

Dense pixel techniques

• how to show 10000 lines of code on one screen?

• adapt table lens idea

• zoom out text view (keep layout)

• replace characters by single pixels

The SeeSoft tool from AT&T [Eick et al. ‘92]

[Figure: source code at usual font size next to the zoomed-out code drawn as pixel lines; color shows code age]

• scales to ~50 KLOC on one screen

• correlations between files become possible

• syntactic structure not emphasized
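The dense pixel idea can be sketched in a few lines. This is a minimal illustration, not the SeeSoft implementation: each code line becomes one pixel row whose start and length follow the text layout, and a per-line attribute (here a hypothetical `recency` value, 0 = oldest) is mapped to a color. The function names and the blue-to-red ramp are assumptions made for this example.

```python
def dense_pixel_rows(lines, recency, max_width=80):
    """Map each code line to one pixel row: (indent, length, recency)."""
    rows = []
    for text, value in zip(lines, recency):
        expanded = text.rstrip("\n").expandtabs(4)
        indent = len(expanded) - len(expanded.lstrip(" "))
        # Clip long lines to the column budget, keep the indentation offset.
        length = min(len(expanded), max_width) - indent
        rows.append((indent, max(length, 0), value))
    return rows


def recency_to_color(value, max_value):
    """Linear ramp from blue (old) to red (recent), as an (r, g, b) tuple."""
    t = 0.0 if max_value == 0 else value / max_value
    return (round(255 * t), 0, round(255 * (1 - t)))
```

A renderer would then draw, for row number `k`, a one-pixel-high segment starting at column `indent` with the given length and color, which keeps the layout of the text while dropping the characters.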

Source code structure

Dense pixel techniques

• good to show data attributes (code age, bugs, quality metrics, …)

• does not show structure

VTK class library

• 1 C++ file

• 10 headers

• ~7000 lines

VTK library: www.kitware.org/vtk

Source code structure

Add structure cushions

• we start seeing things

[Figure labels: source, headers, comment, class, iteration, if, …]

Source code structure

Enhancements

• use two metrics per structure block

• area color: code complexity [McCabe ‘76]

• border color: number of casts

• multiple correlated views

• well-known InfoVis technique

• table lens: find outliers (complex functions)

• code view: get detailed insight

Example

• find most complex functions in two files

• check if they do many typecasts
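The "find the most complex functions" step can be approximated as follows. This is a rough sketch, not what SolidFX does: it works on Python source via the standard `ast` module (the slides analyze C++), and it approximates McCabe's cyclomatic complexity as 1 plus the number of decision nodes. The node selection in `_DECISIONS` is an assumption, and a boolean expression counts once regardless of its number of operands.

```python
import ast

# Node types counted as decision points (an approximation chosen for this sketch).
_DECISIONS = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.BoolOp, ast.IfExp)


def cyclomatic_complexity(func_node):
    """1 + number of decision points found inside one function definition."""
    return 1 + sum(isinstance(n, _DECISIONS) for n in ast.walk(func_node))


def rank_functions(source):
    """Return (name, complexity) pairs, most complex function first."""
    tree = ast.parse(source)
    funcs = [n for n in ast.walk(tree)
             if isinstance(n, (ast.FunctionDef, ast.AsyncFunctionDef))]
    pairs = ((f.name, cyclomatic_complexity(f)) for f in funcs)
    return sorted(pairs, key=lambda p: (-p[1], p[0]))
```

Sorting by the metric plays the role of the table lens here: the outliers end up at the top of the list, and the code view is then used to inspect them in detail.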

A. Telea, L. Voinea, SolidFX: An Integrated Reverse-engineering Environment for C++, ACM SOFTVIS, 2008

Image created with the SolidFX tool (www.solidsourceit.com)

1.2. Module structure

Compound digraphs

• particular type of graphs, very frequent in SoftVis

• two types of edges

• containment – e.g. software hierarchy (files, modules, classes, functions, …)

• association – e.g. software interactions (calls, inherits, uses, provides, requires, …)

• how to visualize this?
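Before choosing a layout, it helps to see how small the underlying data model is. The sketch below is one possible representation, with hypothetical names: containment is stored as a child-to-parent map, associations as a list of typed edges, and `lift` aggregates association edges to the containers at a chosen hierarchy depth (the same aggregation that collapsing nodes in a visualization relies on).

```python
from collections import Counter


class CompoundDigraph:
    def __init__(self):
        self.parent = {}   # containment edges: child -> parent (root maps to None)
        self.assoc = []    # association edges: (source, target, kind)

    def add_node(self, name, parent=None):
        self.parent[name] = parent

    def add_assoc(self, src, dst, kind="calls"):
        self.assoc.append((src, dst, kind))

    def ancestors(self, node):
        """The node itself followed by its containers, up to the root."""
        chain = []
        while node is not None:
            chain.append(node)
            node = self.parent[node]
        return chain

    def lift(self, level):
        """Count association edges between containers at the given depth."""
        def at_level(n):
            chain = self.ancestors(n)      # n ... root
            depth = len(chain) - 1
            return chain[depth - level] if depth >= level else n

        counts = Counter()
        for src, dst, _kind in self.assoc:
            a, b = at_level(src), at_level(dst)
            if a != b:                     # drop edges internal to one container
                counts[(a, b)] += 1
        return dict(counts)
```

For a program with functions nested in files, `lift(1)` answers "which files call which files, and how often", independently of how the result is later drawn.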

Compound digraph of a C Hello World program

Node-link layouts

• (very) bad choice!

• mix containment and association edges

• occlusions, clutter

• layout may change significantly if you

• add/remove a few nodes/edges

• change the layout parameters slightly

• how to teach one to read such a layout?

folders & files

functions

Nested layouts

Principle

• show containment by box spatial nesting

• show associations by node-link diagrams

• SHriMP layout (Simple Hierarchical Multi-Perspective) [Storey and Muller ’95]

• very popular, tens of tool implementations

• limited scalability, clutter

SoftVision tool [Telea et al. ’02]

Creole tool (www.thechiselgroup.org/creole)

Nested layouts

Variations of the principle

• extend the hierarchical DAG layout [Sugiyama et al. ’81] to incorporate nesting

• lay out children containers using original method

• compute container bounding box

• lay out parent level using sizes from children

• route edges using orthogonal paths (eliminate crossings)
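The bottom-up recursion in these steps can be sketched as below. Note the simplification: the per-level layout here is a plain left-to-right row packing standing in for the Sugiyama-style layout, and edge routing is omitted entirely. The `children` mapping, `leaf_size`, and `pad` are assumptions made for this example.

```python
def layout(node, children, leaf_size=(1.0, 1.0), pad=0.5):
    """Return {name: (x, y, w, h)} with children nested inside their container.

    Coordinates are relative to the top-left corner of `node`.
    """
    kids = children.get(node, [])
    if not kids:
        return {node: (0.0, 0.0, *leaf_size)}

    boxes, x, height = {}, pad, 0.0
    for kid in kids:
        # 1. lay out the child container first (recursion = bottom-up)
        sub = layout(kid, children, leaf_size, pad)
        # 2. its bounding box is the size the parent level has to reserve
        _, _, w, h = sub[kid]
        # 3. place the child's whole subtree in its slot inside the parent
        for name, (bx, by, bw, bh) in sub.items():
            boxes[name] = (bx + x, by + pad, bw, bh)
        x += w + pad
        height = max(height, h)

    boxes[node] = (0.0, 0.0, x, height + 2 * pad)
    return boxes
```

Because parents only need the bounding boxes of their children, any per-level layout method can be substituted for the row packing without changing the recursion.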

aiSee tool (www.absint.com/aisee)

• one of the best compound digraph layouts in existence

• clean and clear images, ‘engineering diagram’ look

• can show 2..3 hierarchy levels simultaneously

G. Sander, Graph Layout through the VCG tool, Proc. Graph Drawing, 1995

Practical intro: T. Würthinger, Visualization of Program Dependence Graphs, http://ssw.jku.at/Research/Papers/Wuerthinger07Master

Hierarchically bundled edges

Best existing technique for showing containment and associations

• application: modularity / coupling assessment

• hard to quantify in a metric…

• …but extremely easy to see!

Modular system

• blue = caller, red = called

• all functions in the yellow file call the purple class

• green file has many self-calls

Monolithic system

• blue = virtual, green = static functions

• red class has many virtual calls (possible interface class)

Decoupled system

• many intra-module calls

• few inter-module calls

• typical for library software

Hierarchically bundled edges

• if associations are correlated with structure, HEBs show this clearly!

• images are intuitive for most users

• edge overlaps instead of edge crossings!

• overlaps communicate information

• easy to visually follow a bundle

• built-in scalability (bundling = free aggregation)
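Why bundling follows the structure can be seen from how a bundled edge is routed: in hierarchical edge bundling, an association edge is drawn as a smooth curve guided by the hierarchy path between its endpoints, which runs up to their lowest common ancestor and back down. The sketch below computes only that guiding path; the curve drawing is left out, and the function names and the `parent` mapping are assumptions for this example.

```python
def path_to_root(node, parent):
    """The node followed by its containers up to the root."""
    chain = [node]
    while parent.get(node) is not None:
        node = parent[node]
        chain.append(node)
    return chain


def bundle_control_path(src, dst, parent):
    """Hierarchy nodes from src up to the lowest common ancestor, then down to dst."""
    up = path_to_root(src, parent)
    down = path_to_root(dst, parent)
    # Strip the shared part of both paths above the lowest common ancestor.
    while len(up) > 1 and len(down) > 1 and up[-2] == down[-2]:
        up.pop()
        down.pop()
    # `up` ends at the common ancestor; append `down` reversed, without repeating it.
    return up + down[-2::-1]
```

Edges whose endpoints live in the same two modules share most of their control path, so their curves overlap into one bundle, which is the "free aggregation" mentioned above.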

Structure and associations of C# system

Enhancements

• node and edge color coding

• edge alpha blending

• saturated = high density = many edges

• transparent = low density = few edges

• aggregation and navigation

• collapse and expand nodes

• replace multiple edges by single one

• shaded hierarchy cushions

• emphasize structure

Image generated with SolidSX tool (www.solidsourceit.com)

Multiple views

Views in the SolidSX tool (www.solidsourceit.com): code view, tree browser, treemap, table lens, HEB view

Enhancements

Image-based edge bundles (IBEB)

• produce a simplified view of system modularity

original HEB

• A is connected to B…

• …but how?

new IBEB

• A1 is connected to B1…

• A2 is connected to B2

A. Telea, O. Ersoy, Image-based edge bundles: Simplified visualization of large graphs, CGF 2010

1.3. Attributes and structure

Use the 3rd dimension: city metaphor

• xy plane: structure (treemap technique)

• z axis: metrics

• color + xy size : extra metrics

JHotDraw system (www.jhotdraw.org)

visualized with CodeCity (www.usi.inf.ch/phd/wettel/codecity.html)

R. Wettel, M. Lanza, Program comprehension through software habitability, IEEE ICPC, 2007

see also Codstruction tool (codstruction.wordpress.com)

1.4. Architecture Diagrams

Diagrams

• design or architectural information

• can also be generated from source code (reverse engineering)

UML (Unified Modeling Language)

• most widely accepted (but not the only) diagram notation in the engineering community

• class

• object

• sequence

• use case

• statecharts

• activity

• package

• component

• deployment

• variants of node-link layouts

• special semantics (icons, positions, text annotations)

• most often drawn by hand

• UML 1.0 is the best known and most used

• UML 2.0 and beyond: more powerful but quite complex

The UML Notation Standard (www.uml.org)

UML Diagrams

• add software metrics to support e.g. software quality analysis

• show several metrics per element with icons

• metric encoded as icon size, shape, color

• compare metrics of the same element

• compare metrics across elements

The MetricView tool

A: visualization

B: UML browser

C: metric layout

D: metric data

MetricView tool (www.cs.rug.nl/svcg/SoftVis/ArchiVis)

UML Diagrams

• smoothly navigate between structure and metrics

• use transparency

[Figure: blend from ‘diagram: opaque, metrics: transparent’ (structure) to ‘diagram: transparent, metrics: opaque’ (metrics)]

UML Diagrams

• 2D vs 3D again

• same idea as CodeCity but

• use different xy layout

• can also show associations

• flip scene to smoothly navigate from 2D to 3D view

2D view (=3D seen from above)

3D view

Metric lens

• embed a small table lens within each diagram element

• scroll/sort columns in sync for all elements

Graphical editor

• areas = subsystems

• LOC and complexity metrics

• most classes have low metrics

• 1 large, complex class (hard to maintain code!)

• luckily, not in the system core

Area metrics

• show areas of interest: groups of components sharing some property

• show metrics defined on areas of interest (complexity, testability, portability, size, …)

• continuous rainbow colormap: continuous (smooth) metrics

• discrete colormaps: discrete metrics

2. Software Behavior Analysis

What is software behavior?

• the collection of information that a running program generates

• also called a program trace

• data: the values generated by the program

• internal values (e.g. local variables, stack, registers, …)

• external values (e.g. graphical/console input/output, log files, …)

• control: the states the program passes through

• data collection

• log files

• instrumentation (debuggers, profilers, code coverage analyzers, …)

Use cases

• optimization: determine performance bottlenecks (profiling)

• testing: check program correctness vs specifications

• debugging: find incorrect program constructions leading to test failures

• education: learn algorithm behavior by seeing its execution

• research: test/refine new algorithms

Behavior Analysis Challenges

E. Dijkstra, Comm. ACM 11(3), 1968

Program trace visualization

Callstack view

• visualize call stack depth over time with additional metrics (e.g. cache hits/misses)

• like Jinsight’s per-thread execution view

• shaded cushions: ‘ruler’ for the y axis

• antialiasing: same idea as for the timeline view
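The data behind a callstack view is a depth-over-time series, which can be derived from a trace of function enter/exit events. The sketch below assumes such an event format, `(time, kind, function)`, purely for illustration; real profilers and instrumentation tools each have their own trace formats.

```python
def stack_depth_over_time(events):
    """events: iterable of (time, kind, function), with kind "enter" or "exit".

    Returns a list of (time, stack depth right after the event).
    """
    stack, samples = [], []
    for t, kind, func in sorted(events, key=lambda e: e[0]):
        if kind == "enter":
            stack.append(func)
        elif kind == "exit":
            if not stack or stack[-1] != func:
                raise ValueError(f"unbalanced exit of {func!r} at time {t}")
            stack.pop()
        else:
            raise ValueError(f"unknown event kind {kind!r}")
        samples.append((t, len(stack)))
    return samples
```

Plotting depth against time gives the outline of the view; additional metrics such as cache misses would be sampled on the same time axis and drawn alongside.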

[Figure: callstack view with cache L1 miss and cache L2 miss metrics; x axis: time (samples)]

Program trace-and-structure visualization

• program trace: icicle plot (as before)

• program structure (treemap)

• lay out the two on top of each other

• correlate them by means of mouse interaction

time (samples)

J. Trümper, A. Telea, ViewFusion: Correlating Structure and Activity Views for Execution Traces, TPCG 2012

Tool implementation: www.softwarediagnostics.com

Visualizing software deployment

Gammatella

• deployment yields multiple copies of a given software system

• collect runtime data from actual deployed copies

• visually analyze it to

• understand platform-specific problems

• detect potential bottlenecks, bugs, optimization possibilities, …

execution bar

file view

code view

system view

metric view

Memory visualization

• $N$ concurrent processes $p_1, \dots, p_N$

• $p_i$ allocates blocks $a_{ij} = (mem_{start}, t_{alloc}, mem_{end}, t_{dealloc})$, with $t_{alloc} < t_{dealloc}$ and $mem_{start} < mem_{end}$

• blocks do not overlap in space but may overlap in time

Visualization usage

• understand how memory allocators perform in practice
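The block model above maps directly to rectangles in a (time, address) plane. The sketch below is a hypothetical data structure, not the MemoView implementation: it stores one tuple per block, computes the fraction of the heap in use at a given time, and checks the invariant that two blocks never occupy the same addresses at the same time. Intervals are treated as half-open, which is an assumption of this example.

```python
from typing import NamedTuple


class Block(NamedTuple):
    pid: int          # process ID (used for color in the visualization)
    mem_start: int
    t_alloc: float
    mem_end: int
    t_dealloc: float


def alive(block, t):
    """True if the block is allocated at time t (half-open lifetime)."""
    return block.t_alloc <= t < block.t_dealloc


def memory_fill(blocks, t, heap_size):
    """Fraction of the heap occupied at time t."""
    used = sum(b.mem_end - b.mem_start for b in blocks if alive(b, t))
    return used / heap_size


def overlapping_pairs(blocks):
    """Pairs of blocks that overlap in both address space and time."""
    bad = []
    for i, a in enumerate(blocks):
        for b in blocks[i + 1:]:
            in_space = a.mem_start < b.mem_end and b.mem_start < a.mem_end
            in_time = a.t_alloc < b.t_dealloc and b.t_alloc < a.t_dealloc
            if in_space and in_time:
                bad.append((a, b))
    return bad
```

An empty result from `overlapping_pairs` confirms that the rectangles can be drawn without hiding each other, and `memory_fill` sampled over time yields the fill curve.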

Memory visualization with the MemoView tool

[Figure labels: address space, time, memory fill; color = process ID]

Application

• embed previous visualization in a matrix, one cell per bin

• color = waste

• application: Symbian OS, Nokia

Execution trace-and-structure visualization

Execution traces

$T = \{ c_i \}$, $c_i = (f_{caller}, f_{callee}, t)_i$, $f \in \mathit{ProgramFunctions}$, $t \in [0, t_{max}]$

• set of function-call events

Visualization

• hierarchical edge layout (program structure)

• massive sequence view (calls over time)

execution traces in the ExtraVis tool

ExtraVis tool: www.win.tue.nl/~dholten/extravis

Massive sequence view

• combines structure with calls

Top

• system structure (icicle plot)

Bottom

• calls over time

• one call $c = (f_{caller}, f_{callee}, t)$ → one line $(x_{start}, y, x_{end}, y)$

• $y = t$

• $x_{start}$ = position of $f_{caller}$ in structure view (red)

• $x_{end}$ = position of $f_{callee}$ in structure view (green)

• similar / regular call patterns visible

• zoomable view

• importance-based sampling, like in MemoView
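The mapping from calls to horizontal lines is simple enough to write down directly. This is a minimal sketch, not the ExtraVis code: `x_pos` is an assumed lookup from function name to its horizontal position in the structure view, and time is rescaled to the view height so that the whole trace fits on screen (with `height == t_max` this reduces to y = t). Sampling for very long traces is not covered.

```python
def sequence_view_lines(trace, x_pos, t_max, height):
    """Map calls (f_caller, f_callee, t) to line segments (x_start, y, x_end, y)."""
    lines = []
    for f_caller, f_callee, t in trace:
        y = height * t / t_max           # time runs along the vertical axis
        lines.append((x_pos[f_caller], y, x_pos[f_callee], y))
    return lines
```

Coloring each segment with a gradient from caller to callee then encodes the call direction, and repeated program phases show up as repeated line patterns.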

Application

JHotDraw graphics editor

• create new drawing

• insert 5 figures

• repeat above 3 times

• use sequence view to find execution of the above operations

• verify results on source code with the HEB view

[Figure labels: start-up, new drawing, insert 1 to insert 5, repeat 1 to repeat 3, cleanup]

3. Software Maintenance and Evolution

• five main phases

[Figure: lifecycle timeline; labels: delivery, time, ‘size? cost?’ (shown twice)]

“Software development does not stop when a system is delivered but continues throughout the lifetime of the system” [Sommerville, 2004]

Software evolution

• the set of maintenance activities done after the 1st software release until its lifetime end

• duration and cost of maintenance are far larger than those of the first four phases

• 80% of the lifecycle costs, 50% of which are understanding

• hence, an excellent terrain for SoftVis!

Costs of Maintenance

• defect removal cost as function of lifecycle phase [Balaram ‘04]

• relative cost of correcting an error [Boehm et al ‘00]

“This planet has invested so far $2300 billion in the maintenance of COBOL programs” [Foster ’91]

Relative maintenance cost

• 60% [Hanna 1993]

• 70% [Lientz & Swanson 1980]

• 80% [Brown 1990, Coleman 1994, Pfleeger 2001]

• 90% [Sommerville 2004]

• apparently there is a steady rise…

S. Balaram (senior VP HP R&D, Bangalore) Building software with quality and speed, www.symphonysv.com

B. Boehm, C. Abts, S. Chulani, Software development cost estimation approaches – A survey, Ann. Soft. Eng., 2000

Maintenance Pipeline

[Diagram of the maintenance pipeline]

• end users and developers submit bugs, improvements, features, … as change requests to the change management tracking system

• analysis / decision-making (focus of evolution SoftVis): estimate cost vs benefit, estimate needed effort, find affected code; covers change management, impact analysis, release planning

• outcome: describe changes, write change plan

• standard development pipeline: revise current system, design changes, code changes, test changes, system release

• feedback: the system is used in real life… (new bug); environment and requirements evolve… (new requirement)

Software Evolution

Lehman’s 8 Laws:

• The Law of Continuing Change (1974)

• The Law of Increasing Complexity (1974)

• The Law of Self Regulation (1974)

• The Law of Conservation of Organizational Stability (1980)

• The Law of Conservation of Familiarity (1980)

• The Law of Continuing Growth (1980)

• The Law of Declining Quality (1996)

• The Feedback System Law (1996)

Derived from feedback system theory

Validated empirically in practice over many years

M. Lehman, J. Ramil, P. Wernick, D. Perry, W. Turski, Metrics and Laws of Software Evolution - The Nineties View, Proc. 4th Intl. IEEE Software Metrics Symposium (METRICS '97), 1997, available online at: http://www.ece.utexas.edu/~perry/work/papers/feast1.pdf

Laws of Software Evolution – Layman’s vs Lehman’s view

Essentially the 8 laws say this:

• Software must and will change whether you like it or not

• Maintenance keeps being pumped in unless you want a disaster

• Stuff that matters (size, quality, complexity, …) changes slowly on the average

• Understanding effort keeps being pumped in unless you want a disaster

• Things will get worse unless you keep pumping in effort to fix them

• It’s not simple – we actually don’t know what happens out there!

Hence

• SoftVis and evolution analysis do have a case!

My own view

• SoftVis has its best chance to be meaningful in software evolution and maintenance

– highest benefits and return-on-investment

– by far largest datasets

– we don’t really know how to make design and development more efficient

So where are we?

Are you bored?

• good. This is the real world out there in software engineering

• we must intimately understand this world if we want to use SoftVis to improve it

• if not, we’ll just generate pretty & useless pictures

So where does SoftVis go?

• impact analysis

• quality analysis

• decision making support

So where are the pretty pictures?

• they come up next

• but remember: they must be focused to serve a real purpose

• lean engineering principle applies:

– diminish waste

– increase value

Software repositories

• also called source control management (SCM) systems

• central tool for storing change

– revisions

– files, folders

– delta storage to minimize required space (using e.g. cmp or diff functions)

– typically semantics-agnostic: just store changes in files

– collaborative work (shared check-in, check-out, permissions)

– more advanced systems also store change requests (e.g. CM/Synergy)

– work with command-line, web, or IDE interfaces

Examples

• CVS

– pretty old and outdated; revisions are per-file (physical, not logical); no atomic commits; …

• Subversion (SVN)

– probably the best (known) open-source SCM; atomic commits; efficient binary file support; …

• CM/Synergy

– automated build; change request support; however, more complex than CVS/Subversion

• ClearCase

– build auditing; dependency management; high scalability

• Git, Mercurial, Visual SourceSafe, …

3.1. Evolution at line level

• take one file in a repository

• visualize its changes across two versions

[Figure labels: line groups, version detail]

• unit of analysis: line blocks (as detected by diff)

• shows insertions, deletions, constant blocks, drift

• cannot handle more than 2 versions
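The line blocks used as unit of analysis can be obtained with any diff implementation. As a small illustration (using Python's standard `difflib` rather than the Unix `diff` tool a repository would typically call), the sketch below classifies the blocks between two versions of a file. The labels "constant", "inserted", "deleted", and "modified" are names chosen for this example.

```python
import difflib

_KINDS = {"equal": "constant", "insert": "inserted",
          "delete": "deleted", "replace": "modified"}


def line_blocks(old, new):
    """Classify line blocks between two versions, each given as a list of lines.

    Returns (kind, (old_start, old_end), (new_start, new_end)) tuples,
    with half-open line ranges.
    """
    matcher = difflib.SequenceMatcher(a=old, b=new, autojunk=False)
    return [(_KINDS[tag], (i1, i2), (j1, j2))
            for tag, i1, i2, j1, j2 in matcher.get_opcodes()]
```

Drift is visible in the result as a "constant" block whose line range differs between the old and the new version, because lines were inserted or deleted above it.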

Evolution at line level

• visualization tool: CVSscan

• correlated views, details on demand

• code metric bar: code size change per separate line

• time metric bar: code size change in time

• detailed code view around mouse cursor

• move mouse in code view: show code that will be inserted / was removed from current location

L. Voinea, A. Telea, J. J. van Wijk, CVSscan: Visualization of source code evolution, ACM SOFTVIS, 2005

Evolution at line level – several files

• extend idea to a few (1..4) files

• stack several line-level views, one per file

• line color = construct type

• helps doing cross-file correlations

• large size jumps = code refactoring

• less wavy patterns = stable code

• horizontal patterns = unchanged code

[Figure: one view per file; axes: lines, time (version); color legend: comments, function bodies, strings, function headers]

Multiscale visualization

• we often want to see files with similar evolution

• define a file-level evolution similarity metric

• good choice: similarity of change moments

• cluster all files using this metric

• bottom-up agglomerative clustering

• visualize cluster tree and user-selected ‘cut’ in the tree
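The clustering steps can be sketched as follows. Two choices here are assumptions of this example, not taken from the slides: the "similarity of change moments" is measured as the Jaccard similarity of the sets of commit times of two files, and clusters are compared with average linkage. The returned merge list is the cluster tree in bottom-up order.

```python
def change_similarity(times_a, times_b):
    """Jaccard similarity of two sets of commit moments."""
    a, b = set(times_a), set(times_b)
    return len(a & b) / len(a | b) if a | b else 1.0


def agglomerate(change_times):
    """Bottom-up clustering of files; returns merges as (cluster, cluster, similarity)."""
    clusters = [frozenset([f]) for f in sorted(change_times)]

    def linkage(c1, c2):  # average pairwise similarity between two clusters
        pairs = [(x, y) for x in c1 for y in c2]
        total = sum(change_similarity(change_times[x], change_times[y])
                    for x, y in pairs)
        return total / len(pairs)

    merges = []
    while len(clusters) > 1:
        # pick the most similar pair of clusters and merge it
        s, i, j = max(((linkage(c1, c2), i, j)
                       for i, c1 in enumerate(clusters)
                       for j, c2 in enumerate(clusters) if i < j),
                      key=lambda item: item[0])
        merges.append((clusters[i], clusters[j], s))
        merged = clusters[i] | clusters[j]
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)] + [merged]
    return merges
```

A user-selected cut then corresponds to stopping the merge sequence at a similarity threshold; the clusters present at that point are the ones drawn as cushions.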

[Figure: cluster tree drawn as an icicle plot over clusters C1 to C5, color = cluster cohesion, level of detail = size; visualization of the selected cut: one cushion per cluster]

3.2. Evolution at file level

Trend Analyzer (SolidTA)

www.solidsourceit.com

• 2D dense pixel layout

• x = time, y = files; one file = one horizontal band; one version = one band segment

• color = version author ID

[Figure: x axis: time (version); files sorted by order in folders; project activity shown as number of commits]

Evolution at file level

• which are the most changed files, and who worked in those?

• sort files on activity, color on author ID
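The data preparation for this view is a grouping and a sort. The sketch below assumes a simplified commit record, `(time, author, files)`, which a real tool would extract from the repository log; it is an illustration of the idea, not SolidTA's implementation.

```python
from collections import defaultdict


def file_bands(commits):
    """commits: list of (time, author, [files]).

    Returns [(file, [(time, author), ...])], most frequently changed file first.
    """
    versions = defaultdict(list)
    for time, author, files in sorted(commits, key=lambda c: c[0]):
        for f in files:
            versions[f].append((time, author))
    # activity = number of versions; ties are broken by file name
    order = sorted(versions, key=lambda f: (-len(versions[f]), f))
    return [(f, versions[f]) for f in order]
```

Each returned file becomes one horizontal band, and each `(time, author)` entry starts a band segment colored by author, so the most changed files and their authors appear at the top.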

[Figure labels: files sorted by activity; most active files]

More on SolidTA: www.solidsourceit.com

Evolution at file level

• what type of code came when in the project’s lifetime?

• sort files on age, color on file type

[Figure labels: files sorted by age; oldest files; activity ~ age…; color legend: .tcl, .py, .h, .cxx, .in, .pdf]

Evolution at file level

• which are the bug reports / fixes and how do they correlate?

• color on presence of keywords “bug” and “fix” in commit logs
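The keyword-based coloring boils down to a four-way classification of each commit message. The sketch below is one plausible way to do it, with assumptions stated: matching is case-insensitive and anchored at word starts, so "fixed" and "bugs" count while "prefix" and "debug" do not. The actual matching rules of the tool are not given in the slides.

```python
import re

_BUG = re.compile(r"\bbug", re.IGNORECASE)
_FIX = re.compile(r"\bfix", re.IGNORECASE)


def classify_log(message):
    """Return one of: "bug and fix", "bug", "fix", "no hits"."""
    has_bug = _BUG.search(message) is not None
    has_fix = _FIX.search(message) is not None
    if has_bug and has_fix:
        return "bug and fix"
    if has_bug:
        return "bug"
    if has_fix:
        return "fix"
    return "no hits"
```

Such a heuristic is cheap but noisy, so the resulting picture is best read as a hint about where debugging activity concentrates rather than as an exact bug count.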

[Figure labels: files sorted by age; color legend: no hits, “bug”, “fix”, “bug” and “fix”]

Multiscale visualization

What is the risk of releasing the software now?

• show bug-reports density using texture splatting on the evolution view

• group files by change similarity

• color by directory name to correlate file similarity with file location

• largest debugging activity localized to a single folder

• changes in that folder do not propagate outside (change-similar files are in 1 cluster)

• hence, system is well-structured for localized maintenance!

L. Voinea, A. Telea, How do changes in buggy Mozilla files propagate? ACM SOFTVIS 2006

3.3. Project level visualization

• upper-level decision makers do not have time to look at the evolution of each file

• visualize aggregated evolution metrics at project level

Team risk analysis

• software projects are done by developer teams over years

• find if team composition is risky for the project’s maintenance

• extract project evolution from software repositories

• compute impact of each developer over each file / function / …

• visualize impact evolution in an aggregated manner

Project level visualization

• aggregate impact (#files modified by each developer) over time

• visualize resulting time series using the ‘theme river’ metaphor [Havre et al ‘02]
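The aggregation step can be sketched as follows, assuming commits are available as `(time, author, files)` records with integer timestamps and that impact is the number of distinct files a developer modified in each time bin. Both the record format and the binning are assumptions of this example.

```python
from collections import defaultdict


def impact_series(commits, bin_size):
    """commits: list of (time, author, [files]) with integer times.

    Returns {author: [number of distinct files modified per time bin]}.
    """
    if not commits:
        return {}
    n_bins = max(t for t, _, _ in commits) // bin_size + 1
    touched = defaultdict(lambda: [set() for _ in range(n_bins)])
    for t, author, files in commits:
        touched[author][t // bin_size].update(files)
    return {author: [len(s) for s in bins] for author, bins in touched.items()}
```

Stacking the per-author series on top of each other along the time axis gives the layers of the river: a layer that dominates the total thickness indicates a developer who owns most of the changes.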

Project A (open-source)

• software grows in time

• impact is balanced over most developers

Project B (commercial)

• software grows in time at about the same rate

• but one developer owns most of the code

• what if this person leaves the team?!

More details on SolidTA: www.solidsourceit.com

4. Software Visual Analytics

“The science of analytical reasoning facilitated by interactive visual interfaces”

[Wong and Thomas ’04, Thomas and Cook ’05]

The Sensemaking Loop

• going from ‘raw’ data to meaning (semantics)

• data → hypothesis → (in)validation → conclusions

• in simple terms: combine analysis and visualization

P. Wong, J. Thomas, Visual analytics, IEEE Comp. Graphics & Applications, 24(5), 2004

J. Thomas, K. Cook, Illuminating the Path: The R&D Agenda for Visual Analytics, NVAC, 2005

Visual analytics vs Software visualization

Related but not identical (each row: SoftVis vs Visual Analytics)

• Goal: present data vs solve problems

• Methods: visualization vs visualization and analysis

• Challenges: visual clarity, scalability, interaction effectiveness, … vs assist the user on the whole path from raw data to finding a solution

• Techniques: mapping, layout, interaction vs data mining + all SoftVis techniques

• History: well-established (since ~1995) vs relatively new (since ~2002)

Integration

• Data mining + SoftVis = Software Visual Analytics

www.cs.rug.nl/svcg

Software Visual Analytics Challenges

So Software Visual Analytics = SoftVis + data mining; What’s the big deal?

Data size

• exabytes or more (visualize entire ‘data warehouses’)

• SoftVis techniques do not scale to this size

Data heterogeneity

• “any type” of data → how to capture this in a uniform data model?

• missing, incomplete, incorrect, conflicting data → how to analyze / visualize this?

Multidisciplinary

• databases, data mining, SoftVis: traditionally separate communities

• understanding it all: too much for any single community

Infrastructure

• need integral / longitudinal solutions, not small, isolated applications / prototypes

• development effort becomes a major bottleneck (how to do this as a researcher?)

• how to evaluate integral solutions within a limited budget?


Software Visual Analytics

Visual Analytics applied to Software Engineering

graphs, dense pixel displays, charts, …

reasons for process/product quality degradation, bugs, low performance, low productivity, what-if questions, …

problem model (capture essentials from refined data stream)

high-level analysis (static analysis, clone detection, quality metrics)

low-level analysis (parsing, instrumentation, repository data mining)

source code, design documents, program execution, repositories

Example 1: Build Optimization

1. Context

• major embedded software company (NASDAQ 100)

• industrial code base: 17.5 MLOC of C code

• modified daily by >500 developers worldwide

2. Problem

• high build time (>9 hours)

• modifying a header causes very long recompilations

• testing becomes very hard; perfective maintenance (refactoring) nearly impossible

3. Questions

• why is the build time so long?

• what impact does a code change have on the build time?

• how is the impact of a change spread over the entire code base?

• how to refactor the code to improve modularity and build time?

A. Telea, L. Voinea, Visual Software Analytics for the Build Optimization of Large-Scale Software Systems, Comp. Statistics, 2010, to appear

Build Optimization

Three analyses – three tools in a unified toolset

Data flow: CM/Synergy repository → extracted data → build cost model

• TableVision tool: build process analysis (why is the build slow?)

• INavigator tool: dependency analysis (how does a code change affect build time?)

• IRefactor tool: refactoring analysis (how to rewrite code to improve build time?)

Gathering Raw Data

• measure build time using UNIX tools time(x)

• measured result: build time is large, CPU time is small

• build time (large) = CPU (small) + I/O + network (large) + paging (small *) + other processes (small *)

• build is I/O bound!

(figure labels: preventive actions, corrective actions)

* assume no other CPU-intensive processes besides compilation
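The wall-clock versus CPU-time comparison can be sketched in a few lines. This is an illustrative stand-in for the UNIX timing tools mentioned on the slide, not the measurement setup used in the study; it assumes a UNIX-like system (the `resource` module is not available on Windows), and the measured command is a placeholder.

```python
import resource
import subprocess
import sys
import time


def measure(cmd):
    """Return (wall_seconds, cpu_seconds) spent by a child command.

    CPU time is user + system time of waited-for child processes.
    A wall time much larger than the CPU time hints at an I/O-bound
    (or network-bound) step rather than a compute-bound one.
    """
    before = resource.getrusage(resource.RUSAGE_CHILDREN)
    start = time.perf_counter()
    subprocess.run(cmd, check=True)
    wall = time.perf_counter() - start
    after = resource.getrusage(resource.RUSAGE_CHILDREN)
    cpu = (after.ru_utime - before.ru_utime) + (after.ru_stime - before.ru_stime)
    return wall, cpu


# Placeholder command that mostly waits, standing in for one compilation step.
wall, cpu = measure([sys.executable, "-c", "import time; time.sleep(0.2)"])
```

In a real build, `cmd` would be the compiler invocation for one translation unit, measured on an otherwise idle machine as the footnote above requires.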

Sensemaking: First Steps

• simple histogram of build time (figure axes: time (sec), starting at 0, per translation unit)

Build time depends significantly on the translation unit!

A useful build cost model must consider the per-unit build cost and not only the number of translation units

Build Cost Model – First Attempt

Build cost: “how much it costs to build a file”

• sources: number of lines of code in the source + (in)directly included headers

• binaries: negligible (linking is cheap)

• headers: zero (headers don’t get compiled)

Build impact: “how much it costs to rebuild the system when a file is modified”

• sources: build cost of the source itself

• headers: number of sources using that header

* both application and system headers are considered

Example (figure): the libraries (lib) have BC = 0; the two sources (c) have BC = 3, BI = 3 and BC = 2, BI = 2; the three headers (h) have BI = 1, BI = 2 and BI = 2

Build Cost Model – Validation

(figure: headers sorted on the model’s impact, from low-impact to high-impact, showing the model’s impact next to the build time measurements)

• the 12th highest-impact header (reality) is classified as 21st (model)

• model is close to reality but not perfect

• deviations are important!

More details on SolidBA: www.solidsourceit.com

Build Cost Model – Refinement

Build cost: “how much it costs to build a file”

• sources: number of (in)directly included headers

• binaries: negligible (linking is cheap)

• headers: zero (headers don’t get compiled)

Build impact: “how much it costs to rebuild the system when a file is modified”

• sources: the build cost of the source itself

• headers: sum of build costs of all sources including the header (in)directly

Example (figure): the libraries (lib) have BC = 0; the two sources (c) have BC = 3, BI = 3 and BC = 2, BI = 2; the three headers (h) have BI = 3, BI = 5 and BI = 5
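The two header-impact definitions can be compared on a tiny include structure. The sketch below is an illustration under assumptions: the file names and the include sets are hypothetical, chosen so that the results agree with the BC / BI values of the slide examples, and each include set is assumed to already hold all (in)directly included headers.

```python
def first_model_impact(includes):
    """First model: BI of a header = number of sources using it."""
    impact = {}
    for headers in includes.values():
        for header in headers:
            impact[header] = impact.get(header, 0) + 1
    return impact


def refined_model(includes):
    """Refined model.

    BC of a source = number of (in)directly included headers.
    BI of a header = sum of the build costs of all sources including it.
    """
    cost = {source: len(headers) for source, headers in includes.items()}
    impact = {}
    for source, headers in includes.items():
        for header in headers:
            impact[header] = impact.get(header, 0) + cost[source]
    return cost, impact


# Hypothetical include sets (transitive closure assumed to be precomputed).
includes = {
    "c1.c": {"h1.h", "h2.h", "h3.h"},
    "c2.c": {"h2.h", "h3.h"},
}
first = first_model_impact(includes)
cost, refined = refined_model(includes)
```

Here `first` yields impacts 1, 2, 2 and `refined` yields 3, 5, 5 for the three headers, with source costs 3 and 2: the refined model separates headers that are used by equally many sources but pull in sources of different cost.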

Build Cost Model 2 – Validation

(figure: headers sorted on the first model’s impact, from low-impact to high-impact, showing the refined model’s impact, the first model’s impact and the build time measurements)

• refined model classifies outlier correctly

• refined model delivers same header-order (in terms of impact) as actual measurements

Build Cost Model 2 – Validation

Let’s look at the whole picture

(figure: actual time measurements, first model and refined model, plotted per header file)

• refined model nicely matches reality, including subtle ‘outliers’

• why is this so? (see next slide)

Build Cost Model 2 – Validation

Analyze deeper:

• compilation cost dominated by I/O (preprocessing headers)

• I/O cost dominated by file opening/closing on this platform

• hence the justification of impact = total # of opened headers

Conclusions

To reduce build time, we should:

• either massively accelerate the network: highly costly / complex

• or reduce per-header build impact: header impact analysis

• or reduce impact of change on build time: header refactoring

System-wide impact analysis

1. Find which subsystems are expensive to build

2. For a subsystem, find which headers have high build impact

3. Zoom in to highest impact headers

4,5. For a high-impact header, see how its impact spreads over sources

6. For a header, see its cost breakdown over its include-set

(figure: tool views numbered 1 to 6, matching the steps above)

Subsystem-level impact analysis

Method

• color system tree by cost (blue=low, red=high)

• select desired subsystem

• right panel shows build impact for each header / source in that subsystem

Findings

• most headers have a low build impact

• however, a few have a very high impact

• touching those incurs a high build cost!

– because they are used in many sources

– because they include many headers

Refactoring analysis

• OK, we have a high-impact header h: how easy is it to reduce that impact?

• visualize the build cost distribution of h over the sources which use it

Case 1: easy refactoring

• build cost spread unevenly over the targets including selected header h

• to decrease cost due to h, we only need to change a few targets

build impact of h is located mainly in one single place!

Case 2: difficult refactoring

• build cost spread evenly over the targets including selected header h

• to decrease cost due to h, we need to change almost all targets

(figure labels: selected high-impact header, build impact of h)

Refactoring analysis - Refinement

• not all headers change equally often (e.g. system headers)

• new metrics:

• build impact * change frequency

• impact distribution: impact (%) of a header contained in the 10% most expensive of its targets

• easy & quick to use

(figure: headers sorted by impact*change)

• flat, low distribution (~15%): impact is spread uniformly over all targets. Hence, we cannot improve by refactoring a few targets

• skewed distribution (50%): half of impact is concentrated in 10% most expensive targets. Hence, refactoring these is an interesting option
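The two metrics can be sketched as below. This is a minimal illustration, not the tool’s implementation: the function names, the rounding rule for “10% of the targets” and the sample cost values are assumptions.

```python
def weighted_impact(build_impact, change_frequency):
    """Build impact weighted by how often the header actually changes."""
    return build_impact * change_frequency


def impact_distribution(target_costs, top_percent=10):
    """Share of a header's impact held by its most expensive targets.

    target_costs: build cost of each target that includes the header.
    Returns a fraction in [0, 1]; at least one target is always counted.
    """
    costs = sorted(target_costs, reverse=True)
    total = sum(costs)
    if total == 0:
        return 0.0
    # Integer ceiling of top_percent% of the number of targets.
    k = max(1, (len(costs) * top_percent + 99) // 100)
    return sum(costs[:k]) / total


# Invented sample data: 20 targets each.
flat = impact_distribution([10] * 20)
skewed = impact_distribution([450, 450] + [50] * 18)
```

With these numbers `flat` is 0.1 (impact spread uniformly, no cheap refactoring) and `skewed` is 0.5 (half of the impact sits in the two most expensive targets).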

Refactoring support

• OK, we found a high-impact header; how to decide a refactoring plan?

• show dependencies between a header and its clients using a hierarchical DAG layout

Example 1: MIXTmet.h, used by 38 sources, high impact

• build impact due to direct header inclusion

• hard to decrease via refactoring

Example 2: WS_support.h, used by 48 sources, high impact

• build impact channeled via one intermediate header: WS_sim1_support.h (marked * in the figure)

• simpler refactoring may be possible

Refactoring support (2)

• say we want to include a header: is this potentially expensive?

• show header’s own include graph colored by build impact

Example: TDMD_types.h, used by 30 sources

• not a high-impact header itself

• but it includes high-impact headers!

• hence using this header introduces potentially expensive changes

(figure labels: cdefs.h, pyconfig.h, TDMD_types.h)

Visual Tool: INavigator

Refactoring support (3)

How much costlier does the system build become if we add an #include?

• select a “source” header – the one in which we want to #include

• select a “destination” header – the one to be #included

• show the build cost increase

Example: What if we #include DNCHUI_chset.h in TDMD_types.h?

(figure labels: source, target)

build impact increases from 9633 to 9783, i.e. 1.5%

Refactoring support (4)

• previous methods OK for manual header-by-header refactoring only

How to refactor a large system?

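• system $S = \{f_i\}_i$, $S = \mathrm{Headers} \cup \mathrm{Sources}$

• header $h_i \in \mathrm{Headers}$, $h_i = \{s_j\}_j$, $s_j \in \mathrm{Symbols}$ (function declarations, variables, types, macros, …)

• include relations: $\mathrm{inc} : S \to \mathcal{P}(\mathrm{Headers})$, $\mathrm{inc}(f) = \{h_i \mid f \text{ includes } h_i\}$

• symbol use relations: $\mathrm{use} : \mathrm{Symbols} \to \mathcal{P}(\mathrm{Headers})$, $\mathrm{use}(s) = \{h_i \mid s \text{ is used by } h_i\}$

• in typical systems, not all symbols $s_j \in h$ in a header are used together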

Automatic refactoring idea

• find high-impact header h (see last slides)

• split $h$ into $h_1$, $h_2$ with $h_1 \cup h_2 = h$ by putting symbols used together in the same $h_i$

• recursively split $h_1$, $h_2$

• replace $\mathrm{inc}(h)$ by $\mathrm{inc}(h_1)$ and/or $\mathrm{inc}(h_2)$

Refactoring support (4)

• intuitively: put symbols used together (by many sources) in same header

• include newly created headers instead of original ‘monolithic’ one

• why this is good

• decrease build costs (by decreasing the included code)

• decrease build impact (by decreasing the number of included headers)
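The intuition of grouping symbols that are used together can be sketched with a deliberately simple rule: symbols with an identical set of users end up in the same new header. This is a simplified stand-in chosen for illustration, not the IRefactor algorithm (which splits recursively); the symbol and file names are hypothetical.

```python
from collections import defaultdict


def split_header(symbol_users):
    """Group the symbols of one header by their exact set of users.

    symbol_users: symbol name -> set of sources that use the symbol.
    Returns a list of (symbols, users) pairs, one per proposed header.
    """
    groups = defaultdict(list)
    for symbol, users in symbol_users.items():
        groups[frozenset(users)].append(symbol)
    return [(sorted(symbols), set(users)) for users, symbols in groups.items()]


def includes_after_split(parts):
    """For each source, the indices of the new headers it must include."""
    needed = defaultdict(list)
    for index, (_, users) in enumerate(parts):
        for source in users:
            needed[source].append(index)
    return dict(needed)


# Hypothetical monolithic header with three symbols.
symbol_users = {
    "open_log": {"a.c", "b.c"},
    "close_log": {"a.c", "b.c"},
    "MAX_USERS": {"c.c"},
}
parts = split_header(symbol_users)
needed = includes_after_split(parts)
```

In this toy case every source needs exactly one of the two new headers, so a change to `MAX_USERS` no longer forces `a.c` and `b.c` to be rebuilt. Sources that end up needing several of the new headers represent the refactoring cost.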

The IRefactor analysis tool

• suggests refactoring possibilities and shows gained build impact

Refactoring visualization

(figure legend)

• header color: refactoring cost (how many files must include both headers after refactoring), from 0 to ≥5

• symbols: 2 colors

• build impact (build impact of the header): from min to max

Best refactoring candidates:

• low refactoring cost

• high build impact parents

• low build impact children

(figure panels: refactoring cost, build impact)

Refactoring visualization

(figure labels)

• header under analysis

• suggested decomposition levels

• color: refactoring cost (how many additional headers)

• color: refactoring benefit (% reduction in build impact)

• decomposition details: how to split symbols in smaller headers and how to #include these headers

Refactoring visualization

Example of bad candidate for header refactoring

(figure labels: refactoring cost, refactoring gain)

As we gain benefits, we also increase costs

Example 2: Post-Mortem Assessment

Situation

• client: established embedded software producer

• product: 8 years evolution (2002-2008)

– 3.5 MLOC of code in C166 dialect (1881 files)

– 1 MLOC of headers (2454 files)

– 15 releases

– 3 teams = ~60 people (2 x EU, 1 x India)

• product failed to meet requests, at end...

Questions

• what happened right/wrong?

• how to prevent such errors in the future?

A. Telea, L. Voinea, Case Study: Visual Analytics in Software Product Assessments, IEEE Vissoft, 2009


Our context

Constraints

• data: source code repository only

• time: answers needed in max. 1 week

• we were unfamiliar with the application

Questions

• how to get the most insight & best address questions with these constraints?

• remainder of this talk: description of our ‘visual analytics’ approach

Methodology

Pipeline: raw data → data enrichment → enriched data → visualization

• static analysis: call graphs, dependency graphs, static metrics, code duplication

• repository evolution analysis: modification requests (MRs), authors, changes / type document

• visualization: charts, treemaps, graphs, timelines

• loop with stakeholders: ask, interpret, refine

• perfect instance of a visual analytics process:

• multiple data types, multiple tools

• tight combination of data extraction, processing, visualization

• incremental hypothesis refinement

• results: present observations & questions, then final conclusions

Requirements: MR Duration

(figure: files over time, with MR related check-ins marked; label: R1.3 - start)

• little increase in the file curve – most activity in old files

• suggests too long maintenance & closure of requirements

Requirements: MR Duration

(figure: # commits referring to MRs within a given id range, over time; horizontal axis: MR ids, 1 bar = 100 MRs)

• in mid 2008, activity related to MRs from 2006 still takes place
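The data behind such a chart can be sketched as a simple count of MR references per id range and per year. This is only an illustration: the `MR-1234` style of referencing MRs in commit messages, the regular expression and the sample messages are assumptions, since the actual repository convention is not described here.

```python
import re
from collections import Counter

# Hypothetical convention: commit messages mention MRs as MR-1234, MR1234 or MR#1234.
MR_PATTERN = re.compile(r"MR[-#]?(\d+)")


def mr_activity(commits, bar_size=100):
    """Count MR references per (year, id range).

    commits: iterable of (year, commit_message) pairs.
    Each key is (year, first MR id of the bar); one bar covers bar_size ids.
    """
    activity = Counter()
    for year, message in commits:
        for mr_id in MR_PATTERN.findall(message):
            bar = (int(mr_id) // bar_size) * bar_size
            activity[(year, bar)] += 1
    return activity


# Invented sample data.
commits = [
    (2006, "MR-1201 initial implementation"),
    (2008, "fix MR-1234 timeout"),
    (2008, "MR1250 and MR#1301 follow-up"),
]
activity = mr_activity(commits)
```

Late years with non-zero counts for low id ranges correspond to the observation above: old MRs that are still being worked on.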

Team: Code Ownership

(figure: system hierarchy at package / module / file level, colored by #developers, #modification requests (MRs) and MR closure (days); color scale ranges shown: 1 to >8, 1 to >90, 1 to >30; areas labeled team A, team B, team C)

• large part of software affected by long open-standing MRs

• most of these are assigned to team A (largest team)…

• …and this team was reported to have communication problems!

Code: Dependencies

(figure legend: package, module, file; edge ends marked ‘uses’ and ‘is used’, where a use = call, type, variable, macro, …; label: iface)

Most dependencies occur via the iface, basicfunctions and platform packages

Filter out these allowed dependencies…

…to discover unwanted dependencies

These are accesses that bypass established interfaces
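The filtering step can be sketched as follows. It is an illustration under one assumption stated loudly: a dependency counts as allowed when its target lies in one of the three interface packages named above. The other package names are hypothetical.

```python
# Packages through which dependencies are expected to pass (from the slide).
ALLOWED_TARGETS = {"iface", "basicfunctions", "platform"}


def unwanted_dependencies(dependencies, allowed_targets=ALLOWED_TARGETS):
    """Keep only cross-package dependencies that bypass the allowed packages.

    dependencies: iterable of (from_package, to_package) pairs.
    Assumption: a dependency is allowed if its target is an allowed package.
    """
    return [
        (source, target)
        for source, target in dependencies
        if source != target and target not in allowed_targets
    ]


# Invented sample data; "engine" and "ui" are hypothetical package names.
dependencies = [
    ("ui", "iface"),
    ("engine", "platform"),
    ("ui", "engine"),      # bypasses the interface packages
    ("engine", "engine"),  # internal, ignored
]
suspicious = unwanted_dependencies(dependencies)
```

Only the remaining edges need to be drawn, which is what turns a cluttered dependency picture into one where the bypassing accesses stand out.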

Code: Call graph

High coupling at package level

This image does not tell us very much

Select only modules which are mutually call dependent…

…to discover layering violations

Not a strict layering in the system (as it should be)
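Selecting mutually call-dependent modules can be sketched as a search for pairs of modules that call each other. This minimal version only detects direct two-way calls; longer cycles would need a strongly connected components computation. Module names are hypothetical.

```python
def mutual_call_pairs(calls):
    """Return the module pairs that call each other directly.

    calls: iterable of (caller_module, callee_module) pairs.
    In a strictly layered system this list should be empty.
    """
    edges = {(a, b) for a, b in calls if a != b}
    pairs = {tuple(sorted((a, b))) for a, b in edges if (b, a) in edges}
    return sorted(pairs)


# Invented sample data.
calls = [
    ("ui", "engine"),
    ("engine", "ui"),       # call back up: layering violation
    ("engine", "drivers"),
]
violations = mutual_call_pairs(calls)
```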

Code: Quality Metrics

Moderate code + dependency growth

• does not explain product’s problems

Average complexity/function > 20

Total complexity: up 20% in R1.3

• testing can be hard!

• possible cause of product’s problems

Code: Duplication

External duplication

• links: modules that contain similar code blocks of >25 LOC

Internal duplication

• color: # duplicated blocks within a file

Little external/internal duplication

Arguably not a problem for testing

(color legend: # duplicated blocks, from 1 to 60)

Documentation

• 30% of files are documentation (854 doc + html, 1688 other files)

• updated regularly

• grow in sync with rest of code base

(figure: file counts over time, label: delay; docs sorted on activity, over time)

• 40% of docs frequently updated

• rest seem to be ‘stale’

Code is arguably well documented…

…so refactoring is likely to be doable

Start from up-to-date docs

Example 3: Database visual analysis

Situation

• client: top-3 Swiss bank

• product: 8 years evolution (2004-2012)

– Oracle/SQL/MS Access database solution

– ~5000 tables, 60000 fields

– mix of TS-SQL, Visual Basic, MS Access macros

– code needed 24-hour uptime

• product was unmaintainable, at end...

Questions

• how can we understand the business logic?

• how can we refactor the database design for better maintenance?

Stakeholders

(figure: cycle with steps 1 to 4 between three stakeholder groups and their artifacts)

• Technical personnel: new report implementations (how to efficiently communicate changes to business layer?)

• Business experts: new report implementations (how to efficiently validate new reports?)

• Business logic (BL) specifications: how to efficiently translate BL into technical details?

• Final stakeholders: reporting requests (business-level specifications, tight deadlines)

• Artifacts: source code (Access, SQL, Toad, Oracle, VB, …); business logic (rules, conditions, limits, …); business reports (the final facts & figures)

Visual Analytics Solution

Access Analyzer (SolidAA)

• visual end-to-end data flow analysis across entire reporting platform

• targeted question: “Where does this (report) data come from?”

• targeted users: business & technical

• fully handles any MS Access / SQL database

• technical details: full parsing of MS Access, SQL, symbolic Visual Basic interpretation (!)

Benefits

• reverse engineering cost: days

• learning cost: days

• development cost: few months

• client estimated savings: ~500 KEUR

More details on SolidAA: www.solidsourceit.com

Conclusions

Software Visual Analytics

• Effective and efficient for answering concrete problems in the IT industry

– program comprehension

– reverse engineering

– software maintenance and evolution

– software quality assessment

• Many techniques

– program analysis

– data visualization

– lots of system integration and tool building effort

• Challenging but worthwhile

– break the old patterns

– learn new techniques

– big cost savings: years / hundreds of KEUR

Thank you for your interest!

Alex Telea

a.c.telea@rug.nl

www.cs.rug.nl/svcg

Tool References

| Type of data / problem | Tool |
|---|---|
| Source code / bugs | SeeSoft |
| Source code / C++ syntax, queries | CSV (see VCN) |
| Source code / testing, bugs | Tarantula, Gammatella |
| Package & library interfaces | DreamCode |
| Code evolution / file level | SolidSTA |
| Code evolution / syntax level | CodeFlows |
| Generic graphs & software architectures (1) | SoftVision (see VCN), ArgoUML |
| Software architectures (2) | Rigi, aiSee/aiCall/VCG, Creole |
| Generic graphs and trees | GraphViz, Tulip |
| Compound digraphs | SolidSX |
| UML diagrams | SugiBib |
| UML diagrams and metrics | MetricView |
| Dynamic memory allocation logs | MemoView |
| Program traces and structure | ExtraVis |
| Software behavior (execution) | Jinsight, Jumpstart |
| Code clones | SolidSDD |
| Software structure and metrics in 3D | CodeCity |

Tool References

| Tool | URL |
|---|---|
| CSV (see VCN) | www.cs.rug.nl/svcg/SoftVis |
| Tarantula | www.cc.gatech.edu/aristotle/Tools/tarantula |
| DreamCode, CodeFlows | www.cs.rug.nl/svcg/SoftVis |
| SolidSTA, SolidSX | www.solidsourceit.com |
| SoftVision (see VCN) | www.cs.rug.nl/SolidSX |
| Rigi | www.rigi.csc.uvic.ca |
| Creole | www.thechiselgroup.org |
| GraphViz | www.graphviz.org |
| Tulip | www.labri.fr/tulip |
| ArgoUML | www.argouml.org |
| SugiBib | www.sugibib.de |
| ExtraVis | www.win.tue.nl/~dholten/extravis |
| MetricView, MemoView | www.cs.rug.nl/SoftVis |
| aiSee/aiCall/VCG | rw4.cs.uni-sb.de/~sander |
| Code clones | www.solidsourceit.com |
| Treemaps | www.cs.umd.edu/hcil/treemap-history |