Facilitating Group Development of Program Rubrics: A Guide for College Assessment Leaders

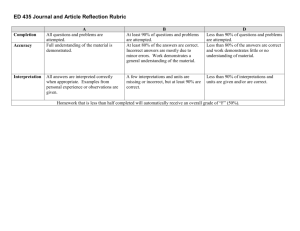

Ursula Waln, Director of Student Learning Assessment
Central New Mexico Community College

› Rubrics help reduce subjectivity in evaluations that are inherently subjective
 – Appropriate when:
   › Learning manifests in varying degrees of sophistication
   › Formative attainment goals can be described
   › Evaluation seeks to differentiate levels of performance
 – “Either/or” dichotomies (such as right or wrong, present or not, can do it or cannot) are more appropriately evaluated with tests or checklists

Program rubrics can:
› Clarify program goals
 – Promote a shared understanding of the desired student learning outcomes (SLOs)
 – Help faculty see connections between course and program learning outcomes
› Serve as norming devices
 – Identify benchmarking indicators of achievement
 – Describe progressive levels of competency development in ways that clearly guide ratings
› Help aggregate diverse classroom assessments
 – Provide the means to translate findings into a common program assessment language

Example program rubric
In written, oral, numeric, or visual formats, the student will:

Performance levels:
› Mastery (“Did it awesomely”): 90-100%, A, 3 points
› Proficient (“Did it”): 80-89%, B, 2 points
› Developing (“Kind of did it”): 70-79%, C, 1 point
› Non-attempt or Emerging (“Didn’t do it”): 0-69%, D-F, 0 points

Demonstrate organization and/or coherence of ideas, content, and/or formulas
 – Mastery: Material is sharply focused and organized. The student presents a logical organization of ideas around a common theme that demonstrates an advanced understanding of the subject matter.
 – Proficient: Material is mostly focused and organized. The student presents logical constructions around a common theme that reflect meaning and purpose.
 – Developing: The student’s ideas and organizational patterns reflect a common theme that demonstrates a basic understanding of the subject matter. Ideas may be disorganized or lack development in some places.
 – Non-attempt or Emerging: The material lacks focus and organization, with few or no ideas around a common theme. The student struggles to demonstrate her/his understanding of the subject matter.

Produce communication appropriate to audience, situation, venue, and/or context
 – Mastery: Demonstrates a thorough understanding of context, audience, and purpose that is responsive to the assigned task(s) and focuses on all elements of the work.
 – Proficient: Demonstrates adequate consideration of context, audience, and purpose and a clear focus on the assigned task(s) (e.g., the task aligns with audience, purpose, and context).
 – Developing: Demonstrates a basic awareness of context, audience, and purpose, and of the assigned task(s) (e.g., begins to show awareness of the audience’s perceptions and assumptions).
 – Non-attempt or Emerging: Struggles to demonstrate attention to context, audience, and purpose, and to the assigned task(s) (e.g., expects the instructor or self to be the audience).

› Course-level assessments
 – Connect to program rubrics through SLO alignment
 – Need to be based on course outcomes to be useful at the course level
 – May be formative or summative, direct or indirect, qualitative or quantitative
 – Can be unique
Varying assessment type and focus yields a broader body of evidence.
› Program-level assessments can vary too
 – Internal and/or external stakeholder input
   › Students, employers, faculty, community members
   › Surveys, focus groups, interviews
 – Standardized/licensing/certification exam results
 – Accreditor and/or consultant feedback
 – Practicum/internship evaluations
 – Peer/benchmark comparisons

› Start with well-written, measurable program outcome statements
 – Upon successful completion of this program, the student will be able to…
› Make any necessary revisions before developing rubrics
› Solicit faculty input and agreement through inclusive processes:
 1. Brainstorming
 2. Grouping
 3. Prioritizing
 4. Rubric Refinement

› Ask the faculty how students demonstrate learning related to the competency
 – Remind everyone that brainstorming is a process of generating ideas without censure
 – Get the ideas down in writing where all can see (e.g., using a flip chart, projection, or note cards)
 – Note themes that emerge as potential component criteria, but avoid pigeonholing ideas, as this can stifle and/or canalize the flow of ideas

› Group ideas to identify key criteria for assessing the competency
› Combine related ideas, with a focus on broad representation
› Rephrase ideas as needed to describe indicators of learning

› Reorder or number the indicators of learning based on progressive levels of sophistication/mastery
› Discuss options for performance-level headings and organization
 – Number of levels
 – Words that could be used as headings
 – Ascending or descending order of sophistication

› One person (or a small group) can now draft a rubric based on the group’s input
› Strive for clear, concise descriptions of observable demonstrations of learning
 – Broad enough to be applicable to multiple courses
 – Specific enough to provide unambiguous rating scales
› Ask the faculty for input
 – Performance-level descriptions need to be truly representative
 – All faculty need to be able to translate their course learning outcomes to the program rubric
› Revisit and revise as needed
 – After implementation, follow up with the faculty to assess how well the rubric worked

› Curriculum mapping
› Student Learning Outcome statement writing/refinement
› Facilitating rubric development
› Rubric drafting
› Course-level assessments
› Program-level assessments
› Data analysis
› Representing findings in graphics

Ursula Waln
uwaln@cnm.edu
505-224-4000 x 52943
LSA 101C
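The example rubric pairs each performance level with a percentage band, a letter grade, and a point value, which suggests one way a course-level score could be translated into the program rubric’s common language and then aggregated across students. The Python below is a minimal sketch of that translation, not a procedure taken from this guide. It assumes the course assessment is already aligned to the program SLO and reports a 0-100 percentage, and it treats each band’s lower bound as inclusive (so 89.5% counts as Proficient). The names `LEVELS`, `to_rubric_level`, and `summarize` are hypothetical.

```python
from collections import Counter

# Levels from the example rubric, highest first: (lower bound of the percentage
# band, level name, letter grade, points). Treating each lower bound as
# inclusive is an assumption for scores that fall between the listed bands.
LEVELS = [
    (90, "Mastery", "A", 3),
    (80, "Proficient", "B", 2),
    (70, "Developing", "C", 1),
    (0, "Non-attempt or Emerging", "D-F", 0),
]


def to_rubric_level(percent: float) -> tuple[str, int]:
    """Map a 0-100 course score (assumed input format) to a rubric level and its points."""
    if not 0 <= percent <= 100:
        raise ValueError(f"score out of range: {percent}")
    for lower_bound, level, _grade, points in LEVELS:
        if percent >= lower_bound:
            return level, points
    raise ValueError(f"no level for score: {percent}")  # unreachable: last bound is 0


def summarize(scores: list[float]) -> dict[str, float]:
    """Return the share of students at each rubric level, in the order of LEVELS."""
    if not scores:
        raise ValueError("no scores to summarize")
    counts = Counter(to_rubric_level(s)[0] for s in scores)
    return {level: counts[level] / len(scores) for _, level, _, _ in LEVELS}


if __name__ == "__main__":
    # Hypothetical scores from one SLO-aligned course assessment.
    print(summarize([95, 84, 72, 65, 88]))
    # {'Mastery': 0.2, 'Proficient': 0.4, 'Developing': 0.2, 'Non-attempt or Emerging': 0.2}
```

This sketch reports the share of students at each level rather than a mean point value, which keeps the findings in the rubric’s own performance-level terms; averaging the point values is an alternative if a single summary number is needed.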