1.2 Unconstrained Optimization


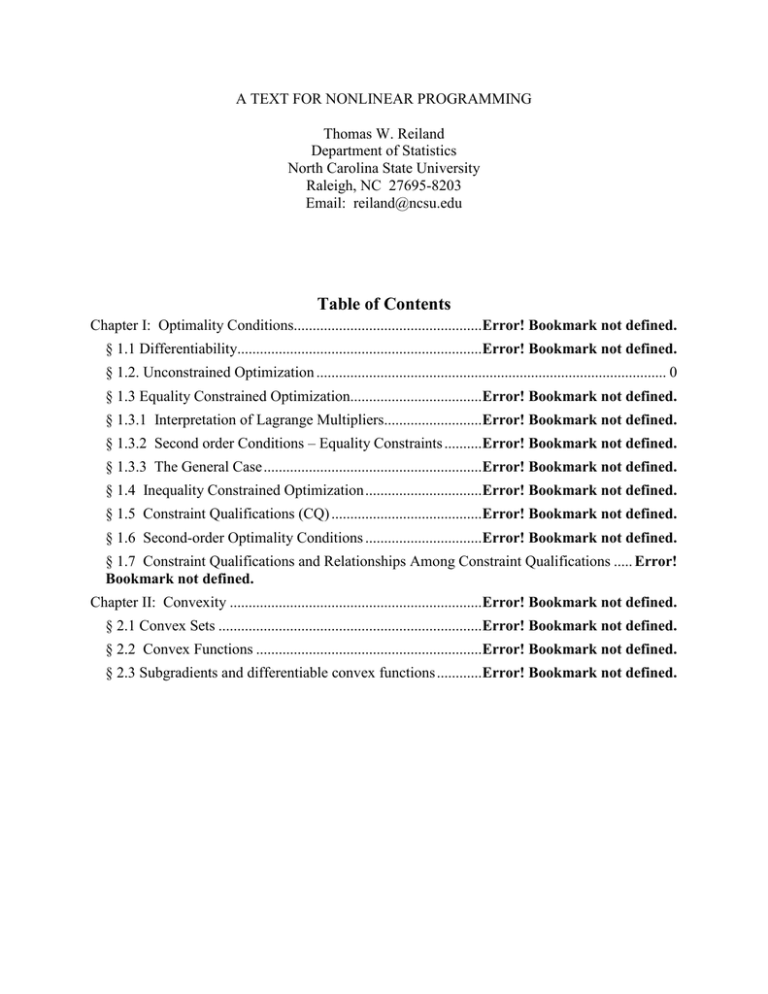

A TEXT FOR NONLINEAR PROGRAMMING

Thomas W. Reiland

Department of Statistics

North Carolina State University

Raleigh, NC 27695-8203

Email: reiland@ncsu.edu

Table of Contents

Chapter I: Optimality Conditions

§ 1.1 Differentiability

§ 1.2 Unconstrained Optimization

§ 1.3 Equality Constrained Optimization

§ 1.3.1 Interpretation of Lagrange Multipliers

§ 1.3.2 Second order Conditions – Equality Constraints

§ 1.3.3 The General Case

§ 1.4 Inequality Constrained Optimization

§ 1.5 Constraint Qualifications (CQ)

§ 1.6 Second-order Optimality Conditions

§ 1.7 Constraint Qualifications and Relationships Among Constraint Qualifications

Chapter II: Convexity

§ 2.1 Convex Sets

§ 2.2 Convex Functions

§ 2.3 Subgradients and differentiable convex functions


§ 1.2. Unconstrained Optimization

Consider the following problem:

Max $f(x)$, $x \in E^n$, where $f$ has continuous first-order partial derivatives (i.e., $f$ is differentiable).

Theorem 1.1 Suppose there is a direction $d \in E^n$ such that $\nabla f(x_0)^T d > 0$. Then there exists $\delta > 0$ such that for all $t$ with $0 < t < \delta$, $f(x_0 + td) > f(x_0)$.

Proof: By differentiability of $f$,

$$\nabla f(x_0)^T d = \lim_{t \to 0} \frac{f(x_0 + td) - f(x_0)}{t} > 0.$$

By the definition of a limit, there exists $\delta > 0$ such that for $0 < t < \delta$,

$$\frac{f(x_0 + td) - f(x_0)}{t} > 0$$

(eventually this quotient is positive). Hence for any $t$ with $0 < t < \delta$, $f(x_0 + td) - f(x_0) > 0$. QED.
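As a numerical illustration of Theorem 1.1, the following minimal Python sketch checks that a short step along a direction with positive directional derivative increases $f$. The objective `f`, the point `x0`, and the direction `d` are illustrative assumptions chosen for this sketch; they do not come from the text.

```python
def f(x):
    # Illustrative objective (an assumption): f(x1, x2) = -(x1 - 1)^2 - (x2 + 2)^2
    return -(x[0] - 1.0) ** 2 - (x[1] + 2.0) ** 2


def grad_f(x):
    # Gradient of the illustrative objective, computed by hand.
    return [-2.0 * (x[0] - 1.0), -2.0 * (x[1] + 2.0)]


def directional_derivative(grad, d):
    # Inner product grad^T d.
    return sum(g * di for g, di in zip(grad, d))


def improves_along(func, x0, d, t):
    # True when func(x0 + t d) > func(x0).
    x_new = [xi + t * di for xi, di in zip(x0, d)]
    return func(x_new) > func(x0)


x0 = [0.0, 0.0]
d = [1.0, -1.0]

# Hypothesis of Theorem 1.1: the directional derivative at x0 along d is positive.
slope = directional_derivative(grad_f(x0), d)

# Conclusion: every sufficiently small step t > 0 increases f.
steps = [10.0 ** (-k) for k in range(1, 6)]
results = [improves_along(f, x0, d, t) for t in steps]

print("directional derivative:", slope)
print("improvement for small t:", results)
print("improvement for t = 5:", improves_along(f, x0, d, 5.0))
```

For this particular choice, $f(x_0 + td) - f(x_0) = 6t - 2t^2$, so the increase holds exactly for $0 < t < 3$. The theorem itself only guarantees that some $\delta > 0$ exists; it says nothing about large steps.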

Corollary 1.1 If $x^*$ is a finite local maximum of $f(x)$, then

$$\nabla f(x^*) = 0. \tag{1}$$

Proof: Suppose $\nabla f(x^*) \neq 0$ and choose $d = \nabla f(x^*)$. Then $\nabla f(x^*)^T \nabla f(x^*) > 0$.

Theorem 1.1 implies that there exists $\delta > 0$ such that

$$f(x^* + td) > f(x^*) \quad \text{for } 0 < t < \delta.$$

This contradicts the fact that $x^*$ is a local maximum. QED.

Remark: Moreover, (1) holds if $x^*$ is any local optimum of $f(x)$. Condition (1) is a necessary condition that identifies candidate points; it is not sufficient to guarantee optimality. These candidates are often called critical (stationary) points and include local max/min points, global max/min points, and saddle points.

Example 1-3: $f(x) = x^3$, $\nabla f(x) = 3x^2$, and $3x^2 = 0 \Rightarrow x = 0$, but this is only an inflection point.
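The point of Example 1-3 can be checked numerically. The sketch below, which only samples a few radii (an assumption of this illustration, not a proof), confirms that $x = 0$ is stationary for $f(x) = x^3$ while $f$ takes larger values on one side and smaller values on the other.

```python
def f(x):
    return x ** 3


def df(x):
    # First derivative of x^3.
    return 3 * x ** 2


x_star = 0.0
is_stationary = df(x_star) == 0.0

# Sample points at shrinking distances from x_star (illustrative radii).
radii = [10.0 ** (-k) for k in range(1, 6)]
higher_on_right = all(f(x_star + r) > f(x_star) for r in radii)
lower_on_left = all(f(x_star - r) < f(x_star) for r in radii)

print("stationary:", is_stationary)
print("f larger just to the right:", higher_on_right)
print("f smaller just to the left:", lower_on_left)
```

Because both flags come out true at every sampled radius, $x = 0$ behaves as neither a local maximum nor a local minimum, which is consistent with condition (1) being necessary but not sufficient.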

Theorem 1.2 Let the function $f(x)$, $x \in E^n$, have continuous second-order partial derivatives. If

$$\nabla f(x^*) = 0 \tag{2}$$

and

$$z^T \nabla^2 f(x^*) z < 0, \quad \forall z \in E^n,\ z \neq 0 \quad (\nabla^2 f \text{ negative definite at } x^*), \tag{3}$$

then $x^*$ is a local maximum point of $f$. If the inequality is reversed in (3), then $x^*$ is a local minimum of $f$. Together, (2) and (3) are sufficient conditions.

Proof: Since $f$ is twice differentiable at $x^*$, for each $x \in E^n$

$$f(x) = f(x^*) + \nabla f(x^*)^T (x - x^*) + \frac{1}{2}(x - x^*)^T \nabla^2 f(x^*)(x - x^*) + \|x - x^*\|^2 \alpha(x^*; x), \tag{$*$}$$

where $\alpha(x^*; x) \to 0$ as $x \to x^*$.

Suppose $x^*$ is not a local maximum point. For $\frac{1}{k} > 0$, where $k$ is a positive integer, there exists a vector $x_k$ such that $\|x^* - x_k\| < \frac{1}{k}$ and $f(x_k) > f(x^*)$.

Consider the sequence $\{x_k\}$, $k = 1, 2, \ldots$. Since $\nabla f(x^*) = 0$ and $f(x_k) > f(x^*)$, and denoting

$$d_k = \frac{x_k - x^*}{\|x_k - x^*\|},$$

then ($*$) implies the following:

$$\frac{f(x_k) - f(x^*)}{\|x_k - x^*\|^2} = \frac{1}{2} \frac{(x_k - x^*)^T}{\|x_k - x^*\|} \nabla^2 f(x^*) \frac{(x_k - x^*)}{\|x_k - x^*\|} + \alpha(x^*; x_k). \tag{$**$}$$

Since $f(x_k) > f(x^*)$, this must be positive:

$$\frac{f(x_k) - f(x^*)}{\|x_k - x^*\|^2} = \frac{1}{2} d_k^T \nabla^2 f(x^*) d_k + \alpha(x^*; x_k) > 0. \tag{$***$}$$

But $\|d_k\| = 1$ for every $k$, and so there exists a convergent subsequence of $\{d_k\}$, say $\{d_k\}_{k \in K}$, that converges to $d$ where $\|d\| = 1$. This holds because all the $d_k$ lie in the unit ball in $E^n$, which is compact, and every sequence in a compact set $S$ has a convergent subsequence which converges to an element of $S$.

Since $\alpha(x^*; x_k) \to 0$ as $k \to \infty$, ($***$) implies the following:

$$\lim_{\substack{k \to \infty \\ k \in K}} \left[ \frac{1}{2} d_k^T \nabla^2 f(x^*) d_k + \alpha(x^*; x_k) \right] = \frac{1}{2} d^T \nabla^2 f(x^*) d \geq 0.$$

This contradicts (3), so $x^*$ must be a local maximum. QED.

Note: Using "$\leq 0$" in place of "$< 0$" in (3) is not adequate.

Example 1-4: $f(x) = x^3$

$\nabla f(x) = 3x^2$, $\nabla^2 f(x) = 6x$

At $x^* = 0$, $\nabla f(x^*) = 0$ and $z^T \nabla^2 f(x^*) z \leq 0$, but $x^*$ is not a local maximum.

Example 1-5: $\max f(x) = -x^4$

$\nabla f(x) = -4x^3 = 0 \Rightarrow x = 0$ is a candidate point.

$\nabla^2 f(x) = -12x^2$

$\nabla^2 f(0) = 0$

So $z^T \nabla^2 f(0) z = 0$ and the sufficient conditions from Theorem 1.2 are not satisfied (they are too strong in this case) even though 0 is a maximum point.

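Example 1-6: $\min f(x_1, x_2) = (2x_1 - x_2)^2 + (x_2 - 1)^2$

$$\nabla f(x) = \begin{bmatrix} 2(2x_1 - x_2)(2) \\ 2(2x_1 - x_2)(-1) + 2(x_2 - 1) \end{bmatrix} = \begin{bmatrix} 8x_1 - 4x_2 \\ -4x_1 + 4x_2 - 2 \end{bmatrix}, \qquad \nabla^2 f(x) = \begin{bmatrix} 8 & -4 \\ -4 & 4 \end{bmatrix}$$

Critical points:

$$\nabla f(x) = \begin{bmatrix} 8x_1 - 4x_2 \\ -4x_1 + 4x_2 - 2 \end{bmatrix} = 0 \;\Rightarrow\; x_1^* = \frac{1}{2},\ x_2^* = 1, \qquad f\left(\tfrac{1}{2}, 1\right) = 0$$

$$z^T \nabla^2 f(x^*) z = \begin{bmatrix} z_1 & z_2 \end{bmatrix} \begin{bmatrix} 8 & -4 \\ -4 & 4 \end{bmatrix} \begin{bmatrix} z_1 \\ z_2 \end{bmatrix} = 8z_1^2 + 4z_2^2 - 8z_1 z_2 > 0 \quad \forall z \neq 0,$$

since $8z_1^2 + 4z_2^2 - 8z_1 z_2 = 4z_1^2 + 4(z_1 - z_2)^2$.

Therefore, $x^*$ is a local (and global) minimum of $f$.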
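The computations in Example 1-6 can be reproduced with a short standard-library Python sketch, reading the objective as $f(x_1, x_2) = (2x_1 - x_2)^2 + (x_2 - 1)^2$. The helper names `solve_2x2` and `eigenvalues_sym_2x2` are hypothetical, introduced only for this illustration; the eigenvalue check is an equivalent way of testing positive definiteness and is not the method used in the text.

```python
import math


def f(x1, x2):
    return (2 * x1 - x2) ** 2 + (x2 - 1) ** 2


def grad(x1, x2):
    # Hand-derived gradient of f.
    return (8 * x1 - 4 * x2, -4 * x1 + 4 * x2 - 2)


# Constant Hessian of the quadratic objective.
H = [[8.0, -4.0], [-4.0, 4.0]]


def solve_2x2(A, b):
    # Cramer's rule for a 2x2 linear system A x = b.
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    x1 = (b[0] * A[1][1] - A[0][1] * b[1]) / det
    x2 = (A[0][0] * b[1] - b[0] * A[1][0]) / det
    return x1, x2


def eigenvalues_sym_2x2(A):
    # Closed-form eigenvalues of a symmetric 2x2 matrix.
    mean = (A[0][0] + A[1][1]) / 2.0
    radius = math.sqrt(((A[0][0] - A[1][1]) / 2.0) ** 2 + A[0][1] ** 2)
    return mean - radius, mean + radius


# grad f(x) = H x + c with c = (0, -2), so the critical point solves H x = (0, 2).
x_star = solve_2x2(H, [0.0, 2.0])
lam_min, lam_max = eigenvalues_sym_2x2(H)

print("critical point:", x_star)
print("gradient there:", grad(*x_star))
print("f at critical point:", f(*x_star))
print("Hessian eigenvalues:", lam_min, lam_max)
```

Both eigenvalues are positive, so the Hessian is positive definite and the reversed form of condition (3) holds at the critical point.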

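Example 1-7: $\min f(x_1, x_2) = (x_1 - x_2)^2$

$$\nabla f(x) = \begin{bmatrix} 2(x_1 - x_2) \\ -2(x_1 - x_2) \end{bmatrix} = 0 \;\Rightarrow\; x_1 = x_2$$

$$\nabla^2 f(x) = \begin{bmatrix} 2 & -2 \\ -2 & 2 \end{bmatrix}, \quad \text{so } z^T \nabla^2 f(x) z = 2z_1^2 - 4z_1 z_2 + 2z_2^2 \geq 0; \text{ this equals 0 at } z = \begin{bmatrix} 1 \\ 1 \end{bmatrix}.$$

So the sufficient conditions are not satisfied even though $x_1 = x_2$ provides the minimum.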
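A brief Python sketch makes the gap in Example 1-7 concrete, reading the objective as $f(x_1, x_2) = (x_1 - x_2)^2$. It evaluates the quadratic form of the Hessian along two directions and samples $f$ on a small grid; the grid and the helper `quadratic_form` are assumptions of this illustration.

```python
def f(x1, x2):
    return (x1 - x2) ** 2


# Constant Hessian of f.
H = [[2.0, -2.0], [-2.0, 2.0]]


def quadratic_form(A, z):
    # Computes z^T A z for a square matrix A given as nested lists.
    n = len(z)
    return sum(z[i] * A[i][j] * z[j] for i in range(n) for j in range(n))


q_null = quadratic_form(H, [1.0, 1.0])    # direction along the line x1 = x2
q_other = quadratic_form(H, [1.0, -1.0])  # direction across that line

# Sample f on an illustrative grid to confirm it never drops below zero.
grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
min_on_grid = min(f(a, b) for a in grid for b in grid)
zero_on_line = all(f(t, t) == 0.0 for t in grid)

print("z^T H z at z = (1, 1):", q_null)
print("z^T H z at z = (1, -1):", q_other)
print("min of f on grid:", min_on_grid, "| zero on x1 = x2:", zero_on_line)
```

The quadratic form vanishes along $z = (1, 1)$, so the Hessian is only positive semidefinite and Theorem 1.2 does not apply, yet every point with $x_1 = x_2$ attains the minimum value 0.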

Special Cases:

1) One Variable: Given $f: E^1 \to E^1$, $x^*$ is an unconstrained local maximum (minimum) of $f(x)$ if

$$\frac{\partial f(x^*)}{\partial x} = 0 \quad \text{and} \quad \frac{\partial^2 f(x^*)}{\partial x^2} < 0 \ (> 0).$$

Suppose $\nabla f(x^*) = 0$. Then, among the derivatives of order two and higher at $x^*$:

i. If the first nonzero derivative of $f$ is of odd degree, then $x^*$ is neither a local maximum nor a local minimum.

ii. If the first nonzero derivative of $f$ is positive and of even degree, then $x^*$ is a local minimum.

iii. If the first nonzero derivative of $f$ is negative and of even degree, then $x^*$ is a local maximum.

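2) Two Variables: Given $f: E^2 \to E^1$, $x^*$ is an unconstrained local maximum (minimum) of $f(x)$ if

$$\frac{\partial f(x^*)}{\partial x_1} = \frac{\partial f(x^*)}{\partial x_2} = 0, \qquad \frac{\partial^2 f(x^*)}{\partial x_1^2} < 0 \ (> 0), \qquad \text{and} \qquad \frac{\partial^2 f(x^*)}{\partial x_1^2} \frac{\partial^2 f(x^*)}{\partial x_2^2} - \left( \frac{\partial^2 f(x^*)}{\partial x_1 \partial x_2} \right)^2 > 0.$$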
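The higher-order derivative test in the one-variable case 1) can be sketched for polynomials using only the Python standard library. Representing a polynomial by its coefficient list, along with the function names and return strings below, are assumptions of this sketch rather than anything prescribed by the text.

```python
def poly_derivative(coeffs):
    # coeffs[i] is the coefficient of x**i; returns the derivative's coefficients.
    return [i * c for i, c in enumerate(coeffs)][1:]


def poly_eval(coeffs, x):
    # Horner evaluation; an empty coefficient list is the zero polynomial.
    result = 0.0
    for c in reversed(coeffs):
        result = result * x + c
    return result


def classify_stationary_point(coeffs, x_star, tol=1e-12):
    current = poly_derivative(coeffs)
    if abs(poly_eval(current, x_star)) > tol:
        return "not stationary"
    order = 1
    # Walk through derivatives of order 2, 3, ... until one is nonzero at x_star.
    while current:
        current = poly_derivative(current)
        order += 1
        value = poly_eval(current, x_star)
        if abs(value) > tol:
            if order % 2 == 1:
                return "neither"
            return "local minimum" if value > 0 else "local maximum"
    return "inconclusive"


# f(x) = x^3, f(x) = -x^4, and f(x) = x^2, all examined at x = 0.
cubic = classify_stationary_point([0, 0, 0, 1], 0.0)
neg_quartic = classify_stationary_point([0, 0, 0, 0, -1], 0.0)
parabola = classify_stationary_point([0, 0, 1], 0.0)

print(cubic, "|", neg_quartic, "|", parabola)
```

The three calls correspond to rules i, iii, and ii respectively: the first nonzero derivative of $x^3$ at 0 has order three, that of $-x^4$ has order four and is negative, and that of $x^2$ has order two and is positive.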

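Theorem: The symmetric matrix $S = (s_{ij})_{n \times n}$ is positive definite if and only if $s_{11} > 0$ and all leading principal minors are greater than zero, e.g.

$$\begin{vmatrix} s_{11} & s_{12} \\ s_{21} & s_{22} \end{vmatrix} > 0, \quad \ldots, \quad |S| > 0.$$

Theorem: The symmetric matrix $S = (s_{ij})_{n \times n}$ is negative definite if and only if $s_{11} < 0$ and

$$\begin{vmatrix} s_{11} & s_{12} \\ s_{21} & s_{22} \end{vmatrix} > 0, \quad \begin{vmatrix} s_{11} & s_{12} & s_{13} \\ s_{21} & s_{22} & s_{23} \\ s_{31} & s_{32} & s_{33} \end{vmatrix} < 0, \quad \ldots, \quad (-1)^n |S| > 0.$$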
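The two determinant tests above translate directly into code. The following is a minimal standard-library Python sketch for small symmetric matrices given as nested lists; the cofactor-expansion determinant is chosen for brevity (an assumption of this sketch) and would be too slow for large $n$.

```python
def det(M):
    # Determinant by cofactor expansion along the first row (fine for small n).
    n = len(M)
    if n == 1:
        return M[0][0]
    total = 0
    for j in range(n):
        minor = [row[:j] + row[j + 1:] for row in M[1:]]
        total += (-1) ** j * M[0][j] * det(minor)
    return total


def leading_principal_minors(S):
    # Determinants of the upper-left k-by-k blocks, k = 1, ..., n.
    return [det([row[:k] for row in S[:k]]) for k in range(1, len(S) + 1)]


def is_positive_definite(S):
    return all(m > 0 for m in leading_principal_minors(S))


def is_negative_definite(S):
    # Signs must alternate: (-1)^k times the k-th leading minor is positive.
    minors = leading_principal_minors(S)
    return all((-1) ** k * m > 0 for k, m in enumerate(minors, start=1))


A = [[8, -4], [-4, 4]]                       # positive definite
B = [[-2, 1, 0], [1, -2, 1], [0, 1, -2]]     # negative definite
C = [[2, -2], [-2, 2]]                       # only semidefinite

print(leading_principal_minors(A), is_positive_definite(A))
print(leading_principal_minors(B), is_negative_definite(B))
print(leading_principal_minors(C), is_positive_definite(C), is_negative_definite(C))
```

Matrix `C` shows why the strict inequalities matter: its second leading minor is zero, so neither test passes even though its quadratic form is never negative.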

Theorem 1.3 (Second-Order Conditions) If $f$ is twice continuously differentiable and if $x^*$ is a local maximum of $f$, then

$$\nabla f(x^*) = 0 \tag{4}$$

and

$$z^T \nabla^2 f(x^*) z \leq 0 \quad \forall z. \tag{5}$$

If

$$\nabla f(x^*) = 0 \tag{6}$$

and

$$z^T \nabla^2 f(x) z \leq 0 \tag{7}$$

for every $x$ in some open ball $B_\delta(x^*)$ around $x^*$ and every $z$, then $x^*$ is a local maximum. If the inequalities in (5) and (7) are reversed, then the theorem applies to a local minimum. Conditions (4) and (5) constitute necessary conditions, while (6) and (7) describe sufficient conditions.

Theorem 1.4 Let $f$ be twice continuously differentiable. If

$$\nabla f(x^*) = 0 \tag{8}$$

and

$$z^T \nabla^2 f(x) z < 0, \quad \forall z,\ z \neq 0,\ \forall x \neq x^* \text{ in an open ball around } x^*, \tag{9}$$

then $f$ has a strict local maximum at $x^*$. Reversing the inequality in (9) implies $x^*$ is a strict local minimum of $f$.

Example 1-8: $f(x) = x^{2p}$, where $p$ is some positive integer.

$\nabla f(x) = 2p x^{2p-1} = 0$ at $x = 0$, satisfying the necessary conditions in Corollary 1.1.

$\nabla^2 f(x) = 2p(2p-1) x^{2(p-1)}$

For $p = 1$: $\nabla^2 f(x) = 2x^0 = 2 > 0$ at $x = 0$, so the sufficient conditions from Theorem 1.2 for a local minimum are met.

For $p > 1$: $\nabla^2 f(x) = 0$ at $x = 0$, so the sufficient conditions in Theorem 1.2 for a local minimum at $x = 0$ are not satisfied, yet $f$ has a minimum at $x = 0$. Using Theorem 1.3 with the inequalities reversed, at $x^* = 0$ all conditions for a local minimum (both necessary and sufficient) are satisfied.

Necessary Conditions:

$\nabla f(x^*) = 2p(x^*)^{2p-1} = 0$ at $x^* = 0$ satisfies (4).

$\nabla^2 f(x^*) = 2p(2p-1)(x^*)^{2(p-1)} = 0$ at $x^* = 0$, so $z^T \nabla^2 f(0) z = 0 \geq 0 \ \forall z$ satisfies (5).

Sufficient Conditions:

$\nabla f(x^*) = 0$ at $x^* = 0$ satisfies (6).

$\nabla^2 f(x) = 2p(2p-1)(x)^{2(p-1)} \geq 0$, so $z^T \nabla^2 f(x) z \geq 0$ in any neighborhood of $x^* = 0$, which satisfies (7).

In fact, by Theorem 1.4, $x^* = 0$ is a strict local minimum since $z^T \nabla^2 f(x) z > 0 \ \forall x \neq 0$ and $z \neq 0$.
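The case analysis in Example 1-8 can be tabulated with a small Python sketch for $f(x) = x^{2p}$. The sample points in `neighborhood` and the dictionary keys are assumptions of this illustration; checking finitely many points only suggests, and does not prove, that conditions (7) and (9) hold on a whole ball.

```python
def first_derivative(x, p):
    # d/dx of x^(2p)
    return 2 * p * x ** (2 * p - 1)


def second_derivative(x, p):
    # d^2/dx^2 of x^(2p); note 0.0 ** 0 evaluates to 1.0 in Python.
    return 2 * p * (2 * p - 1) * x ** (2 * (p - 1))


x_star = 0.0
neighborhood = [-0.5, -0.1, -0.01, 0.01, 0.1, 0.5]  # illustrative sample points

report = {}
for p in (1, 2, 3):
    report[p] = {
        # Corollary 1.1: gradient vanishes at x_star.
        "stationary": first_derivative(x_star, p) == 0.0,
        # Theorem 1.2 (minimum form): second derivative strictly positive at x_star.
        "theorem_1_2": second_derivative(x_star, p) > 0.0,
        # Theorem 1.3 (minimum form): second derivative nonnegative near x_star.
        "theorem_1_3": all(second_derivative(x, p) >= 0.0
                           for x in [x_star] + neighborhood),
        # Theorem 1.4 (minimum form): strictly positive away from x_star.
        "theorem_1_4": all(second_derivative(x, p) > 0.0 for x in neighborhood),
    }

for p, row in report.items():
    print(p, row)
```

For $p = 1$ every test passes, while for $p > 1$ only the pointwise test of Theorem 1.2 fails, matching the discussion in the example.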