Pairwise Support Vector Machines and their Application to Large Scale Problems

advertisement

J ou rnal of Machine L ea rning Rese arch 1 3 (201 2) 2 279-22 92

S u bmit ted 8/11; Re vised 3/12; P u bli sh ed 8 / 1 2

P airwise S up port Vec t or Mac hin e s and th e i r A pp lication to Lar ge

Scale Pr oblems

C ar l Brun ner

A ndr eas F isch e r

C. BRUNNER@ GMX. NET

ANDREAS. FISCHER@TU- DRESDEN. DE

I ns tit u t e for Numeri cal Mathematic s

Te c hn i sc he Un i¨ ve r s it a t Dr e sden

01062 Dr es d e n , Ger ma ny

Klau s L uig

Th orst en T hies

LUIG@ COGNITEC. COM

THIES@COGNITEC. COM

Co gn i te c Sys te m s Gmb H

Gr os senha i n er Str. 10 1

01127 Dr es d e n , Ger ma ny

Editor: C ori n na C ort es

Abst r a ct

)be

P a irwi se cl as si fi c ati o n i s the t as k t o pre d ic t whet ha,eb rotfhaepea(xiraa, bmp

l es

lon g to the

sa m e cla ss o r t o d i f f er en tIncla

p ar

ss tiescular

. , inter cl as s g e n e ral iz at io n prob l ems c an b e t re ate d

in this w a yIn

. pai rwis e cl as si fi c ati o n, th e order of the tw o inp ut e xample s s h ou l d no t af fe ct the

cla ss ifica ti o n re sult . T o ac h i e v e t h i s, p a rti cula r k er n el s as w e ll as the use o f s ymmetr ic tr aining s

in th e fr ame w ork o f sup po r t v ec tor ma ch i n e s were sug ges te d . The paper d i sc u s se s bo t h a p proa

in a gen e ra l w ay a n d e st abli shes a s tr o ng con nect ion bet

In aween

d dit them.

ion , an e f fici ent imp l ementa ti o n i s dis cuss ed wh i ch a llo ws the t ra ining of s e v er al mil li o ns of pai rs . Th e v alue o f thes e

co ntr ib u t ion s is con firme d by e x c el lent res ults o n the la b e le d f ac es i n t h e w i ld b e n c h mar k .

K e y w ords:p a ir w i se sup po r t v ect o r mac h i n e s, i n t erc la ss g ener al iza ti o n, p a ir w i se k e rnels , la

sca le p r o blems

1. Intr o duction

T o e xtend binar y classifier s to mu lticla ss classification se v e ral modifica tio ns ha v e bee n suggested,

for e xample the one ag ainst all technique, the o ne a g ainst one te c hn iq ue, or directed ac yclic gra ph s

see Duan and K eer thi (2005), H ill and D oucet ( 2007 ) , H su and L in (2002), and R ifkin and K lauta u

(2004) for furth er information , dis cussio ns, and c ompariso ns. A more rece nt appr oach used in the

field o f multic la ss and binar y classification is pairw ise classification ( A berne th y et al., 2009; Bar H ille l et al., 2004a,b; Bar -H ille l and W einsha ll, 2007; Ben-H ur and Nob le, 2005; P hillips, 1999;

Vert et al., 200 7)P. air wis e classification relies on tw o in pu t e xamples in ste ad of one and predicts

w he ther th e tw o input e xamples belong to the same c la ss or to dif fer ent cla sses. This is of particular

adv a n tage if only a s u bse t of cla ss es is kno w n f or trainin g . F or la ter use, a support v ecto r machin

(SV M) th at is a b le to handle pairw is e classification tasks is called pairw is e SV M.

A natu ral requireme nt for a p a ir wis e cla s sifier is that the or der of the tw o in p ut e xample s should

not in fl uenc e the cla ss ificatio n result ( symme

A c try).

ommo n approac h to e nforce this symme try

is the use of selecte d k ernels.

F or pairw ise SVMs, another approach w a s suggested.

Bar -H ille l

c 2012Carl B run ner , Andre as F isch er , Klau s L uig and T ho rst e n Thies.

BRUNNER, F ISCHER,L UIGANDT HIES

et a l. (2004a) propose th e use of trainin g sets with a symme tric str uc

W ture.

e w ill dis c uss both

approac h e s to obtain symmetry in a genera Bl wa ased

y . on this, we will pro vide conditions w hen

these a p pr oaches le a d to th e same classifier

Mor eo v. er , w e sho w empiric ally that th e approach of

using sele cted k e rnels is thre e to four times f aster in trainin g.

A typical pairwis e c la ssifica tio n task ar is es in f ace r ecognition.

Th e re, one is of te n in te re ste d

in the interclass generalization,

w her e none of the persons in the trainin g set is part of the test

set. W e w ill demonstrate th at tr a ining sets with ma n y classes (per sons) are nee d e d to obtain a good

perf orma nce in th e interclass gene ralization. Th e training o n such sets is computatio nally e x pe n s i v e

Therefor e, we d iscuss an ef ficie nt imple menta tion of pair wisTh

e SisVMs.

enable s th e tr ain ing of

pairw ise SV Ms w ith se v er al millio ns of pairs. In this w a y , f o r th e labele d f aces in the wild data base

a performance is a chie v ed whic h is super ior to the current state o f th e ar t.

This pape r is structur ed as follo Iws.

n S e ctio n 2 we gi v e a short intr oduction to pairw ise classification a nd dis c u ss th e symmetry of decision function s obtained by pair wis e SV Ms. A f te rw ards,

in Section 3.1 , we analyz e th e symme try of decision functions f rom pairw ise S VMs that rely on

symmetr ic trainin g sets

T .he ne w connection between th e tw o approa ches for obta in ing symmetry is establis hed in Section 3.2.

T he ef fi c ie nt imp leme ntatio n of pairw ise SV Ms is dis cussed in

Section 4. F in ally , we pr o vide performance me asure me nts in S ec tio n 5.

The ma in c o ntrib utio n of the pa p e r is th at w e sho w the e qu i v ale nce of tw o approac h e s for ob

ing a symme tric classifier f rom pair wis e SV Ms and demonstrate the ef ficie nc y and good interclass

genera liz a tio n pe rformance o f pairw ise SVMs on la r ge scale problems .

2. P a i rwise Classificat i o n

Let X be an ar bitrary set and let

m training e xamples

xi ∈ X w ith i ∈ M ≔ { 1,..., m} be gi v e n.

The cla ss of a tr ain ing e xamp le migh t be un kno w n, b ut we d e ma nd that we kno w f or each pair

( xi , x j ) of training e xamples whether its e xamp le s belo ng to the sa me class or to dif fer ent c la sses.

( xi , x j ) belong to the same c la ss and

Accordingly , we d e fiyne

i j ≔ + 1 if th e e xamples o f th e pair

o

(

,

call it a positive pair. O th erwis e, we yset

.

i j ≔ 1 and c allxi x j ) a ne gative pair

I n pair wis e cla ssific atio n th e aim is to decide whether the e x a mple( sa,of

b) a∈ pair

X× X

belong to the same class or not.In this paper , w e w ill mak e use of pairw ise decis ion functions

f : X × X → . Such a function pre dic ts whether the e xamples

a, b of a pair ( a, b) belo ng to the

same cla ssf ( a, b) > 0) or no t (f ( a, b) < 0). Note that neithera, b need to be long to the set of

training e xamples nor the cla s ses

a, b of

need to belong to th e classes of th e trainin g e xamp le s.

× X → . Let H de n ote an ar bitrary re al

A commo n to ol in machine learning a re k ker: X

nels

h ,∙∙uc

i. Ft orφ : X → H ,

Hilb er t spac e with scalar pr od

R

R

k( s, t ) ≔ hφ( s) , φ(t ) i

defines astandar dk ernel.

I n pairw ise cla ssifica tio n one oftenpair

usewis

s ek e rnels

K : ( X × X ) × ( X × X ) → . I n this

paper w e assume that an y pairw ise k er nel is symme tric , th at is , it holds that

R

K (( a, b) , ( c, d) ) = K (( c, d) , ( a, b))

for all a, b, c, d ∈ X , and that it is positi v e semid efinite ( S ch ¨olk opf a nd S mo la , 2001). F or in sta nce ,

KD (( a, b) , ( c, d)) ≔ k( a, c) + k( b, d) ,

KT (( a, b) , ( c, d)) ≔ k( a, c) ∙ k( b, d)

2280

( 1)

( 2)

P AIRWISES V M SANDL ARGES CALEP ROBLEMS

are symmetric and positi v e semidefi nite.

W e callKD dir ect sum pair w ise k e and

rn e Kl T tensor

(cf . S c h ¨olk opf and S mo la , 2001).

pair wis e k ernel

A natura l and desirable property of an y pairw ise decision functio n is that it should be symmetr ic

in the follo w in g sense

f ( a, b) = f ( b, a)

f or alla, b ∈ X.

⊆ M × M is gi v en. Then, th e pairwis e decis ion function

No w , le t us assume Ithat

f obtained by a

pairw ise SV M can b e written a s

f ( a, b) ≔

∑

α i j yi j K (( xi , x j ) , ( a, b)) + γ

( 3)

( i , j ) ∈I

with bias γ ∈ and α i j ≥ 0 for all ( i , j ) ∈ I . Ob viously , ifKD (1) or KT (2) are used, th en the

decision f u nc tio n is n ot symmetr ic in gener al. T his mo ti v ates us toKcall

balanced

a k e rnel

if

R

K (( a, b) , ( c, d)) = K (( a, b) , ( d, c))

f o r a all, b, c, d ∈ X

holds. T hus, if a balanced k e rnel is used, then (3) is a l w ays a symmetr ic decision F

f uornc tio n.

instance, the follo wing k er nels are bala nc ed

1

KDL (( a, b) , ( c, d) ) ≔ ( k( a, c) + k( a, d) + k( b, c) + k( b, d) ) ,

2

1

KT L(( a, b) , ( c, d) ) ≔ ( k( a, c) k( b, d) + k( a, d) k( b, c)) ,

2

1

2

KM L(( a, b) , ( c, d) ) ≔ ( k( a, c) o k( a, d) o k( b, c) + k( b, d) ) ,

4

KT M(( a, b) , ( c, d) ) ≔ KT L(( a, b) , ( c, d)) + KM L(( a, b) , ( c, d) ) .

( 4)

( 5)

( 6)

( 7)

Vert et al. (2007) callKM L m etric le arning pair wis e k erne

and Kl T L tensor learning pair wis e k er ), learning

nel. Simila rly , we call

KDL , whic h w as intr oduce d in Bar -H ille l e t al. (2004

dir ectasum

and KT M te nsor metr ic le arning pa irw ise k. eF rnel

or re presenting some balanced

pair wis e k ernel

k er n e ls by proje c tio ns see B runner et al. ( 2 011).

3. Symmetric P airwise Decis io n Func t i o ns a nd P a i rwise SVMs

P airw ise SVMs lead to dec is ion function s of the forAs

m de

(3).ta iled a b o v e, if a balan c ed k er nel

is used w ithin a pairw is e SVM, one al w ays ob tains a symme tric de cis ionFfunction.

or pairw ise

SV Ms whic h use

KD (1) as pair wis e k e rnel, it has bee n claimed tha t an y symme tric set of tr a ining

pairs le ads to a symmetr ic decision function ( see B a r -Hillel e t al., 2004a). W e c all a set of tr a ining

pairs symmetr ic, if f or an y tr a ining (pair

a, b) th e pair( b, a) also belongs to the trainin g set.

In

Section 3.1 w e pr o v e the cla im of B a r - H illel et al. (2004a) in a more g e neral c o nte xt whic h inc lude

KT (2). A dditionally , w e sho w in Section 3.2 that under some cond itions a s y mmetric tr a ining

γ. bias term

set leads to the same decision function as balanced k ernels if w e dis r e g ard the SV M

Interestingly , th e applic a tio n of balan c ed k ernels le ads to significa ntly shorter training times (s ee

Section 4.2) .

2281

BRUNNER, F ISCHER,L UIGANDT HIES

3.1 Symmet ric T raining Sets

In th is subsec tio n we sho w tha t the sy mmetry of a pair wis e decis io n function is inde ed achie v ed by

me a ns of symmetr ic trainin g sets.

T o this end, letI ⊆ M × M be a sy mmetric inde x set, in oth er

w ords if( i , j ) belongs toI the n( j , i ) also belongs toI . Further more, we w ill mak e use of pairw ise

k er n eKlswith

K (( a, b) , ( c, d)) = K (( b, a) , ( d, c)) f or alla, b, c, d ∈ X.

( 8)

As an y pairw ise k ernel is ass u med to be symmetric , ( 8) holds f or an y balanced pairw ise k ernel. Note

that th ere are o th er pair wis e k er nels that satisfy (8), for instance for the k ernels gi v en in Equations 1

and 2.

F orIR, I N ⊆ I defined byIR ≔ { ( i , j ) ∈ I |i = j } a ndIN ≔ I \ IR let us consider the dual pairw ise

SV M

min G( α )

α

0 ≤ α i j ≤ C for all ( i , j ) ∈ IN

0 ≤ α i i ≤ 2C for all ( i , i ) ∈ IR

∑ yi j αi j = 0.

s.t.

( 9)

(i , j ) ∈I

with

G( α ) ≔

1

αiα

j k lyi j yk lK (( xi , x j ) , ( xk, xl ) ) o

2 ( i, j )∑

,( k,l ) ∈ I

∑

α i j.

( i , j ) ∈I

Lemma 1 I f I is a symm etric inde x set and (if8)holds, then ther e is a solu tioαˆnof ( 9)w ith

αˆ i j = αˆ j i for a ll( i , j ) ∈ I .

∗

Pr oof B y the theore m of W eie rs tr ass ther e is a α

solution

o f ( 9 )L. e t u s define a n oth er feasible

point α˜ of ( 9 ) by

α˜ i j ≔ α ∗j i for all ( i , j ) ∈ I .

F or easier notatio n w eKset

i j,k l ≔ K (( xi , x j ) , ( xk, xl ) ) . Th e n,

2G( α˜ ) =

∑

α ∗j iα ∗l kyi j yk lKi j,k lo 2

( i , j ) ,( k,l ) ∈ I

∑

α ∗j i.

( i, j )∈I

N ote th at

yi j = y j i holds f or a (lli , j ) ∈ I . By (8) w e fur ther obta in

2G( α˜ ) =

∑

α ∗j iα ∗l ky j iyl kK j i,l ko 2

( i , j ) ,( k,l ) ∈ I

∑

α ∗j i = 2G( α ∗ ) .

(i , j ) ∈I

α˜ is als

The last equality ho ld s sinI is

cea symmetric tr a ining set. H ence

, o a solutio n of (9). S in ce

(9) is con v e x (cf. Sch ¨olk opf and Smola, 2 001) ,

α λ ≔ λα ∗ + ( 1 o λ ) α˜

∈ [y0, 1]. Thus,αˆ ≔ α 1/ 2 has the des ir ed property .

solv e s ( 9 ) f orλan

N ote that a result similar to Lemma 1 is presented by W ei et a l. (2006) for Suppo r t Vector Regressio n.The y , ho we v e r , cla im tha t an y solu tio n o f the corresponding quadratic progr am has the

descr ibed pr o pe rty .

2282

P AIRWISES V M SANDL ARGES CALEP ROBLEMS

α of the optimizatio n

Th eor e m If2 I is a symm etric inde x set and(8)

if holds , th en any solution

pr oble m

(9) lead s to a symm etric pair wis e decis io n function

: X × Xf → .

R

α of (9) le t us defi ne

Pr oof F or an y solution

gα : X × X →

gα ( a, b) ≔

∑

R

by

α i j yi j K (( xi , x j ) , ( a, b) ) .

( i , j ) ∈I

( a, bas

) = gα ( a, b) + γ f or some appropria te

Then, the obta in ed decis io n function can be w rfαitten

1

2

γ ∈ . I f α and α ar e so lu tio ns of (9) then

gα 1 = gα2 c an be d e ri v ed by me ans of c on v e x optiαˆ of (9) w ithαˆ i j = αˆ j i for all

mization theor yA. cc ording to Lemma 1 there is al w a ys a solution

( i , j ) ∈ I . Ob viously , such a solu tio n leads to a symmetric decision f ufαnc

tio n fα is a

ˆ . Hence,

α.

symmetr ic decision function for all solutions

R

3.2 Balanced K er nels vs. Sy m metric T raining S e ts

Section 2 sho w s th at on e c an use balan c ed k er nels to obta in a symme tric pairw ise decis io n functio

by me ans of a pair wis e SV M. As deta iled in S e ctio n 3.1 this c an also be achie v e d by symme tric tr ain ing se tsNo

. w , w e sho w in T heore m 3 tha t the d e cis ion function is the same , re g ardless

w he ther a symme tric training set or a certain bala nc ed k er nel is used. This re sult is also of pra ctical

v alu e, sinc e the a pp r oach w ith balanced k er n e ls leads to s ignificantly shorte r training times (see the

empiric a l r esults in Section 4.2).

SupposeJ is a lar gest subset of a gi v en symme tric inde

I satis

x set

f ying

(( i , j ) ∈ J ∧ j 6 i ) ⇒

=

No w , we c o nsid er the optimization proble m

( j , i) ∈

/ J.

min H ( β)

β

s.t.

0 ≤ βi j ≤ 2C for all ( i , j ) ∈ J

∑ yi jβi j = 0

( 10)

( i, j )∈J

with

H ( β) ≔

1

βi jβ k lyi j yk lKˆ i j,k lo

2 ( i , j ) ,∑

( k,l ) ∈ J

∑

βi j

( i, j )∈J

and

1

( 11)

Kˆ i j,k l ≔ =Ki j,k l+ K j i,k l_,

2

w he re

K is an arbitrar y pairw ise k e rnel. Ob viously

Kˆ is a ,bala nce d k ernel. F or instance,

K= K

if D

ˆ

ˆ

=

=

=

(1) the nK KDL (4) or if K KT ( 2) thenK KT L (5). The assumed symmetryKofyie ld s

Kˆ i j,k l = Kˆ i j,l k = Kˆ j i,k l = Kˆ j i,l k = Kˆk l,i j = Kˆ l k,i j = Kˆ k l, j i = Kˆ l k, j i.

( 12)

N ote th at ( 12) hold s not only f o r k e rnels gi v en by ( 11) b ut for an y balanced k e rnel.

2283

BRUNNER, F ISCHER,L UIGANDT HIES

Th eor e m Let

3 the fu nctio ns

α :gX × X →

gα ( a, b) ≔

hβ( a, b) ≔

and hβ : X × X →

R

R

b e defined by

∑

α i j yi j K (( xi , x j ) , ( a, b) ) ,

∑

βi j yi j Kˆ (( xi , x j ) , ( a, b)) ,

( i , j ) ∈I

( i , j ) ∈J

wh e r e I is a sym metr ic inde x set and J is defined asAdditionally

abo ve . , le t K fulfi(8)

ll an d ˆK be

∗

∗

α of ( 9)and for any solu tioβ nof ( 10)it holds that αg∗ = hβ∗ .

giv e n b(11)

y . Then, for any solution

Pr oof B y means of con v e x optimiz ation theory it can be deri vgαedis th

the

atsame functio n f or

α . nThe sa me hold s h

β. Hence,

an y s o lu tio

for

n

due to Lemma 1 w e can assume

β and an y s o lu tio

α ∗i j = α ∗j i. F orJR ≔ IR a ndJN ≔ J \ JR w e defin β¯e by

that α ∗ is a solution of (9) w ith

_ α ∗i j + α ∗j i if ( i , j ) ∈ JN ,

β¯i j ≔

α ∗ii

if ( i , j ) ∈ JR.

∗

α ∗iby

O b viouslyβ¯,is a f easible point of (10). Then, by (11) and

j = α j i we obtain f or

α ∗i j + α ∗j i

β¯i j

¯

ˆ

=Ki j,k l+ K j i,k l_

βi j Ki j,k l =

( Ki j,k l+ K j i,k l) =

2

2

= α ∗i j Ki j,k l+ α ∗j iK j i,k l,

β¯i i

β¯ii Kˆ ii ,k l =

( Kii ,k l+ Kii ,k l) = α ∗ii Kii ,k l.

2

( i , j ) ∈ JN :

( i , i ) ∈ JR :

( 13)

Then,yi j = y j i imp lies

hβ¯ = gα ∗ .

( 14)

I n a second ste p we p r o vβ¯eistha

a solutio

t

n of pr oble m ( 1 0) . B y kusing

l = yl k, th e symme try

¯

of K , (13) , (12), and th e definitionβ of

one obta in s

2G( α ∗ ) + 2

∑

α ∗i j

( i, j )∈I

=

∑

( i , j )∈I

=

∑

α ∗i j yi j

∑

( k,l ) ∈ JN

α ∗i j yi j

( i , j ) ∈ JN ∪JR

=

∑

= 2H ( β¯) + 2

∑

∑

β¯k lyk lKˆ i j,k l+

∑

∑

α ∗j iy j i

( i , j ) ∈ JN

β¯k lyk lKˆ i j,k l+

( k,l ) ∈ J

!

∗

yk kα k kKi j,k k

( k,k) ∈ JR

( k,l ) ∈ J

β¯i j yi j

( i , j ) ∈ JN

∑

∗

∗

yk l=α k lKi j,k l+ α l kKi j,l k_ +

∑

β¯ii yi i

( i ,i ) ∈ JR

∑

β¯k lyk lKˆ j i,k l

( k,l ) ∈ J

∑

β¯k lyk lKˆ i i,k l

( k,l ) ∈ J

β¯i j .

( i , j ) ∈J

Then, the definition ofβ¯ imp lies

∗

G( α ) = H ( β¯) .

2284

( 15)

P AIRWISES V M SANDL ARGES CALEP ROBLEMS

α¯ by

N o w , let us define

β∗i j / 2 if ( i , j ) ∈ JN ,

α¯i j ≔ β∗j i/ 2 if ( j , i ) ∈ JN ,

β∗ii

if ( i , j ) ∈ JR.

O b viouslyα¯,is a f easible poin t of (9) . Then, by ( 8 ) and (11) w e obta in for

β∗

( k, l ) ∈ JN : α¯k lKi j,k l+ α¯l kKi j,l k = k l( Ki j,k l+ Ki j,l k) = β∗k lKˆi j,k l,

2

β∗k k

( k, k) ∈ JR : α¯k kKi j,k k=

( Ki j,k k+ Ki j,k k) = β∗k kKˆ i j,k k.

2

This , (12), and

yk l = yl k yield

∗

2H ( β ) + 2

β∗i j

∑

( i , j ) ∈J

=

∑

1

β∗k lyk l =Kˆ i j,k l+ Kˆ j i,k l_ +

2

( k,l ) ∈ JN

β∗i j yi j

∑

( i , j ) ∈J

=

1

β∗i j yi j

2 (i ,∑

j )∈J

∑

!

1

β∗k kyk k =Kˆ i j,k k+ Kˆ j i,k k_

2

( k,k) ∈ JR

∑

!

α¯k lyk l=Ki j,k l+ K j i,k l_ .

( k,l ) ∈ I

Then, the definition ofα¯ pro vide βs∗i j = α¯i j + α¯ j i f or( i , j ) ∈ JN andα¯i j = α¯j i. Thus,

∗

2H ( β ) + 2

∑

( i , j ) ∈J

β∗i j =

∑

(i , j ) ∈I

α¯i j yi j

∑

( k,l ) ∈ I

!

α¯k lyk lKi j,k l = 2G( α¯) + 2

∑

α¯i j

(i , j )∈I

follo ws. This implie sG( α¯) = H ( β∗ ) . No w , let u s ass u meβ¯that

is not a solutio n of (10)Th

. e n,

H ( β∗ ) < H ( β¯) hold s and, by ( 15 ) , w e ha v e

∗

∗

G( α ) = H ( β¯) > H ( β ) = G( α¯) .

α ∗of

This is a c o ntradictio n to the optimality

. Hence,β¯ is a solution of (10) and

hβ∗ = hβ¯ follo w s.

Then, w ith (14) w e ha v e the desired r esult.

4. Impl ementa tion

O ne of the mo st widely used techniques f or solvin g S VMs ef ficie ntly is the sequential minimal

optimiz ation (SMO ) (Platt, 1999).

A w ell kno wn imp leme ntatio n of this te chnique is LIBS VM

(Chang and L in , 201 E

1)mpirically

.

, S MO sca le s quadratically with the number of tr ain ing p oin ts

(Pla tt, 1999).N ote th at in pairw ise cla ssifi catio n the train ing p oin ts are th e tr a ining

If all pair s.

possible tr ain ing pair s ar e used,

then the number of trainin g pairs gro w s quadratically w ith the

numberm of tr ain ing e xampleHence,

s.

th e runtime of L IBSV M w ould scale quar tic a lly

m. w ith

In Section 4.1 w e d iscuss ho w the costs for e v a luating pairwis e k er nels , whic h can be e xpr essed

by standard k ernels, can be drastically reduced.

In Section 3 w e dis cussed that one c an either use

balanced k er n e ls or symmetric tr ain ing sets to enf o r ce the symmetr y of a pairwis e de cis ion function.

Ad ditionally w

, e s h o w ed th at both approaches lead to th e same decis io n Section

functio n.4.2

compar es th e nee ded tr a ining times of the approach w ith balanced k er nels and the approac h w ith

symmetr ic trainin g sets.

2285

BRUNNER, F ISCHER,L UIGANDT HIES

4.1 Cach in g the Standard K er nel

In this su bse ctio n balanced k ernels ar e u se d to e nforce the symmetr y of th e pairw ise decis io n f unc

tion. K er nel e v aluations ar e cr ucia l for the perf orma nce of L I B SV M. If w e could c ache th e whole

k er n e l ma trix in R AM we w o uld get a huge incr ease of speed. T oday , this se ems imp ossib le for sig

,250 tr ain ing p a ir s as stor ing the (symmetr ic) k er nel ma trix for this nu mb er

nificantly mo re than 125

of pair s in d ouble precision ne eds approximate ly 59G

NoteB.th at tr ain ing sets with 500 tr a ining

e xamp le s alr e ady re su lt,250

in 125

tr a ining p a irNo

s. w , w e de scribe ho w the costs of k ernel e v aluations can be drastically reduceFd.or e xa mple , le t us se le ct theKkT eL (5)

rnelw ith an ar bitrary

stan da rd k ernel.

F or a sin gle e v aluation

KTofL the sta ndar d k er nel has to b e e v alu ate d four times

with v ector s of

X. A f te rw ards, four arithme tic oper atio ns are needed.

I t is ea sy to see that each sta ndar d k ernel v alue is used for e v alu atin g ma n y dif f erent ele ments

of the k ernel matr ixIn. general, it is possible to cache the sta ndard k erne l v alues for a ll tr a ining

e xamp le s. F or e xample , to c ache the sta ndar d k ernel,000

v alues

e xamples

for 1 0o ne nee d s 400MB.

Thu s , each k ernel e v aluation

KT L of

costs four ar ith metic o pe ration s only . T his d oe s n ot de p e nd on

the chosen s ta ndard k ernel.

T able 1 c ompares the trainin g times w ith and with out caching th e stand a rd k eFrnel

or v alues.

these measurements e xamples f rom the doub le inte r v al ta s k (c f. Section 5.1) ar e use d where each

class is r epresented by 5 e xa mple

KT L iss,chosen as pairwis e k er nel with a lin e ar stan da rd k ernel, a

cache size of 100MB is sele cted for ca ching pair wis e k e rnel v alues, and all possible pair s a re used

for tr ain ing. In T able 1a the trainin g set of each run c on

250of

e xample s of 50 cla sse s w ith

m =sists

dif fer ent dimensions

s

of dimension

n. T able 1b sho w s results for dif fere n t number

m of e xamples

n = 500. Th e spee d up f acto r by the describe d cac h in g te c h niq ue is up to 100.

D imension S tandard k e rnel

(time in mm:ss)

n of

e x a mple snot c ached cached

200

400

600

800

1000

2:08

4:31

6:24

9:41

11:27

N umber

Stand a rd k e rnel

(time in hh :mm)

m of

e xamples no t ca chedcached

0:07

0:07

0:07

0:08

0:09

200

400

600

800

1000

(a) Dif fe rent dime ns io

nsf e x a m p le s

no

0:04

1:05

4:17

12:40

28:43

0:00

0:01

0:02

0:06

0:13

(b ) Dif fere n t numbers

m of e xam p le s

T able 1: T ra ining time with and with out caching the sta ndard k erne l

4.2 Balanced K er nels vs. Sy m metric T raining S e ts

Theorem 3 sho ws that pa ir wis e SV Ms whic h use symme tric tr ain ing sets and pairw ise S VMs w ith

balanced k ernels lead to the same decis io n functio

F orn.symme tric trainin g s ets the numbe r of

training pairs is near ly double d compare d to the number in the case of balan c ed

S imultak ernels .

neously , (11) sho ws tha t e v alu atin g a balanced k er nel is c o mp uta tionally more e xpensi v e compa r

to the corresponding non balan c ed k er n e l.

2286

P AIRWISES V M SANDL ARGES CALEP ROBLEMS

T able 2 compar es the needed trainin g time of both approac h e s. T her e, e xamples from the doub le

interv a l ta sk ( cf. S ec tio n 5.1) o f dime

n =nsio

500nare u se d w her e e ach cla ss is re p r esente d by 5

e xamp le Ks,T a n d its balanced v er sion

KT L w ith linear stand a rd k ernels are chosen a s pairw ise

k er n e l, a cache size of 1 00MB is se le cted for caching the pair wis e k ernel v alue s, and all possible

pairs are used for trainin g . I t tur n s out, that the appr o a ch with balanced k ernels is thre e to four times

f a ste r th an using symme tric trainin gOfs course,

ets . the technique of caching the s ta ndard k er n e l

v alu es a s desc ribed in Section 4.1 is used within all me asure me nts .

Numberm

Symme tric training setBala nc ed k ernel

of e xamp le s

(t in hh:mm)

500

1000

1500

2000

2500

0:0 3

0:4 6

3:2 6

9:4 4

23:1 5

0:01

0:17

0:56

2:58

6:20

T able 2: T ra ining time for symmetr ic tr ain ing sets a nd for bala nce d k ernels

5. Classificat i o n Exper iments

In this section we w ill present results of applying pairw ise S VMs to one sy nth etic data set and to

one real w orld data set. Befor e we come to those data sets in Sections 5.1 and 5.2 we

KTl inin

L tr oduce

po l y

and KT L . Those k e rnels denote

KT L (5) with linear sta ndard k ernel and homogenous polynomial

pol y

pol y

lin

l in

stan da rd k ernel of de gree tw o, respecti

The

v ely

k ernels

.

KM

KT M are defined

L, KM L , KT M, a n d

analogously . In the follo wing, detectio n err or trade-of f curv es (D ET curv es c f. Gama ssi e t al., 2004)

w ill be u s ed to me a sure the p e rformance of a p a ir wis e c la ssifier . Such a cur v e sho ws for a n y f a

ma tch r ate (FMR ) the corresponding f a ls e non matc h rate (FNMR ). A specia l point of in te re st of

such a curv e is the (appr o ximated) equal er ror rate ( E ER ) , that is the v alue for w hich FMR =FN MR

holds.

5.1 Dou ble Inter v al Task

Let us descr ibe the

double in te rval ta of

skdimensionn. T o get s u c h an e xample

x ∈ {o 1, 1} n one

dra w is, j , k, l ∈ so that 2≤ i ≤ j , j + 2 ≤ k ≤ l ≤ n and defines

N

xp ≔

_

1 p ∈ { i ,..., j } ∪ {k,..., l } ,

o 1 otherwis e.

) ≔ ( i , k) . Note that the pair( j , l ) does not influence

The classc of such an e xample is gi v en

c( xby

o 3) ( n o 2) / 2 cla sses .

the class. H ence, the re( n

are

F or o ur me asure me nts we selecte

n = 500dand te sted all k ernels in (4)–(7) w ith a linear standard

k erne l and a homogenous polynomial standard k er nel o f de gre e tw o, r especti v ely . W e cr eate d a te

set consistin g of 750 e xamples of 50 c la sses so th at each c la ss is repr esente d by 15 e xample s. An y

training set w as generated in such a w ay that th e set of classes in the tr ain ing set is dis join t from the

2287

BRUNNER, F ISCHER,L UIGANDT HIES

1

1

lin 50 Classes

K ML

lin 100 Classes

K ML

lin 200 Classes

K ML

poly

K TM 50 Classes

poly

K TM 100 Classes

poly

K TM 200 Classes

0.9

0.8

0.7

0.8

0.7

0.6

FNMR

FNMR

0.6

0.5

0.5

0.4

0.4

0.3

0.3

0.2

0.2

0.1

0.1

0

0.001

lin

K ML

poly

K ML

lin

K TL

poly

K TL

lin

K TM

poly

K TM

0.9

0.01

0.1

1

FMR

0

0.001

0.01

0.1

1

FMR

(a) Dif fe r e n t c lass n umbe r s in tra inin g

(b) D if fere n t k ernels f or 2 00 cla s se s in tra inin g

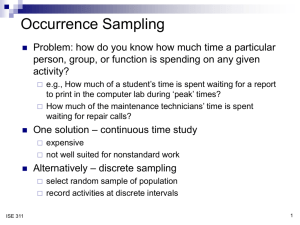

F ig ure 1 : DE T curv es for doub le in te rv al ta sk

set of cla ss es in the te st set. W e cre ate d training se ts consistin g of 50 classes and dif fe rent n umb ers

of e xamples per class. F or tr ain ing all po ss ible training pair s w er e use d .

W e observ ed th at an inc reasing number of e xamp le s per class impro v es th e perfor ma nce in dependently of th e o th er para me te r s. As a tr ade -of f between the needed tr ain ing time and p e rformanc

of the classifier , we decided to use 15 e xamples pe r c la ss for th e me asur

I n de

eme

p e nts

nd .e ntly of

the se le cted k ernel, a penalty paramete

C of 1,r000 tu rned out to be a good choic e. T he kKeDSrnel

led to a bad perfor ma nce r e g ar dle ss of the sta ndard k ernel

Theref

chosen.

o r e, w e omit results f or

KDS.

Figure 1a sho ws that an increa sing numb er of c la sses in the training set impro v es th e per for ma nce significantly . T his holds for a ll k e rnels mentio ned a bo v e. H ere , we only prese nt results f or

pol y

l in

KM

L andKT M . F igur e 1b sho ws the D ET c u r v es for dif f erent k e rnels w he re the tr a ining set consis t

of 20 0 cla sses . In p a rticula r , an y o f the pair wis e k ernels whic h uses a homogeneous p oly no mial o

de gr ee 2 as standard k ernel le ads to b e tte r re sults than its corr espon din g counte r part w ith a lin e a

p ol y

stan da rd k e rnel.

F or FMR s sma ller than 0.07

KT M leads to the best results, w he reas for la r ger

pol y pol y

po l y

FMR s the D ET curv e KsMofL , KT L , andKT M intersect.

5.2 Lab e le d F aces in t h e W ild

In this subsec tio n w e will pr esent results of applying pairw is e SVMs to th e labele d f a ces in the

wild (L FW) data set (Huang et al., 2007). This data set consis,233

ts ofimages

13

of ,5749 pers o ns.

Se v e ral remarks on th is d a ta set are in order . H uang et al. ( 2 007) suggest tw o protocols for per for ma nce me a sureme nts. Here , the unrestric ted pr otocol is u s ed. This protoc o l is a fi x e d te nf old c ro

v alidation where ea ch test set consists of 300 p ositi v e pairs and 300 ne g ati v e p a ir s. Moreo v er , an

person (class) in a training set is not par t of th e cor responding test set.

There ar e se v er al fea ture v ecto rs a v ailab le for the LF W data set. F or the p r esente d measureme

w e ma in ly f ollo w ed L i et al. (2012) a n d used the sca le -in v aria nt f eatur e transform ( S I F T)-bas ed

fea ture v e ctors for the f unn e le d v er sion (Guillaumin e t al., 2009) of LFW. I n addition, the aligned

× 150 pix

images (W olf e t al., 2009) a re used. F or this , the aligned images are cropped

to 80

e ls and

are then normalized by passing th em thr oug h a log function (c f. L i et al.,A2012).

f te rw ards, the

2288

P AIRWISES V M SANDL ARGES CALEP ROBLEMS

1

0.9

lin

K ML

poly

K ML

lin

K TL

poly

K TL

lin

K TM

poly

K TM

0.9

0.8

0.7

0.7

0.6

FNMR

FNMR

0.6

0.5

0.4

0.5

0.4

0.3

0.3

0.2

0.2

0.1

0.1

0

0.001

SIFT

LBP

TPLBP

LBP+TPLBP

SIFT+LBP+TPLBP

0.8

0.01

0.1

1

FMR

0

0.001

0.01

0.1

1

FMR

p ol y

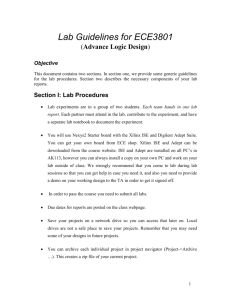

( a ) V ie w 1 p a rtition , dif fere n t k e r ne ls, a d de d up

e c isio icted

n

(b )dUnrestr

p rotocKoT l,M , dif ferent f e atu re v ec to r s,

f unction v a lue s o f S IFT , L P B , a nd T PLB P fe atu“ +r e

v

ec

tors

” stand s for add in g up th e corr e sp ond in g d e cis io n f unction v alu e s

F ig ure 2 : DE T curv es for L FW data set

loca l bin ary patterns (L BP) (Oja la et al., 2002) and three- patc h L BP ( T PLB P) (W olf et al., 2008)

are e xtracted. In contr a st to Li et a l. (2012), the pose is neith er estimated nor sw a p pe d and no PCA

is applied to the data.A s the norm of the L BP featur e v ec tors is not th e same for a ll images we

scaled th em to Euclid e an norm 1.

F or mode l se le ction, the V ie w 1 par titio n of the L FW data bas e is recommended (Huang et al.,

2007). U sin g all possible pairs of this partitio n f or trainin g and for te sting, we obtained that a penalty

pol y

para me te

C rof 1,000 is suita ble.Mor eo v er , f or e ach used featu re v ector , K

the

ernel

ads to

T Mk le

the be st r esults among all used k e rnels and als o if sums of decision f u nc tio n v a lues belo ng in g to

SIFT , LB P, and TP LBP f eatur e v ec tors are

F or

used.

e xample, Figure 2a sho w s the pe rformance

of dif fere nt k ernels, w he re the decis io n function v alues corr esponding to SIFT , LB P, and T PLB P

fea ture v ector s a re added up.

Due to the spe ed up te chniques pr esente d in S ec tio n 4 w e w ere able to tr ain w ith la r ge n umb er

of tr ain ing pairs. H o we v er , if all pair s w e re used for tr a ining, then a n y tr a ining set w ould consis t o

,000,000 pairs and the tr ain ing w ould still nee d too muc hHence,

approximate ly 50

time. w her eas

in an y train ing set all positi v e trainin g pairs were used, the ne g a ti v e tr ain ing pairs w er e r ando mly

,000,000

selecte d in such a w ay th at an y trainin g set consis

ts ofpairs.

2

The trainin g of such a mo del

took less than 24 ho ur s on a sta ndard PC. In Figure 2b w e present th e a v er age D ET curv es obtained

pol y

for KT M and f eature v e ctors based on SIFT , L BP, and TPL BP. Inspired b y Li et al. ( 2 012) , we

determin e d tw o f urther DE T curv es by a d din g up th e dec is ion function v a lues. This le d to v ery goo

results. Further more, w e concatenate d th e SIFT , LBP, a n d T PLB P f eature v ec tors. Surpris in gly , th

training of some of those models needed lo nger than a week.

Therefor e, we do not prese n t th ese

results.

I n T able 3 the mean equal e rror rate (EE R) a nd the standard er ror of the me an (SEM) o btained

fr o m the te nfold cr oss v alidatio n are pr o vided for se v eral ty pes of f eatur e v ectors. Note , that ma n y

of our re sults ar e compar able to the state of the art or e v en better . The curr ent sta te of the ar t can be

found on the homepage of Huang et al. ( 200 7) a nd in th e publication of Li et al. (2012). If only SIFT± 0is

.0040

based fea ture v ectors a re used, then the best kn o w n.125

re sult

0 (EE R± SEM). W ith

2289

BRUNNER, F ISCHER,L UIGANDT HIES

.1252± SE

pairw ise SV Ms w e achie v ed th e same EE R b ut a slig htly higher

0.0062.

M 0 If w e add up

the decision function v alues corr espon din g to th e LBP and T PL BP featur e v ector s, then our result

.1050

0.1210± 0.0046 is w orse compare d to th e sta te of th e

art 0± 0.0051. O ne possible reason

for th is f act might be that w e did not sw ap the pose.

Finally , for the added up dec is ion function

.0947

v alu es corre spon din g to S I F T , L BP a n d TP LBP f eature v ector s, our

per± forma

0.0057nce 0

.

±

.

is better th an 00993 0 0051. F ur thermo re, it is w orth noting th at our stand a rd e rrors of th e me an

are compara b le to th e other pr esente d le ar n in g algorithms although mos t of them use a PC A to

reduce nois e and dimension of the featur e v ectors.

N ote that the re sults of the comme r cia l system

are not directly compar able since it use s outside tr a ining data (for r efer ence s ee Huang e t al., 20 07) .

S IFT

LB P

TP LB P L+ T

S+L +T

CS

P airw ise Mean E ER 0.1252

SVM

SE M

0.0062

0.1497 0.1452 0.1210 0.0947

0.0052 0.0060 0.0046 0.0057

-

State of Mean E ER 0.1250

the A r t SE M

0.0040

0.1267 0.1630 0.1050 0.0993 0.0870

0.0055 0.0070 0.0051 0.0051 0.0030

T able 3:Mea n EE R a nd SE M for LF W data set. S=S I F T , L=LB P, T=T PL BP, +=adding up decision

f u nc tio n v alues , CS=C ommercialface.com

system r2011b

6. Fi na l Remarks

In this p a per we suggested the S VM fr ame w or k for handlin g lar ge p a ir wis e cla ssific atio n p r oble m

W e analy zed tw o approaches to enforce the symmetr y of the o btained claTssifie

o the rs.

best of

our kno w ledge, w e g a v e the first proof th at symme try is indeed achie v ed. Then, we pro v ed that f or

each par ame ter set of one approa ch there is a cor responding para me ter set of the oth er one such th at

both approaches le ad to th e same classifier

Additionally

.

, we sho w ed that the approach b a sed on

balanced k er n e ls le ads to shor te r training times.

W e discussed deta ils of the imp leme ntatio n of a pairw ise SVM solv er and pre sente d numerical

results. Those results demonstrate th at pairw is e SVMs are capable o f successfully treatin g lar ge

scale pa ir wis e classification pr o blems . F ur thermor e, we sho w e d tha t pair wis e SV Ms compete v er

well for a r eal w or ld data se t.

W e w ould lik e to under lin e that some of the dis c u ss ed techniques could be transfer red to oth er

approac h e s for solving pairw ise classification p r oble

F orms

e xample,

.

most of th e results can be

applied ea sily to One Cla ss Supp or t Vec tor Ma chines (S c h ¨o lk opf et al., 2001).

Ackno wledgments

W e w ould lik e to th ank the unkno w n refe rees for their v aluable comments and suggestions.

2290

P AIRWISES V M SANDL ARGES CALEP ROBLEMS

Ref e r e nces

J. Ab e rneth y , F . B a ch, T . Evg e niou, a n d J.-P. Ver t. A ne w appr o a ch to collaborati v e fi lter ing: O

ator estimation with spec tr al re gu larization

J ournal

. of Mac hine Learning Resear

, 10:803–826,

ch

2009.

A. B ar -Hillel a n d D. W einshall. Learnin g dista nce f u nc tio n by coding simila r ity . In Z. G hahr ama ni,

editor Pr

, oceedin g s of the 24th Inte rnational C onfe r ence on Mac hin e Learning

, pages

(ICML ’ 07)

65–72 . A C M, 2007.

A. B ar -Hillel, T . H e rtz , and D . W einshall. Boostin g ma r gin base d dista nce functions for cluster ing. I n C. E. Brodle y , editor

In ,P r ocee d in gs of the 21st Internatio nal Confe r e nce on Mac hine

, pages 393– 400. A CM, 200 4a .

Learning (I C M L ’04)

A. B ar -Hillel, T . H er tz , and D. W ein shall. L e arning dis tance functions for imagePrretrie

o- v al. In

ceedin g s of the IEEE Com puter Socie ty C onfer ence on C ompute r V is io n an d P attern Reco gnition

(CV PR ’04)

, v olume 2, pages 570–577. IEE E Computer Society Press, 2 004b.

A. B en- H ur and W. Sta f ford Nob le. K ernel me thods for predicting protein–protein inte r actions.

, 21(1):38–46, 2005.

B io informatics

C. B r unnerA.

, Fis cher K

, . L uig, and T . Thie s.P air wis ke ernels,support v ectorma chine s,

and the application to la r gescale problems .T echnic aReport

l

MAT H-NM-04-2011, I n¨at

stitute o f Nu mericalMath ema tics,T echnis c hUeni v er sitDresden,

O ctobe r2011. UR L

.

http://www.math.tu-dresden.de/˜fischer

C.-C. C hang and C .-J. L in . LIBSV M: A lib r ary f or su ppor t v e ctor ma chine s.

A CM T r ansactionson Intellig ent Sy ste msand Tec hnolo ,gy 2(3):1 –2 6, 2011. UR L

.

http://www.csie.ntu.edu.tw/˜cjlin/libsvm (August 2011)

K . Du a n a n d S. S. K eerthi. Which is the b e st multic la ss SV M method? A n empiric a l study . In N. C.

O za , R. Po likar , J. Kittle r , and F . Ro li,Peditors,

r oce edings of th e 6th I n ternatio nal Wo r kshop on

, pages

27 8–285. Springer , 2005.

Multiple C la ssifier System

s

M. Gama ssi, M. Lazza roni, M. Mis in o , V. Piuri, D. S a n a , and F . Scotti. A cc urac y and pe rformance

of biome tric syste ms.Pr

In oceedings of the 2 1th I E EE I nstr um e ntatio n and Me asur em ent Tec hnolo gy C onfer ence ( IMTC, ’04)

pages 510–51 5. IEEE , 2 004.

M. Guillaumin ,J. Verbeek,and C. Schmid .Is tha tyou? Metr ic le a rningapproache for

s f ace

identificatio n. InP r ocee d in gs of the 12th Interna tional Confe r ence on Com puter V is ion (ICC V

’09), pages 4 98–505, 2009. U RL

http://lear.inrialpes.fr/pubs/2009/GVS09 (August

20 11) .

S. I. Hill and A . Doucet. A fr ame w or k f or k ernel-based multi- cate gory cla ssific

atio of

n.

J ournal

, 30( c1 h) :525–564, 2007.

A r tifi cia l Inte llig enc e Resear

C.-W. Hsu and C.- J. Lin. A c ompariso n o f me th od s for multiclass support v ectorIE

ma

EEchin es.

T r ansactio ns on Neur al Networks

, 13( 2):415–425, 2 002.

2291

BRUNNER, F ISCHER,L UIGANDT HIES

G. B. Huang, M. Rame sh, T . Ber g, and E. L ea rned-Miller . Labeled f a ces in the wild: A database f or

studying f ace rec og nition in unconstraine d e n vironme nts . T echnic a l R e p or t 07-49, Uni v ersity of

Massachusetts, Amher st, October 2007.http://vis-www.cs.umass.edu/lfw/

UR L

(August

20 11) .

P. Li, Y. Fu, U. Moh a mme d, J. H . E lde r , and S . J. D . Prince . P r obabilistic models for infe rence abou

identity .IEEE T r ansactions on P atte rn A nalysis and Mac h in e I, nte

34:1llig4 ence

4–157, 2012 .

T . Oja la, M. Pietik ¨ain en,and T . M ¨aenp ¨a Multir

¨a. esolu tio ngra y-scale and rotatio n in v ariant te xtur e classification with lo cal bin ary pa tte r ns.I n IEE E T r an sac tio ns on P attern Analy sis a nd Mac hine Intellig ence

, 24(7) :971–987 ,2002. UR L

.

http://www.cse.oulu.fi/MVG/Downloads/LBPMatlab (August 2011)

P. J. P hillips. Support v e ctor machines applied to f ace recognition. In M. S . K earns, S. A. Solla , and

D . A. C ohn, edito rAs,dvances in Neur al Informatio n Pr ocessing System

, page

s 11s 803–809.

MIT Pres s, 19 99.

J. C . Pla tt.F a sttrainin gof support v ec tormachinesusin gsequentialminimal optimiz a tio n.

In

B . Sch¨ olk o pf , C. J. C. B ur g e s, and A . J. S moAladvance

, e d itors,

s in K ernel Meth od

Support

s:

, pages 185–208. MIT Press, 1999.

Vector Learning

R. R ifkin a n d A . K lau tau. In d e fense of one-vs-all cla ss ificatio

n. of Mac hine Learning

J ournal

200 4.

R e sear, c5:101–141,

h

.

B. Sch ¨olk opf and A . J. Smola

Learning

with K ernelsSu

: pport Vecto r Mac hin es, Re gula riz ation ,

O ptimizatio n, and Be yond

. MI T Press, 2001.

B. S c h ¨olk opf, J. C. Platt, J. Sha we-T a y lo r , A . J. Smola, and R. C . W illiams on. Estimating th e s u p

of a high-dimensional distr ib utio

Neur

n. al C omputations

, 13( 7):1443–1471 , 2001.

J. P. Vert, J. Qiu, and W. No ble. A ne w p a ir wis e k er nel for biological netw ork infer ence w ith s u pport

v ecto r machines.

BMC Bioinformatics

, 8(Supp l 10):S8, 2007.

L. W ei, Y. Ya ng , R . M. N ishika w a, and M. N . W ernic k. Learnin g of perceptua l similarity fr om

e xpert re aders for mammo gra m r etr iePvr al.

In

oceedin

gs of the I E EE Internatio nal Sym posiu m

, pages

on Biomedic al Ima ging ( ISB

I ) 13 56–1359. IEE E, 2006.

L. W o lf, T . Hassner , and Y. T aigman. Descrip tor based methods in the

F awild

c es. Iinn Real- Life

Ima g es Work sho p a t the E ur opean Co nfer ence on Com pute r V ision, (ECC

2008. V

UR’08)

L

.

http://www.openu.ac.il/home/hassner/projects/Patchlbp (August 2011)

L. W olf , T . H assner , and Y. T aigman. Simila r ity scor es based on background

P rsamples.

oce ed- In

v olu

2, pages 88–97, 2009.

ings of the 9th Asian Confe r ence on Com puter V ision ( A, C

CVme

’ 09)

2292