Developing Rubrics within the Context of Assessment Methods
Peggy Maki, Senior Scholar

advertisement

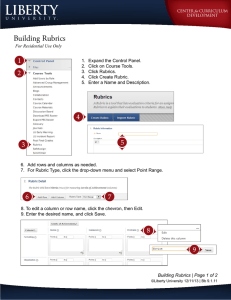

Assessing for Learning, AAHE
pmaki@aahe.org

Rubrics
Rubrics establish a basis for determining how well a student is achieving, performing, using, or integrating “x.”
- Raters use rubrics to judge work.
- Professors use them to give students feedback.
- Students use them to develop their work and to understand how well it meets the stated standards.

What are the key elements in a rubric?
- Levels of achievement
- Criteria that distinguish good work from poor work
- Descriptions of the criteria at each level of achievement

Examples of Levels of Achievement
- Verbal or numerical rankings (such as: conceptual understanding is apparent; conceptual understanding only adequate; conceptual understanding not adequate; does not attempt conceptual understanding)
- Mastery levels (levels of attainment)
- Holistic (one score overall) or analytic (a separate score for each criterion)
- National standards for scoring
- Departmental standards for scoring (developed through consensus)

Examples of Criteria for Conceptual Attainment in Fundamental Mathematics
- Conceptual understanding apparent
- Consistent notation, with only an occasional error
- Logical formulation
- Complete or near-complete solution/response

Ways to Derive Rubrics
- Develop through consensus with colleagues
- Derive from examples of student work (graded A through F)
- Derive from knowledge about the transition from novice to expert
- Draw on students’ experiences

Format for Scoring Sheet
Level | Trait 1 | Trait 2 | Trait 3 | Trait 4 | Trait 5
Level |         |         |         |         |
Level |         |         |         |         |
(Each cell holds the indicators for that trait at that level.)

Exercise: Develop a rubric for one outcome.

Where You Seek Evidence…
- Course-embedded
- In-class
- Out-of-class
- Off-campus
- Online

Some Methods That Provide Direct Evidence
- Student work samples
- Collections of student work (e.g., portfolios)
- Capstone projects
- Course-embedded assessment
- Observations of student behavior
- Internal juried review of student projects
- External juried review of student projects
- Externally reviewed internship
- Performance on a case study/problem
- Performance on a problem and its analysis (the student explains how he or she solved the problem)
- Performance on national licensure examinations
- Response to a critical incident
- Locally developed tests
- Standardized tests
- Pre- and post-tests
- Essay tests blind-scored across units

Mapping

Methods That Do Not Provide Direct Evidence but May Be Combined with Other Methods: Indirect Methods
- Faculty publications (unless students are involved)
- Courses selected or elected by students
- Faculty/student ratios
- Percentage of students who study abroad
- Enrollment trends
- Percentage of students who graduate within five to six years
- Diversity of the student body
- Focus groups (representative of the population)
- Interviews (representative of the population)
- Surveys

Other sources of information that contribute to the inferences you draw:
- NSSE (National Survey of Student Engagement) results
- Grades
- Participation rates or persistence in support services
- Course-taking patterns
- Majors

Identify Methods That Will Assess Your Outcome Statement Using Your Rubric: