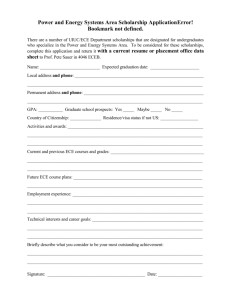

2013-2014 Annual Program Assessment Report

College: CECS
Department: Electrical and Computer Engineering
Program: Computer Engineering
Assessment liaison: Dr. Deborah van Alphen

1. Overview of Annual Assessment Project(s). Provide a brief overview of this year’s assessment plan and process.
(See the chart at the end of this report (Question 8) for an overview of our 3-year assessment cycle.)

Fall ’13 and Spring ’14 were the first two semesters of our 3-semester Implementation Phase, during which we implement the changes that were approved as part of our Program Improvement Plan. (The Program Improvement Plan was recommended by the ECE Department Program Improvement Committee, and approved by the ECE Department faculty in Spring ’13. The plan was based on the results of our Major Assessment conducted in calendar year 2012.)

The list below provides the recommended actions that were part of the Program Improvement Plan of Spring ’13, along with a brief comment about the progress that was made during the first two semesters (Fall ’13 and Spring ’14) allotted for their implementation.

- Add ethics lecture to Senior Design, followed by an assignment and quiz
  o Done.
- Design targeted assignments for outcomes h, i and j with courses to be selected by Digital Curriculum Committee
  o Done by the department faculty at large, and submitted to our accreditation agency, ABET.
- Clean up lab manual: ECE 240L
  o Done.
- Develop videos to instruct students on the proper use of lab equipment
  o Done. Videos were developed to instruct students on the proper use of the digital multimeter, the oscilloscope, and the function generator. These videos are now available from the department web page, and their availability is announced in the appropriate lab courses.
- Write ECE 309 lab manual and oral report rubric suitable for internet oral reports
  o Mostly done.
- Add a section on Simulink to ECE 309
  o Mostly done.
- Require Senior Design students to include a slide in their final oral presentations about at least one professional standard applicable to their project
  o Done, starting in Fall ’13 and now ongoing.
- Drop the prerequisite quizzes from our formal assessment process
  o Done.
- Form faculty committee to eliminate curriculum overlap in math sequence: Math/ECE 280, ECE 309, ECE 350, and ECE 351
  o The committee met twice, but some work remains to be done, including writing a report of recommended changes that the department will be asked to approve.

The tasks that are not yet completed will be worked on this semester (Fall ’14), which is the last of the three semesters allotted for implementing the Program Improvement Plan.

2. Assessment Buy-In. Describe how your chair and faculty were involved in assessment-related activities. Did department meetings include discussion of student learning assessment in a manner that included the department faculty as a whole?
All ECE Department faculty members voted on the Program Improvement Plan (as given in Question 1). The actions recommended in the Program Improvement Plan are implemented by individual members or subgroups of the faculty. For example:

- changes in Senior Design were mostly implemented by the Senior Design instructor;
- changes to ECE 309 were mostly made by the course coordinator for that course;
- the faculty committee to eliminate curriculum overlap in the math sequence consisted of four ECE faculty members, including the chair.

The assessment process, the assessment results, and the progress being made in implementing the Program Improvement Plan have been discussed in almost all of our monthly department meetings.

3. Student Learning Outcome Assessment Project. Answer items a-f for each SLO assessed this year. If you assessed an additional SLO, copy and paste items a-f below, BEFORE you answer them here, to provide additional reporting space.

3a. Which Student Learning Outcome was measured this year?
No SLOs were measured this year. Our plan calls for us to assess all SLOs, but only every third year. The most recent Major Assessment Phase (in which data was collected) was in calendar year 2012. (See Question 8 for the overall assessment plan.)

3b. Does this learning outcome align with one or more of the university’s Big 5 Competencies? (Delete any which do not apply)
N/A

3c. What direct and/or indirect instrument(s) were used to measure this SLO?
N/A

3d. Describe the assessment design methodology: For example, was this SLO assessed longitudinally (same students at different points) or was a cross-sectional comparison used (comparing freshmen with seniors)? If so, describe the assessment points used.
N/A

3e. Assessment Results & Analysis of this SLO: Provide a summary of how the results were analyzed and highlight findings from the collected evidence.
N/A (Our most recent analysis was done in Sp ’13 and covered all 14 of our SLOs; see the Annual Report for that semester.)

3f. Use of Assessment Results of this SLO: Describe how assessment results were used to improve student learning. Were assessment results from previous years or from this year used to make program changes in this reporting year? (Possible changes include: changes to course content/topics covered, changes to course sequence, additions/deletions of courses in program, changes in pedagogy, changes to student advisement, changes to student support services, revisions to program SLOs, new or revised assessment instruments, other academic programmatic changes, and changes to the assessment plan.)
See the answer to Question 1 for the changes that were part of our Program Improvement Plan. All of those recommended actions resulted from our Major Evaluation of Spring ’13, which was based on the Major Assessment data collected in calendar year 2012.

4. Assessment of Previous Changes: Present documentation that demonstrates how the previous changes in the program resulted in improved student learning.
Most of our major curriculum changes have been motivated by indirect assessment results such as those obtained from our Senior Exit Interview and Survey. For example, from previous surveys and interviews we learned that our students knew they needed to know more MATLAB and that they thought the math course in Differential Equations was too theoretical, lacking in engineering applications. Senior Exit Survey results¹ from 2012 and 2013 indicated that the addition of ECE 280 (Applied Differential Equations in EE) and ECE 309 (Numerical Methods in EE) to the curriculum has solved these problems. The relevant survey questions and answers are summarized below. Because the courses are quite new, only a small number of graduating seniors had taken these two courses by the time the surveys were administered.

Senior Exit Survey Results re: ECE 280 and ECE 309 (Combined from Fall ’12 and Sp ’13 Surveys)

| Survey and Question Identification | Response | # of Responses | % of Responses |
|---|---|---|---|
| ECE Senior Exit Survey, 2012 & 2013 (#15): If you took the new Diff. Eq. course (ECE 280) from the ECE Dept, did it cover and emphasize engineering applications? | Yes | 10 | 76.9 |
| | No | 3 | 23.1 |
| | Total | 13 | |
| ECE Senior Exit Survey, 2012 & 2013 (#16): If you took the new Num. Analysis course (ECE 309), did it cover and emphasize MATLAB? | Yes | 18 | 90 |
| | No | 2 | 10 |
| | Total | 20 | |

¹ The survey results are based on EE student responses, because as of Spring ’13, no CompE students had yet taken these courses.

5. Changes to SLOs? Please attach an updated course alignment matrix if any changes were made. (Refer to the Curriculum Alignment Matrix Template, http://www.csun.edu/assessment/forms_guides.html.)
N/A – No changes were made.

6. Assessment Plan: Evaluate the effectiveness of your 5 year assessment plan. How well did it inform and guide your assessment work this academic year? What process is used to develop/update the 5 year assessment plan? Please attach an updated 5 year assessment plan for 2013-2018.
(Refer to Five Year Planning Template, plan B or C, http://www.csun.edu/assessment/forms_guides.html.)
The plan is being emailed as a separate attachment.

Because our program is assessed by ABET once every six years, we have an assessment process with a 3-year cycle. Our assessment plan itself is well established by now, and the plan works in the sense that we are able to collect and process the data that we need to assess the program. However, in my judgment the plan is too intensive: it requires us to collect too much data of limited value for program improvement.

The plan is re-examined once every three years, as we approach the Major Assessment Phase (which is the data collection phase). We re-examine the program objectives, the outcomes, and the courses in which data is to be collected for each outcome. This re-examination is being done this semester (Fall ’14).

7. Has someone in your program completed, submitted or published a manuscript which uses or describes assessment activities in your program? Please provide citation or discuss.
No.

8. Other information, assessment or reflective activities or processes not captured above.
Our assessment process (shown below) consists of a three-year cycle, with three phases: the Major Assessment Phase (lasting for one year), in which we collect data from exams, surveys, etc.; the Major Evaluation Phase (lasting for one semester), in which we evaluate the assessment data and form an Improvement Plan; and the Implementation Phase (lasting for three semesters), in which we implement the Program Improvement Plan.

In the Spring of 2013, we completed a Self-Study Report for ABET accreditation, which covers two cycles (hence, 6 years) of our assessment process. For interested readers, the Self-Study Report is available from either the chair or the dean.

The three-year cycle is:

- Major Assessment Phase (collect data: exams, homework, surveys, …)
  o 1 (calendar) year: 2012, 2015, 2018, …
- Major Evaluation Phase (analyze and evaluate assessment results)
  o 1 semester: Sp ’13, Sp ’16, …
  o Output: Program Improvement Plan
- Implementation Phase (implement Program Improvement Plan)
  o 3 semesters

The table below shows two 3-year cycles of assessment, and the way that the cycles align with the ABET Self-Study Report of Spring 2013. Our next ABET Self-Study Report will be in 2019.

Assessment Process, 2012 - 2017