

Subject: T0293/Neuro Computing

Year: 2009

Self-Organizing Network Model (SOM)

Meeting 10


Unsupervised Learning of Clusters

• The neural networks discussed in earlier chapters perform their function only after supervised training.

• SOM is a neural network that learns without supervision (unsupervised): no target outputs are given.

• SOM learns by clustering the input data.

• Clustering is essentially grouping similar objects together and separating dissimilar objects.


Clustering and Similarity Measures

• Suppose we are given a set of patterns with no information about how many clusters they form. The clustering problem in this case is to identify the number of clusters according to certain criteria.

• Grouping a set of patterns { X1, X2, ... , XN } requires a 'decision function'. The most commonly used similarity measure is the Euclidean distance:

$$\lVert X - X_i \rVert = \sqrt{(X - X_i)^{T} (X - X_i)}$$

• The smaller the distance, the more similar the patterns.
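A minimal Python sketch of this similarity measure, assuming patterns are plain lists of numbers of equal length. The function names `euclidean_distance` and `most_similar` are illustrative only and do not come from the course material.

```python
import math

def euclidean_distance(x, xi):
    """||x - xi|| = sqrt((x - xi)^T (x - xi)) for two equal-length vectors."""
    if len(x) != len(xi):
        raise ValueError("vectors must have the same length")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, xi)))

def most_similar(x, patterns):
    """Index of the stored pattern closest to x (smallest distance = most similar)."""
    return min(range(len(patterns)),
               key=lambda k: euclidean_distance(x, patterns[k]))

patterns = [[0.0, 0.0], [5.0, 5.0], [0.5, 0.2]]
print(euclidean_distance([3.0, 4.0], [0.0, 0.0]))  # 5.0
print(most_similar([0.4, 0.4], patterns))          # 2
```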


Pattern Structure

• Patterns in the pattern space can be distributed in two different structures. Consider the structures in Figure 7.10.

Figure 7.10 Pattern structure: (a) natural similarity (clusters) and (b) no natural similarity (panel labels: Class 1, Class 2, Class 3, straight lines).

Natural structure, as in panel (a), is always associated with pattern clustering.


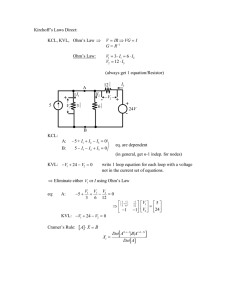

SOM Algorithm

Step 1. Initialize Weights

Initialize the weights from the N inputs to the M output nodes shown in Fig. 17 to small random values. Set the initial radius of the neighborhood shown in Fig. 18.
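Step 1 could be sketched as follows. The function name `init_som`, the range [-0.1, 0.1] used for "small random values", and the initial radius of half the larger grid side are all assumptions; the slides do not fix these values.

```python
import random

def init_som(n_inputs, rows, cols, scale=0.1, seed=None):
    """Step 1 sketch: weights[j][i] holds w_ij, the weight from input i to
    output node j, for M = rows * cols output nodes on a 2-D grid.
    Assumed: 'small random' means uniform in [-scale, scale], and the
    initial neighborhood radius is half the larger grid side."""
    rng = random.Random(seed)
    m = rows * cols
    weights = [[rng.uniform(-scale, scale) for _ in range(n_inputs)]
               for _ in range(m)]
    radius = max(rows, cols) // 2
    return weights, radius

weights, radius = init_som(n_inputs=3, rows=4, cols=5, seed=0)
print(len(weights), len(weights[0]), radius)  # 20 3 2
```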

Step 2. Present New Input

Present a new input x0(t), x1(t), ..., xN-1(t).

Step 3. Compute Distances to All Nodes

Compute the distance $d_j$ between the input and each output node $j$ using

$$d_j = \sum_{i=0}^{N-1} \big(x_i(t) - w_{ij}(t)\big)^2$$

where $x_i(t)$ is the input to node $i$ at time $t$ and $w_{ij}(t)$ is the weight from input node $i$ to output node $j$ at time $t$.
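Step 3 in code, as a sketch assuming `weights[j][i]` stores w_ij and the input is a plain list. The square root is omitted, as in the formula for d_j: squaring does not change which node is closest. The name `compute_distances` is hypothetical.

```python
def compute_distances(x, weights):
    """Step 3: d_j = sum_{i=0}^{N-1} (x_i(t) - w_ij(t))^2 for every output node j.
    weights[j][i] holds w_ij, the weight from input i to output node j."""
    return [sum((xi - wij) ** 2 for xi, wij in zip(x, w_j)) for w_j in weights]

weights = [[0.0, 0.0], [1.0, 1.0], [0.5, 0.0]]
print(compute_distances([1.0, 0.0], weights))  # [1.0, 1.0, 0.25]
```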


Step 4. Select Output Node with Minimum Distance

Select node $j^*$ as the output node with minimum $d_j$.
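Step 4 amounts to an argmin over the distances. In this sketch ties are broken by taking the lowest node index; that rule is an assumption, since the slides do not say how ties are handled. The name `select_winner` is hypothetical.

```python
def select_winner(distances):
    """Step 4: return j*, the index of the output node with minimum d_j.
    min() keeps the first minimum it meets, so ties go to the lowest index."""
    return min(range(len(distances)), key=distances.__getitem__)

print(select_winner([1.0, 1.0, 0.25]))  # 2
print(select_winner([0.3, 0.1, 0.1]))   # 1
```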

Step 5. Update Weights to Node j* and Neighbors

Weights are updated for node $j^*$ and all nodes in the neighborhood defined by $NE_{j^*}(t)$, as shown in Fig. 18. The new weights are

$$w_{ij}(t+1) = w_{ij}(t) + \eta(t)\,\big(x_i(t) - w_{ij}(t)\big), \qquad j \in NE_{j^*}(t),\; 0 \le i \le N-1$$

The term $\eta(t)$ is a gain term ($0 < \eta(t) < 1$) that decreases in time.
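A sketch of Step 5, assuming the output nodes form a `rows` x `cols` grid numbered row by row, and that NE_j*(t) is the square block of nodes within a given radius of j* (Fig. 18 suggests this shape, but it is an assumption here). The names `neighborhood` and `update_weights` are hypothetical.

```python
def neighborhood(j_star, radius, rows, cols):
    """NE_j*(t): nodes within `radius` grid steps of j* on a rows x cols map.
    Assumed square (Chebyshev) shape; node j sits at (j // cols, j % cols)."""
    r0, c0 = divmod(j_star, cols)
    return [r * cols + c
            for r in range(max(0, r0 - radius), min(rows, r0 + radius + 1))
            for c in range(max(0, c0 - radius), min(cols, c0 + radius + 1))]

def update_weights(weights, x, j_star, radius, eta, rows, cols):
    """Step 5: w_ij(t+1) = w_ij(t) + eta(t) * (x_i(t) - w_ij(t)) for j in NE_j*(t)."""
    if not 0.0 < eta < 1.0:
        raise ValueError("gain term must satisfy 0 < eta < 1")
    for j in neighborhood(j_star, radius, rows, cols):
        weights[j] = [wij + eta * (xi - wij) for xi, wij in zip(x, weights[j])]
    return weights

# 1 x 3 map: winner is node 0, radius 1, so nodes 0 and 1 move halfway to x.
w = update_weights([[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]], [1.0, 1.0],
                   j_star=0, radius=1, eta=0.5, rows=1, cols=3)
print(w)  # [[0.5, 0.5], [0.5, 0.5], [0.0, 0.0]]
```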

Step 6. Repeat by Going to Step 2
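Putting Steps 1 to 6 together gives a compact training loop. This is a sketch, not the course's reference implementation: the linear decay of η(t) and of the neighborhood radius, the epoch count, the initial gain of 0.5, the weight range [-0.1, 0.1], and the square neighborhood are all assumed choices. The name `train_som` is hypothetical.

```python
import random

def train_som(data, rows, cols, epochs=50, eta0=0.5, seed=0):
    """Sketch of Steps 1-6. Assumed (not from the slides): eta(t) and the
    neighborhood radius shrink linearly, and NE_j*(t) is a square block."""
    rng = random.Random(seed)
    n = len(data[0])
    # Step 1: small random weights; weights[j][i] holds w_ij.
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n)]
               for _ in range(rows * cols)]
    radius0 = max(rows, cols) // 2
    total = epochs * len(data)
    t = 0
    for _ in range(epochs):
        # Step 2: present each input once per epoch, in random order.
        for x in rng.sample(data, len(data)):
            frac = t / total
            eta = eta0 * (1.0 - frac)               # gain term, decreasing in time
            radius = round(radius0 * (1.0 - frac))  # neighborhood shrinks to 0
            # Step 3: squared distances d_j to every output node.
            d = [sum((xi - wij) ** 2 for xi, wij in zip(x, w)) for w in weights]
            # Step 4: winning node j* with minimum d_j.
            j_star = min(range(len(d)), key=d.__getitem__)
            # Step 5: update j* and every node in its square neighborhood.
            r0, c0 = divmod(j_star, cols)
            for r in range(max(0, r0 - radius), min(rows, r0 + radius + 1)):
                for c in range(max(0, c0 - radius), min(cols, c0 + radius + 1)):
                    j = r * cols + c
                    weights[j] = [wij + eta * (xi - wij)
                                  for xi, wij in zip(x, weights[j])]
            t += 1  # Step 6: go back to Step 2 with the next input.
    return weights

data = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1],
        [1.0, 1.0], [0.9, 1.0], [1.0, 0.9]]
weights = train_som(data, rows=2, cols=3)
```

Because every update moves a weight vector only part of the way toward an input (0 < η(t) < 1), the trained weights stay within the range spanned by the initial weights and the data.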


Kohonen's algorithm produces a 'vector quantizer' by adjusting the weights.
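Used as a vector quantizer, the trained weight vectors act as a codebook: each input is replaced by the index of its nearest weight vector, and that vector serves as its reconstruction. A minimal sketch; `quantize` and the toy codebook are hypothetical.

```python
def quantize(x, codebook):
    """Map x to the index of its nearest codebook vector (e.g. trained SOM
    weights) using squared Euclidean distance; return (index, reconstruction)."""
    j = min(range(len(codebook)),
            key=lambda k: sum((a - b) ** 2 for a, b in zip(x, codebook[k])))
    return j, codebook[j]

codebook = [[0.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
print(quantize([0.2, 0.9], codebook))  # (2, [0.0, 1.0])
```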

Figure 17. Two-dimensional array of output nodes used to form feature maps (inputs x0, x1, ..., xN-1). Every input is connected to every output node via a variable connection weight.


Kohonen's algorithm, which produces 'feature maps', requires a neighborhood around each node.

Figure 18. Topological neighborhoods at different times as feature maps are formed: nested regions NEj(0), NEj(t1), and NEj(t2) around node j. NEj(t) is the set of nodes considered to be in the neighborhood of node j at time t. The neighborhood starts large and slowly decreases in size over time. In this example, 0 < t1 < t2.
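The shrinking neighborhood can be illustrated on a 7 x 7 map. The linear radius schedule in `radius_at` is an assumption (the slides only say the neighborhood "slowly decreases"), as is the square shape of NE_j(t); both helper names are hypothetical.

```python
def radius_at(t, radius0, t_max):
    """Assumed schedule: radius shrinks linearly from radius0 at t = 0 to 0 at t_max."""
    return max(0, round(radius0 * (1 - t / t_max)))

def neighborhood(j, radius, rows, cols):
    """NE_j(t) as the square block of grid nodes within `radius` steps of node j."""
    row0, col0 = divmod(j, cols)
    return {r * cols + c
            for r in range(max(0, row0 - radius), min(rows, row0 + radius + 1))
            for c in range(max(0, col0 - radius), min(cols, col0 + radius + 1))}

rows = cols = 7
j = 3 * cols + 3  # centre node of the 7 x 7 map
for t in (0, 40, 80):  # 0 < t1 < t2
    r = radius_at(t, radius0=3, t_max=100)
    print(t, r, len(neighborhood(j, r, rows, cols)))
# prints: 0 3 49, then 40 2 25, then 80 1 9
```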
