Concurrent Programming Introducing some principles of reentrancy, mutual exclusion and thread-synchronization

advertisement

Concurrent Programming

Introducing some principles of

reentrancy, mutual exclusion and

thread-synchronization

Problems with ‘stash.c’

• We wrote a ‘public clipboard’ device-driver

in order to illustrate ‘sleeping’ and ‘waking’

• But our initial version of that driver module

exhibits some problems – for example, if

we allow more one process to read from

our ‘/dev/stash’ device-file concurrently:

Reader in window #1: $ cat /dev/stash

Reader in window #2: $ cat /dev/stash

Writer in window #3: $ ls /usr/bin > /dev/stash

What is a ‘race condition’ ?

• Without any ‘synchronization’ mechanism,

multiprogramming is vulnerable to ‘races’

in which programs produce unpredictable

and erroneous results, due to the relative

timing of instruction-execution in separate

threads or processes

• An example program demonstrates this

phenomenon (see our ‘concur1.cpp’)

One cure is communication

• What’s needed is some way for the tasks

to be made aware of each other’s actions,

or for a separate ‘supervisor’ program (the

operating system -- or one of its installed

kernel modules) with power to intervene,

and thus to impose some synchronization

• Various mechanisms exist in Linux which

make it possible for tasks to communicate

or for the kernel (and drivers) to mediate

Kernel semaphores

• The ‘race conditions’ that are exhibited by

our ‘stash.c’ device-driver when we use it

with two or more ‘reader’ processes -- or

with two or more ‘writer’ processes -- can

be eliminated by enabling our driver to

enforce a ‘one-writer/one-reader’ policy

• This is easy to do by using ‘semaphores’

Mutual-exclusion syntax

• Declare a semaphore: struct semaphore sem;

• To initialize this semaphore:

init_MUTEX ( &sem );

• To acquire this semaphore:

down_interruptible( &sem );

• To release this semaphore:

up( &sem);

struct file_operations

struct file_operations my_fops =

{

owner:

THIS_MODULE,

read:

my_read,

write:

my_write,

open:

my_open,

release:

my_release,

};

‘open()’ uses file->f_fmode

• You can implement an ‘open()’ method in

your device-driver that lets only one task

at a time open your device for reading:

{

if ( file->f_fmode & FMODE_READ )

down_interruptible( &sem );

return 0; // success

}

‘release()’ uses file->f_fmode

• You can implement a ‘release()’ method in

your device-driver that lets a ‘reader’ task

release its earlier acquired semaphore:

{

if ( file->f_fmode & FMODE_READ )

up( &sem );

return 0; // success

}

‘newstash.c’

• We write a new version of ‘stash.c’ that

illustrates the use of two semaphores to

restrict device-file access to one ‘writer’

and one ‘reader’ at a time

• Other tasks that want to ‘read’ or ‘write’ are

put to sleep if they try to ‘open’ the devicefile, but are woken up when the

appropriate semaphore gets ‘released’

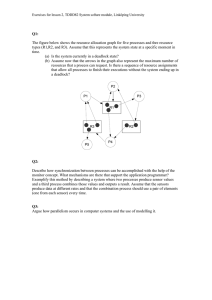

‘struct semaphore’

For a mutual-exclusion semaphore

(i.e., a ‘mutex’), the ‘count’ field will

be initialized to 1, meaning that at

most one task is allowed to acquire

the semaphore at any given moment

sem

count

sleepers

lock

task_list

prev

next

A task ‘aquires the semaphore’ when

it calls the ‘down( &sem )’ function

That function decrements the ‘count’

value, then immediately returns if the

new value of ‘count’ is non-negative;

otherwise, the task is ‘put to sleep’ on

the semaphore’s ‘task_list’ wait-queue

(and ‘sleepers’ is incremented); later,

when another task that had acquired

the semaphore calls the ‘up( &sem )’

function, the value of ‘count’ will get

incremented and any tasks sleeping

on this wait_queue will be awakened

Multiprogramming

• Linux also provides programmers support

for writing applications that are comprised

of more than just a single process

• This is called ‘multiprogramming’

• Again, synchronization is needed in cases

where a ‘race-condition’ might arise, as in

concurrent access to a shared resources

Advantages of multithreading

• For multiprocessor systems (two or more

CPUs), there are potential efficiencies in

the parallel execution of separate threads

(a computing job may be finished sooner)

• For uniprocessor systems (just one CPU),

there are likely software design benefits in

dividing a complex job into simpler pieces

(easier to debug and maintain -- or reuse)

Some Obstacles

• Separate tasks need to coordinate actions,

share data, and avoid competing for same

system resources

• Management ‘overhead’ could seriously

degrade the system’s overall efficiency

• Examples:

– Frequent task-switching is costly in CPU time

– Busy-Waiting is wasteful of system resources

Some ‘work-arounds’

• In place of using ‘pipes’ for the exchange

of data among separate processes, Linux

lets ‘threads’ use the same address-space

(reduces ‘overhead’ in context-switching)

• Instead of requiring one thread to waste

time busy-waiting while another finishes

some particular action, Linux lets a thread

voluntarily give up its control of the CPU

Additional pitfalls

• Every thread needs some private memory

that cannot be ‘trashed’ by another thread

(for example, it needs a private stack for

handling interrupts, passing arguments to

functions, creating local variables, saving

CPU register-values temporarily)

• Each thread needs a way to prevent being

interrupted in a ‘critical’ multi-stage action

Example of a ‘critical section’

• Updating a shared variable:

• Algorithm:

– (1) copy variable’s current value to a register

– (2) perform arithmetical operation on register

– (3) copy register’s new value back to variable

• If a task-switch occurred between any of

these steps, another task could interfere

with the correct updating of this variable

(as our ‘concur1.cpp’ demo illustrates)

‘mutual exclusion’

• To prevent one thread from ‘sabotaging’ the

actions of another, some mechanism is needed

that allows a thread to temporarily ‘block’ other

threads from gaining control of the CPU -- until

the first thread has completed its ‘critical’ action

• Some ways to accomplish this:

– Disable interrupts (stops CPU time-sharing)

– Use a ‘mutex’ (a mutual exclusion variable)

– Put other tasks to sleep (remove from run-queue)

What about ‘cli’?

• Disabling interrupts will stop ‘time-sharing’

among tasks on a uniprocessor system

• But it would be ‘unfair’ in to allow this in a

multi-user system (monopolize the CPU)

• So ‘cli’ is a privileged instruction: it cannot

normally be executed by user-mode tasks

• It won’t work for a multiprocessor system

What about a ‘mutex’?

• A shared global variable acts as a ‘lock’

• Initially it’s ‘unlocked’: e.g., int mutex = 1;

• Before entering a ‘critical section’ of code,

a task ‘locks’ the mutex: i.e., mutex = 0;

• As soon as it leaves its ‘critical section’, it

‘unlocks’ the mutex: i.e., mutex = 1;

• While the mutex is ‘locked’, no other task

can enter the ‘critical section’ of code

Advantages and cautions

• A mutex can be used in both uniprocessor

and multiprocessor systems – provided it

is possible for a CPU to ‘lock’ the mutex

with a single ‘atomic’ instruction (requires

special support by processors’ hardware)

• Use of a mutex can introduce busy-waiting

by tasks trying to enter the ‘critical section’

(thereby severely degrading performance)

Software mechanism

• The operating system can assist threads

needing mutual exclusion, simply by not

scheduling other threads that might want

to enter the same ‘critical section’ of code

• Linux accomplishes this by implementing

‘wait-queues’ for those threads that are all

contending for access to the same system

resource – including ‘critical sections’

Demo programs

• To show why ‘synchronization’ is needed

in multithreaded programs, we wrote the

‘concur1.cpp’ demo-program

• Here several separate threads will all try to

increment a shared ‘counter’ – but without

any mechanism for doing synchronization

• The result is unpredictable – a different

total is gotten each time the program runs!

How to employ a ‘mutex’

• Declare a global variable: int mutex = 1;

• Define a pair of shared subroutines

– void enter_critical_section( void );

– void leave_critical_section( void );

• Insert calls to these subroutines before

and after accessing the global ‘counter’

Special x86 instructions

• We need to use x86 assembly-language

(to implement ‘atomic’ mutex-operations)

• Several instruction-choices are possible,

but ‘btr’ and ‘bts’ are simplest to use:

– ‘btr’ means ‘bit-test-and-reset’

– ‘bts’ means ‘bit-test-and’set’

• Syntax and semantics:

– asm(“ btr $0, mutex “); // acquire the mutex

– asm(“ bts $0, mutex “); // release the mutex

Our two mutex-functions

void enter_critical_section( void )

{

asm(“spin: btr $0, mutex “);

asm(“

jnc spin

“);

}

void leave_critical_section( void )

{

asm(“

bts $0, mutex “);

}

Where to use the functions

void my_thread( int * data )

{

int

i, temp;

for (i = 0; i < maximum; i++)

{

enter_critical_section();

temp = counter;

temp += 1;

counter = temp;

leave_critical_section();

}

}

‘reentrancy’

• By the way, we point out as an aside that

our ‘my_thread()’ function (on the previous

slide) is an example of ‘reentrant’ code

• More than one process (or processor) can

be safely executing it concurrently

• It needs to obey two cardinal rules:

– It contains no ‘self-modifying’ instructions

– Access to shared variables is ‘exclusive’

‘concur2.cpp’

• We rewrote ‘concur1.cpp’ demo-program,

as ‘concur2.cpp’, inserting these functions

that will implement ‘mutual exclusion’ for

our thread’s ‘critical section’

• But note how much time it now consumes

$ time ./concur2

The x86 ‘lock’ prefix

• In order for the ‘btr’ instruction to perform

an ‘atomic’ update (when multiple CPUs

are using the same bus to access memory

simultaneously), it is necessary to insert

an x86 ‘lock’ prefix, like this:

asm(“ spin:

lock

btr $0, mutex “);

• This instruction ‘locks’ the shared systembus during this instruction execution -- so

another CPU cannot intervene

In-class exercise #1

• Add the ‘lock’ prefix to your ‘concur2.cpp’

demo, and then try executing it again on

the multiprocessor system

• Use the Linux ‘time’ command to measure

how long it takes for your demo to finish

• Observe the ‘degraded’ performance due

to adding the ‘mutex’ functions – penalty

for achieving a ‘correct’ parallel program

The ‘nanosleep()’ system-call

• Your multithreaded demo-program shows

poor performance because your threads

are doing lots of ‘busy-waiting’

• When a thread can’t acquire the mutex, it

should voluntarily give up control of the

CPU (so another thread can do real work)

• The Linux ‘nanosleep()’ system-call allows

a thread to ‘yield’ its time-slice

In-class exercise #2

• Revise your ‘concur3.cpp’ program so that

a thread will ‘yield’ if it cannot immediately

acquire the mutex (see our ‘yielding.cpp’

demo for header-files and call-syntax)

• Use the Linux ‘time’ command to compare

the performance of ‘concur3’ and ‘concur2’