CMSC 414 Computer and Network Security Lecture 24 Jonathan Katz


Application-level security

I.e., programming-language security

Previous focus was on protocols and algorithms to prevent attacks

– Are they implemented correctly?

Here, focus is on programming errors and how to deal with them

– Reducing/eliminating/finding errors

– Containing damage resulting from errors

Classifying flaws

Intentional flaws

– E.g., “backdoors”

Unintentional flaws

– E.g., programmer errors

Buffer overflows

50% of reported vulnerabilities

Overflowing a buffer results in data written elsewhere:

– User’s data space/program area

– System data/program code

• Including the stack, or memory heap

Can also occur in other contexts

– E.g., parameters passed via URL

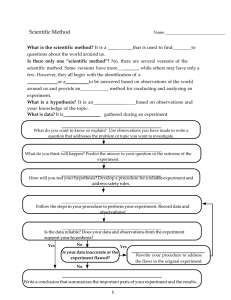

Example

Suppose a web server contains a function:

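    #include <string.h>               /* strcpy */

    void do_something(char *buf);     /* assumed to be defined elsewhere in the server */

    void func(char *str) {
        char buf[128];
        strcpy(buf, str);             /* no length check: overflows buf when strlen(str) >= 128 */
        do_something(buf);
    }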

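When the function is invoked, the stack looks like:

    top of stack -> | buf | sfp | ret-addr | str |

What if *str is 136 bytes long? After strcpy, the stack looks like:

    top of stack -> | *str | ret | str |

With 4-byte sfp and ret-addr fields (an assumption; the slide does not state the word size), 128 + 4 + 4 = 136, so the copy runs through buf and sfp and overwrites ret-addr.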

Basic exploit

Suppose the stack looks like:

    top of stack -> | *str | ret | Code for P |

Program P: exec("/bin/sh")

(exact shellcode by Aleph One)

When func() exits, it returns through the overwritten ret into the code for P, and the user is given a shell!

Note: the attack code runs on the stack
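For contrast, here is a minimal sketch of a length-checked variant of func(). The names func_checked and BUF_SIZE, the return codes, and the body of do_something are assumptions made for illustration; the slides do not give a fix.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define BUF_SIZE 128

/* Hypothetical stand-in for the slide's do-something(); it only checks
   that the buffer it receives is properly terminated within bounds. */
static void do_something(const char *buf) {
    assert(strlen(buf) < BUF_SIZE);
}

/* Returns 0 on success, -1 if str is NULL or does not fit in buf. */
int func_checked(const char *str) {
    char buf[BUF_SIZE];
    size_t len;

    if (str == NULL) {
        return -1;
    }
    len = strlen(str);
    if (len >= sizeof buf) {
        return -1;              /* reject instead of overflowing */
    }
    memcpy(buf, str, len + 1);  /* len + 1 also copies the terminating NUL */
    do_something(buf);
    return 0;
}
```

With this check, the 136-byte input from the example is rejected before anything is written past buf.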

Finding buffer overflows

Hackers find buffer overflows as follows:

– Run web server on local machine

– Issue requests with long tags. All long tags end with “$$$$$”

– If the web server crashes, search the core dump for “$$$$$” to find the overflow location.
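The marker trick above can be sketched in two helpers: one builds a long tag ending in the marker, the other scans a raw memory image for it. The function names, the 'A' filler byte, and the idea of passing the core dump as an in-memory byte array are assumptions for illustration, not details from the slides.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MARKER "$$$$$"

/* Write pad_len filler bytes followed by MARKER and a NUL into out.
   Returns 0 on success, -1 if out is too small. */
int make_long_tag(char *out, size_t out_size, size_t pad_len) {
    size_t marker_len = strlen(MARKER);

    assert(out != NULL);
    if (out_size < marker_len + 1 || pad_len > out_size - marker_len - 1) {
        return -1;
    }
    memset(out, 'A', pad_len);
    memcpy(out + pad_len, MARKER, marker_len + 1);
    return 0;
}

/* Scan raw bytes (which may contain NULs) for MARKER.
   Returns the offset of the first match, or -1 if absent. */
long find_marker(const unsigned char *dump, size_t dump_len) {
    size_t marker_len = strlen(MARKER);

    for (size_t i = 0; i + marker_len <= dump_len; i++) {
        if (memcmp(dump + i, MARKER, marker_len) == 0) {
            return (long)i;
        }
    }
    return -1;
}
```

The offset returned by find_marker shows where the end of the oversized tag landed in memory, which is the information the slide says is read out of the core dump.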

Incomplete mediation

E.g., changing symbolic link between checking and use

E.g., parameters passed via URL

– Parameters may be checked at client-side…

– …but checking still necessary server-side

E.g., changing prices in URL…

Cross-site scripting

Violation of privacy…

General rule: always check inputs from untrusted sources!
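As a sketch of server-side checking for the price-in-URL case: the server accepts only an item id and a quantity, validates both strictly, and looks the price up itself. The catalog, the quantity limit of 100, and all function names here are hypothetical.

```c
#include <assert.h>
#include <ctype.h>
#include <errno.h>
#include <stdlib.h>

/* Hypothetical catalog: prices (in cents) live on the server only. */
static const long PRICE_CENTS[] = {1999, 4999, 12900};
#define NUM_ITEMS (sizeof PRICE_CENTS / sizeof PRICE_CENTS[0])

/* Parse a non-negative decimal string into [min, max].
   Rejects empty input, signs, whitespace, and trailing junk.
   Returns 0 on success, -1 on failure. */
int parse_bounded(const char *s, long min, long max, long *out) {
    char *end;
    long v;

    assert(out != NULL);
    if (s == NULL || !isdigit((unsigned char)s[0])) {
        return -1;
    }
    errno = 0;
    v = strtol(s, &end, 10);
    if (errno != 0 || *end != '\0' || v < min || v > max) {
        return -1;
    }
    *out = v;
    return 0;
}

/* Compute the order total from untrusted URL parameters.
   Any price supplied by the client is never consulted.
   Returns the total in cents, or -1 if a parameter is invalid. */
long order_total_cents(const char *item_param, const char *qty_param) {
    long item, qty;

    if (parse_bounded(item_param, 0, (long)NUM_ITEMS - 1, &item) != 0) {
        return -1;
    }
    if (parse_bounded(qty_param, 1, 100, &qty) != 0) {
        return -1;
    }
    return PRICE_CENTS[item] * qty;
}
```

The design choice is that the client never gets to state a price at all, so there is nothing to tamper with; client-side checks can still exist for usability.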

Time-of-check vs. time-of-use

“Serialization/synchronization” flaw

E.g., presenting command; then changing command while it is being verified
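For the file-system version of this flaw (a symbolic link swapped between checking and use), one common mitigation is to open first and then check the already-opened object, instead of checking the path and opening it afterwards. The sketch below assumes a POSIX system; open_regular_nofollow is a hypothetical name, and O_NOFOLLOW only guards the final path component.

```c
#define _POSIX_C_SOURCE 200809L

#include <assert.h>
#include <fcntl.h>
#include <sys/stat.h>
#include <unistd.h>

/* Open path read-only, refusing a symlink as the last component, then
   verify via the descriptor that it is a regular file. Because fstat()
   inspects the object that was actually opened, a later change to the
   path cannot alter what was checked.
   Returns a file descriptor, or -1 on any failure. */
int open_regular_nofollow(const char *path) {
    struct stat st;
    int fd;

    assert(path != NULL);
    fd = open(path, O_RDONLY | O_NOFOLLOW);
    if (fd < 0) {
        return -1;
    }
    if (fstat(fd, &st) != 0 || !S_ISREG(st.st_mode)) {
        close(fd);
        return -1;
    }
    return fd;
}
```

The racy pattern this replaces is a check on the path name (for example with access() or stat()) followed by a separate open() of the same name, which leaves a window in which the name can be redirected.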

Covert channels

Intentionally inserted by programmers into software, to later leak information…

Examples in book… e.g., spacing of information on a printed page, formats, etc.

Other examples: “file lock” channel, existence/non-existence of file, etc.
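The existence/non-existence channel can be illustrated with a one-bit sketch: one process creates or removes a file at an agreed path, and another learns the bit just by probing whether the file exists. The function names and the use of a single agreed path are assumptions for illustration.

```c
#include <assert.h>
#include <stdio.h>

/* Sender: encode one bit as "file exists" (1) or "file absent" (0).
   Returns 0 on success, -1 if the file could not be created. */
int covert_send_bit(const char *path, int bit) {
    assert(path != NULL);
    if (bit) {
        FILE *f = fopen(path, "w");
        if (f == NULL) {
            return -1;
        }
        fclose(f);
        return 0;
    }
    remove(path);   /* failure is ignored: the file may already be absent */
    return 0;
}

/* Receiver: decode the bit by probing for the file. No file contents
   are read; the existence of the name is the whole message. */
int covert_recv_bit(const char *path) {
    FILE *f;

    assert(path != NULL);
    f = fopen(path, "r");
    if (f == NULL) {
        return 0;
    }
    fclose(f);
    return 1;
}
```

Nothing here looks like communication to an access-control check on file contents, which is why such channels have to be found by analysis rather than by inspecting data transfers.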

Analysis of covert channels

“Shared resource matrix”

– Tabulates subjects and the resources they have access to

Information flow analysis (in source code)

– E.g., “B = A” supports info. flow from A to B

– “If (D == 1) then B = A” supports info. flow from D to B also!

– Trace information flow throughout program…
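The two flows above can be written out in C. The function names are hypothetical, and infer_d_is_one is an added illustration of why the conditional counts as a flow from D: someone who knows A and observes B learns whether D was 1.

```c
#include <assert.h>

/* Explicit flow: "B = A" copies A's value into B. */
int explicit_flow(int a) {
    int b = a;
    return b;
}

/* Implicit flow: "if (D == 1) then B = A". B's final value depends on D
   even though D is never assigned to B. */
int implicit_flow(int d, int a) {
    int b = 0;
    if (d == 1) {
        b = a;
    }
    return b;
}

/* An observer who knows a (nonzero) and sees b can tell whether d == 1. */
int infer_d_is_one(int b, int a) {
    assert(a != 0);   /* with a == 0 both branches leave b == 0 */
    return b == a;
}
```

Tracing flow through a program means following both kinds of dependency: assignments and the conditions that guard them.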

Timing attacks

Password checking routines…

Web caching
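For the password-checking case, the sketch below contrasts an early-exit comparison with one that always examines every byte. The names are hypothetical, and the steps counter is a stand-in for running time added for illustration. This is not a vetted constant-time routine: a compiler may transform the second loop, so real code should rely on a reviewed library function.

```c
#include <assert.h>
#include <stddef.h>

/* Early-exit comparison: stops at the first mismatch, so the amount of
   work (reported in *steps) grows with the length of the correct prefix. */
int leaky_equal(const unsigned char *a, const unsigned char *b,
                size_t n, size_t *steps) {
    assert(steps != NULL);
    *steps = 0;
    for (size_t i = 0; i < n; i++) {
        (*steps)++;
        if (a[i] != b[i]) {
            return 0;
        }
    }
    return 1;
}

/* Comparison that touches all n bytes regardless of where they differ,
   accumulating the differences and deciding only at the end. */
int steady_equal(const unsigned char *a, const unsigned char *b, size_t n) {
    unsigned char diff = 0;

    assert(a != NULL && b != NULL);
    for (size_t i = 0; i < n; i++) {
        diff |= (unsigned char)(a[i] ^ b[i]);
    }
    return diff == 0;
}
```

With leaky_equal, an attacker who can measure the work done can confirm a guess one character at a time, because a guess with a longer correct prefix takes measurably longer to reject.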

Finding/preventing flaws

Penetration testing?

Limited to finding/patching existing flaws

– Cannot be used to guarantee that software is free of all flaws

Patching flaws in this way has its own problems

– Narrows focus to fixing a specific flaw, rather than addressing issues more broadly

– May introduce new flaws

Automated testing

Successful to some extent

Hard to catch all flaws

– Traditional program verification/testing focuses on what a program should do

– Here, we are concerned with things a program should not do

Techniques for preventing flaws

Secure programming

– Developmental controls

– Better techniques

– Secure programming languages

– Static analysis

Secure compilation

– Dynamic analysis

– Software fault isolation

Techniques…

Inferring trust

– Source authentication/code signing

– Proof-carrying code

OS controls

– Sandboxing

– System-call interposition techniques

– Secure boot of OS

Developmental controls…

Modularity

– Improves ability to locate flaws

– Easier to verify/fix code

Encapsulation/information hiding

Peer review/testing/analysis

Automated code testing

Secure programming techniques

(Based on: “Programming Secure Applications for Unix-Like Systems,” David Wheeler)

Overview

Validate all input

Avoid buffer overflows

Program internals…

Careful calls to other resources

Send info back intelligently