
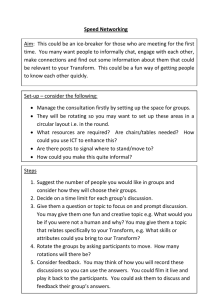

advertisement

A NEW TRANSFORM TECHNIQUE FOR ERROR CORRECTION AND CONCEALMENT¹

F. Marvasti¹, Paul Strauch², P. Ferreira³, Ran Yan²

¹ Dept of Electronics, King’s College London, Strand, London WC2R 2LS, UK
² Lucent Technology, Swindon, SN5 6PP, UK
³ University of Aveiro, Aveiro, Portugal

ABSTRACT

We discuss a new transform method that is suitable for recovering bursts of erasures and for error concealment of speech, image and video signals over ATM and wireless channels. The advantage of this transform is that it works over the field of real and/or complex numbers as opposed to finite fields.

1. INTRODUCTION

The FFT has been used for error detection and correction of real or complex numbers [1-3]. Efficient techniques for decoding FFT codes can be devised for erasure channels [4]. However, error detection and correction of real numbers are very sensitive to noise [5]; there are methods to get around this sensitivity issue [6-7]. In these techniques, redundancy is added by inserting zeros in the transform domain; the inverse transform can correct for erasures.

Error concealment methods have always been regarded as an engineering art to subjectively conceal errors in speech and image signals. Simple methods such as previous-frame substitution are regarded as ad hoc techniques for improving the quality of a received signal or concealing its errors. The rationale behind this approach is the correlation of adjacent samples/frames and the insensitivity of the ear or the eye to the concealed errors. Error concealment is closely related to source and channel coding. If the correlation among adjacent frames is not utilised by the source encoder, then error concealment can improve the quality, especially when some frames are lost. On the other hand, the more powerful the channel encoder, the less frequent a lost or bad frame, and hence the less frequent the need for error concealment.
In this paper, we relate error concealment methods to error correction codes. The consequence of this relationship is the use of Reed-Solomon (RS) like decoding methods for erasure recovery (error concealment), except that we work over the field of real and/or complex numbers instead of a Galois (finite) field.

The basic idea is as follows. If e percent of the frames need error concealment, we can pre-distort the signal by e percent globally at the encoder and thereby convert the error concealment problem into an error correction problem. A simple example clarifies this idea. If e is equal to 10 percent, we can reduce the bandwidth of the source signal at the encoder by 10 percent. This reduction will not cause a noticeable distortion of the signal. In essence, the 10 percent distortion is distributed over all the samples (frames) and is concealed from subjective evaluation. Due to the bandwidth reduction by 10 percent, the signal at the encoder is now over-sampled. Either we can remove the redundancy and then use the classical source and error correction codes or, alternatively, the over-sampling can be regarded as non-binary redundant symbols similar to RS codes [4], [7]. Hence RS decoding can be used for decoding the lost (erased) frames.

Essentially, at the encoder, the source encoder compresses the over-sampled signal and then the channel encoder adds parity bits for error protection. At the decoder, the received bits go sequentially through the channel decoder, the source decoder, and the Error Concealment (EC) decoder. The EC decoder corrects (conceals) the 10 percent bad frames on average. Below, we describe the practical issues related to EC and then describe the technique in more mathematical detail.

¹ Patent protection for this technique has been applied for.

1. DELAY AND SENSITIVITY ISSUES

There are various methods for reducing the bandwidth of the source signal: (1) an ideal low-pass filter, (2) an FIR or IIR filter, (3) zeroing the high frequencies in the FFT or the proposed transform domain. The first method creates infinite delay at the decoder. The second method is equivalent to convolutional codes but is not suitable for bursty frame loss, since the decoder is iterative and fails for bursty losses. The third approach is the preferred method and creates a delay of n samples, the block size of the FFT or the proposed transform; this is equivalent to block codes in error correction coding. The problem with the FFT is that it requires very high sample precision and becomes unstable for large values of n (n > 64) [3], [7]. Below we describe a new transform technique that does not have the drawbacks of the FFT.

2. THE NEW TRANSFORM METHOD

The new transform is derived from the FFT and hence the fast algorithm can still be used. If the elements of the FFT transform matrix are

$a_{i,k} = \exp(j 2\pi i k / n)$ for $i, k = 1, 2, \ldots, n$,

the modified transform is

$b_{i,k} = \exp(j 2\pi p i k / n)$ for $i, k = 1, 2, \ldots, n$,

where p is relatively prime to n.² With this condition satisfied, we are guaranteed that the $b_{i,1}$ are distinct for all values of $i = 1, 2, \ldots, n$ and are a sorted version of the FFT kernels $a_{i,1}$; we can show that the new transform still has conjugate symmetry. The advantage of this transform is that, unlike the FFT, it is no longer sensitive to the quantization of samples and additive noise.

The forward transform can be handled by an FFT followed by sorting; the sorting of coefficients is as follows:

$\mathrm{sort}_i = \mathrm{mod}(p \cdot i, n)$, $i = 1, 2, \ldots, n$.

The inverse transform of the frequency coefficients is equivalent to the inverse sorting followed by the inverse FFT.
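The equivalence between the modified kernel and "FFT plus sorting" can be checked numerically. The following Python sketch is only an illustration under stated assumptions: indices run from 0 to n-1 rather than 1 to n (index n wraps to 0), a naive O(n^2) DFT stands in for a fast FFT routine, and the function names `dft`, `sorted_transform`, `direct_transform` and `inverse_sorted_transform`, as well as the toy values n = 8 and p = 3, are hypothetical and not taken from the paper.

```python
import cmath
from math import gcd


def dft(x, sign=1):
    """Naive O(n^2) DFT with kernel exp(sign*j*2*pi*i*k/n); a stand-in for an FFT."""
    n = len(x)
    return [
        sum(x[k] * cmath.exp(sign * 2j * cmath.pi * i * k / n) for k in range(n))
        for i in range(n)
    ]


def sorted_transform(x, p):
    """Forward transform: ordinary DFT, then output i takes coefficient (p*i) mod n."""
    n = len(x)
    if gcd(p, n) != 1:
        raise ValueError("p must be relatively prime to n")
    a = dft(x)
    return [a[(p * i) % n] for i in range(n)]


def direct_transform(x, p):
    """Same transform computed straight from b[i][k] = exp(j*2*pi*p*i*k/n)."""
    n = len(x)
    return [
        sum(x[k] * cmath.exp(2j * cmath.pi * p * i * k / n) for k in range(n))
        for i in range(n)
    ]


def inverse_sorted_transform(b, p):
    """Inverse transform: undo the sorting, then apply the inverse DFT."""
    n = len(b)
    a = [0j] * n
    for i in range(n):
        a[(p * i) % n] = b[i]  # inverse sorting
    return [v / n for v in dft(a, sign=-1)]  # inverse DFT with 1/n scaling


if __name__ == "__main__":
    x = [1.0, -2.0, 3.5, 0.25, -1.0, 2.0, 0.0, 4.0]  # toy real block, n = 8
    p = 3                                            # gcd(3, 8) = 1
    b = sorted_transform(x, p)
    d = direct_transform(x, p)
    back = inverse_sorted_transform(b, p)
    print("sorted DFT matches direct kernel:",
          all(abs(u - v) < 1e-9 for u, v in zip(b, d)))
    print("inverse recovers the block:",
          all(abs(u - v) < 1e-9 for u, v in zip(back, x)))
    print("conjugate symmetry holds:",
          all(abs(b[(len(x) - i) % len(x)] - b[i].conjugate()) < 1e-9
              for i in range(len(x))))
```

Because p is coprime to n, the map i -> (p*i) mod n is a permutation, which is why the inverse sorting step can fill every FFT bin exactly once. For real input the permuted coefficients keep conjugate symmetry, so zeroing a symmetric set of coefficients still yields a real signal.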
Since the kernel of the new transform is exponential, the RS decoding procedure can be used for recovering lost (bad) frames [4]. The value of p can be optimised based on the value of n and the number of bad frames in a block of n samples; this issue is discussed in the next section.

3. THE CHOICE OF P

Besides the fact that p and n have to be relatively prime, there are two contradictory requirements for the choice of p. The first is that we need a p such that the decoding is stable. For stability, it is necessary that the error locator polynomial [6] spans the unit circle, i.e., the following complex points should be spread over the unit circle:

$\exp(j 2\pi p m / n)$, $m = n - \tau s, \ldots, n$,

where $\tau$ is the number of bad frames and s is the number of samples per frame. In other words, we require $p \tau s \ge n$.

On the other hand, filtering in the new transform domain creates some distortion in the original source signal. In order to reduce this distortion, p has to be chosen such that the low-energy coefficients are in the high-frequency region. The FFT (p = 1) is an extreme example, where almost all the high-frequency coefficients are zero. However, the FFT is highly unstable. If p is around n/2, almost half of the high-frequency coefficients of the FFT remain in the high-frequency region of the new transform. However, the transform may not be stable, depending on $\tau$. Also, we can show that for even n the transform for p = n/2 + e is the conjugate of the transform for p = n/2 - e. Therefore, a compromise value for p in the range $n/(\tau s) \le p \le n/2$ has to be chosen. For example, for n = 480, $\tau = 1$ and s = 160, p = 197 was found experimentally to be the best compromise.

4. PROPERTIES OF EC CODES

This class of EC codes, similar to the classical error correction codes, becomes more powerful as n and $\tau$ increase. Assume a pre-distortion measure D as a function of the code rate $r = 1 - \tau s / n$.
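The constraints on p from Section 3 and the code rate r just defined can be checked numerically for the quoted example. The Python sketch below is an illustration only, under several assumptions: the symbol for the number of bad frames is written `tau`, the stability requirement is read as p * tau * s >= n, and the "largest gap" between the points exp(j*2*pi*p*m/n) is a spread measure chosen here for illustration, not one defined in the paper. The function names are hypothetical.

```python
from math import gcd


def check_parameters(n, s, tau, p):
    """Evaluate the stated constraints on p and the code rate for one configuration."""
    lower = n / (tau * s)  # assumed reading of the stability bound: p*tau*s >= n
    upper = n / 2          # for even n, p above n/2 only gives conjugate transforms
    return {
        "coprime": gcd(p, n) == 1,
        "p_range": (lower, upper),
        "p_in_range": lower <= p <= upper,
        "rate": 1 - tau * s / n,
    }


def largest_gap(n, s, tau, p):
    """Largest circular gap, in units of 2*pi/n, between the points
    exp(j*2*pi*p*m/n) for m = n - tau*s, ..., n (smaller means a more even spread)."""
    residues = sorted({(p * m) % n for m in range(n - tau * s, n + 1)})
    gaps = [hi - lo for lo, hi in zip(residues, residues[1:])]
    gaps.append(residues[0] + n - residues[-1])  # wrap-around gap
    return max(gaps)


if __name__ == "__main__":
    n, s, tau = 480, 160, 1
    for p in (1, 197):
        print("p =", p, check_parameters(n, s, tau, p),
              "largest gap:", largest_gap(n, s, tau, p))
```

For p = 1 (the FFT) the points occupy one contiguous arc, so the largest gap is large; a value such as p = 197 scatters the same indices around the circle. This matches the qualitative stability argument but does not by itself identify the best p, which the paper reports as found experimentally.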
If we keep the rate r constant (i.e., constant pre-distortion), we can decrease the Mean Square Error (MSE) of the erased frames to zero by increasing the block size n, provided the average probability of Bad Frame Indication (BFI) is less than the EC redundancy rate $\tau s / n$.³ The price to be paid is frame delay at both the encoder and the decoder. It is therefore desirable to have shorter frame sizes; e.g., the speech standard G.729, which has a 10 ms frame size (s = 80 samples), is more suitable for EC than GSM with frames of 20 ms (s = 160 samples).

² If p and n are not integers, new classes of transforms are generated but are not related to the FFT, and zeroing the high frequencies may not yield a real signal. These transforms can still be used for error correction but not for EC.

³ This is equivalent to the statement that the EC rate should be less than the channel capacity for BFI. For an erasure channel, the capacity is $c = r(1 - P_e)$, where r is the transmitted sample rate and $P_e$ is the probability of BFI. The source information rate is $r(n - \tau s)/n$. The channel capacity theorem implies that $r(n - \tau s)/n \le r(1 - P_e)$, or alternatively, $\tau s / n \ge P_e$.

5. DECODING ALGORITHM

The EC decoding algorithm is similar to RS decoding. For FFT decoding see [4]. The error locator polynomial is

$H(X_k) = \prod_{m=1}^{\tau s} \left( X_k - \exp(j\, i_m q) \right)$,

where $X_k = \exp(j k q)$ and $q = 2\pi p / n$. The value of p determines the type of the transform. For p = 1, the error locator polynomial is that of the FFT. The above error locator polynomial is equivalent to

$H(X_k) = \sum_{t=0}^{\tau s} h_t X_k^{\tau s - t}$.

The $h_t$ values can be found from the inverse transform (inverse sorting and inverse FFT). Since $H(X_{i_m}) = 0$ at every lost position $i_m$, multiplying the above equation by $e(i_m) (X_{i_m})^r$ and then summing over m, we get

$\sum_{t=0}^{\tau s} h_t E_{r + \tau s - t} = 0$, i.e., $E_{r + \tau s} = -\sum_{t=1}^{\tau s} h_t E_{r + \tau s - t}$ (since $h_0 = 1$),

where the $e(i_m)$ are the lost samples of the Bad Frame and the $E_r = \sum_{m=1}^{\tau s} e(i_m) (X_{i_m})^r$ are the transform (FFT and the sorting algorithm) coefficients. Since $\tau s$ samples of $E_r$ are known at the "high frequency" positions, the remaining values of $E_r$ are determined from the above recursive formula. Once the $E_r$ are determined for all values of r, the inverse transform yields the amplitudes of the lost samples of the Bad Frame. For computational complexity see [4] and [7].

6. SIMULATION RESULTS

We have simulated the above new transform method in Mathcad and have listened to the quality of the pre-distorted signal. For n = 480 and p = 197, the transform of a typical speech signal looks like Fig 1. This transform is actually a sorted version of the FFT; the original FFT looks like Fig 2.

Fig 1. Normalised transform coefficients of a segment of speech signal.

Fig 2. Normalised FFT coefficients of the same segment of the speech signal.

The quality of the pre-distorted signal for s = 80 is shown in Fig 3. The quality of the recovery algorithm for $\tau = 1$ and s = 80 is shown in Fig 4.

Fig 3. The recovered, the original, and the pre-distorted signal for $\tau = 1$ and s = 80 (plot annotation: corr(Re(y), xx) = 0.908).

Fig 4. The lost and the recovered speech samples for s = 80 and $\tau = 1$ (plot annotation: corr(e, e1) = 1).

For s = 160, with the other parameters as before, the quality of the pre-distorted signal is shown in Fig 5. The quality of the recovery algorithm for $\tau = 1$ and s = 160 is shown in Fig 6.

Fig 5. The recovered, the original, and the pre-distorted signal for $\tau = 1$ and s = 160 (plot annotation: corr(Re(y), xx) = 0.837).

Fig 6. The lost and the recovered speech samples for s = 160 and $\tau = 1$ (plot annotation: corr(e, e1) = 1).

For n = 480 and $\tau = 1$, a pre-distorted speech signal yields an acceptable quality of speech for s = 80 samples. This pre-distorted signal can conceal one frame in a block of 6 frames. For present GSM applications, where s = 160, n needs to be at least 800 for an acceptable quality. This code can correct one GSM frame per block of 5 frames. In general, the quality of the pre-distorted signal can be improved by increasing n, but increasing n gives more delay. The above transform method has been successfully tested on video signals for both error correction and error concealment. The results will be published in a different paper.

7. CONCLUSIONS

A new class of transform techniques has been derived that is useful for both error correction and error concealment of speech and video signals. Since the proposed transform method can be shown to be a sorted version of the FFT, fast algorithms can be implemented. Also, because the elements of the new transform matrix are exponentially related to each other, efficient RS-type decoding can be used for both error correction and error concealment. Simulation results for both speech and video signals show the validity of the proposed transform method. The simulation results in this paper are for speech signals; the results for video signals will be published later.

8. REFERENCES

[1] R.E. Blahut, “Transform techniques for error control codes,” IBM J. of Res. Develop., vol. 23, pp. 299-315, May 1979.
[2] K. Wolf, “Redundancy, the discrete Fourier transform, and impulse noise cancellation,” IEEE Trans. Commun., vol. COM-31, no. 3, March 1983.
[3] F. Marvasti, “FFT as an alternative to error correction codes,” IEE Colloquium on DSP Applications in Communication Systems, March 1993.
[4] F. Marvasti, M. Hasan and M. Echhart, “Speech recovery with bursty losses,” in Proc. of ISCAS’98, Monterey, CA, May 31-June 3, 1998.
[5] Wong, F. Marvasti, and W.C. Chambers, “Implementation of recovery of speech with impulsive noise on a DSP chip,” IEE Electronic Letters, vol. 31, no. 17, pp. 1412-1413, 17 Aug. 1995.
[6] M. Hasan and F. Marvasti, “Noise sensitivity analysis for a novel error recovery technique,” in Proc. of ICT’98, Halkidiki, Greece, 21-25 June 1998.
[7] F. Marvasti, M. Hasan, M. Echhart, S. Talebi, “Efficient techniques for burst error recovery,” accepted for publication in the IEEE Trans. on SP.