Presented by Maryam Farboodi and Hyun Young Song (806KB)

advertisement

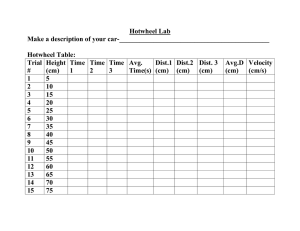

A Rank-by-Feature Framework for Interactive Exploration of Multidimensional Data Jinwook Seo, Ben Shneiderman University of Maryland Hyun Young Song (hsong@cs.umd.edu) Maryam Farboodi (farboodi@cs.umd.edu) Feb, 09 2006 1 HCE 3.0 HCE (Hierarchical Clustering Explorer) Main Idea: GRID principles Graphics, Ranking and Interaction for Discovery Feature Application http://www.cs.umd.edu/hcil/hce/ User Manual http://www.cs.umd.edu/hcil/hce/hce3manual/hce3_manual.html Dataset http://www.cs.umd.edu/hcil/hce/examples/application_e xamples.html 2 Axis-Parallel vs. Non Axis-Parallel Approach Definition 3 dimensions X, Y & Z Axis-parallel: Projection on either X & Y; X & Z or Y & Z Non axis-parallel: Can project on a.X+b.Y & Z Simplicity vs. power Users 3 Related Works Axis-parallel: Machine learning, Info. Vis. Pattern recognition Subset of dimensions to find specific patterns Machine learning and Data mining Supervised/ Unsupervised classification Subspace-based clustering analysis Projections naturally partitioning the data set Information Visualization Permutation Matrix Parallel coordinates: dimension ordering Conditional Entropy 4 Related Work (cntd.) Non axis-parallel: statisticians Two-dimensional projection SOM (Self Organizing Maps) XGobi: Grand tour, Projection pursuit No ranking HD-Eye interactive hierarchical clustering OptiGrid (partitioning clustering algorithm) 5 Major Contributions GRID (Graphics, Ranking and Interaction for Discovery) Study 1D, study 2D, then find features Ranking guides insight, statistics confirm Visualization Techniques Overview Coordination (multiple windows) Dynamic query (item slider) 6 General Overview Menu Toolbar Overviews, Color setting Dendrogram (binary tree), scatterplot 7 tabs Color mosaic, Table view, Histogram Ordering, Scatterplot ordering, Profile search, Gene ontology, K-means 7 General Overview back 8 Load/Transformation Data Natural Log Standardization Normalization To the first column Median Linear scaling back 9 Clustering Algorithm 1. Initially, each data a cluster by itself 2. Merge the pair with highest similarity value 3. Update similarity values 4. Repeat 2 & 3 for n - 1 times to reach one cluster of size n No predefined number of clusters 10 Choosing Algorithm Parameters 11 Linkage Method Average Linkage Dist (Cn , Ck ) Average Group Linkage Ci Ci C j Dist (Ci , Ck ) Cj Ci C j Dist (C j , Ck ) DIST (Cn , Ck ) DIST (Mean(Cn ), Mean(Ck )) Complete Linkage DIST (Cn , Ck ) Max ( DIST (Ci , Ck ), DIST (C j , Ck )) Single Linkage DIST (Cn , Ck ) Min ( DIST (Ci , Ck ), DIST (C j , Ck )) Scheinderman’s 1by1 Linkage Tries to grow the newly merged cluster of last iteration first 12 Dendrogram View back 13 7 Tabs 14 1D Histogram Interface Interface description Control panel, Score overview, Ordered list, Histogram browser 15 1D Histogram Ordering Ranking criteria Normality of the distribution (0~∞) | s | | k 3| s: skewness, k: kurtosis: Uniformity of the distribution (0~∞) k entrpy ( H ) pi log 2 ( pi ) i 1 Number of potential outliers (0~n) IQR = Q3 – Q1, d: item value d Q1 1.5 IQR Suspected outlier: d Q3 1.5 IQR d 3 IQR Extreme outlier: Number of unique values (0~n) Size of the biggest gap (0~max. dim. range) mf: max frequency, t: tolerance: t mf 16 2D Scatterplot Interface Interface description Control panel, Score overview, Ordered list, Scatterplot browser 17 2D Scatterplot Ordering Ranking criteria Statistical Relationship Correlation coefficient(-1~1): Pearson’s coefficient r ( S ) ( x x)( y y) ( x x) ( y y ) Least square error for curvilinear regression(0~1) Quadracity(-∞~∞) Distribution Characteristics Number of potential outliers(0~n) n n n 2 i 1 i i i 1 i 2 i 1 i LOF-based: Density-based outlier detection Number of items in area of interest(0~n) Uniformity(0~∞) : entropy(S ) p log ( p ) k k i 1 j 1 ij 2 ij 18 Demo 19 System Constraints Computational Complexity n data in m dimensional space : O(nm²) O(n) : scoring complexity O(m²) :combination of dimension Display Constraints Appropriate number of dimensions for score overview component: 0~130 Lack of sliders to adjust displacement 20 Evaluation of HCE 3.0 Linear color mapping (3 color or 1 color) Consistent layout of the components Focus-context F: dendrogram – C: rank-by-feature F: ordered list - C: histogram, scatter plot Item slider Dynamic query Multi-window view Dynamic update of data selection in different window 21 Futureworks HCE 3.0 (HCE 3.5) HCE 4.0 ?? 1D, 2D axis parallel projection 3D projection Numerical data format Numerical + categorical, binary, Nominal Limited number of applicable More meaningful datasets to datasets ( us cities, cereal, netscan …) 1D - 5 ranking criteria 2D – 6 ranking criteria demonstrate the power of each ranking criteria Incorporate more criterion into rank-byfeature framework User study Various statistical tools and data mining algorithms 22 Thank you! Questions? 23