Specialization Run-time Code Generation in the context of Dynamic

advertisement

Specialization

Run-time Code

Generation in the

context of Dynamic

Compilation

presented by Mujtaba Ali

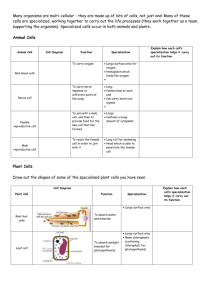

Earlier in the Semester

“VCODE: A Retargetable, Extensible, Very Fast

Dynamic Code Generation System”

–

–

Generate “assembly” at run-time

Run-time Code Generation, but not in the realm of

Dynamic Compilation

“Fast, Effective Dynamic Compilation”

–

–

–

Our only foray into Run-time Specialization

Same authors as the first paper we will discuss

Precursor to DyC – we’ll call it the “DyC paper”

Example Compiler Optimizations

Constant Propagation

(everyone’s favorite)

Copy Propagation

Constant Folding

Loop Unrolling

Branch Elimination

Dead Store Elimination

(Dead-assignment Elimination)

Order Matters

foo (b) {

a = 3;

print(a);

a = b;

print(a);

}

foo (b) {

a = 3;

print(3);

a = b;

print(a);

}

Constant Propagation

foo (b) {

a = 3;

print(3);

a = b;

print(b);

}

Copy Propagation

DA Elimination

foo (b) {

print(3);

print(b);

}

Partial Evaluation

Complete Set of

Compiler Optimizations

Typically:

Partial Evaluation

Constant Propagation

Constant Folding

Loop Unrolling

Inlining

DyC Paper Refresher

Towards Automatic Construction

of Staged Compilers

Matthai Philipose,

Craig Chambers, and

Susan J. Eggers

Contributions

Eases the burden on the compiler writer

–

Compiler writer simply writes optimizations as usual

Supporting contributions:

–

–

Enhancements to Partial Evaluation

Enhancements to Dead-assignment Elimination

Compiler

Writer

Staged Compilation Framework

–

–

Compiler

User

SCF-ML

Language in which compiler optimizations

are written

First-order side-effect free subset of ML

Stager

–

–

Specializes optimizations written in SCF-ML

to separate compile-time and run-time

optimizations

Requires “approximate” information about

program input

High-level Overview of SCF

Concrete Example

Function to be optimized:

–

Say this function is the only contents of “mul_add.c”

Concrete Example (con’t)

At compile-time, compiler user does the following:

O1:

const prop

(SCF-ML)

I1

O2:

copy prop

(SCF-ML)

stager

stager

I2

AST of

mul_add.c

Info:

a is const

at run-time

O3:

dae

(SCF-ML)

O1’:

staged

const prop

(SCF-ML)

stub code

stager

I3

O2’:

staged

copy prop

(SCF-ML)

O3’:

staged

dae

(SCF-ML)

Concrete Example (con’t)

In ML:

Concrete Example (con’t)

What does the stub function do?

P1

AST of

mul_add.c

O1’:

staged

const prop

(SCF-ML)

P2

O2’:

staged

copy prop

(SCF-ML)

Run-time

const value

of a

–

All this is happening at run-time

P3

O3’:

staged

dae

(SCF-ML)

Popt

Concrete Example (con’t)

In ML:

Stager

Stager specializes the optimizations

–

–

Partial Evaluator optimizes writer’s optimization and

generates run-time/staged part of optimization

Performance is crucial for run-time part of

optimizations hence Dead-assignment Elimination

It appears as if there are two level of

specialization

–

One of the “alleged” levels is just the automatic

separation between compile-time and run-time

Alternate View of Specialization

Standard compiler:

P

O1

M

Specializing compiler:

P

O1’

O1’/2

run it

M/2

I

M’ is specialized to I

Note that O1’ will execute faster than O1

M’

Compare and Contrast with DyC

Compiler writer’s job is much easier

–

–

–

Stager automatically does all the work

Not specific to C

Not limited to optimizations that come with the

staged compiler

Compiler user still has the same API:

–

–

Input (run-time constraints)

User program

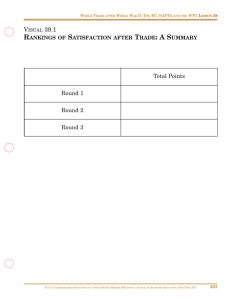

Benchmarking

Definitely easier than DyC

But is automatic staging as effective wrt:

–

–

Unstaged (standard) compiler

Hand-staged optimizations (as in DyC)

How fast are the combined static-time and runtime stages of the staged compiler?

Also, what about the size of the staged

compiler?

Benchmarking (con’t)

vs. Unstaged (standard) compiler

–

vs. Hand-staged optimizations

–

–

Hand-staged optimizations perform much better

Don’t know why, though

Unstaged compiler vs. staged compiler (both optimized)

–

Noticeably faster (it better be)

Who cares since the unstaged compiler does every thing at

static-time

Size of the automatically staged compiler

–

In practice, size grows linearly in the size of the input program

Drawbacks

Compiler user must understand specialization

–

Not clear if code generation during stub

function execution is language-specific

Implemented in ML

–

–

Doesn’t seem to be a way around this

Language interoperability (ML C)

ML is garbage collected and bounds checked

Not robust enough for public consumption

Supporting Contributions

Partial Evaluators have been developed for

many domains

–

–

However, partially evaluating optimization programs

presents its own challenges

SCF’s Partial Evaluator:

Knows about map and set types

Uses identity tags for better equality analysis

Supporting Contributions(con’t)

Also, SCF employs a smarter representation of

abstract values

–

–

More amenable to specialization of code

implementing optimizations

Abstract values are used to loosely interpret code

during partial evaluation

Towards Automatic Specialization

of Java Programs

Ulrik Pagh Schultz,

Julia L. Lawall,

Charles Consel, and

Gilles Muller

Contributions

Specialization is useful in an OO setting

Supporting contributions:

–

–

–

OO languages lend themselves to specialization

“Quick and dirty” proof of concept

Performance benchmarks

General Idea

The more generic you get, the more your

performance suffers

Genericity

Sluggish

–

–

–

Specificity

Efficient

OO is generic by nature

Specialization is our savior

Specialization will convert a generic program to a

specific one

Automatic Specialization?

What is meant by “automatic”?

–

Specialized code is not generated by hand

What is different about the “specialization”

here?

–

–

–

Let’s try and mimic run-time specialization at

compile-time

Specialize for each member of the most likely inputs

If actual input at run-time is not in this set, fall back

on the generic, non-specialized code

Concrete Example

Consider this Java class for the power function:

Concrete Example (con’t)

Assume the exponent is always 3

–

We can specialize the calculate() method

–

JSCC would allow us to automatically specialize calculate()

Concrete Example (con’t)

With JSCC, we would define a specialization

class as such:

–

JSCC will generate the specialized code and…

Concrete Example (con’t)

… JSCC adds a method for switching between

specialized implementations:

Specialization in an OO Context

How does OO naturally lend itself to

specialization?

–

–

–

Data Encapsulation

Virtual Method Calls

In the case of Java, specializing the “VM”

Specializing Data Encapsulation

Traditional program specialization, but…

–

… save on sequence of pointer dereferences (due to OO)

Specializing Object Types

Information fed to specializer can help eliminate

dynamic dispatch overhead

Specializing the Virtual Machine

Such low-level optimizations are possible because

Java is eventually translated to C in this scheme

Two Levels of Specialization (Again)

Specialization of original Java program

–

JSCC

Example earlier

Specialization of translated C program

–

Tempo

Only the compile-time specialization capabilities of Tempo

are exploited

“Quick and Dirty” Prototype

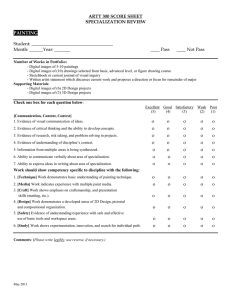

Benchmarks

Image Processing Application

Benchmarks (con’t)

What are “Prospective” Benchmarks?

Sparc

–

Pentium

Benchmarks on manual back-translation to Java

Future Work

Run-time specialization

–

Automatic back translation to Java

–

Tempo already supports run-time specialization

Why? Portability.

Apply to situations involving extreme genericity

–

–

Software components

For example: JavaBeans

Drawbacks

Lack of run-time specialization

–

Forces tedious compile-time specialization

How will exploiting Tempo’s run-time

specialization affect backporting to Java?

Looks and feels like an ugly hack

The Present

JSPEC is the new incarnation

–

–

Run-time specialization

Java-to-Java translation

JSPEC paper is unpublished but available

Compare and Constrast with SCF

Unlike SCF, adding additional optimizations to

the specialization is non-trivial

–

–

You’re stuck with the provided partial evaluator

But partial evaluation covers a lot of ground

No run-time specialization

–

Most significant drawback

That’s All Folks!

Use Cyclone because it’s good for you!

–

Cyclone is the bestest language.