COCOMO II/Chapter 3 tables/Boehm et al.

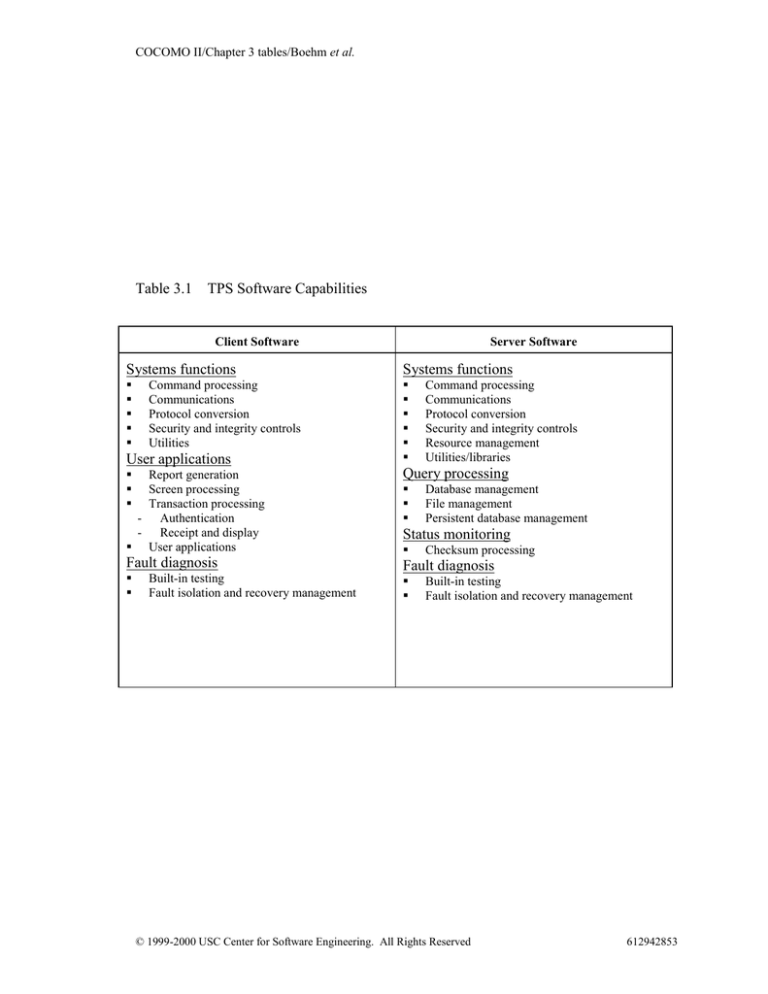

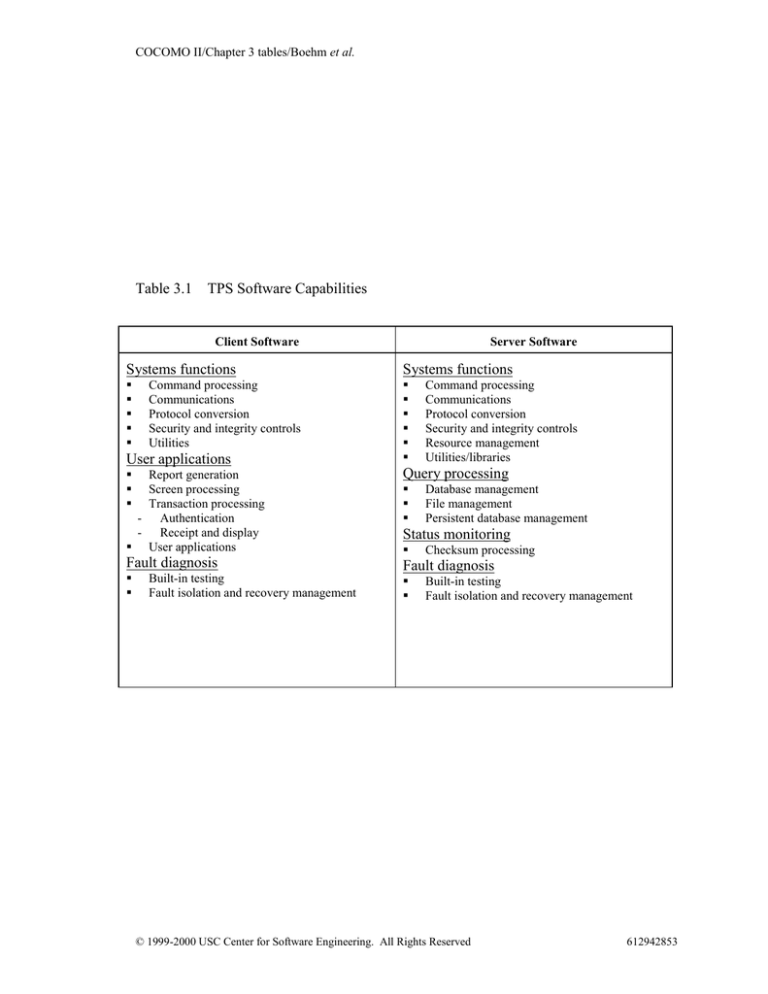

Table 3.1 TPS Software Capabilities

| | Client Software | Server Software |
|---|---|---|
| Systems functions | Command processing; Communications; Protocol conversion; Security and integrity controls; Utilities | Command processing; Communications; Protocol conversion; Security and integrity controls; Resource management; Utilities/libraries |
| User applications | Report generation; Screen processing; Transaction processing (authentication; receipt and display) | Query processing; Database management; File management; Persistent database management |
| Fault diagnosis | Built-in testing; Fault isolation and recovery management | Status monitoring; Checksum processing; Built-in testing; Fault isolation and recovery management |

© 1999-2000 USC Center for Software Engineering. All Rights Reserved

612942853


Table 3.2 Size for Identified Functions

| Component | Functions | Size | Notes |
|---|---|---|---|
| Systems software | Communications drivers; Protocol converters; Authentication module; Class libraries (e.g., widgets, filters, active components) | 18 KSLOC (new); 10 KSLOC (reused) | Libraries are bought as-is from vendors. Extensions to the library and glue code are included as new. |
| User applications | Screens and reports; Interface for user-developed applications | 800 SLOC (new) | Human interface developed using a GUI builder and a graphical 4GL (Visual Basic). |
| Fault diagnosis | Built-in test; Fault isolation logic; Recovery management | 8K function points | Brand-new concept using neural nets to make determinations and findings. |


Table 3.3 COCOMO Model Scope

| Activity | In or Out of Scope |
|---|---|
| Requirements (RQ) | Out |
| Product Design (PD) | In |
| Implementation (IM) | In |
| Integration & Test (I&T) | In |
| Project Management (PM) | In |
| Project Configuration Management (CM) | In |
| Project Quality Assurance (QA) | In |
| Project Management activities done above the project level | Out |


Table 3.4 Summary of WBS Estimate

| WBS Task | Estimate (staff hours) | Basis of Estimate |
|---|---|---|
| 1. Develop software requirements | 1,600 | Multiplied number of requirements by productivity figure |
| 2. Develop software | 22,350 | Multiplied source lines of code by productivity figure |
| 3. Perform task management | 2,235 | Assumed a ten percent surcharge to account for the effort |
| 4. Maintain configuration control | 1,440 | Assumed a dedicated person assigned to the task |
| 5. Perform software quality assurance | 1,440 | Assumed a dedicated person assigned to the task |
| TOTAL | 29,065 | |
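Table 3.4 can be checked with a few lines of arithmetic. This sketch assumes the ten percent task-management surcharge is applied to the 22,350-hour software development task, because that base reproduces the 2,235-hour entry. The dictionary layout is only illustrative.

```python
# Staff-hour estimates from Table 3.4, keyed by WBS task.
wbs_hours = {
    "Develop software requirements": 1_600,
    "Develop software": 22_350,
    # Ten percent surcharge, assumed to apply to the development task.
    "Perform task management": round(0.10 * 22_350),
    "Maintain configuration control": 1_440,
    "Perform software quality assurance": 1_440,
}

total_hours = sum(wbs_hours.values())
for task, hours in wbs_hours.items():
    print(f"{task:<38}{hours:>8,}")
print(f"{'TOTAL':<38}{total_hours:>8,}")
```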


Table 3.5 Scale Factor Ratings and Rationale

| Scale Factor | Rating | Rationale |
|---|---|---|
| PREC | High | The organization seems to understand the project’s goals and has considerable experience working on related systems. |
| FLEX | High | Because this organization is in the midst of a process improvement program, we have assumed that there is general conformity to requirements and a premium placed on delivering an acceptable product on time and within cost. |
| RESL | High | We assumed, and checked, that the attention placed on architecture and on risk identification and mitigation is consistent with a fairly mature process. |
| TEAM | Very High | This is a highly cooperative customer-developer team so far. It isn’t distributed and seems to work well together. |
| PMAT | Nominal | A Level 2 rating on the Software Engineering Institute’s Capability Maturity Model (CMM) scale is nominal. |
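In COCOMO II, the five scale factors determine the exponent E that is applied to size. The sketch below turns the Table 3.5 ratings into that exponent. The numeric value for each rating level and the base constant B = 0.91 are assumptions taken from the published COCOMO II.2000 calibration, not from this chapter, so verify them against the Model Definition Manual before reusing them.

```python
# Assumed COCOMO II.2000 scale factor values per rating level.
SCALE_FACTORS = {
    "PREC": {"VL": 6.20, "L": 4.96, "N": 3.72, "H": 2.48, "VH": 1.24, "XH": 0.00},
    "FLEX": {"VL": 5.07, "L": 4.05, "N": 3.04, "H": 2.03, "VH": 1.01, "XH": 0.00},
    "RESL": {"VL": 7.07, "L": 5.65, "N": 4.24, "H": 2.83, "VH": 1.41, "XH": 0.00},
    "TEAM": {"VL": 5.48, "L": 4.38, "N": 3.29, "H": 2.19, "VH": 1.10, "XH": 0.00},
    "PMAT": {"VL": 7.80, "L": 6.24, "N": 4.68, "H": 3.12, "VH": 1.56, "XH": 0.00},
}
B = 0.91  # assumed COCOMO II.2000 exponent base

def scale_exponent(ratings):
    """Return E = B + 0.01 * (sum of the rated scale factor values)."""
    return B + 0.01 * sum(SCALE_FACTORS[sf][level] for sf, level in ratings.items())

# Ratings from Table 3.5 (TPS).
tps = {"PREC": "H", "FLEX": "H", "RESL": "H", "TEAM": "VH", "PMAT": "N"}
E = scale_exponent(tps)
print(f"E = {E:.4f}")
```

An exponent above 1.0 means the model predicts a diseconomy of scale: doubling the size more than doubles the effort.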


Table 3.6 Product Cost Driver Ratings and Rationale

| Cost Drivers | Rating | Rationale |
|---|---|---|
| RELY | Nominal | Potential losses seem to be easily recoverable and do not lead to high financial losses. |
| DATA | Nominal | Because no database information was provided, we have assumed a nominal rating. We should check this with the client to make sure. |
| CPLX | Nominal | Based upon the guidelines in Table 20 in the COCOMO II Model Definition Manual and the available infrastructure software, we assume TPS software to be nominal, with the exception of the fault diagnosis software. We would rate this module “high” because of the added complexity introduced by the neural network algorithms. |
| RUSE | Nominal | Again, we assumed nominal because we did not know how to rate this factor. |
| DOCU | Nominal | We assume the level of required documentation is right-sized to the life-cycle needs of the project. This seems to be an inherent characteristic of using the organization’s preferred software process. |


Table 3.7 Platform Cost Driver Ratings and Rationale

| Cost Drivers | Rating | Rationale |
|---|---|---|
| TIME | Nominal | Execution time is not considered a constraint. |
| STOR | Nominal | Main storage is not considered a constraint. |
| PVOL | Nominal | By its nature, the platform seems stable. The rating was selected because it reflects normal change characteristics of commercially available operating environments. |


Table 3.8 Personnel Cost Driver Ratings and Rationale

| Cost Drivers | Rating | Rationale |
|---|---|---|
| ACAP | High | We have commitments to get some of the highest-ranked analysts available. However, the mix of personnel will be such that we can assume “high” as the norm for the project. |
| PCAP | Nominal | Unfortunately, we do not know who we will get as programmers. Therefore, we assumed a nominal rating. |
| PCON | High | Turnover in the firm averaged about 3% annually during the past few years. We have doubled this figure to reflect assumed project turnover, based on the usual project experience of the firm. |
| APEX | High | Most of the staff in the organization will have more than 3 years of applications experience in transaction processing systems. |
| LTEX | Nominal | Because of the Java/C/C++ uncertainties, we will assume the experience level with languages and tools is between 1 and 2 years. |
| PLEX | High | The mix of staff with relevant platform experience is high as well, based on the information given about the project (more than 3 years of experience). |


Table 3.9 Project Cost Driver Ratings and Rationale

| Cost Drivers | Rating | Rationale |
|---|---|---|
| TOOL | High | We assume that we will have a strong, mature set of tools that are moderately integrated. |
| SITE | Low | Because we don’t know how to rate this factor, we have conservatively assumed Low for now. |
| SCED | Nominal | We will adjust this factor to address the schedule desires of management after the initial estimates are developed. |
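The cost-driver ratings in Tables 3.6 through 3.9 combine multiplicatively into an effort adjustment factor (EAF). This minimal sketch multiplies only the ratings that are not Nominal, because a Nominal rating contributes 1.00. The multiplier values are assumptions taken from the COCOMO II.2000 post-architecture calibration; these tables do not give them.

```python
from math import prod

# Non-Nominal ratings from Tables 3.6-3.9, paired with assumed
# COCOMO II.2000 effort multipliers. Every Nominal driver is 1.00.
ratings = {
    "ACAP": ("High", 0.85),
    "PCON": ("High", 0.90),
    "APEX": ("High", 0.88),
    "PLEX": ("High", 0.91),
    "TOOL": ("High", 0.90),
    "SITE": ("Low", 1.09),
}

eaf = prod(multiplier for _, multiplier in ratings.values())
print(f"EAF = {eaf:.3f}")
```

An EAF below 1.0 means the experienced staff and good tools more than offset the Low SITE rating under these assumed values.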


Table 3.10 Risk Matrix

| Risk | Priority | Description |
|---|---|---|
| 1. Managers are “techies” | ? | Managers are chosen based on their technical skills. This could lead to problems as new supervisors perfect their skills. Learning curves therefore must be addressed. |
| 2. New programming language | ? | The organization is deciding whether to go with Java or C/C++. Learning curves again become a factor, as do the potential negative impacts of new, immature compilers and tools. |
| 3. New processes | ? | The organization has made major investments in process improvement. New processes mean turmoil, especially if you are the first to use them. Learning curves become a factor again, as does the time needed to pilot and perfect processes. |
| 4. Lack of credible estimates | ? | The job is routinely under- or over-quoted. This process problem creates unrealistic expectations and leads to people burning the midnight oil trying to get the job done. |
| 5. New size metrics | ? | Either SLOC or function points will be chosen to measure the volume of work involved. Setting up processes for both would result in redundant work. |
| 6. Size growth | ? | The basis of our estimates is size. Unfortunately, size estimates may have large uncertainties, and our estimates may be optimistic. Incorrect size estimates could have a profound impact on cost because the effects are non-linear. |
| 7. Personnel turnover | ? | Often, the really good people are pulled to work on the high-priority jobs. As a result, the experience mix may be less than expected or needed to get the job done, and the experienced people spend more time mentoring the replacement people. |
| 8. New methods and tools | ? | Like new processes, new methods and tools create turmoil. Learning curves become a factor, as does the maturity of the methods and tools. |
| 9. Volatile requirements | ? | Requirements are never stable. However, they become a major factor when changes to them are not properly controlled. Designs and other work done to date quickly become outdated, and rework increases as a direct result. |
| 10. Aggressive schedules | ? | Often, we tell management we will try to meet their schedule goals even when they are unrealistic. The alternative would be cancellation or descoping of the project. |
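The size-growth risk says that the effect of a size error is non-linear. In COCOMO II, effort scales as Size^E, so any relative size error is amplified when E is greater than 1. This sketch assumes E = 1.0412, which is the value obtained from the Table 3.5 ratings using the COCOMO II.2000 scale factor constants. You can substitute any other exponent.

```python
# Assumed exponent: Table 3.5 ratings with COCOMO II.2000 constants.
E = 1.0412

def effort_ratio(size_growth):
    """Ratio of new to original effort when size grows by `size_growth` (0.2 = 20%)."""
    return (1.0 + size_growth) ** E

for growth in (0.10, 0.20, 0.50):
    print(f"{growth:.0%} size growth -> {effort_ratio(growth) - 1:.1%} effort growth")
```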


Table 3.11

| Factor | COTS | New |
|---|---|---|
| Cost of acquiring software | $100,000 (license) | $924,000* |
| Integration & test costs | 100,000 | Included |
| Run time license costs | 50,000 | Not applicable |
| Maintenance costs (5 year) | 50,000 | 92,400 |
| Total | $300,000 | $1,016,400 |

*Assume cost of a staff-month = $10,000; therefore, the cost of 92.4 staff-months = $924,000.
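The Table 3.11 totals can be reproduced directly. The sketch prices the 92.4 staff-month new-development estimate at the footnote's $10,000 per staff-month. The dictionary layout is only an illustration.

```python
STAFF_MONTH_COST = 10_000  # dollars, from the Table 3.11 footnote

cots = {
    "Cost of acquiring software": 100_000,
    "Integration & test costs": 100_000,
    "Run time license costs": 50_000,
    "Maintenance costs (5 year)": 50_000,
}

new = {
    # 92.4 staff-months priced at the footnote rate.
    "Cost of acquiring software": round(92.4 * STAFF_MONTH_COST),
    "Maintenance costs (5 year)": 92_400,
}

print(f"COTS total: ${sum(cots.values()):,}")
print(f"New total:  ${sum(new.values()):,}")
```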


Table 3.12

| Factor | C | C++ |
|---|---|---|
| Cost of compilers/tools (10 seats) | Norm | $8,000 |
| Training costs | Norm | 50,000 |
| Productivity | Norm | -187,500 |
| Total | Norm | -$129,500 |
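Table 3.12 treats C as the baseline ("Norm"), so each C++ entry is a difference relative to C, and a negative value is a saving. A short sketch confirms that the three differences add up to the total shown.

```python
# C++ cost relative to the C baseline, in dollars (negative = saving).
cpp_delta = {
    "Cost of compilers/tools (10 seats)": 8_000,
    "Training costs": 50_000,
    "Productivity": -187_500,
}

net = sum(cpp_delta.values())
print(f"Net C++ vs. C: {net:+,} dollars")
```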


Table 3.13

| Build No. | Module | Size (SLOC) |
|---|---|---|
| 1 | Systems software | 18,000 (new); 10,000 (reused, equivalent = 1,900) |
| 2 | Applications software | Build 1 (19,900 SLOC) + User applications (800 SLOC) + Fault diagnosis (24,000 SLOC) |
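Table 3.13 describes Build 2 cumulatively, as Build 1 plus two application modules. The sketch adds the sizes to get the cumulative equivalent size at the end of Build 2, which the table does not state explicitly. It also shows the adaptation ratio implied by counting 10,000 reused SLOC as 1,900 equivalent SLOC. The adaptation parameters behind that 1,900 figure are not given, so the sketch takes it as printed.

```python
# Equivalent SLOC figures from Table 3.13.
NEW_SYSTEMS = 18_000
REUSED_EQUIVALENT = 1_900     # 10,000 reused SLOC, as equivalent SLOC
USER_APPLICATIONS = 800
FAULT_DIAGNOSIS = 24_000

build1 = NEW_SYSTEMS + REUSED_EQUIVALENT
build2_cumulative = build1 + USER_APPLICATIONS + FAULT_DIAGNOSIS
implied_ratio = REUSED_EQUIVALENT / 10_000  # equivalent SLOC per reused SLOC

print(f"Build 1: {build1:,} SLOC")
print(f"Build 2 (cumulative): {build2_cumulative:,} SLOC")
print(f"Implied reuse ratio: {implied_ratio:.0%}")
```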


Table 3.14

| Factor | COTS | New |
|---|---|---|
| Cost of acquiring software | $100,000 (license) | $924,000 |
| Integration & test costs | 100,000 | Included |
| Run time license costs | 50,000 | Not applicable |
| Maintenance costs | 50,000 | 92,400 |
| Total | $300,000 | $1,016,400 |


Table 3.15

| Factor | COTS | New |
|---|---|---|
| Glue code development (4 KSLOC @ $50/SLOC) | $200,000 | Not applicable |
| Integration & test costs – to test the interfaces between the glue code and COTS | 50,000 | Not applicable |
| Education – to understand the COTS and its capabilities (includes programmer time) | 50,000 | Not applicable |
| Documentation – to document the glue code and its interfaces with the COTS | 25,000 | Not applicable |
| Risk reduction – assume fifty additional run-time licenses may be needed as a worst case | 50,000 | Not applicable |
| Maintenance costs (5 year) – assume ten percent annually for new and twenty-five percent annually for the glue software | 250,000 | 92,400 |
| Acquisition costs | 100,000 | 924,000 |
| Total | $725,000 | $1,016,400 |
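The COTS column of Table 3.15 can be rebuilt from its stated assumptions: 4 KSLOC of glue code at $50 per SLOC, and glue-code maintenance at twenty-five percent per year for five years. The sketch takes the New column as printed. Its 92,400 maintenance entry equals ten percent of 924,000.

```python
GLUE_SLOC = 4_000
COST_PER_SLOC = 50            # dollars per SLOC, from Table 3.15
GLUE_MAINT_RATE = 0.25        # per year, glue software
YEARS = 5

glue_dev = GLUE_SLOC * COST_PER_SLOC
glue_maint = round(GLUE_MAINT_RATE * glue_dev * YEARS)

cots_total = sum([
    glue_dev,
    50_000,    # integration & test of glue/COTS interfaces
    50_000,    # education
    25_000,    # documentation
    50_000,    # risk reduction (extra run-time licenses)
    glue_maint,
    100_000,   # acquisition
])
new_total = 924_000 + 92_400  # acquisition + maintenance, as printed

print(f"COTS: ${cots_total:,}  New: ${new_total:,}")
```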


Table 3.16 ARS Software Components

| Component | Functions | Implementation Notes |
|---|---|---|
| Radar Unit Control | Control of radar hardware, e.g., provide commands to change radar mode or scan a particular area of sky | The hardware supports the same software commands as earlier-generation hardware (backwards-compatible), so there is some reuse (Ada). |
| Radar Item Processing | Radar item data processing and radar item identification, which informs the crew about what type of radar items are where | Some new object-oriented Ada development, updates for more sophisticated radar, and some Fortran algorithms converted to Ada 95. |
| Radar Database | Data management of radar frequency and radar item data | New object-oriented Ada development. |
| Display Manager | Management of interactive displays of radar and other sensor data; operates in the central embedded computer and interfaces to the display console for lower-level processing. The displays will be complex ones consisting of moving icons, adjustable-scale map displays, overlaid vector objects and bitmapped data, multiple video planes, etc. Sensor data from infrared and other devices will be displayed as real-time video. | Mostly new development, some COTS software (Ada and C). The development tools support a mixed-language environment. |
| Display Console | Operator input processing from the keyboard, trackball, and other devices; graphics primitive processing resident in the display console computer that supports the high-level Display Manager (e.g., if the Display Manager sends a “draw rectangle” command, this software will actually draw the component lines and change the pixel states). | Custom hardware, some COTS software for image processing (C and microcode). |
| Built In Test | System performance monitoring, hardware fault detection and localization. This software is distributed in all processors and automatically tests for hardware faults. | New development (Ada for high-level control and assembler for access to low-level hardware). |


Table 3.17 ARS Prototype Application Elements

| Component | Screens: simple (weight = 1) | Screens: medium (weight = 2) | Screens: difficult (weight = 3) | Reports: simple (weight = 2) | Reports: medium (weight = 5) | Reports: difficult (weight = 8) | Number of 3GL components (weight = 10) | Total Application Points |
|---|---|---|---|---|---|---|---|---|
| Radar Unit Control | 0 | 1 | 3 | 0 | 0 | 0 | 0 | 11 |
| Radar Item Processing | 0 | 4 | 4 | 0 | 1 | 0 | 4 | 65 |
| Radar Database | 0 | 2 | 3 | 0 | 0 | 0 | 1 | 23 |
| Display Manager | 0 | 2 | 6 | 0 | 0 | 0 | 2 | 42 |
| Display Console | 1 | 2 | 4 | 0 | 0 | 0 | 0 | 17 |
| Built In Test | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
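The totals in Table 3.17 are weighted sums of the counts, using the weights printed in the column headings. This minimal sketch recomputes them. The data layout of screens, reports, and 3GL components per component is only an illustration.

```python
# Complexity weights from the Table 3.17 column headings.
SCREEN_WEIGHTS = (1, 2, 3)   # simple, medium, difficult
REPORT_WEIGHTS = (2, 5, 8)   # simple, medium, difficult
THREE_GL_WEIGHT = 10

# (screens by complexity, reports by complexity, 3GL components) per component.
counts = {
    "Radar Unit Control":    ((0, 1, 3), (0, 0, 0), 0),
    "Radar Item Processing": ((0, 4, 4), (0, 1, 0), 4),
    "Radar Database":        ((0, 2, 3), (0, 0, 0), 1),
    "Display Manager":       ((0, 2, 6), (0, 0, 0), 2),
    "Display Console":       ((1, 2, 4), (0, 0, 0), 0),
    "Built In Test":         ((0, 0, 0), (0, 0, 0), 0),
}

def application_points(screens, reports, three_gl):
    """Weighted sum of screen, report, and 3GL-component counts."""
    return (sum(n * w for n, w in zip(screens, SCREEN_WEIGHTS))
            + sum(n * w for n, w in zip(reports, REPORT_WEIGHTS))
            + three_gl * THREE_GL_WEIGHT)

for name, (screens, reports, three_gl) in counts.items():
    print(f"{name}: {application_points(screens, reports, three_gl)}")
```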


Table 3.18 ARS Prototype Sizes

| Component | Size (Application Points) | Estimated Reuse | New Application Points (NAP) |
|---|---|---|---|
| Radar Unit Control | 11 | 20% | 8.8 |
| Radar Item Processing | 65 | 30% | 45.5 |
| Radar Database | 23 | 0% | 23 |
| Display Manager | 42 | 0% | 42 |
| Display Console | 17 | 0% | 17 |
| Built In Test | 0 | 0% | 0 |
| TOTAL | | | 136.3 |
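Table 3.18 reduces each component's application points by its estimated reuse, using the COCOMO II application-composition relation NAP = AP × (100 - %reuse) / 100. This sketch reproduces the NAP column and the 136.3 total.

```python
# (application points, estimated reuse %) from Table 3.18.
components = {
    "Radar Unit Control": (11, 20),
    "Radar Item Processing": (65, 30),
    "Radar Database": (23, 0),
    "Display Manager": (42, 0),
    "Display Console": (17, 0),
    "Built In Test": (0, 0),
}

def new_application_points(ap, reuse_pct):
    """NAP = AP * (100 - %reuse) / 100."""
    return ap * (100 - reuse_pct) / 100

nap = {name: new_application_points(ap, r) for name, (ap, r) in components.items()}
total_nap = sum(nap.values())
print(f"Total NAP = {total_nap:.1f}")
```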


Table 3.19 ARS Breadboard System Early Design Scale Drivers

| Scale Driver | Rating | Rationale |
|---|---|---|
| PREC | Nominal | Even though Megaspace has developed many radar systems, there is concurrent development of new hardware and innovative data processing. Data fusion from multiple sources will be done with a new fuzzy logic approach. |
| FLEX | Low | Development flexibility is low since there are stringent Navy requirements, but there is only a medium premium on early completion of the breadboard. |
| RESL | High | A subjective weighted average of the Architecture / Risk Resolution components in Table 2.xx is high. |
| TEAM | Nominal | There is considerable consistency between the stakeholders’ objectives and their willingness to compromise with each other, but there has been just moderate effort in teambuilding and their experience together is moderate. |
| PMAT | Nominal | This division of Megaspace has achieved a Level 2 rating on the SEI-CMM process maturity scale. |


Table 3.20 ARS Breadboard System Early Design Cost Drivers

| Cost Driver | Rating | Rationale |
|---|---|---|
| RCPX | High | There is a strong emphasis on reliability and documentation, the product is very complex, and the database size is moderate. These sub-attributes are combined to get an overall high rating. |
| RUSE | Very High | The organization intends to evolve a product line around this product. A great deal of early domain-architecting effort has been invested to make the software highly reusable. |
| PDIF | High | The platform is a combination of off-the-shelf software, custom software, and custom-made hardware. The time and storage constraints are at 70%, and the platform is somewhat volatile since the hardware is also under development. |
| PERS | High | Megaspace has retained a highly capable core of software engineers. The combined percentile for ACAP and PCAP is 70% and the annual personnel turnover is 11%. |
| PREX | Nominal | The retained staff is also high in domain experience, but they only have 9 months average experience in the language and toolsets, and the platform is new. |
| FCIL | Nominal | There are basic lifecycle tools and basic support of multisite development. |
| SCED | Nominal | The schedule is under no particular constraint. Cost minimization and product quality are higher priorities than cycle time. (The situation would be different if there were more industry competition.) |


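Table 3.21 ARS Breadboard System Size Calculations

| Component | Size (SLOC) | Language | Type | REVL (%) | DM | CM | IM | AA | SU | UNFM | AAM | Equivalent Size (SLOC) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Radar Unit Control | 4500 | Ada 95 | New | 10 | - | - | - | - | - | - | - | 4950 |
| Radar Unit Control | 1238 | Ada 95 | Reused | 10 | 0 | 0 | 30 | 1 | - | - | 10 | 136 |
| Radar Item Processing | 19110 | Ada 95 | New | 20 | - | - | - | - | - | - | - | 22932 |
| Radar Item Processing | 25450 | Ada 95 | Translated | - | - | - | - | - | - | - | 0 | 0 |
| Radar Database | 6272 | Ada 95 | New | 12 | - | - | - | - | - | - | - | 7025 |
| Radar Database | 3222 | Ada 95 | Modified | 12 | 15 | 20 | 50 | 2 | 15 | .1 | 29.81 | 1076 |
| Display Manager | 12480 | Ada 95, C | New | 9 | - | - | - | - | - | - | - | 13603 |
| Display Manager | 18960 | Ada 95, C | Reused | 9 | 0 | 0 | 25 | 2 | - | - | 9.5 | 1963 |
| Display Manager | 24566 | C | COTS | 9 | 0 | 0 | 20 | 0 | - | - | 6 | 1607 |
| Display Console | 5400 | C, microcode | New | 7 | - | - | - | - | - | - | - | 5778 |
| Display Console | 2876 | C, microcode | COTS | 7 | 0 | 0 | 20 | 0 | - | - | 6 | 185 |
| Built In Test | 4200 | Ada 95, assembler | New | 15 | - | - | - | - | - | - | - | 4830 |
| TOTAL | | | | | | | | | | | | 64084 |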
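The equivalent sizes in Table 3.21 follow the COCOMO II reuse model. That model computes an adaptation adjustment factor AAF = 0.4·DM + 0.3·CM + 0.3·IM from the percentages of design modified, code modified, and integration required. It then forms an adaptation adjustment modifier (AAM) that adds assessment and assimilation (AA) and a software-understanding penalty (SU × UNFM). Finally, it applies a (1 + REVL/100) factor for requirements volatility. The sketch below implements these relations as stated in the COCOMO II.2000 model definition, which is an assumption because this chapter does not restate them. It reproduces the 29.81 AAM and the 64,084 SLOC total. As in the table, the translated Radar Item Processing code contributes zero equivalent size.

```python
def aam(dm, cm, im, aa=0.0, su=0.0, unfm=0.0):
    """COCOMO II adaptation adjustment modifier, as a percentage."""
    aaf = 0.4 * dm + 0.3 * cm + 0.3 * im
    if aaf <= 50:
        return aa + aaf * (1 + 0.02 * su * unfm)
    return aa + aaf + su * unfm

def equivalent_sloc(size, revl_pct, aam_pct=100.0):
    """Size scaled by AAM and inflated by requirements volatility (REVL)."""
    return size * (aam_pct / 100) * (1 + revl_pct / 100)

# (size, REVL %, AAM %) per row of Table 3.21; new code uses AAM = 100%.
rows = [
    (4500, 10, 100.0),                                    # Radar Unit Control, new
    (1238, 10, aam(0, 0, 30, aa=1)),                      # Radar Unit Control, reused
    (19110, 20, 100.0),                                   # Radar Item Processing, new
    (25450, 0, 0.0),                                      # Radar Item Processing, translated
    (6272, 12, 100.0),                                    # Radar Database, new
    (3222, 12, aam(15, 20, 50, aa=2, su=15, unfm=0.1)),   # Radar Database, modified
    (12480, 9, 100.0),                                    # Display Manager, new
    (18960, 9, aam(0, 0, 25, aa=2)),                      # Display Manager, reused
    (24566, 9, aam(0, 0, 20)),                            # Display Manager, COTS
    (5400, 7, 100.0),                                     # Display Console, new
    (2876, 7, aam(0, 0, 20)),                             # Display Console, COTS
    (4200, 15, 100.0),                                    # Built In Test, new
]

total = sum(equivalent_sloc(*row) for row in rows)
print(f"AAM (modified database code) = {aam(15, 20, 50, aa=2, su=15, unfm=0.1):.2f}")
print(f"Total equivalent size = {total:.0f} SLOC")
```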


Table 3.22 ARS Full Development Scale Drivers

| Scale Driver | Rating | Rationale |
|---|---|---|
| PREC | Nominal | Same as BST-2 |
| FLEX | Very Low | Same as BST-2 |
| RESL | Very High | Unspecified interfaces and remaining major risks are 10% (or 90% specified/eliminated) |
| TEAM | Nominal | Same as BST-2 (rounded to Nominal) |
| PMAT | High | SEI CMM Level 3 |
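Table 3.19 (breadboard) and Table 3.22 (full development) rate the same ARS project at two stages. Converting both rating sets into the COCOMO II size exponent shows that the better RESL and PMAT ratings more than offset the lower FLEX rating. The values for each rating level and B = 0.91 are assumptions from the COCOMO II.2000 calibration.

```python
# Assumed COCOMO II.2000 scale factor values per rating level.
SF = {
    "PREC": {"VL": 6.20, "L": 4.96, "N": 3.72, "H": 2.48, "VH": 1.24, "XH": 0.00},
    "FLEX": {"VL": 5.07, "L": 4.05, "N": 3.04, "H": 2.03, "VH": 1.01, "XH": 0.00},
    "RESL": {"VL": 7.07, "L": 5.65, "N": 4.24, "H": 2.83, "VH": 1.41, "XH": 0.00},
    "TEAM": {"VL": 5.48, "L": 4.38, "N": 3.29, "H": 2.19, "VH": 1.10, "XH": 0.00},
    "PMAT": {"VL": 7.80, "L": 6.24, "N": 4.68, "H": 3.12, "VH": 1.56, "XH": 0.00},
}
B = 0.91

def exponent(ratings):
    """E = B + 0.01 * (sum of scale factor values for the given ratings)."""
    return B + 0.01 * sum(SF[name][level] for name, level in ratings.items())

breadboard = {"PREC": "N", "FLEX": "L", "RESL": "H", "TEAM": "N", "PMAT": "N"}  # Table 3.19
full_dev = {"PREC": "N", "FLEX": "VL", "RESL": "VH", "TEAM": "N", "PMAT": "H"}  # Table 3.22

e_bb, e_full = exponent(breadboard), exponent(full_dev)
print(f"Breadboard E = {e_bb:.4f}, full development E = {e_full:.4f}")
```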


Table 3.23 ARS Full Development Cost Drivers (Top Level)

| Cost Driver | Rating | Rationale |
|---|---|---|
| RELY | Very High | Same as BST-2 |
| DATA | Nominal | Same as MINK |
| CPLX | Very High | Same as BST-2 |
| RUSE | Very High | Must be reusable across the product line |
| DOCU | Nominal | Same as BST-2 |
| TIME | Very High | Same as BST-2 |
| STOR | High | Same as BST-2 |
| PVOL | Nominal | A major OS change every 6 months |
| ACAP | High | Same as BST-2 |
| PCAP | High | Same as BST-2 |
| PCON | High | Same as BST-2 |
| APEX | Very High | Eight years of average experience (off the chart) is still rated as Very High |
| PLEX | Very High | Rounded off to Very High (5 years is closer to 6 than 3) |
| LTEX | Nominal | Even though the language experience is higher, it must be averaged with the brand-new toolset. |
| TOOL | Very High | The highest capability and integration level of software toolsets |
| SITE | Nominal | Same as BST-2 (rounded off to Nominal) |
| SCED | Very Low | The constrained schedule is 76% of the nominal schedule (i.e., the estimate when SCED is set to Nominal). |
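Table 3.23 rates SCED Very Low because the constrained schedule is 76% of nominal. One simple way to express that choice is to map the schedule percentage to the nearest COCOMO II SCED level. The level percentages (75, 85, 100, 130, 160) are assumptions from the COCOMO II.2000 definitions. The nearest-level rule is a simplification of reading the rating scale.

```python
# Assumed COCOMO II.2000 SCED levels, as a percentage of the nominal schedule.
SCED_LEVELS = {"Very Low": 75, "Low": 85, "Nominal": 100, "High": 130, "Very High": 160}

def sced_rating(percent_of_nominal):
    """Return the SCED level whose schedule percentage is closest to the input."""
    return min(SCED_LEVELS, key=lambda level: abs(SCED_LEVELS[level] - percent_of_nominal))

print(sced_rating(76))
```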


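Table 3.24 ARS Full Development System Size Calculations

| Component | Size (SLOC) | Language | Type | REVL (%) | DM | CM | IM | AA | SU | UNFM | AAM | Equivalent Size (SLOC) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Radar Unit Control | 3215 | Ada 95 | New | 2 | - | - | - | - | - | - | - | 3279 |
| Radar Unit Control | 500 | Ada 95 | Modified | 2 | 30 | 30 | 40 | | | | 47.7 | 243 |
| Radar Unit Control | 5738 | Ada 95 | Reused | 2 | 0 | 0 | 40 | 0 | - | - | 12 | 702 |
| Radar Item Processing | 13715 | Ada 95 | New | 15 | - | - | - | - | - | - | - | 15772 |
| Radar Item Processing | 11500 | Ada 95 | Modified | 15 | 14 | 2 | 40 | 2 | 30 | .4 | 24.6 | 3249 |
| Radar Item Processing | 58320 | Ada 95 | Translated* | - | - | - | - | - | - | - | 0 | 0 |
| Radar Database | 12200 | Ada 95 | New | 6 | - | - | - | - | - | - | - | 12932 |
| Radar Database | 9494 | Ada 95 | Reused | 6 | 0 | 0 | 40 | 0 | - | - | 12 | 1208 |
| Display Manager | 15400 | Ada 95, C | New | 4 | - | - | - | - | - | - | - | 16016 |
| Display Manager | 31440 | Ada 95, C | Reused | 4 | 0 | 0 | 40 | 0 | - | - | 12 | 3924 |
| Display Manager | 24566 | C | COTS | 4 | 0 | 0 | 40 | 0 | - | - | 12 | 3066 |
| Display Console | 13200 | C | New | 4 | - | - | - | - | - | - | - | 13728 |
| Display Console | 1800 | C | Modified | 4 | 20 | 20 | 40 | 2 | 30 | .4 | 34.2 | 641 |
| Display Console | 2876 | C, microcode | COTS | 4 | 0 | 0 | 40 | 0 | - | - | 12 | 359 |
| Built In Test | 16000 | Ada 95, assembler | New | 10 | - | - | - | - | - | - | - | 17600 |
| Built In Test | 4200 | Ada 95, assembler | Reused | 10 | 0 | 0 | 40 | 0 | - | - | 12 | 554 |
| TOTAL | | | | | | | | | | | | 93274 |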
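The detailed cost drivers in Tables 3.25 through 3.30 are set per component, so a detailed estimate needs the equivalent size of each component rather than only the project total. This sketch rolls up the rows of Table 3.24 by component. The row list simply restates the table's Equivalent Size column.

```python
from collections import defaultdict

# (component, equivalent SLOC) for each row of Table 3.24.
rows = [
    ("Radar Unit Control", 3279), ("Radar Unit Control", 243), ("Radar Unit Control", 702),
    ("Radar Item Processing", 15772), ("Radar Item Processing", 3249),
    ("Radar Item Processing", 0),
    ("Radar Database", 12932), ("Radar Database", 1208),
    ("Display Manager", 16016), ("Display Manager", 3924), ("Display Manager", 3066),
    ("Display Console", 13728), ("Display Console", 641), ("Display Console", 359),
    ("Built In Test", 17600), ("Built In Test", 554),
]

by_component = defaultdict(int)
for component, esloc in rows:
    by_component[component] += esloc

for component, esloc in by_component.items():
    print(f"{component}: {esloc:,} equivalent SLOC")
print(f"Sum of rounded rows: {sum(by_component.values()):,}")
```

The rounded row values sum to 93,273, one less than the printed 93,274 total. This is consistent with the total having been computed from unrounded row values.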


Table 3.25 Radar Unit Control Detailed Cost Drivers – Changes from Top-level

| Cost Driver | Rating | Rationale |
|---|---|---|
| STOR | Very High | 85% utilization; the unique hardware constrains on-board memory additions |
| PLEX | Nominal | About 1 year of experience with the new hardware platform |


Table 3.26 Radar Item Processing Detailed Cost Drivers – Changes from Top-level

| Cost Driver | Rating | Rationale |
|---|---|---|
| CPLX | Extra High | Highly complex mathematics and radar processing algorithms with noisy data and real-time constraints |
| ACAP | Very High | 90th percentile; the very best analysts have been put on this critical component |
| PCON | Nominal | 12% per year estimated personnel attrition, since a couple of team members are planned for transfer in the middle of the project |


Table 3.27 Radar Database Detailed Cost Drivers – Changes from Top-level

| Cost Driver | Rating | Rationale |
|---|---|---|
| DATA | Very High | 2000 database bytes per SLOC |
| CPLX | Nominal | The database implementation is straightforward with few real-time constraints. |
| PLEX | Nominal | This team has about 1 year of average platform experience. |


Table 3.28 Display Manager Detailed Cost Drivers – Changes from Top-level

| Cost Driver | Rating | Rationale |
|---|---|---|
| DATA | Low | Very small database |
| PCON | Nominal | Higher personnel attrition than rest of project; graphics programmers are relatively young and in high demand; estimated 12% attrition per year |


Table 3.29 Display Console Detailed Cost Drivers – Changes from Top-level

| Cost Driver | Rating | Rationale |
|---|---|---|
| DATA | Low | Very small database for the display console |
| CPLX | Extra High | Complex dynamic displays, microcode control, and hard real-time constraints |
| PVOL | High | Frequent hardware changes due to proprietary hardware developed in parallel with the software. |
| PLEX | Low | Only 6 months of experience on the new hardware with the revised graphics software version |
| LTEX | Nominal | Experienced C programmers are brand new to the toolset. |
| TOOL | Nominal | The software toolsets are not made for this hardware, so not all features can be used. |


Table 3.30 Built In Test Detailed Cost Drivers – Changes from Top-level

| Cost Driver | Rating | Rationale |
|---|---|---|
| RUSE | High | Must be reusable across the program. The nature of this function does not lend itself to very high reuse because of the unique hardware environment. |
| TIME | Extra High | Using 95% of available CPU time in the central computer when Built In Test is running on top of other software. |
| TOOL | Nominal | This software is distributed in the two processors, and the tools are not made for the custom hardware environment. This software also requires low-level hardware monitoring. |
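Each of Tables 3.25 through 3.30 changes only a few drivers relative to the top-level ratings in Table 3.23. A component's effort adjustment relative to the top-level EAF is therefore the product of the new-to-old multiplier ratios for the changed drivers. The multiplier values below are assumptions from the COCOMO II.2000 post-architecture calibration and cover only the levels these tables use.

```python
from math import prod

# Assumed COCOMO II.2000 effort multipliers (only the levels used here).
EM = {
    "STOR": {"High": 1.05, "Very High": 1.17},
    "PLEX": {"Low": 1.09, "Nominal": 1.00, "Very High": 0.85},
    "CPLX": {"Nominal": 1.00, "Very High": 1.34, "Extra High": 1.74},
    "ACAP": {"High": 0.85, "Very High": 0.71},
    "PCON": {"Nominal": 1.00, "High": 0.90},
    "DATA": {"Low": 0.90, "Nominal": 1.00, "Very High": 1.28},
    "PVOL": {"Nominal": 1.00, "High": 1.15},
    "LTEX": {"Nominal": 1.00},
    "TOOL": {"Nominal": 1.00, "Very High": 0.78},
    "RUSE": {"High": 1.07, "Very High": 1.15},
    "TIME": {"Very High": 1.29, "Extra High": 1.63},
}

# Top-level ratings from Table 3.23 for the drivers changed below.
TOP = {"STOR": "High", "PLEX": "Very High", "CPLX": "Very High", "ACAP": "High",
       "PCON": "High", "DATA": "Nominal", "PVOL": "Nominal", "LTEX": "Nominal",
       "TOOL": "Very High", "RUSE": "Very High", "TIME": "Very High"}

# Component-level changes from Tables 3.25-3.30.
CHANGES = {
    "Radar Unit Control": {"STOR": "Very High", "PLEX": "Nominal"},
    "Radar Item Processing": {"CPLX": "Extra High", "ACAP": "Very High", "PCON": "Nominal"},
    "Radar Database": {"DATA": "Very High", "CPLX": "Nominal", "PLEX": "Nominal"},
    "Display Manager": {"DATA": "Low", "PCON": "Nominal"},
    "Display Console": {"DATA": "Low", "CPLX": "Extra High", "PVOL": "High",
                        "PLEX": "Low", "LTEX": "Nominal", "TOOL": "Nominal"},
    "Built In Test": {"RUSE": "High", "TIME": "Extra High", "TOOL": "Nominal"},
}

def adjustment(changes):
    """Product of component-to-top-level multiplier ratios for the changed drivers."""
    return prod(EM[d][r] / EM[d][TOP[d]] for d, r in changes.items())

for component, changes in CHANGES.items():
    print(f"{component}: x{adjustment(changes):.2f}")
```

Under these assumed values, the Display Console stands out. Extra-high complexity, low platform experience, volatile hardware, and weaker tool support all push its effort in the same direction.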
