Software Cost Estimation Metrics Manual

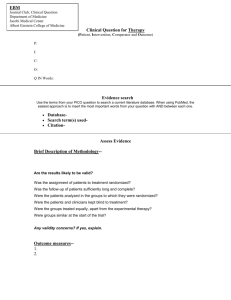

University of Southern California Center for Systems and Software Engineering
26th International Forum on COCOMO and Systems/Software Cost Modeling
Ray Madachy, Barry Boehm, Brad Clark, Thomas Tan, Wilson Rosa
November 2, 2011

Project Background
• The goal is to improve the quality and consistency of estimating methods across cost agencies and program offices through guidance, standardization, and knowledge sharing.
• The project is led by the Air Force Cost Analysis Agency (AFCAA), working with service cost agencies and assisted by the University of Southern California and the Naval Postgraduate School.
• We will publish the AFCAA Software Cost Estimation Metrics Manual to help analysts and decision makers develop accurate, easy, and quick software cost estimates for avionics, space, ground, and shipboard platforms.

Stakeholder Communities
• Research is collaborative across heterogeneous stakeholder communities, which have helped us refine our data definition framework and domain taxonomy and have provided us with project data.
– Government agencies
– Tool Vendors
– Industry
– Academia
[Figure: vendor tool logos: SLIM-Estimate™, TruePlanning® by PRICE Systems]

Research Objectives
• Make collected data useful to oversight and management entities
– Provide guidance on how to condition data to address challenges
– Segment data into different Application Domains and Operating Environments
– Analyze data for simple Cost Estimating Relationships (CER) and Schedule Estimating Relationships (SER) within each domain
– Develop rules-of-thumb for missing data
[Figure: Data Records for one Domain → Data Conditioning and Analysis → CER/SER]
• Productivity-based CER
– Cost (Effort) = Size / (Size / PM), where Size / PM is the productivity observed for the domain and PM is effort in person-months
• Model-based CER/SER
– Cost (Effort) = a * Size^b
– Schedule = a * Size^b * Staff^c

Data Source
• The DoD’s Software Resources Data Report (SRDR) is used to obtain both the estimated and actual characteristics of new software developments or upgrades.
• All contractors developing or producing any software development element with a projected software effort greater than $20M (then-year dollars) on major contracts and subcontracts within ACAT I and ACAT IA programs, regardless of contract type, must submit SRDRs.
• Reports are mandated for:
1. Initial Government Estimate
2. Initial Developer Estimate (after contract award)
3. Final Developer (actual values)

Data Analysis Challenges
• Software Sizing
– Inadequate information on modified code (only size provided)
– Inadequate information on size change or growth, e.g. deleted code
– Size measured inconsistently (total, non-comment, logical statements)
• Development Effort
– Inadequate information on average staffing or peak staffing
– Inadequate information on personnel experience
– Missing effort data for some activities
• Development Schedule
– Replicated duration for multi-build components
– Inadequate information on schedule compression
– Missing schedule data for some phases
• Quality Measures
– None (optional in SRDR)

Data Conditioning
• Segregate data to isolate variations
– Productivity Types (revised from Application Domains)
– Operating Environments
• Sizing Issues
– Developed conversion factors from the different line counts to a logical line count
– Interviews with data contributors recovered modification parameters for 44% of records
• Missing effort data: use the distribution by Productivity Type to fill in missing data (possibly to be improved using Tom Tan’s approach)
• Missing schedule data: use the distribution by Productivity Type to fill in missing data

Environments
• Productivity types can be influenced by their environment.
• Different Environments are grouped into 11 categories
– Fixed Ground (FG)
– Ground Surface Vehicles
  • Manned (MGV)
  • Unmanned (UGV)
– Sea Systems
  • Manned (MSV)
  • Unmanned (USV)
– Aircraft
  • Manned (MAV)
  • Unmanned (UAV)
– Missile / Ordnance (M/O)
– Spacecraft
  • Manned (MSC)
  • Unmanned (USC)

Productivity Types
Different productivities have been observed for different software application types. Software project data is segmented into 14 productivity types to increase the accuracy of cost and schedule estimates:
1. Sensor Control and Signal Processing (SSP)
2. Vehicle Control (VC)
3. Real Time Embedded (RTE)
4. Vehicle Payload (VP)
5. Mission Processing (MP)
6. Command & Control (C&C)
7. System Software (SS)
8. Telecommunications (TEL)
9. Process Control (PC)
10. Scientific Systems (SCI)
11. Training (TRN)
12. Test Software (TST)
13. Software Tools (TUL)
14. Business Systems (BIS)

CER/SER Reporting
• Results are reported as a matrix with Productivity Types (SSP, VC, RTE, VP, MP, C&C, …) as rows and Operating Environments (FG, MGV, UGV, MSV, …) as columns.
– Each cell: productivity for that type and environment
– Row "Mean": mean productivity for each type
– Column "Mean": mean productivity for each environment

Current Status
• Completing the Software Cost Estimation Metrics Manual
• Initially available as an online wiki
– The online version can be printed
• Inviting reviewer comments through the online wiki “Discussion” capability
• First version of the manual ready December 31, 2011
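
The relationships named under Research Objectives and the matrix described under CER/SER Reporting can be illustrated with a short Python sketch. This is not the manual's own tooling. All function names, the record layout, and the log-log least-squares fit are assumptions chosen for illustration; the slides do not say how the coefficients a, b, and c are estimated, nor whether the reported means are taken over records or over cells (the sketch averages over records). Size is assumed to be in logical source lines and effort in person-months (PM).

```python
import math
from collections import defaultdict


def productivity(size, effort_pm):
    """Productivity as size per person-month (Size / PM)."""
    return size / effort_pm


def effort_from_productivity(size, prod):
    """Productivity-based CER: Effort = Size / (Size / PM)."""
    return size / prod


def effort_model(size, a, b):
    """Model-based CER: Effort = a * Size^b."""
    return a * size ** b


def schedule_model(size, staff, a, b, c):
    """Model-based SER: Schedule = a * Size^b * Staff^c."""
    return a * size ** b * staff ** c


def fit_power_law(sizes, efforts):
    """Estimate a and b in Effort = a * Size^b.

    Assumption: ordinary least squares on log(effort) = log(a) + b * log(size).
    """
    xs = [math.log(s) for s in sizes]
    ys = [math.log(e) for e in efforts]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = math.exp(mean_y - b * mean_x)
    return a, b


def mean_productivity(records):
    """Build the reporting matrix from (type, environment, size, effort) tuples.

    Returns three dicts: mean productivity per (type, environment) cell,
    per productivity type, and per operating environment.
    Assumption: each mean is a plain average over individual records.
    """
    cells = defaultdict(list)
    by_type = defaultdict(list)
    by_env = defaultdict(list)
    for ptype, env, size, effort_pm in records:
        p = productivity(size, effort_pm)
        cells[(ptype, env)].append(p)
        by_type[ptype].append(p)
        by_env[env].append(p)

    def average(groups):
        return {key: sum(vals) / len(vals) for key, vals in groups.items()}

    return average(cells), average(by_type), average(by_env)


# Hypothetical records, invented purely to exercise the functions above.
example_records = [
    ("RTE", "MAV", 10_000, 100),
    ("RTE", "FG", 20_000, 100),
    ("MP", "FG", 30_000, 100),
]
cell_means, type_means, env_means = mean_productivity(example_records)
```

With a cell mean in hand, an estimate for a new component is `effort_from_productivity(new_size, cell_means[(ptype, env)])`. When enough records exist in a domain, `fit_power_law` gives coefficients for the model-based form instead.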