
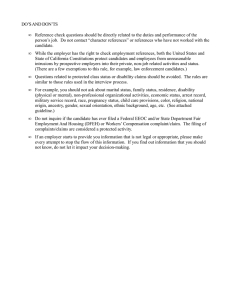


Proposed Approach to SERC EM Task: Assessing SysE Effectiveness in Major Defense Acquisition Programs (MDAPs)
Barry Boehm, USC-CSSE
26 November 2008

Outline
• EM Task Statement of Work
  – Definition of “Systems Engineering Effectiveness”
• EM Task Schedule and Planned Results
• Candidate Measurement Methods, Evaluation Criteria, Evaluation Approach
• Survey and evaluation instruments
• Workshop objectives and approach
01/29/2009

EM Task Statement of Work
• Develop measures to monitor and predict systems engineering effectiveness for DoD Major Defense Acquisition Programs
  – Define SysE effectiveness
  – Develop measurement methods for contractors, DoD program managers and PEOs, and oversight organizations
    • For weapons platforms, SoSs, and Net-centric services
  – Recommend a continuous process improvement approach
  – Identify a DoD SysE outreach strategy
• Consider the full range of data sources
  – Journals, tech reports, organizations (INCOSE, NDIA), DoD studies
    • Partial examples cited: GAO, SEI, INCOSE, Stevens/IBM
• GFI: Excel version of SADB
• Deliverables: Report and presentation
  – Approach, sources, measures, examples, results, recommendations

Measuring SysE Effectiveness, and Measuring SysE Effectiveness Measures
• Good SysE correlates with project success
  – INCOSE definition of systems engineering: “An interdisciplinary approach and means to enable the realization of successful systems”
• Good SysE is not a perfect predictor of project success
  – A project does bad SysE, but gets lucky at the last minute and finds a new COTS solution, producing a great success
  – A project does great SysE, but poor managers and developers turn it into a disaster
• Goodness of a candidate SysE effectiveness measure (EM)
  – Whether it can detect when a project’s SysE is leading the project more toward success than toward failure
• Heuristic for evaluating a proposed SysE EM
  – Role-play as an underbudgeted, short-tenure project manager
  – Ask “How little can I do and still get a positive rating on this EM?”

RESL as Proxy for SysE Effectiveness: A COCOMO II Analysis Used on FCS

Added Cost of Minimal Software Systems Engineering

How Much Architecting is Enough?
[Figure: Percent of time added to overall schedule (y-axis) vs. percent of time added for architecture and risk resolution (x-axis), for 10, 100, and 10000 KSLOC projects. Curves show the percent of project schedule devoted to initial architecture and risk resolution, the added schedule devoted to rework (COCOMO II RESL factor), and the total % added schedule, with sweet spots marked. Sweet spot drivers: High Assurance shifts it rightward; Rapid Change shifts it leftward.]

EM Task Schedule and Results
• 12/8/08 – 1/28/09
  – Activity: Candidate EM assessments, surveys, interviews, coverage matrix
  – Results: Initial survey results, candidate EM assessments, coverage matrix
• 1/29-30/09
  – Activity: SERC-internal joint workshop with MPT
  – Results: Progress report on results, gaps, plans for gap follow-ups
• 2/1 – 3/27/09
  – Activity: Sponsor feedback on results and plans; sponsor identification of candidate pilot organizations; execution of plans; suggested EMs for weapons platform (WP) pilots
  – Results: Identification of WP pilot candidate organizations at Contractor, PM/PEO, and Oversight levels; updated survey, EM evaluation, and recommended EM results
• Week of 3/30 – 4/3/09
  – Activity: SERC-external joint workshop with MPT, sponsors, collaborators, pilot candidates, potential EM users
  – Results: Guidance for refining recommended EMs; candidates for pilot EM evaluations
• 4/7 – 5/1/09
  – Activity: Tailor lead EM candidates for WP pilots; SADB-based evaluations of candidate EMs
  – Results: Refined, tailored EM candidates for WP pilots at Contractor, PM/PEO, and oversight levels; pilot evaluation guidelines
• 5/6-7/09
  – Activity: Joint workshop with MPT, sponsors, collaborators, pilot candidates, stakeholders; select WP EM pilots
  – Results: Selected pilots; guidance for final preparation of EM candidates and evaluation guidelines
• 5/11 – 7/10/09
  – Activity: WP EM pilot experiments; analysis and evaluation of guidelines and results; refinement of initial SADB evaluation results based on EM improvements
  – Results: EM pilot experience database and survey results; refined SADB EM evaluations
• 7/13 – 8/14/09
  – Activity: Analyze WP EM pilot and SADB results; prepare draft report on results, conclusions, and recommendations
  – Results: Draft report on WP and general EM evaluation results, conclusions, and recommendations for usage and research/transition/education initiatives
• 8/17-18/09
  – Activity: Workshop on draft report with sponsors, collaborators, WP EM deep-dive evaluators, stakeholders
  – Results: Feedback on draft report results, conclusions, and recommendations
• 8/19 – 9/4/09
  – Activity: Prepare, present, and deliver final report
  – Results: Final report on WP and general EM evaluation results, conclusions, and recommendations for usage and research/transition/education initiatives

Candidate Measurement Methods
• NRC Pre-Milestone A & Early-Phase SysE top-20 checklist
• Air Force Probability of Program Success (PoPS) Framework
• INCOSE/LMCO/MIT Leading Indicators
• Stevens Leading Indicators (new; using SADB root causes)
• USC Anchor Point Feasibility Evidence progress
• UAH teaming theories
• NDIA/SEI capability/challenge criteria
• SISAIG Early Warning Indicators/USC Macro Risk Tool

Independent EM Evaluations and Resolution
Each candidate EM is evaluated independently by one or more of USC, Stevens, FC-MD, and UAH:
• PoPS Leading Indicators
• INCOSE LIs
• Stevens LIs
• SISAIG LIs/Macro Risk
• NRC Top-20 List
• SEI CMMI-Based LIs
• USC AP-Feasibility Evidence
• UAH Team Effectiveness

Extended SEPP Guide EM Evaluation Criteria
Functional Attributes
Quality Attributes
• Accuracy and Objectivity.
  Does the EM accurately and objectively identify the degree of SE effectiveness, or is it easy to construct counterexamples in which it would be inaccurate?
• Level of Detail. How well does the EM cover the spectrum between strategic and tactical data collection and analysis?
• Scalability. How well does the EM cover the spectrum between simple weapons platforms and ultra-large systems of systems with deep supplier chains and multiple concurrent and interacting initiatives?
• Ease of Use/Tool Support. Is the EM easy for non-experts to learn and apply, and is it well supported by tools, or does it require highly specialized knowledge and training?
• Adaptability. Is the EM easy to adapt or extend to different domains, levels of assessment, changing priorities, or special circumstances?
• Maturity. Has the EM been extensively used and improved across a number and variety of applications?
Cost Attributes
• Cost To Collect Data
• Cost To Relate Data To Effectiveness
• Schedule Efficiency
• Key Personnel Feasibility

Criteria validation (EM) - 2

Candidate EM Coverage Matrix
SERC EM Task Coverage Matrix V1.0
Candidate EMs (columns): NRC; Probability of Success; LIPSF (Stevens); Anchoring SW Process (USC); PSSES (U. of Alabama); SSEE (CMU/SEI); SE Leading Indicators; Macro Risk Model/Tool
Questions (rows):
• Concept Dev
  – At least 2 alternatives have been evaluated
  – Can an initial capability be achieved within the time that the key program leaders are expected to remain engaged in their current jobs (normally less than 5 years or so after Milestone B)?
    If this is not possible for a complex major development program, can critical subsystems, or at least a key subset of them, be demonstrated within that time frame?
  – Will risky new technology mature before Milestone B? Is there a risk mitigation plan?
  – Have external interface complexities been identified and minimized? Is there a plan to mitigate their risks?
• KPP and CONOPS
  – At Milestone A, have the KPPs been identified in clear, comprehensive, concise terms that are understandable to the users of the system?
  – At Milestone B, are the major system-level requirements (including all KPPs) defined sufficiently to provide a stable basis for the development through IOC?
  – Has a CONOPS been developed showing that the system can be operated to handle the expected throughput and meet response time requirements?
Legend: x = covered by EM; (x) = partially covered (unless stated otherwise)

EM “Survey” Instruments
• Two web-/PDF-based “surveys” now in place
• EM set exposure, familiarity and use
  – Participants: industry, government, academia
  – Short survey, seeking experience with EM sets
  – Presently distributed only to a limited audience
  – Finding that individual metrics are used, EM sets less so
• EM evaluation form
  – Participants: Task 1 EM team members
  – Detailed survey, requires in-depth evaluation of EMs
  – Plan follow-up interviews for qualitative assessments
  – Evaluation still in progress, too early to expect results

Exposure to EM sets
• Only two EM sets used (one by DoD, the other by industry)
• Even in a limited audience, EM sets are not widely used
• Several respondents have no exposure at all
• Some “union of EM sets” might be required

Target EM Task Benefits for DoD
• Identification of best available EMs for DoD use
  – Across 3 domains; 3 review levels; planning and execution
• Early warning vs. late discovery of SysE effectiveness problems
• Identification of current EM capability gaps
  – Recommendations for most cost-effective enhancements, research on new EM approaches
  – Ways to combine EM strengths, avoid weaknesses
• Foundation for continuous improvement of DoD SysE effectiveness measurement
  – Knowledge base of evolving EM cost-effectiveness
  – Improved data for evaluating SysE ROI

Candidate EM Discussion Issues
• Feedback on evaluation criteria, approach
  – Single-number vs. range assessment
  – Effectiveness may vary by domain, management level
• Experience with candidate EMs
• Additional candidate EMs
  – Dropped GAO, COSYSMO parameters, NUWC open-systems
• Feedback on EM survey and evaluation instruments
  – Please turn in your survey response
• Feedback on coverage matrix
• Other issues or concerns

Macro Risk Model Interface
USC MACRO RISK MODEL (Copyright USC-CSSE; 6/18/08)
Evidence rating scale: U = Unavailable, M = Meager, F = Fragmentary, C/I = Competent but Incomplete, S = Strong, EV = Externally Validated
Sheet columns: Question #; Impact (1-5); evidence ratings (U, M, F, C/I, S, EV); EVIDENCE; Rationale and Ar…; CSF Risk (1-25)
NOTE: Evidence ratings should be done independently, and should address the degree to which the evidence that has been provided supports a "yes" answer to each question.

Goal 1: System and software objectives and constraints have been adequately defined and validated.
• Critical Success Factor 1: System and software functionality and performance objectives have been defined and prioritized.
  – 1(a), Impact 3: Are the user needs clearly defined and tied to the mission?
  – 1(b), Impact 3: Is the impact of the system on the user understood?
  – 1(c), Impact 2: Have all the risks that the software-intensive acquisition will not meet the user expectations been addressed?
• Critical Success Factor 2: The system boundary, operational environment, and system and software interface objectives have been defined.
  – 2(a), Impact 3: Are all types of interface and dependency covered?
  – 2(b), Impact 4: For each type, are all aspects covered?
  – 2(c), Impact 5: Are interfaces and dependencies well monitored and controlled?
• Critical Success Factor 3: System and software flexibility and resolvability objectives have been defined and prioritized.
  – 3(a), Impact 5: Has the system been conceived and described as "evolutionary"?
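The Macro Risk Model sheet pairs a 1-5 impact rating with six evidence-rating levels (U through EV) and reports CSF risk on a 1-25 scale. That range is consistent with multiplying a 1-5 impact by a 1-5 likelihood, but the slide does not state the scoring rule. The Python sketch below assumes that product form. It also makes two further hypothetical choices that are not taken from the USC tool:
• the mapping from evidence ratings to likelihood (`EVIDENCE_TO_LIKELIHOOD`)
• scoring a CSF as its riskiest question (`csf_risk`)

The impacts 3, 3, and 2 are the values shown next to questions 1(a) to 1(c); the evidence ratings in the example are invented.

```python
from dataclasses import dataclass

# HYPOTHETICAL mapping (not from the USC tool): the weaker the evidence
# supporting a "yes" answer, the higher the likelihood (1-5) of a problem.
EVIDENCE_TO_LIKELIHOOD = {
    "U": 5,    # Unavailable
    "M": 4,    # Meager
    "F": 3,    # Fragmentary
    "C/I": 2,  # Competent but Incomplete
    "S": 1,    # Strong
    "EV": 1,   # Externally Validated
}


@dataclass
class Question:
    number: str    # question label, e.g. "1(a)"
    impact: int    # Impact (1-5), as on the sheet
    evidence: str  # one of the evidence-rating codes above


def question_risk(q: Question) -> int:
    """Assumed scoring rule: impact (1-5) x likelihood (1-5), giving 1-25."""
    if not 1 <= q.impact <= 5:
        raise ValueError(f"impact for {q.number} must be 1-5, got {q.impact}")
    return q.impact * EVIDENCE_TO_LIKELIHOOD[q.evidence]


def csf_risk(questions: list[Question]) -> int:
    """HYPOTHETICAL aggregation: a CSF is as risky as its riskiest question."""
    return max(question_risk(q) for q in questions)


# Impacts follow questions 1(a)-1(c) on the sheet; the evidence ratings
# are invented for illustration.
csf1 = [
    Question("1(a)", impact=3, evidence="S"),
    Question("1(b)", impact=3, evidence="F"),
    Question("1(c)", impact=2, evidence="U"),
]
print(csf_risk(csf1))  # prints 10 (max of 3, 9, 10)
```

Taking the maximum keeps the CSF score on the same 1-25 scale as a single question. It also prevents one well-evidenced question from masking a poorly evidenced one. A summed or weighted aggregation would be an equally defensible assumption.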