Architecting Scientific Data Systems in the 21st Century


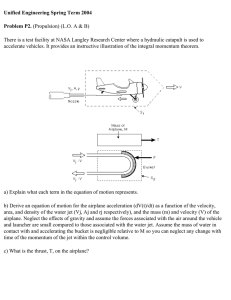

National Aeronautics and Space Administration
Jet Propulsion Laboratory, California Institute of Technology
Pasadena, California

Dan Crichton
Principal Computer Scientist
Program Manager, Data Systems and Technology
NASA Jet Propulsion Laboratory

Architecting the “End-to-End” Science Data System
• Focus on
  – science data generation
  – data capture, end-to-end
  – access to science data by the community
• Multiple scientific domains
  – Earth science
  – Planetary science
  – Biomedical research
• Applied technology research
  – SW/Sys architectures
  – Product lines
  – Emerging technologies

Challenges in Science Data Systems
• A major challenge is organizing the wealth of science data, which requires both standards and data engineering/curation
  – Search and access depend on good curation
  – Community support is critical to capture and curate the data in a manner that is useful to the community
• Usability of data continues to be a big challenge
  – Planetary science requires that ALL science data and/or science data pipelines be peer reviewed prior to release of data
  – Standard formats are critical
• Data sharing continues to be a challenge
  – Policies at the grant level coupled with standard data management plans are helping
• Computation and storage, historically major concerns, are now commodity services
  – Google, Microsoft Research, Yahoo!
    and Amazon try to provide services to e-science in the form of “Cloud Computing”

National Research Council: Committee on Data Management and Computation
• CODMAC (1980s) identified seven core principles:
  – Scientific involvement;
  – Scientific oversight;
  – Data availability including usable formats, ancillary data, timely distribution, validated data, and documentation;
  – Proper facilities;
  – Structured, transportable, adequately documented software;
  – Data storage in permanent and retrievable form; and
  – Adequate data system funding.
• CODMAC has led to national efforts to organize scientific results in partnership with the science community (particularly physical science)
• What does CODMAC mean in the 21st century?

The “e-science” Trend…
• Highly distributed, multi-organizational systems
  – Systems are moving towards loosely coupled systems or federations in order to solve science problems which span center and institutional environments
• Sharing of data and services which allow for the discovery, access, and transformation of data
  – Systems are moving towards publishing of services and data in order to address data-intensive and computationally intensive problems
  – Infrastructures are being built to handle future demand
• Address complex modeling, inter-disciplinary science and decision support needs
  – Need a dynamic environment where data and services can be used quickly as the building blocks for constructing predictive models and answering critical science questions

JPL e-science Examples

Planetary Data System: Distributed Planetary Science Archive
• Rings Node: Ames
  Research Center, Moffett Field, CA
• Geosciences Node: Washington University, St. Louis, MO
• Imaging Node: JPL and USGS, Pasadena, CA and Flagstaff, AZ
• THEMIS Data Node: Arizona State University, Tempe, AZ
• Central Node: Jet Propulsion Laboratory, Pasadena, CA
• Small Bodies Node: University of Maryland, College Park, MD
• Atmospheres Node: New Mexico State University, Las Cruces, NM
• Planetary Plasma Interactions Node: University of California Los Angeles, Los Angeles, CA
• Navigation Ancillary Information Node: Jet Propulsion Laboratory, Pasadena, CA

EDRN Cancer Research (8X)
• Highly diverse (30+ centers performing parallel studies using different instruments)
• Geographically distributed
• New centers plugging in (i.e. data nodes)
• Multi-center data system infrastructure
• Heterogeneous sites with common interfaces allowing access to distributed portals
• Integrated based on common data standards
• Secure (e.g. encryption, authentication, authorization)

Planetary Science Data System (4X)
• Highly diverse (40 years of science data from NASA and Int’l missions)
• Geographically distributed; moving int’l
• New centers plugging in (i.e.
  data nodes)
• Multi-center data system infrastructure
• Heterogeneous nodes with common interfaces
• Integrated based on enterprise-wide data standards
• Sits on top of COTS-based middleware

National Data Sharing Infrastructure Supporting Collaboration in Biomedical Research for EDRN
• Fred Hutchinson Cancer Research Center, Seattle (DMCC)
• Creighton University (CEC)
• University of Michigan (CEC)
• University of Pittsburgh (CEC)
• University of Colorado (CEC)
• UT Health Science Center, San Antonio (CEC)
• Moffitt Cancer Center, Tampa (BDL)

Architectural Drivers in Science Data Systems
• Increasing data volumes requiring new approaches for data production, validation, processing, discovery and data transfer/distribution (e.g., scalability relative to available resources)
• Increased emphasis on usability of the data (e.g., discovery, access and analysis)
• Increasing diversity of data sets and complexity for integrating across missions/experiments (e.g., common information model for describing the data)
• Increasing distribution of coordinated processing and operations (e.g., federation)
• Increased pressure to reduce cost of supporting new missions
• Increasing desire for PIs to have integrated tool sets to work with data products within their own environments (e.g., perform their own generation and distribution)

[Figure: Archive Volume Growth, Planetary Science Archive. Accumulated volume in TB (0 to 90) by year, 1990 to 2008.]

Architectural Focus
• Consistent distributed capabilities
• Develop on-demand, shared services (e.g.
  processing, translation, etc.)
• Deploy high throughput data movement mechanisms
• Move capability up the mission pipeline
• Reduce local software solutions that do not scale
• Build value-added services and capabilities on top of the infrastructure
  – Resource discovery (data, metadata, services, etc.), unified repository access, simple transformations, bulk transfer of multiple products, and unified catalog access
  – Move towards era of “grid-ing” loosely coupled science system
  – Processing
  – Translation
  – Increasing importance in developing an “enterprise” approach with common services

Object Oriented Data Technology*
• Started in 1998 as a research and development task funded at JPL by the Office of Space Science to address:
  – Application of Information Technology to Space Science
  – Provide an infrastructure for distributed data management
  – Research methods for interoperability, knowledge management and knowledge discovery
  – Develop software frameworks for data management to reuse software, manage risk, reduce cost and leverage IT experience

[Figure: Object Oriented Data Technology Framework diagram. Labels: OODT/Science Web Tools; Archive Client; Navigation Service; Archive Service; Profile Service; Product Service; Query Service; Bridge to External Services; Other Service 1; Other Service 2; Profile XML Data; Data System 1; Data System 2.]

OODT Initial focus
• Data archiving
  – Manage heterogeneous data products and resources in a distributed, metadata-driven environment
• Data location and discovery
  – Locate data products across multiple archives, catalogs and data systems
• Data retrieval
  – Retrieve diverse data products from distributed data sources and integrate

* 2003 NASA Software of the Year Runner Up
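
The "data location and discovery" idea above (one query, many archives, answered from metadata) can be illustrated with a minimal sketch. This is not the OODT API: the class names `Profile`, `ProfileServer` and `QueryService`, the metadata element names, and the example URLs are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Profile:
    """Metadata describing one data resource (hypothetical fields)."""
    resource_id: str
    location: str
    elements: dict = field(default_factory=dict)


class ProfileServer:
    """Stands in for one archive's catalog of resource profiles."""

    def __init__(self, name, profiles):
        self.name = name
        self.profiles = list(profiles)

    def query(self, **criteria):
        # A profile matches when every requested element has the requested value.
        return [p for p in self.profiles
                if all(p.elements.get(k) == v for k, v in criteria.items())]


class QueryService:
    """Fans a metadata query out to every registered profile server."""

    def __init__(self, servers):
        self.servers = list(servers)

    def find(self, **criteria):
        # Results keep the node name so a client knows where each product lives.
        return [(s.name, p) for s in self.servers for p in s.query(**criteria)]


# Two illustrative nodes with made-up holdings.
imaging = ProfileServer("imaging", [
    Profile("img-001", "https://imaging.example/img-001",
            {"target": "Mars", "type": "image"}),
    Profile("img-002", "https://imaging.example/img-002",
            {"target": "Saturn", "type": "image"}),
])
geosciences = ProfileServer("geosciences", [
    Profile("geo-001", "https://geo.example/geo-001",
            {"target": "Mars", "type": "spectrum"}),
])

service = QueryService([imaging, geosciences])
for node, profile in service.find(target="Mars"):
    print(node, profile.resource_id, profile.location)
```

The client only ever talks to `QueryService`; which node holds a product, and how that node stores it, stays hidden behind the metadata, which is the location-independence the slide describes.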
Architectural Principles*
• Separate the technology and the information architecture
• Encapsulate the messaging layer to support different messaging implementations
• Encapsulate individual data systems to hide uniqueness
• Provide data system location independence
• Require that communication between distributed systems use metadata
• Define a model for describing systems and their resources
• Provide scalability in linking both number of nodes and size of data sets
• Allow systems using different data dictionaries and metadata implementations to be integrated
• Leverage existing software, where possible (e.g., open source)

* Crichton, D., Hughes, J. S., Hyon, J., Kelly, S. “Science Search and Retrieval using XML”, Proceedings of the 2nd National Conference on Scientific and Technical Data, National Academy of Science, Washington DC, 2000.

Distributed Architecture
1. Science data tools and applications use “APIs” to connect to a virtual data repository
2. Middleware creates the data grid infrastructure connecting distributed heterogeneous systems and data
3. Repositories for storing and retrieving many types of data

[Figure: Distributed architecture diagram. Labels: Visualization Tools, Web Search Tools and Analysis Tools (each with an OODT API); OODT Reusable Data Grid Framework; Mission Data Repositories; Biomedical Data Repositories; Engineering Data Repositories.]

Software Implementation
• OODT is Open Source
• Developed using open source software (i.e. Java/J2EE and XML)
• Implemented reusable, extensible Java-based software components
  – Core software for building and connecting data management systems
• Provided messaging as a “plug-in” component that can be replaced independent of the other core components.
  Messaging components include:
  – CORBA, Java RMI, JXTA, Web Services, etc.
  – REST seems to have prevailed
• Provided client APIs in Java, C++, HTTP, Python, IDL
• Simple installation on a variety of platforms (Windows, Unix, Mac OS X, etc.)
• Used international data architecture standards
  – ISO/IEC 11179 – Specification and Standardization of Data Elements
  – Dublin Core Metadata Initiative
  – W3C’s Resource Description Framework (RDF) from Semantic Web Community

Characteristics of Informatics in Space Science
• Often unique, one of a kind missions
  – Can drive technological changes
• Instruments are competed and developed by academic, industry and industrial partners
  – Highly distributed acquisition and processing across partner organizations
  – Highly diverse data sets given heterogeneity of the instruments and the targets (i.e. solar system)
• Missions are required to share science data results with the research community, requiring:
  – Common domain information model used to drive system implementations
  – Expert scientific help to the user community on using the data
  – Peer-review of data results to ensure quality
  – Distribution of data to the community
• Planetary science data from NASA (and some international) missions is deposited into the Planetary Data System

Distributed Space Architecture

Planetary Science Data Standards
• JPL has led and managed development of the planetary science data standards for NASA and the international community
  – ESA, ISRO, JAXA, etc. leveraging planetary science data standards
  – A diverse model used across the community that unifies data systems
• Core “information” model that has been used to describe every type of data from NASA’s planetary exploration missions and instruments
  – ~4000 different types of data

[Figure: PDS Image Class (Object-Oriented); PDS Image Label (ODL) Describes An Image.]

2001 Mars Odyssey: A paradigm change
• Pre-Oct 2002, no unified view across distributed operational planetary science data repositories
  – Science data distributed across the country
  – Science data distributed on physical media
• Planetary data archive increasing from 4 TBs in 2001 to 100 TBs in 2009
  – Traditional distribution infeasible due to cost and system constraints
  – Mars Odyssey could not be distributed using traditional method
• Current work with the OODT Data Grid Framework has provided the technology for NASA’s planetary data management infrastructure to
  – Support online distribution of science data to planetary scientists
  – Enable interoperability between nine institutions
  – Support real-time access to data products
  – Provide uniform software interfaces to all Mars Odyssey data, allowing scientists and developers to link in their own tools
  – Operational October 1, 2002
• Moving to multi-terabyte online data movement in 2009

Explosion of Data in Biomedical Research
• “To thrive, the field that links biologists and their data urgently needs structure, recognition and support. The exponential growth in the amount of biological data means that revolutionary measures are needed for data management, analysis and accessibility.
  Online databases have become important avenues for publishing biological data.” – Nature Magazine, September 2008
• The capture and sharing of data to support collaborative research is leading to new opportunities to examine data in many sciences
  – NASA routinely releases “data analysis programs” to analyze and process existing data
• EDRN has become a leader in building informatics technologies and constructing databases for cancer research. The tools and technologies are now ready for wider use!

[Figure: EDRN Data Repositories.]

Bioinformatics: National Cancer Institute Early Detection Research Network (EDRN)
• Initiated in 2000, renewed in 2005
• 100+ researchers (both members and associated members)
• ~40+ research institutions
• Mission of EDRN
  – Discover, develop and validate biomarkers for cancer detection, diagnosis and risk assessment
  – Conduct correlative studies/trials to validate biomarkers as indicators of early cancer, preinvasive cancer, risk, or as surrogate endpoints
  – Develop quality assurance programs for biomarker testing and evaluation
  – Forge public-private partnerships
• Leverage experience building distributed planetary science data systems for biomedicine

EDRN Knowledge Environment
• EDRN has been a pioneer in the use of informatics technologies to support biomarker research
• EDRN has developed a comprehensive infrastructure to support biomarker data management across EDRN’s distributed cancer centers
  – Twelve institutions are sharing data
  – Same architectural framework as planetary science
• It supports capture of and access to a diverse set of information and results
  – Biomarkers
  – Proteomics
  – Biospecimens
  – Various technologies and data products (image, micro-satellite, …)
  – Study
    Management

EDRN’s Ontology Model
• EDRN has developed a high-level ontology model for biomarker research which provides standards for the capture of biomarker information across the enterprise
• Specific models are derived from this high-level model
  – Model of biospecimens
  – Model for each class of science data
• EDRN is specifically focusing on a granular model for annotating biomarkers, studies and scientific results
• EDRN has a set of EDRN Common Data Elements which is used to provide standard data elements and values for the capture and exchange of data

[Figure: EDRN CDE Tools; EDRN Biomarker Ontology Model.]

Earth Science Distributed Process Mgmt
• Leveraged OODT software framework for constructing ground data systems for earth science missions
  – SeaWinds on ADEOS II (Launched Dec 2002)
• Used OODT Catalog and Archive Service software
• Constructed “workflows”
  – Execution of “processors” based on a set of rules
• Provided “lights out” operations
• Multiple Missions
  – SeaWinds
  – QuikSCAT
  – Orbiting Carbon Observatory (OCO)
  – NP Sounder PEATE
  – SMAP

[Figure: Process flow diagram. Labels: User Interface (Process Monitoring & Control, Instrument Commanding, Data Verification); Instrument Commands; PreProcessors (PP); Engineering Analysis (EA); Science Level Processors (LP); Science Analysis and Quality Reporting (SA); Spacecraft & Ancillary Files; Product Delivery (PM); File Transfer (FX); Science Products Released to PO.DAAC; Data Management and Automatic Process Control (PM) using OODT.]

Supporting Climate Research
• Earth Observing System Data and Information System (EOSDIS) serves NASA’s earth
  scientists’ data needs
• Two major legacies are left
  – Archiving of explosion in observational data in Distributed Active Archive Centers (DAACs)
    • Request-driven retrieval from archive is time consuming
  – Adoption of Hierarchical Data Format (HDF) for data files
    • Defined by and unique to each instrument but not necessarily consistent between instruments
• What are the next steps to accelerating use of an ever increasing observational data collection?
  – What data are available?
  – What is the information content?
  – How should it be interpreted in climate modeling research?

EOSDIS DAACs
Earth Observing System Data and Information System Distributed Active Archive Centers

[Figure: Cumulative Volume of L2+ Products at All DAACs. Cumulative volume in TB (0 to 4,000) by fiscal year, FY00 to FY14.]

Current Data System
• System serves static data products. User must find, move, and manipulate all data him/herself.
• User must change spatial and temporal resolutions to match.
• User must understand instrument observation strategies and subtleties to interpret.
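
To make "change spatial resolutions to match" concrete, here is a minimal sketch of one simple re-gridding step: coarsening a fine grid by block averaging so it lines up with a coarser one. The function name `coarsen` and the sample values are illustrative assumptions; real instrument products would also need handling for missing values, area weighting and map projections, none of which is attempted here.

```python
def coarsen(grid, factor):
    """Block-average a 2-D grid (list of equal-length rows) by an integer factor."""
    rows, cols = len(grid), len(grid[0])
    if rows % factor or cols % factor:
        raise ValueError("grid dimensions must be multiples of the factor")
    out = []
    for r in range(0, rows, factor):
        out_row = []
        for c in range(0, cols, factor):
            # Collect the factor x factor block of fine cells and average it.
            block = [grid[i][j]
                     for i in range(r, r + factor)
                     for j in range(c, c + factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


# A 4x4 "fine" grid reduced to 2x2 so it can be compared with a coarser product.
fine = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
coarse = coarsen(fine, 2)
print(coarse)  # [[3.5, 5.5], [11.5, 13.5]]
```

With static data products, every user repeats this kind of step locally for each pair of data sets being compared.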
Climate Data eXchange (CDX)
• Develop an architecture that enables sharing of climate model output and NASA observational data
  – Develop an architectural model that evaluates trade space of model
• Provide extensive server-side computational services
  – Increase performance
  – Subsetting, reformatting, re-gridding
• Deliver an “open source” toolkit
• Connect NASA and DOE

Combining Instrument Data to Enable Climate Research: AIRS and MLS
• Combining AIRS and MLS requires:
  – Rectifying horizontal, vertical and temporal mismatch
  – Assessing and correcting for the instruments’ scene-specific error characteristics (see left diagram)

Climate Data Exchange
[Figure: Climate Data Exchange diagram. Labels: Key Questions to be Answered; Specific Tools (H2O, CO2, …).]

Summary
• Software is critical to supporting collaborative research in science
  – Virtual organizations
  – Transparent access to data
  – End-to-end environments
• Software architecture is critical to
  – Reducing cost of building science data systems
  – Building virtual organizations
  – Constructing software product lines
  – Driving standards
• Science is still learning how to best leverage technology in a collaborative discovery environment, but significant progress is being made!

THANK YOU…
Dan Crichton
– Dan.Crichton@jpl.nasa.gov
– +1 818 354 9155