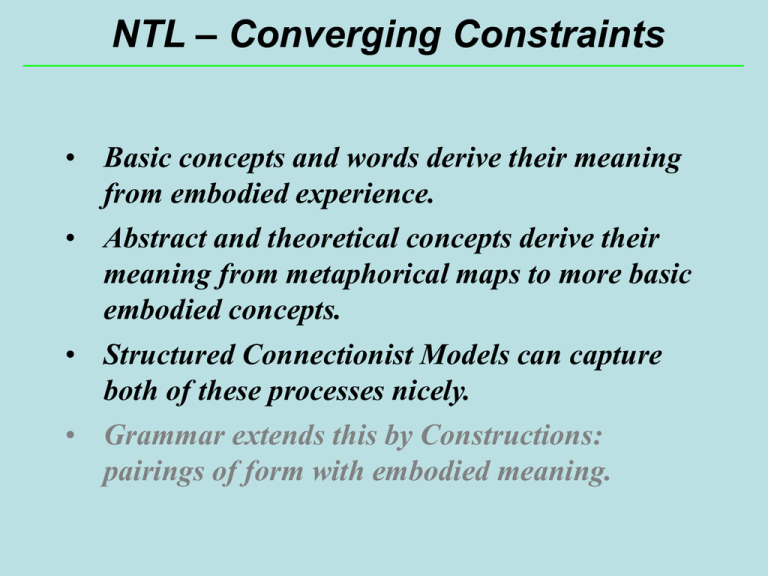

NTL – Converging Constraints

NTL – Converging Constraints
• Basic concepts and words derive their meaning from embodied experience.
• Abstract and theoretical concepts derive their meaning from metaphorical maps to more basic embodied concepts.
• Structured Connectionist Models can capture both of these processes nicely.
• Grammar extends this by Constructions: pairings of form with embodied meaning.

Simulation-based language understanding
[Figure: “Harry walked to the cafe.” Labels: Utterance; Constructions; Analysis Process; General Knowledge; Belief State; Simulation Specification (Schema: walk, Trajector: Harry, Goal: cafe); Simulation.]

The ICSI/Berkeley Neural Theory of Language Project

Background: Primate Motor Control
• Relevant requirements (Stromberg, Latash, Kandel, Arbib, Jeannerod, Rizzolatti)
– Should model coordinated, distributed, parameterized control programs required for motor action and perception.
– Should be an active structure.
– Should be able to model concurrent actions and interrupts.
• Model
– The NTL project has developed a computational model (x-schemas) that satisfies these requirements.
– Details, papers, etc. can be obtained on the web at http://www.icsi.berkeley.edu/NTL

Active representations
• Many inferences about actions derive from what we know about executing them.
• A representation based on stochastic Petri nets captures the dynamic, parameterized nature of actions.
[Figure labels: walker at goal; energy; walker=Harry; goal=home]
Walking:
– bound to a specific walker with a direction or goal
– consumes resources (e.g., energy)
– may have termination condition (e.g., walker at goal)
– ongoing, iterative action

Somatotopy of Action Observation
[Figure panels: Foot Action; Hand Action; Mouth Action. Buccino et al. Eur J Neurosci 2001]

Active Motion Model
Evolving Responses of Competing Models over Time.
Nigel Goddard 1989

Language Development in Children
• 0-3 mo: prefers sounds in native language
• 3-6 mo: imitation of vowel sounds only
• 6-8 mo: babbling in consonant-vowel segments
• 8-10 mo: word comprehension, starts to lose sensitivity to consonants outside native language
• 12-13 mo: word production (naming)
• 16-20 mo: word combinations, relational words (verbs, adj.)
• 24-36 mo: grammaticization, inflectional morphology
• 3 years – adulthood: vocab. growth, sentence-level grammar for discourse purposes

Words learned by most 2-year olds in a play school (Bloom 1993)
[Table residue. Words: cow, apple, ball, juice, bead, girl, bottle, truck, baby, woof, yum, go, up, this, no, more, more, spoon, hammer, shoe, daddy, moo, whee, get, out, there, bye, banana, box, eye, mommy, uhoh, sit, in, here, hi, cookie, horse, door, boy, choochoo, boom, oh, open, on, that, no, yes, down. Categories: food, toys, misc., people, sound, emotion, action, prep., demon., social.]

Learning Spatial Relation Words
Terry Regier
A model of children learning spatial relations.
Assumes child hears one word label of scene.
Program learns well enough to label novel scenes correctly.
Extended to simple motion scenarios, like INTO.
System works across languages.
Mechanisms are neurally plausible.

Learning System
[Figure labels: dynamic relations (e.g. into); structured connectionist network (based on visual system)]
We’ll look at the details next lecture.

Limitations
• Scale
• Uniqueness/Plausibility
• Grammar
• Abstract Concepts
• Inference
• Representation
• Biological Realism

Constrained Best Fit in Nature
inanimate
– physics: lowest energy state
– chemistry: molecular minima
animate
– biology: fitness, MEU neuroeconomics
– vision: threats, friends
– language: errors, NTL

Learning Verb Meanings
David Bailey
A model of children learning their first verbs.
Assumes parent labels child’s actions.
Child knows parameters of action, associates with word.
Program learns well enough to:
1) Label novel actions correctly
2) Obey commands using new words (simulation)
System works across languages.
Mechanisms are neurally plausible.

Motor Control (X-schema) for SLIDE

Parameters for the SLIDE X-schema

Feature Structures for PUSH

System Overview

Learning Two Senses of PUSH
Model merging based on Bayesian MDL

Training Results
David Bailey
English
• 165 Training Examples, 18 verbs
• Learns optimal number of word senses (21)
• 32 Test examples: 78% recognition, 81% action
• All mistakes were close: lift ~ yank, etc.
• Learned some particle CXN, e.g., pull up
Farsi
• With identical settings, learned senses not in English

Model Merging and Recruitment
Word Learning requires “fast mapping”.
Recruitment Learning is a Connectionist Level model of this.
Model Merging is a practical Computational Level method for fast mapping.
Bailey’s thesis outlines the reduction and some versions have been built.
The full story requires Bayesian MDL, later.

The Idea of Recruitment Learning
[Figure labels: X, Y, N, B, K]
$F = B/N$
$P_{\text{no link}} = (1 - F)^{B^K}$
• Suppose we want to link up node X to node Y.
• The idea is to pick the two nodes in the middle to link them up.
• Can we be sure that we can find a path to get from X to Y?
The point is, with a fan-out of 1000, if we allow 2 intermediate layers, we can almost always find a path.

Recruiting triangle nodes
• Let’s say we are trying to remember a green circle.
• Currently there are weak connections between concepts (dotted lines).
[Figure labels: has-color (blue, green); has-shape (round, oval)]
Strengthen these connections
• and you end up with this picture.
[Figure labels: has-color; has-shape; Green circle; blue; green; round; oval]
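The claim on the recruitment slide, that a fan-out of 1000 with 2 intermediate layers almost always gives a path from X to Y, can be checked numerically. The sketch below reads the slide's symbols as B = fan-out, K = number of intermediate layers, and N = number of candidate nodes per layer. The slide gives no value for N, so N = 1,000,000 is purely an assumption for illustration. The computation works in log10 because the K = 2 probability is far too small for a float.

```python
import math


def log10_p_no_link(n_nodes: int, fan_out: int, k_layers: int) -> float:
    """Return log10 of P(no link) = (1 - F)^(B^K), where F = B / N."""
    f = fan_out / n_nodes
    # log1p(-f) is ln(1 - f), computed accurately for small f.
    return (fan_out ** k_layers) * math.log1p(-f) / math.log(10)


N = 1_000_000  # ASSUMPTION: not stated on the slide
B = 1000       # fan-out quoted on the slide

for k in range(3):
    lp = log10_p_no_link(N, B, k)
    print(f"K={k}: log10 P(no link) = {lp:.4f}")
```

With these assumed numbers, the probability of finding no path is roughly 0.999 for K = 0, about 0.37 for K = 1, and on the order of 10^-434 for K = 2, which is the sense in which a path can "almost always" be found.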
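The "Active representations" slide earlier in the deck describes walking as a Petri net that is bound to a walker and goal, consumes energy, iterates, and terminates when the walker is at the goal. The sketch below is a minimal token-passing Petri net illustrating those four properties. It is not the NTL x-schema implementation: the place names, the `steps_left`/`steps_done` counters, the token counts, and the `rate` weights are all hypothetical choices made for this example.

```python
import random
from dataclasses import dataclass


@dataclass
class Transition:
    name: str
    inputs: dict       # place -> tokens consumed when firing
    outputs: dict      # place -> tokens produced when firing
    rate: float = 1.0  # hypothetical relative firing weight


def enabled(marking, t):
    """A transition is enabled when every input place holds enough tokens."""
    return all(marking.get(p, 0) >= n for p, n in t.inputs.items())


def fire(marking, t):
    """Return the new marking after firing an enabled transition."""
    new = dict(marking)
    for p, n in t.inputs.items():
        new[p] -= n
    for p, n in t.outputs.items():
        new[p] = new.get(p, 0) + n
    return new


def run(marking, transitions, rng, max_steps=100):
    """Fire enabled transitions (weighted random choice) until none is enabled."""
    trace = []
    for _ in range(max_steps):
        ready = [t for t in transitions if enabled(marking, t)]
        if not ready:
            break
        t = rng.choices(ready, weights=[r.rate for r in ready])[0]
        marking = fire(marking, t)
        trace.append(t.name)
    return marking, trace


# Parameter bindings taken from the slide's figure labels.
BINDINGS = {"walker": "Harry", "goal": "home"}

# Hypothetical net: each step uses one unit of energy and one unit of distance.
WALK_NET = [
    Transition("step",
               {"walking": 1, "energy": 1, "steps_left": 1},
               {"walking": 1, "steps_done": 1}),
    Transition("arrive", {"walking": 1, "steps_done": 3}, {"at_goal": 1}),
]
WALK_START = {"walking": 1, "energy": 5, "steps_left": 3,
              "steps_done": 0, "at_goal": 0}

final, trace = run(dict(WALK_START), WALK_NET, random.Random(0))
print(BINDINGS, trace, final)
```

In this tiny net at most one transition is enabled at a time, so the weighted choice never changes the outcome. Starting with fewer energy tokens than steps leaves the net stuck before `arrive` can fire, which is the kind of inference about an action (Harry ran out of energy, so he is not at home) that an executable representation supports.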