Abstract Neuron

advertisement

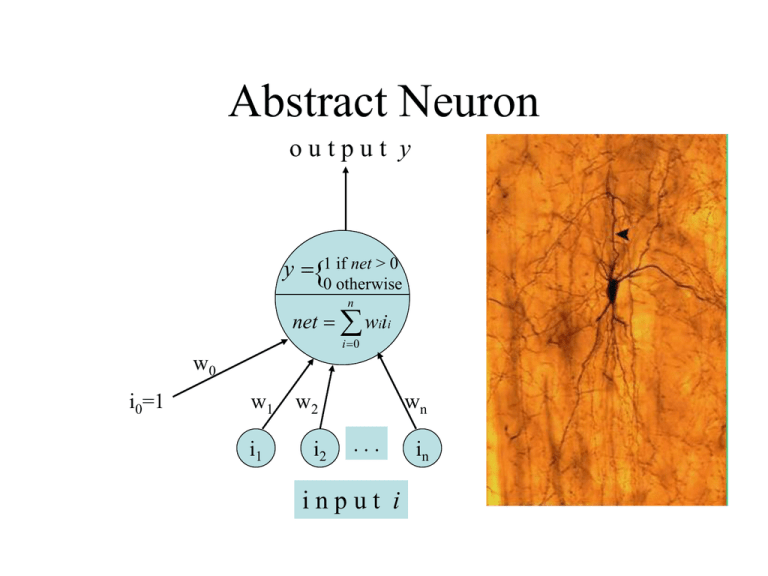

Abstract Neuron

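The unit receives inputs i_1, ..., i_n with weights w_1, ..., w_n, plus a constant bias input i_0 = 1 with weight w_0, and produces output y:

$$\mathit{net} = \sum_{i=0}^{n} w_i \, i_i, \qquad y = \begin{cases} 1 & \text{if } \mathit{net} > 0 \\ 0 & \text{otherwise} \end{cases}$$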
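A minimal Python sketch of the unit defined above, assuming real-valued weights and 0/1 inputs, with the bias input i_0 = 1 prepended to every input vector. The AND-gate weights are a hypothetical illustration, not taken from the slide.

```python
from typing import Sequence


def abstract_neuron(weights: Sequence[float], inputs: Sequence[float]) -> int:
    """Threshold unit: y = 1 if net > 0, else 0, where net = sum_i w_i * i_i."""
    if len(weights) != len(inputs):
        raise ValueError("need exactly one weight per input")
    net = sum(w * x for w, x in zip(weights, inputs))
    return 1 if net > 0 else 0


def with_bias(xs: Sequence[float]) -> list[float]:
    """Prepend the constant bias input i_0 = 1."""
    return [1.0, *xs]


# Hypothetical weights: w_0 = -1.5 acts as a threshold, so both inputs must be on (AND).
AND_WEIGHTS = [-1.5, 1.0, 1.0]

for a in (0, 1):
    for b in (0, 1):
        print(a, b, abstract_neuron(AND_WEIGHTS, with_bias([a, b])))
```

Because i_0 is always 1, w_0 plays the role of a (negated) threshold; changing it to -0.5 would turn the same unit into an OR gate.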

Link to Vision: The Necker Cube

Constrained Best Fit in Nature

Ordered from inanimate to animate:

physics: lowest energy state
chemistry: molecular minima
biology: fitness, MEU (Neuroeconomics)
vision: threats, friends
language: errors, NTL

Computing other relations

• The 2/3 node is a useful function that activates all three of its outputs if any two of its three inputs are active

• Such a node is also called a triangle node and will be useful for many representations.
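A minimal sketch of a triangle (2/3) node as a single spreading-activation step, assuming binary activations. The relation/object/value names in the example (color, ball, red) are hypothetical.

```python
def two_of_three(a: bool, b: bool, c: bool) -> bool:
    """The 2/3 node fires when at least two of its three inputs are active."""
    return (a + b + c) >= 2


def triangle_step(active: set[str], relation: str, obj: str, value: str) -> set[str]:
    """One spreading-activation step through a triangle node that links a
    relation (A), an object (B) and a value (C). If any two are active, the
    node fires and drives all three, so the missing one becomes active."""
    a, b, c = relation in active, obj in active, value in active
    if two_of_three(a, b, c):
        return active | {relation, obj, value}
    return set(active)


# Knowing the relation and the object retrieves the bound value ...
print(triangle_step({"color", "ball"}, "color", "ball", "red"))
# ... but a single active unit is not enough to fire the node.
print(triangle_step({"ball"}, "color", "ball", "red"))
```

Because the node is symmetric, the same structure answers any of the three questions (which value, which object, which relation) given the other two.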

Triangle nodes and McCulloch-Pitts Neurons?

Figure: a triangle node linking Relation (A), Object (B), and Value (C).

“They all rose”

Triangle nodes: when two of the abstract neurons fire, the third also fires (a model of spreading activation).

Basic Ideas

• Parallel activation streams.

• Top-down and bottom-up activation combine to determine the best-matching structure.

• Triangle nodes bind features of objects to values

• Mutual inhibition and competition between structures

• Mental connections are active neural connections
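The interplay of bottom-up input, top-down bias and mutual inhibition can be sketched with two competing structures, for example the two readings of the Necker cube. This is a generic leaky competitive update, assumed for illustration; the rates and weights are hypothetical, not parameters from NTL.

```python
def clip(x: float) -> float:
    """Keep activations in [0, 1]."""
    return max(0.0, min(1.0, x))


def compete(bottom_up, top_down, inhibition=0.6, decay=0.1, rate=0.2, steps=200):
    """Settle competing structures: each is excited by its bottom-up and
    top-down input and inhibited by the summed activation of its rivals."""
    acts = [0.0] * len(bottom_up)
    for _ in range(steps):
        total = sum(acts)
        # Synchronous update: every new value is computed from the old list.
        acts = [
            clip(a + rate * (bu + td - inhibition * (total - a) - decay * a))
            for a, bu, td in zip(acts, bottom_up, top_down)
        ]
    return acts


# Hypothetical readings: "viewed from above" vs. "viewed from below".
# The stimulus supports both equally; a small top-down bias decides the winner.
print(compete(bottom_up=[0.5, 0.5], top_down=[0.1, 0.0]))
```

With equal bottom-up support the outcome is decided by the top-down bias; because inhibition (0.6) is stronger than decay (0.1), any small difference between the two readings is amplified until one structure wins outright.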

Behavioral Experiments

• Identity – Mental activity is Structured Neural Activity

• Spreading Activation – Psychological model/theory behind priming and interference experiments

• Simulation – Necessary for meaningfulness and contextual inference

• Parameters – Govern simulation, strict inference, link to language

Bottom-up vs. Top-down Processes

• Bottom-up: When processing is driven by the stimulus

• Top-down: When knowledge and context are used to assist and drive processing

• Interaction: The stimulus is the basis of processing, but top-down processes are initiated almost immediately

Stroop Effect

• Interference between form and meaning

Name the words

Book Car Table Box Trash Man Bed

Corn Sit Paper Coin Glass House Jar

Key Rug Cat Doll Letter Baby Tomato

Check Phone Soda Dish Lamp Woman

Name the print color of the words

Blue Green Red Yellow Orange Black Red

Purple Green Red Blue Yellow Black Red

Green White Blue Yellow Red Black Blue

White Red Yellow Green Black Purple

Procedure for the experiment that demonstrates the word-superiority effect: first the word is presented, then the XXXX’s, then the letters.

Word-Superiority Effect

Reicher (1969)

• Which condition resulted in faster and more accurate recognition of the letter?

– The word condition

– Letters are recognized faster when they are part of a word than when they are alone

– This rejects the completely bottom-up feature model

– Also a challenge for serial processing

Connectionist Model

McClelland & Rumelhart (1981)

• Knowledge is distributed and processing occurs in parallel, with both bottom-up and top-down influences

• This model can explain the Word-Superiority Effect because it can account for context effects
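A two-unit toy version of the top-down influence, assuming one letter unit and one word unit connected in both directions. It is not the McClelland & Rumelhart model itself (which has feature, letter and word levels with within-level inhibition); all parameter values are hypothetical.

```python
def clip(x: float) -> float:
    """Keep activations in [0, 1]."""
    return max(0.0, min(1.0, x))


def letter_activation(target, context, up=0.1, down=0.1, decay=0.3, rate=0.3, steps=200):
    """Settle a letter unit and a word unit that excite each other.

    target:  bottom-up input to the letter (a brief, masked presentation)
    context: summed activation of the word's other letters (0 when the letter is alone)
    """
    letter = word = 0.0
    for _ in range(steps):
        letter_net = target + down * word - decay * letter  # stimulus plus top-down feedback
        word_net = up * (letter + context) - decay * word    # bottom-up support from letters
        # Tuple assignment updates both units from the old values.
        letter, word = clip(letter + rate * letter_net), clip(word + rate * word_net)
    return letter


in_word = letter_activation(target=0.1, context=0.6)  # e.g. K in WORK, other letters at 0.2 each
alone = letter_activation(target=0.1, context=0.0)    # K presented by itself
print(round(in_word, 3), round(alone, 3))
```

With these parameters the loop settles at letter = 3.75 × target + context / 8, so the same briefly presented letter ends up more active inside a word (about 0.45) than when shown alone (0.375): the context letters feed back through the word unit, which is the direction of the word-superiority effect.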

Connectionist Model of Word Recognition

Interaction in language processing: Pragmatic constraints on lexical access

Jim Magnuson

Columbia University

Information integration

• A central issue in psycholinguistics and cognitive science:

– When/how are such sources integrated?

• Two views

– Interaction

• Use information as soon as it is available

• Free flow between levels of representation

– Modularity

• Protect and optimize levels by encapsulation

• Staged serial processing

• Reanalyze / appeal to top-down information only when needed

Reaction Times in Milliseconds after: “They all rose”

Probe     0 ms delay    200 ms delay
flower    685           659
stood     677           623
desk      711           652
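The priming effects implied by these reaction times can be computed directly. The sketch assumes that desk, which is unrelated to either sense of rose, serves as the baseline, so facilitation = RT(desk) - RT(probe) at the same delay.

```python
# Naming RTs in ms after "They all rose", copied from the slide.
RT = {
    "flower": {"0 ms": 685, "200 ms": 659},
    "stood": {"0 ms": 677, "200 ms": 623},
    "desk": {"0 ms": 711, "200 ms": 652},  # assumed unrelated baseline
}


def priming(probe: str, delay: str, baseline: str = "desk") -> int:
    """Facilitation in ms: positive means the probe was named faster than the baseline."""
    return RT[baseline][delay] - RT[probe][delay]


for delay in ("0 ms", "200 ms"):
    print(delay, {w: priming(w, delay) for w in ("flower", "stood")})
```

At 0 ms both senses are facilitated (flower 26 ms, stood 34 ms); at 200 ms only the contextually appropriate stood remains facilitated (29 ms), while flower is 7 ms slower than the baseline. This is the pattern summarized for Tanenhaus et al. (1979).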

Example: Modularity and word recognition

• Tanenhaus et al. (1979) [also Swinney, 1979]

– Given a homophone like rose, and a context biased towards one sense, when is context integrated?

• Spoken sentence primes ending in homophones:

– They all rose vs. They bought a rose

• Secondary task: name a displayed orthographic word

– Probe at offset of ambiguous word: priming for both “stood” and “flower”

– 200 ms later: only priming for appropriate sense

• Suggests encapsulation followed by rapid integration

• But the constraint here is weak -- overestimates modularity?

• How could we examine strong constraints in natural contexts?

Allopenna, Magnuson & Tanenhaus (1998)

Figure: eye-tracking setup (eye camera, scene camera, eye-tracking computer); instruction: ‘Pick up the beaker’

Do rhymes compete?

• Cohort (Marslen-Wilson): onset similarity is primary because of the incremental nature of speech (serial/staged; Shortlist/Merge)

– Cat activates cap, cast, cattle, camera, etc.

– Rhymes won’t compete

• NAM (Neighborhood Activation Model; Luce): global similarity is primary

– Cat activates bat, rat, cot, cast, etc.

– Rhymes among set of strong competitors

• TRACE (McClelland & Elman): global similarity constrained by incremental nature of speech

TRACE predictions

– Cohorts and rhymes compete, but with different time courses
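The two similarity metrics can be contrasted with a small sketch. It uses spelling as a stand-in for phonemes and assumes that cohort membership means sharing the first two segments and that a NAM neighbor differs by one substitution, addition, or deletion; it does not model TRACE's time course.

```python
LEXICON = ["cat", "cap", "cast", "cattle", "camera", "bat", "rat", "cot", "dog"]


def cohort(target: str, lexicon: list[str], n: int = 2) -> list[str]:
    """Onset-based competitors: words sharing the target's first n segments."""
    return [w for w in lexicon if w != target and w[:n] == target[:n]]


def one_edit(a: str, b: str) -> bool:
    """True if b differs from a by exactly one substitution, addition or deletion."""
    if a == b:
        return False
    if len(a) == len(b):
        return sum(x != y for x, y in zip(a, b)) == 1
    if abs(len(a) - len(b)) != 1:
        return False
    short, long_ = sorted((a, b), key=len)
    # Deleting one segment from the longer word must yield the shorter one.
    return any(long_[:i] + long_[i + 1:] == short for i in range(len(long_)))


def neighborhood(target: str, lexicon: list[str]) -> list[str]:
    """NAM-style competitors: global one-segment neighbors of the target."""
    return [w for w in lexicon if one_edit(target, w)]


print("cohort:      ", cohort("cat", LEXICON))
print("neighborhood:", neighborhood("cat", LEXICON))
```

For cat, the cohort is cap, cast, cattle, camera, while the one-edit neighborhood is cap, cast, bat, rat, cot: the rhymes bat and rat are strong competitors under global similarity but are excluded by onset-based matching.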

Allopenna et al. Results

Study 1 Conclusions

• As predicted by interactive models, cohorts and rhymes are activated, with different time courses

• Eye movement paradigm

– More sensitive than conventional paradigms

– More naturalistic

– Simultaneous measures of multiple items

– Transparently linkable to computational model

• Time locked to speech at a fine grain

Theoretical conclusions

• Natural contexts provide strong constraints that are used

• When those constraints are extremely predictive, they are integrated as quickly as we can measure

• Suggests rapid, continuous interaction among

– Linguistic levels

– Nonlinguistic context

• Even for processes assumed to be low-level and automatic

• Constrains processing theories, also has implications for, e.g., learnability

Producing words from pictures or from other words: A comparison of aphasic lexical access from two different input modalities

Gary Dell

with

Myrna Schwartz, Dan Foygel, Nadine Martin, Eleanor Saffran, Deborah Gagnon, Rick Hanley, Janice Kay, Susanne Gahl, Rachel Baron, Stefanie Abel, Walter Huber

Boxes and arrows in the linguistic system

Figure: Semantics, Syntax, Lexicon, Input Phonology, Output Phonology

Picture Naming Task

Figure: Semantics, Syntax, Lexicon, Input Phonology, Output Phonology, with the response Say: “cat”

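A 2-step Interactive Model of Lexical Access in Production

Figure: Semantic Features at the top; word nodes FOG, DOG, CAT, RAT, MAT in the middle; phoneme nodes at the bottom, grouped as Onsets (f, r, d, k, m), Vowels (ae, o), and Codas (t, g).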

Step 1 – Lemma Access

Activate semantic features of CAT


Step 1 – Lemma Access

Activation spreads through network


Step 1 – Lemma Access

Most active word from the proper category is selected and linked to a syntactic frame (figure: an NP frame whose N slot receives the selected word)

Step 2 – Phonological Access

Jolt of activation is sent to selected word


Step 2 – Phonological Access

Activation spreads through network


Step 2 – Phonological Access

Most activated phonemes are selected

Figure: the selected onset, vowel, and coda phonemes fill the On, Vo, and Co slots of a syllable frame (Syl).
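The two steps walked through above can be sketched as a toy spreading-activation network in Python. The word and phoneme nodes follow the figure; the semantic features, the weights s and p, the decay rate, the number of steps and the jolt size are all hypothetical, and the noise that lets Dell's model produce errors is omitted, so this version always names the target correctly.

```python
from collections import defaultdict

# Word nodes and their phonemes (onset, vowel, coda), as in the network figure.
WORDS = {
    "FOG": ("f", "o", "g"), "DOG": ("d", "o", "g"), "CAT": ("k", "ae", "t"),
    "RAT": ("r", "ae", "t"), "MAT": ("m", "ae", "t"),
}
# Hypothetical semantic features: CAT shares two with DOG and one with RAT.
SEMANTICS = {
    "CAT": {"animal", "pet", "feline"}, "DOG": {"animal", "pet", "canine"},
    "RAT": {"animal", "rodent", "tail"}, "MAT": {"object", "floor", "flat"},
    "FOG": {"weather", "grey", "damp"},
}
SLOTS = ("on", "vo", "co")


def build_links(s: float, p: float) -> list[tuple[str, str, float]]:
    """Bidirectional links: feature-word weight s, word-phoneme weight p."""
    links = [(f"sem:{f}", w, s) for w, feats in SEMANTICS.items() for f in feats]
    links += [(w, f"{slot}:{ph}", p) for w, phs in WORDS.items() for slot, ph in zip(SLOTS, phs)]
    return links


def spread(act, links, steps, decay):
    """Synchronous spreading with decay; every link passes activation both ways."""
    for _ in range(steps):
        new = defaultdict(float, {node: a * (1 - decay) for node, a in act.items()})
        for a, b, w in links:
            new[b] += w * act.get(a, 0.0)  # top-down: feature->word, word->phoneme
            new[a] += w * act.get(b, 0.0)  # feedback: word->feature, phoneme->word
        act = new
    return act


def name_picture(target, s=0.1, p=0.1, decay=0.6, steps=8, jolt=10.0):
    links = build_links(s, p)
    # Step 1 (lemma access): activate the target's semantic features, spread,
    # then select the most active word (all words here are nouns).
    act = spread({f"sem:{f}": 1.0 for f in SEMANTICS[target]}, links, steps, decay)
    word = max(WORDS, key=lambda w: act.get(w, 0.0))
    # Step 2 (phonological access): jolt the selected word, spread again,
    # then select the most active phoneme for each syllable slot.
    act[word] += jolt
    act = spread(act, links, steps, decay)
    phones = tuple(
        max((n for n in act if n.startswith(slot + ":")), key=act.get).split(":")[1]
        for slot in SLOTS
    )
    return word, phones


print(name_picture("CAT"))
```

Adding random noise to each activation update, or lowering s or p, would be the natural places to extend this sketch toward the error types described next.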

Semantic Error – “dog”

Shared features activate semantic neighbors


Formal Error – “mat”

Phoneme-word feedback activates formal neighbors


Mixed Error – “rat”

Mixed semantic-formal neighbors gain activation

from both top-down and bottom-up sources


Errors of Phonological Access – “dat”, “mat”

Selection of incorrect phonemes


A Test of the Model:

Picture-naming Errors in Aphasia

“cat”

175 pictures of concrete nouns (Philadelphia Naming Test)

94 patients (Broca, Wernicke, anomic, conduction)

60 normal controls

Response Categories (target: CAT)

Correct: CAT
Semantic: DOG
Formal: MAT
Mixed: RAT
Unrelated: LOG
Nonword: DAT
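The response categories can be operationalized as a small classifier. This sketch assumes a toy lexicon with phoneme transcriptions, a hand-listed set of semantic neighbors, and a simple formal-relatedness criterion (sharing a phoneme in the same onset, vowel or coda slot); these are hypothetical choices, not necessarily the scoring rules used in the study.

```python
# Toy lexicon: spelling -> (onset, vowel, coda). Anything not listed is a nonword.
LEXICON = {
    "cat": ("k", "ae", "t"), "dog": ("d", "o", "g"), "mat": ("m", "ae", "t"),
    "rat": ("r", "ae", "t"), "log": ("l", "o", "g"),
}
# Hypothetical semantic neighbors of each target.
SEMANTIC_NEIGHBORS = {"cat": {"dog", "rat"}}


def formally_related(a: str, b: str) -> bool:
    """Hypothetical criterion: the two words share a phoneme in at least one slot."""
    return any(x == y for x, y in zip(LEXICON[a], LEXICON[b]))


def classify(response: str, target: str) -> str:
    if response == target:
        return "Correct"
    if response not in LEXICON:
        return "Nonword"
    semantic = response in SEMANTIC_NEIGHBORS.get(target, set())
    formal = formally_related(response, target)
    if semantic and formal:
        return "Mixed"
    if semantic:
        return "Semantic"
    if formal:
        return "Formal"
    return "Unrelated"


for r in ["cat", "dog", "mat", "rat", "log", "dat"]:
    print(r, classify(r, "cat"))
```

The Mixed category falls out naturally as the conjunction of the semantic and formal tests, which is why the 2-step model predicts it receives support from both top-down and bottom-up sources.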

Continuity Thesis:

Normal Error Pattern: 97% Correct

Random Error Pattern: 80% Nonwords

(Figures: proportion of responses in each category, cat, dog, mat, rat, log, dat, for the two patterns.)

Implementing the Continuity Thesis

1. Set up the model lexicon so that when noise is very large, it creates an error pattern similar to the random pattern.

2. Set processing parameters of the model so that its error pattern matches the normal controls.

(Figures: Random Pattern vs. Model Random Pattern, and Normal Controls vs. Model Normal Pattern, over the response categories cat, dog, mat, rat, log, dat.)

Lesioning the model: The semantic-phonological weight hypothesis

Figure: the lexical network, with the semantic-word weight labeled S (between Semantic Features and the word nodes) and the phonological-word weight labeled P (between the word nodes and the Onsets, Vowels, and Codas).

Response proportions (example responses for target CAT: CAT, DOG, MAT, RAT, LOG, DAT)

Patient  Row                    Correct  Semantic  Formal  Mixed  Unrelated  Nonword
LH       patient                .71      .03       .07     .01    .02        .15
LH       model (s=.024 p=.018)  .69      .06       .06     .01    .02        .17
IG       patient                .77      .10       .06     .03    .01        .03
IG       model (s=.019 p=.032)  .77      .09       .06     .01    .04        .03
GL       patient                .29      .04       .22     .03    .10        .32
GL       model (s=.010 p=.016)  .31      .10       .15     .01    .13        .30

Representing Model-Patient Deviations

Root Mean Square Deviation (RMSD)

LH .016

IG .016

GL .043
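A short sketch of how an RMSD compares a patient's six response proportions with the model's, assuming equal weight for each category and the patient/model pairing of the proportions listed above for LH, IG and GL. Recomputed from these rounded proportions, the values come out close to the reported ones (about .017, .015 and .042 versus .016, .016 and .043), presumably because the table entries are rounded.

```python
from math import sqrt

CATEGORIES = ("Correct", "Semantic", "Formal", "Mixed", "Unrelated", "Nonword")
# (patient proportions, model proportions), in CATEGORIES order.
DATA = {
    "LH": ([.71, .03, .07, .01, .02, .15], [.69, .06, .06, .01, .02, .17]),
    "IG": ([.77, .10, .06, .03, .01, .03], [.77, .09, .06, .01, .04, .03]),
    "GL": ([.29, .04, .22, .03, .10, .32], [.31, .10, .15, .01, .13, .30]),
}


def rmsd(observed: list[float], predicted: list[float]) -> float:
    """Root mean square deviation across response categories."""
    return sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / len(observed))


for patient, (obs, pred) in DATA.items():
    print(patient, round(rmsd(obs, pred), 3))
```

GL's larger deviation comes mostly from the Semantic and Formal categories, where patient and model differ by .06 and .07.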

94 new patients, no exclusions

94.5% of variance accounted for

Conclusions

The logic underlying box-and-arrow models is perfectly compatible with connectionist models.

Connectionist principles augment the boxes and arrows with

-- a mechanism for quantifying degree of damage

-- mechanisms for error types and hence an explanation of the error patterns

Implications for recovery and rehabilitation

Behavioral and Imaging Experiments

Ben Bergen and Shweta Narayan

Do Words and Images Match?

• Behavioral – Image First

Does shared effector slow negative response?

• Imaging – Simple sentence using verb first

Does verb evoke activity in motor effector area?

• Metaphor follow-on experiment

Will “kick the idea around” evoke motor activity?

Structured Neural Computation in NTL

The theory we are outlining uses the computational modeling mechanisms of the Neural Theory of Language (NTL).

NTL makes use of structured connectionism (not PDP connectionism!).

NTL is ‘localist,’ with functional clusters as units.

Localism allows NTL to characterize precise computations, as needed in actions and in inferences.

Simulation

To understand the meaning of the concept grasp, one must at least be able to imagine oneself or someone else grasping an object.

Imagination is mental simulation, carried out by the same functional clusters used in acting and perceiving.

The conceptualization of grasping via simulation therefore requires the use of the same functional clusters used in the action and perception of grasping.

Multi-Modal Integration

Cortical premotor areas are endowed with sensory properties.

They contain neurons that respond to visual, somatosensory, and auditory stimuli.

Posterior parietal areas, traditionally considered to process and associate purely sensory information, also play a major role in motor control.

Somatotopy of Action Observation

Figure panels: Foot Action, Hand Action, Mouth Action

Buccino et al. Eur J Neurosci 2001

The Simulation Hypothesis

How do mirror neurons work?

By simulation.

When the subject observes another individual doing an action, the subject is simulating the same action.

Since action and simulation use some of the same neural substrate, that would explain why the same neurons are firing during action-observation as during action-execution.

Conclusion 1

The Sensory-Motor System Is Sufficient

For at least one concept, grasp, functional clusters, as characterized in the sensory-motor system and as modeled using structured connectionist binding and inference mechanisms, have all the necessary conceptual properties.

Conclusion 2

The Neural Version of Ockham’s Razor

Under the traditional theory, action concepts have to be disembodied, that is, to be characterized neurally entirely outside the sensory-motor system.

If true, that would duplicate all the apparatus for characterizing conceptual properties that we have discussed. Unnecessary duplication of this sort is highly unlikely in a brain that works by neural optimization.


WALK

GRASP

WALK

Preliminary Behavior Results

40 native speakers; responses with RT > 2 sec. eliminated

Same Action   Other Effector   Same Effector
788           804              871
767           785              825

5 levels of Neural Theory of Language

Figure: levels ordered by abstraction: Cognition and Language; Computation; Structured Connectionism; Computational Neurobiology; Biology. Examples shown include Spatial Relation, Motor Control, Metaphor, Grammar, Neural Net, Triangle Nodes, SHRUTI, and Neural Development. The figure also shows Quiz, Midterm, and Finals.