

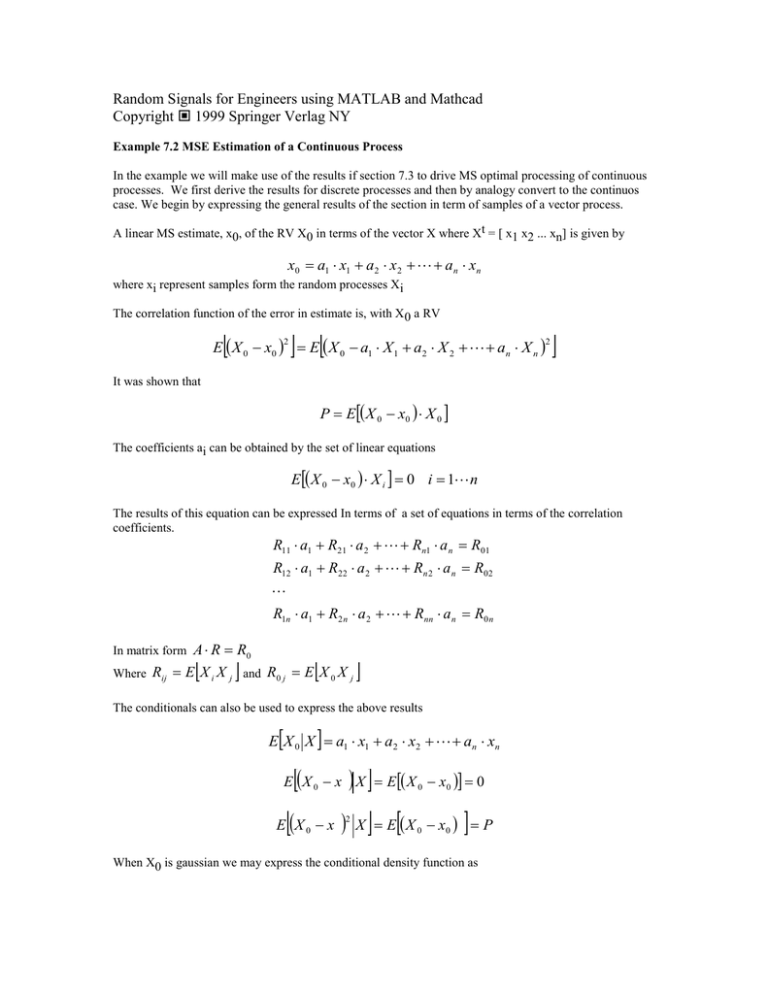

Random Signals for Engineers using MATLAB and Mathcad, Copyright 1999 Springer Verlag NY

Example 7.2 MSE Estimation of a Continuous Process

In this example we make use of the results of Section 7.3 to derive MS optimal processing of continuous processes. We first derive the results for discrete processes and then, by analogy, convert to the continuous case. We begin by expressing the general results of the section in terms of samples of a vector process. A linear MS estimate, $\hat{x}_0$, of the RV $X_0$ in terms of the vector $X$, where $X^t = [\,x_1 \; x_2 \; \dots \; x_n\,]$, is given by

$$\hat{x}_0 = a_1 x_1 + a_2 x_2 + \dots + a_n x_n$$

where the $x_i$ represent samples from the random processes $X_i$. The mean-square value of the error in the estimate, with $\hat{X}_0$ a RV, is

$$E\left[(X_0 - \hat{X}_0)^2\right] = E\left[(X_0 - a_1 X_1 - a_2 X_2 - \dots - a_n X_n)^2\right]$$

It was shown that this mean-square error is

$$P = E\left[(X_0 - \hat{X}_0)\,X_0\right]$$

The coefficients $a_i$ can be obtained from the set of linear equations

$$E\left[(X_0 - \hat{X}_0)\,X_i\right] = 0, \qquad i = 1, \dots, n$$

These conditions can be written as a set of equations in terms of the correlation coefficients:

$$\begin{aligned}
R_{11} a_1 + R_{21} a_2 + \dots + R_{n1} a_n &= R_{01} \\
R_{12} a_1 + R_{22} a_2 + \dots + R_{n2} a_n &= R_{02} \\
&\;\;\vdots \\
R_{1n} a_1 + R_{2n} a_2 + \dots + R_{nn} a_n &= R_{0n}
\end{aligned}$$

where $R_{ij} = E[X_i X_j]$ and $R_{0j} = E[X_0 X_j]$. In matrix form,

$$A\,R = R_0$$

with $A = [\,a_1 \; a_2 \; \dots \; a_n\,]$, $R = [R_{ij}]$ and $R_0 = [\,R_{01} \; R_{02} \; \dots \; R_{0n}\,]$.

The conditionals can also be used to express the above results:

$$E[X_0 \mid X] = a_1 x_1 + a_2 x_2 + \dots + a_n x_n$$

$$E[X_0 - \hat{x}_0 \mid X] = E[X_0 - \hat{X}_0] = 0$$

$$E\left[(X_0 - \hat{x}_0)^2 \mid X\right] = E\left[(X_0 - \hat{X}_0)^2\right] = P$$

When $X_0$ is Gaussian we may express the conditional density function as

$$f(X_0 \mid X) = G(\hat{x}_0, P)$$

With these expressions we can find an expression for the one-dimensional MS estimation of $X_0$:

$$E[X_0 \mid X] = a\,x_1 \quad \text{with} \quad a = \frac{R_{01}}{R_{11}}$$

$$P = E\left[(X_0 - a X_1)\,X_0\right] = R_{00} - a\,R_{01}$$

$$f(X_0 \mid x_1) = G(a\,x_1, P)$$

When these expressions are used for stochastic processes, the correlation function is available and the RVs $X_0$ and $X_i$ are replaced by $x(t+\tau)$ and $x(t)$. We have all the required correlation functions in terms of $\tau$. If the RVs are related by a linear transformation, we can also use linear transformation relationships to determine the required correlations between the RVs.
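The set of linear equations for the coefficients can be checked numerically. The following is a minimal Python sketch, not the book's MATLAB or Mathcad code: the function names `solve_normal_equations` and `mean_square_error` and the numerical correlation values are assumptions chosen for illustration. It solves $\sum_i R_{ij} a_i = R_{0j}$ by Gaussian elimination and then evaluates $P = R_{00} - \sum_i a_i R_{0i}$, which follows from $P = E[(X_0 - \hat{X}_0) X_0]$.

```python
def solve_normal_equations(R, r0):
    """Solve sum_i R[i][j] * a[i] = r0[j], j = 0..n-1, by Gaussian elimination.

    R  : n x n list of lists with R[i][j] = E[X_(i+1) X_(j+1)]
    r0 : list of length n with r0[j] = E[X_0 X_(j+1)]
    (hypothetical helper, not from the book)
    """
    n = len(r0)
    # Row j of the augmented matrix holds equation j: coefficients R[i][j], then r0[j].
    M = [[R[i][j] for i in range(n)] + [r0[j]] for j in range(n)]
    for col in range(n):
        # Partial pivoting: bring the largest remaining entry onto the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("correlation matrix is singular")
        M[col], M[pivot] = M[pivot], M[col]
        for row in range(col + 1, n):
            factor = M[row][col] / M[col][col]
            for k in range(col, n + 1):
                M[row][k] -= factor * M[col][k]
    # Back substitution.
    a = [0.0] * n
    for row in range(n - 1, -1, -1):
        known = sum(M[row][k] * a[k] for k in range(row + 1, n))
        a[row] = (M[row][n] - known) / M[row][row]
    return a


def mean_square_error(R00, a, r0):
    """P = R00 - sum_i a[i] * r0[i] (hypothetical helper)."""
    return R00 - sum(ai * ri for ai, ri in zip(a, r0))


# Illustrative values (assumed): unit-variance samples whose correlation
# falls by a factor 0.5 per step, with X1 one step and X2 two steps from X0.
R = [[1.0, 0.5],
     [0.5, 1.0]]
r0 = [0.5, 0.25]

a = solve_normal_equations(R, r0)
P = mean_square_error(1.0, a, r0)
print(a, P)  # a is [0.5, 0.0] and P is 0.75
```

For these assumed values the second coefficient comes out as zero, so the more distant sample adds nothing once the nearer one is used, and the result reduces to the one-dimensional case $a = R_{01}/R_{11}$.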
For example, when we are given a process with $R(\tau) = e^{-\alpha|\tau|}$ and we would like to find $x(t+\tau)$ from $x(t)$, then

$$\hat{x}(t+\tau) = a\,x(t)$$

We can identify $X_0$ with $x(t+\tau)$ and $X_1$ with $x(t)$, and for $\tau > 0$ the optimal value of $a$ becomes

$$a = \frac{R_{01}}{R_{11}} = \frac{R(\tau)}{R(0)} = e^{-\alpha\tau}$$

The variance of the error in the estimate of $x(t+\tau)$ is

$$\sigma_e^2 = R(0) - \frac{R^2(\tau)}{R(0)} = R(0)\left(1 - e^{-2\alpha\tau}\right)$$

As another example, suppose the random variable we observe is made up of a signal plus additive noise:

$$x(t) = s(t) + n(t)$$

If we have $x(t)$, we may perform a MS estimate of the process $s(t)$ as

$$\hat{s}(t) = a\,x(t)$$

In this case we must identify $X_0$ with $s(t)$ and $X_1$ with $x(t)$. Assuming that the noise and signal are uncorrelated, the correlation coefficients are

$$R_{xx} = R_{ss} + R_{nn}, \qquad R_{sx} = R_{ss}$$

Solving for the coefficient we have

$$a = \frac{R_{01}}{R_{11}} = \frac{R_{ss}(0)}{R_{ss}(0) + R_{nn}(0)}$$

and the variance of the error in this estimate is

$$\sigma_e^2 = R_{00} - a\,R_{01} = R_{ss}(0) - \frac{R_{ss}(0)\,R_{ss}(0)}{R_{ss}(0) + R_{nn}(0)} = \frac{R_{ss}(0)\,R_{nn}(0)}{R_{ss}(0) + R_{nn}(0)}$$

The expression for the MSE is just the product of the variances of the signal and the noise divided by their sum.
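Both one-dimensional cases can be evaluated numerically with a short Python sketch. This is an illustration under stated assumptions, not the book's own code: the function names `predict_exponential` and `estimate_signal_in_noise`, the symbols `alpha` (decay rate) and `tau` (prediction lead), the optional scale `R0`, and all numerical values are assumed. The correlation function is taken to be exponential, $R(\tau) = R(0)\,e^{-\alpha|\tau|}$.

```python
import math


def predict_exponential(alpha, tau, R0=1.0):
    """Predictor x_hat(t + tau) = a * x(t) for R(tau) = R0 * exp(-alpha * |tau|).

    Returns the coefficient a = R(tau) / R(0) and the error variance
    R(0) - a * R(tau). (Hypothetical helper.)
    """
    R_tau = R0 * math.exp(-alpha * abs(tau))
    a = R_tau / R0
    error_variance = R0 - a * R_tau
    return a, error_variance


def estimate_signal_in_noise(Rss0, Rnn0):
    """Estimate s_hat(t) = a * x(t) for x = s + n with s and n uncorrelated.

    Rss0 and Rnn0 are the zero-lag correlations Rss(0) and Rnn(0).
    Returns a = R01 / R11 and the error variance R00 - a * R01.
    (Hypothetical helper.)
    """
    R01 = Rss0            # Rsx(0) = Rss(0)
    R11 = Rss0 + Rnn0     # Rxx(0) = Rss(0) + Rnn(0)
    a = R01 / R11
    error_variance = Rss0 - a * R01
    return a, error_variance


# Assumed illustrative values.
a_pred, var_pred = predict_exponential(alpha=0.5, tau=2.0)
a_est, var_est = estimate_signal_in_noise(Rss0=4.0, Rnn0=1.0)

# Compare against the closed forms R(0)(1 - e^{-2 alpha tau}) and Rss*Rnn/(Rss+Rnn).
print(var_pred, 1.0 - math.exp(-2.0 * 0.5 * 2.0))
print(var_est, 4.0 * 1.0 / (4.0 + 1.0))
```

Each printed pair should agree to rounding error, which confirms that $R_{00} - a\,R_{01}$ reduces to the closed forms in the two examples.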