ex5m5_2.doc

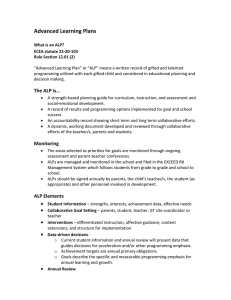

advertisement

Random Signals for Engineers using MATLAB and Mathcad

Copyright 1999 Springer Verlag NY

Example 5.2 Poisson Processes

The arrival time T of the k-th event in a Poisson process is the sum of k IID exponential random variables (the interarrival times), each with rate λ.

We have shown in Section 5.2 that the density function for T is Erlang:

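```matlab
syms k t lam
% Erlang density of order k:
% (k/t)(lam*t)^k/k! * exp(-lam*t) = lam*(lam*t)^(k-1)/(k-1)! * exp(-lam*t)
f = k/t*(lam*t)^k/factorial(k)*exp(-lam*t);
pretty(f)
```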

```text
          k
 k (lam t)  exp(-lam t)
 ----------------------
          t k!
```
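As a sanity check on the Erlang density, the Python sketch below (an illustration only; the example itself uses MATLAB) evaluates f numerically for one assumed choice of parameters, k = 3 and λ = 2, and confirms that it integrates to 1 and has mean k/λ. The helper names `erlang_pdf` and `midpoint_integral` are hypothetical, and the midpoint rule is simply a convenient quadrature, not the method used in the text.

```python
import math

def erlang_pdf(t: float, k: int, lam: float) -> float:
    """Erlang density of order k, written as in the text: (k/t)(lam t)^k / k! * exp(-lam t)."""
    if t <= 0.0:
        return 0.0
    return (k / t) * (lam * t) ** k / math.factorial(k) * math.exp(-lam * t)

def midpoint_integral(g, a: float, b: float, n: int = 100_000) -> float:
    """Approximate the integral of g over [a, b] with the composite midpoint rule."""
    h = (b - a) / n
    return h * sum(g(a + (i + 0.5) * h) for i in range(n))

# Hypothetical parameters chosen for illustration only.
k, lam = 3, 2.0
upper = 40.0 / lam  # the density beyond this point is negligible for these parameters
total = midpoint_integral(lambda t: erlang_pdf(t, k, lam), 0.0, upper)
mean = midpoint_integral(lambda t: t * erlang_pdf(t, k, lam), 0.0, upper)
print(f"integral = {total:.6f}, mean = {mean:.6f}, k/lam = {k / lam}")
```

The same check works for any k ≥ 1 and λ > 0 as long as the upper limit is pushed far enough into the tail.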

Now define a time τ that falls between the k-th arrival, at T = t, and the (k+1)-th arrival, at t + w, i.e. t < τ < t + w. For convenience in notation, w denotes the (k+1)-th interarrival time, an exponential random variable independent of the first k. With this choice, τ can fall anywhere after the k-th arrival and before the (k+1)-th. The random variable T ranges over 0 < t < ∞ and W over 0 < w < ∞. Since T and W are independent, their joint density function f(t,w) is the product of the Erlang and the exponential densities:

$$f_{TW}(t,w) = \frac{k}{t}\,\frac{(\lambda t)^{k}}{k!}\,e^{-\lambda t}\cdot\lambda e^{-\lambda w}, \qquad t > 0,\; w > 0$$

The probability that there will be exactly k events in (0, τ) is the integral of f(t,w) over the region of the t-w plane where the k-th arrival has occurred by τ and the (k+1)-th has not, i.e. t < τ < t + w. The integration region is shown below. For each t in (0, τ), the w integral runs from τ - t to ∞.

[Figure: integration region t < τ < t + w in the t-w plane, bounded by the line w = τ - t]

The integral becomes

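$$P[K = k \text{ in } (0,\tau)] = \int_{0}^{\tau}\int_{\tau - t}^{\infty} \frac{k}{t}\,\frac{(\lambda t)^{k}}{k!}\,e^{-\lambda t}\,\lambda e^{-\lambda w}\,dw\,dt$$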
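The double integral can also be checked numerically. In this Python sketch (assumed parameters λ = 1.5 and τ = 2; the helper names are hypothetical), the inner w-integral is done by hand, since the integral of λe^{-λw} from τ - t to ∞ equals e^{-λ(τ - t)}, and the outer t-integral uses a midpoint rule. The printed values should match the closed form (λτ)^k e^{-λτ}/k! that the symbolic computation produces.

```python
import math

def integrand_t(t: float, k: int, lam: float, tau: float) -> float:
    """Erlang density at t times the inner w-integral, exp(-lam*(tau - t)), for 0 < t < tau."""
    if t <= 0.0:
        return 0.0
    erlang = (k / t) * (lam * t) ** k / math.factorial(k) * math.exp(-lam * t)
    return erlang * math.exp(-lam * (tau - t))

def prob_k_events(k: int, lam: float, tau: float, n: int = 20_000) -> float:
    """Midpoint-rule approximation of the outer t-integral over (0, tau)."""
    h = tau / n
    return h * sum(integrand_t((i + 0.5) * h, k, lam, tau) for i in range(n))

# Hypothetical parameters chosen for illustration only.
lam, tau = 1.5, 2.0
for k in range(1, 6):
    print(k, round(prob_k_events(k, lam, tau), 6))
```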

MATLAB can perform the symbolic integration and the evaluation at the limits w → ∞ and t → 0. These steps are valid only under the conditions λ > 0 and k > 0, which must therefore be imposed as assumptions on the symbolic engine (originally the Maple kernel) before integrating:

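```matlab
assume(lam > 0)   % rate must be positive for the w integral to converge
assume(k > 0)     % needed for the t integral of t^(k-1) to converge at t = 0
syms w tau
% inner integral over w from tau - t to inf, outer integral over t from 0 to tau
ap = int(int(f*lam*exp(-lam*w), w, tau-t, inf), t, 0, tau);
pretty(ap)
```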

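```text
    k~     k~
 tau   lam~   exp(-lam~ tau)
 ---------------------------
             k~!
```

In this output the tilde marks a variable that carries an assumption. The result is the Poisson probability P[K = k in (0, τ)] = (λτ)^k e^{-λτ}/k!.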
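The result can also be checked by simulation. The Python sketch below (assumed parameters k = 3, λ = 1.5, τ = 2 and a fixed seed; the function name is hypothetical) draws T as a sum of k exponential interarrival times and W as one more, counts how often T ≤ τ < T + W, and compares that fraction with (λτ)^k e^{-λτ}/k!. With 200,000 trials the estimate should agree to within roughly a hundredth.

```python
import math
import random

def simulate_k_in_interval(k: int, lam: float, tau: float, trials: int, seed: int = 1) -> float:
    """Fraction of trials with T <= tau < T + W, where T is the sum of k
    exponential interarrival times and W is the (k+1)-th interarrival time."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        t = sum(rng.expovariate(lam) for _ in range(k))  # arrival time of the k-th event
        w = rng.expovariate(lam)                         # next interarrival time
        if t <= tau < t + w:
            hits += 1
    return hits / trials

# Hypothetical parameters chosen for illustration only.
k, lam, tau = 3, 1.5, 2.0
estimate = simulate_k_in_interval(k, lam, tau, trials=200_000)
exact = (lam * tau) ** k * math.exp(-lam * tau) / math.factorial(k)
print(f"simulated {estimate:.4f}  vs  (lam*tau)^k exp(-lam*tau)/k! = {exact:.4f}")
```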

We will now show that, given k events in (0, τ), each event time t_i, i = 1, ..., k, is uniformly distributed in this range. Consider first the case k = 1, with the single event at time T. The event {T ≤ t} then means one event in (0, t) and no events in (t, τ). Its conditional probability can be written as

$$P[T \le t \mid \text{one event in } (0,\tau)] = P[\text{one event in } (0,t) \mid \text{one event in } (0,\tau)]$$

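The right-hand side is a conditional probability, which can be evaluated from the definition of conditional probability (Bayes' rule):

$$P[T \le t \mid K = 1 \text{ in } (0,\tau)] = \frac{P[K = 1 \text{ in } (0,t) \text{ and } K = 1 \text{ in } (0,\tau)]}{P[K = 1 \text{ in } (0,\tau)]}$$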

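The joint event in the numerator is the same as one event in (0, t) and no events in (t, τ). Since the counts in the disjoint intervals (0, t) and (t, τ) are independent, the joint probability factors:

$$P[K = 1 \text{ in } (0,t) \text{ and } K = 1 \text{ in } (0,\tau)] = P[K = 1 \text{ in } (0,t)]\;P[K = 0 \text{ in } (t,\tau)]$$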

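Using the Poisson distribution for each of the probabilities on the right-hand side of the equations above, we have

$$P[T \le t \mid K = 1 \text{ in } (0,\tau)] = \frac{\lambda t\,e^{-\lambda t}\; e^{-\lambda(\tau - t)}}{\lambda \tau\, e^{-\lambda \tau}} = \frac{t}{\tau}, \qquad 0 \le t \le \tau$$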
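The k = 1 calculation is easy to reproduce numerically. The Python sketch below (hypothetical helper names; λ = 0.7 and τ = 5 are arbitrary) evaluates the same ratio of Poisson probabilities and prints it next to t/τ. Because λ cancels, the result does not depend on the rate.

```python
import math

def poisson_pmf(n: int, mean: float) -> float:
    """P[K = n] for a Poisson random variable with the given mean."""
    return mean ** n * math.exp(-mean) / math.factorial(n)

def conditional_cdf(t: float, lam: float, tau: float) -> float:
    """P[T <= t | one event in (0, tau)] via the conditional-probability ratio in the text."""
    joint = poisson_pmf(1, lam * t) * poisson_pmf(0, lam * (tau - t))
    return joint / poisson_pmf(1, lam * tau)

# Hypothetical parameters chosen for illustration only.
lam, tau = 0.7, 5.0
for t in (0.5, 1.0, 2.5, 4.0):
    print(t, conditional_cdf(t, lam, tau), t / tau)
```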

This is the cumulative distribution function of a uniform distribution on (0, τ). The same reasoning shows that, for general k, the k events are independently and uniformly located in (0, τ) when taken in random order; the sorted times t_1 < ... < t_k are then the order statistics of k such uniform samples.
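A simulation illustrates the general-k statement. The Python sketch below rests on assumed settings (λ = 1, τ = 3, k = 3, a fixed seed, hypothetical helper names): it generates a Poisson process on (0, τ) from exponential gaps, keeps only the runs with exactly k arrivals, and checks two things. The pooled event times should fall below τ/3 about one third of the time, as for a uniform distribution, while the earliest arrival should average about τ/(k + 1) = 0.75, as expected for the minimum of k uniform samples.

```python
import random

def arrivals_in_window(lam: float, tau: float, rng: random.Random) -> list[float]:
    """Arrival times in (0, tau) of a Poisson process built from exponential gaps."""
    times = []
    t = rng.expovariate(lam)
    while t < tau:
        times.append(t)
        t += rng.expovariate(lam)
    return times

def conditional_times(k: int, lam: float, tau: float, runs: int, seed: int = 7) -> list[list[float]]:
    """Keep only the runs that produced exactly k arrivals in (0, tau)."""
    rng = random.Random(seed)
    kept = []
    for _ in range(runs):
        times = arrivals_in_window(lam, tau, rng)
        if len(times) == k:
            kept.append(times)
    return kept

# Hypothetical parameters chosen for illustration only.
k, lam, tau = 3, 1.0, 3.0
runs = conditional_times(k, lam, tau, runs=100_000)
pooled = [t for times in runs for t in times]           # unordered event times
frac_below_third = sum(t <= tau / 3 for t in pooled) / len(pooled)
mean_first = sum(times[0] for times in runs) / len(runs)  # earliest (ordered) arrival
print(len(runs), frac_below_third, mean_first)
```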

The mean and variance of the Poisson random variable K are computed next. For simplicity we let α = λτ. The gamma function replaces k! in the result, since Γ(k + 1) = k! for non-negative integers k.

```matlab
syms alp
app = subs(ap, lam*tau, alp);    % replace the product lam*tau by alp
app = subs(app, tau, alp/lam);   % eliminate any remaining tau
app = simplify(app);
pretty(app)
```

```text
    k~
 alp   exp(-alp)
 ---------------
  gamma(k~ + 1)
```
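The replacement of k! by Γ(k + 1) can be checked numerically, along with the fact that the probabilities for K sum to 1. This Python sketch uses the standard-library functions `math.gamma` and `math.factorial`; the value α = 2.5 and the truncation at 100 terms are arbitrary choices.

```python
import math

def poisson_pmf(k: int, alp: float) -> float:
    """P[K = k] = alp^k exp(-alp) / gamma(k + 1), with alp = lam * tau."""
    return alp ** k * math.exp(-alp) / math.gamma(k + 1)

# gamma(k + 1) agrees with k! for non-negative integers k
gamma_matches = all(math.isclose(math.gamma(k + 1), math.factorial(k)) for k in range(20))

alp = 2.5
total = sum(poisson_pmf(k, alp) for k in range(100))  # truncated sum over k = 0, 1, 2, ...
print(gamma_matches, total)
```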

The mean E[K] is

```matlab
EK = symsum(k*app, k, 0, inf)   % sum over the index k from 0 to infinity
simplify(EK)
```

```text
EK =

alp*exp(-alp)*exp(alp)

ans =

alp
```
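The same mean can be approximated by truncating the series E[K] = Σ k α^k e^{-α}/k!. In this Python sketch the cutoff of 150 terms, the helper names, and the test values of α are assumptions; for moderate α the neglected tail is far below double-precision rounding, so the printed values should reproduce α.

```python
import math

def poisson_pmf(k: int, alp: float) -> float:
    """P[K = k] for a Poisson random variable with mean alp."""
    return alp ** k * math.exp(-alp) / math.factorial(k)

def poisson_mean(alp: float, terms: int = 150) -> float:
    """Truncated version of the series E[K] = sum over k of k * P[K = k]."""
    return sum(k * poisson_pmf(k, alp) for k in range(terms))

for alp in (0.5, 2.0, 7.3):
    print(alp, poisson_mean(alp))
```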

The second moment is

```matlab
EK2 = symsum(k^2*app, k, 0, inf);
simplify(EK2)
```

```text
ans =

alp*(alp+1)
```

and the variance is

```matlab
'VAR[K] = '
VAR = EK2 - EK^2;
simplify(VAR)
```

```text
ans =

VAR[K] =

ans =

alp
```

Both the mean and the variance of K equal α = λτ.
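The second moment and variance can be checked the same way with truncated series. This Python sketch (arbitrary α = 3.2, a 150-term cutoff, and a hypothetical helper name) should reproduce E[K²] = α(α + 1) and Var[K] = α up to rounding.

```python
import math

def poisson_moments(alp: float, terms: int = 150) -> tuple[float, float, float]:
    """Return (E[K], E[K^2], Var[K]) from truncated Poisson series with mean alp."""
    pmf = [alp ** k * math.exp(-alp) / math.factorial(k) for k in range(terms)]
    m1 = sum(k * p for k, p in enumerate(pmf))
    m2 = sum(k * k * p for k, p in enumerate(pmf))
    return m1, m2, m2 - m1 ** 2

alp = 3.2
m1, m2, var = poisson_moments(alp)
print(m1, m2, var)
```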