Professor Judy Sebba: Inter-disciplinary research / Innovative policy and practice

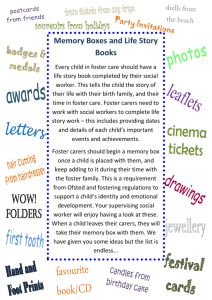

advertisement


Inter-disciplinary research/innovative policy and practice: How to manage the interface effectively

Centre for Social Work Innovation and Research, University of Sussex
12 October 2015

Judy Sebba
Director, Rees Centre for Research in Fostering and Education
University of Oxford Department of Education
judy.sebba@education.ox.ac.uk

Rees Centre for Research in Fostering and Education

The Rees Centre aims to:
• identify what works to improve the outcomes and life chances of children and young people in foster care

We are doing this by:
• reviewing existing research in order to make better use of current evidence
• conducting new research to address gaps
• working with service users to identify research priorities and translate research messages into practice
• employing foster carers and care experienced young people as co-researchers
• developing research-mindedness in the services

Centre is funded by the Core Assets Group and has grants from a range of other funders

Rees Centre Research overview

Reviews
• Motivation to foster (2012);
• Peer support between foster carers (2013);
• Selection of foster carers (2013);
• Impact of fostering on foster carers’ own children (2013);
• Effective parent-and-child fostering;
• Mental health interventions for LAC (NSPCC, 2014);
• Role of the Supervising Social Worker (2014);
• Recruitment & selection of LGBT carers (2015);
• Educational outcomes of LAC (2015);
• Impact of siblings placements (due Nov 2015).

Research Projects – completed and current
• Investigating people’s motivation to foster (completed);
• Increasing the benefits of foster care support (completed);
• Mental health of children in care across primary-secondary school transition (with Surrey NHS Trust & Sussex University);
• Impact on carers of allegations (pilot completed, main study funded FosterTalk/Sir Halley Stewart Trust);
• Educational progress of children in care (completed, funded Nuffield);
• Evaluation of the Siblings Together programme (funded Siblings Together);
• Evaluation of Step Down (moving children from residential to foster care, funded by Social Impact Bond/Cardiff CC and Birmingham LA);
• Evaluation of pan-London Achievement for All/foster carer training to support education (with Loughborough, funded by GLA);
• DfE Children’s Social Care Innovation Fund – Evaluation Coordinator (57 projects, 22 evaluation teams, quality control of evaluations, funded DfE);
• Evaluation of Elev8 (intensive fostering for youth offenders);
• Evaluation of Attachment-Aware Schools Programme (funded by Bath and NE Somerset LA);

Doctoral research projects on education of children in care, impact of types of care in Romania, health experiences of children in care and characteristics and selection of foster carers in Portugal and England.

Aim of the session

Explore how research, policy and practice speak effectively together and with integrity, where innovation has become the primary task.

Should innovation be the primary task? Does innovation improve people’s lives?

What is innovation?

Definition provided by NESTA in our project: The development and dissemination of a new product, service or process that produces economic, social or cultural change.

Little doubt that we can all identify areas of children’s social care that need economic, social or cultural change. But is innovation the best way to achieve this?
In the NESTA project, ‘innovative’ applied to the initial idea or any part of the implementation process.

Challenges
• Generate and ‘translate’ (for practice) research findings/data analysis, including evaluation of others’ innovations, that are capable of leading to improvement AND inform sustainability, roll-out and maybe further innovation;
• Find ways of ensuring your findings hit the button with policymakers at the right time;
• Develop practice that reflects ‘evidence’ without having every social worker/teacher completing a doctorate;
• Maintain your research integrity throughout.

Which do you currently do best and why?

The Children’s Social Care Innovation Programme
• 57 projects, 22 evaluation teams, Rees Centre coordinates evaluation & provides over-arching evaluation of programme;
• Over half of all LAs involved, 55% of projects led by LAs;
• Three main areas:
  • Rethinking children’s social work – improving the quality and impact of children’s social work – 16 projects;
  • Rethinking support for adolescents in or on the edge of care – improving the quality and impact of services for adolescents to transition successfully into adulthood – 5 projects;
  • Other priorities – residential, adoption, mental health, CSE, housing, commissioning and delegation – 36 projects.

Started Jan-now 2015, end March 2016

Truly innovative???

Generating findings that are capable of leading to improvement
• Engage practitioners and policy makers from the outset – inform research questions, data collection methods & interpretations – at all levels of practice – impact ongoing;
• Does the evidence allow us ‘to intervene with the most benefit and the least harm’ as Oakley (2000) urged us to;
• Value of full range of methodologies is acknowledged – IP reiterate principle of evaluations being fit for purpose but national policymaking inevitably needs numbers, practice needs examples – better understanding of mixed methods;
• Work through implications to targeted recommendations;
• Taking account of context – innovations developed elsewhere or that are to be implemented elsewhere, transferability?

Oakley, A. (2000) Experiments in Knowing. London: John Wiley.

The current evidence base in children’s services

(adapted from Stevens, M., Liabo, K., Witherspoon, S. and Roberts, H. (2009) What do practitioners want from research, what do funders fund and what needs to be done to know more about what works in the new world of children's services? Evidence & Policy, vol 5, no 3: 281-294)

| Methods (selective) used in 625 studies | No of studies | % of studies |
|---|---|---|
| Qualitative | 230 | 37 |
| Mixed method | 108 | 17 |
| Longitudinal | 74 | 12 |
| Quantitative dataset analysis | 16 | 3 |
| Non-randomised trial | 8 | 1 |
| RCT | 3 | <1 |
| Systematic review | 2 | <1 |

Hitting the button with policymakers/funders
• Early negotiation of expectations is crucial;
• Understanding their concerns, interests and trying to reframe these as evaluation or research questions;
• Timing – maybe not now but findings can still be relevant later e.g. permanence research, AfL, etc.
used years later;
• Only the research analysts are interested in your methodology and technical debates – just bare essentials;
• Don’t underestimate the power of senior civil servants over ministers – continuity is an important source of power;
• Keep communications crisp, clear and short – keep the discursive debate for your academic colleagues;
• Stay positive, constructive, flexible, adopt a ‘can do’ approach.

Developing practice that reflects evidence

Sounds so easy, so difficult to achieve.
• Engage as co-researchers with training, realistically, though Gray et al (2015) noted that some want to be engaged in the whole process while others prefer translated ‘guidelines’;
• Help practitioners to avoid ‘recycling garbage’ by replacing the footprints of gurus with some ‘knowledge claims’;
• Get managers on board with developing better use of research by starting with their key concerns;
• Researchers-in-residence, embedded researchers?
• Professional expectations – still not expected to use research in SW or teaching standards.

Gray, M., Joy, E., Plath, D., & Webb, S. A. (2015). What supports and impedes evidence-based practice implementation? A survey of Australian social workers. British Journal of Social Work, 45(2), 667-684.

Maintaining your research integrity
• Winch (2001) warns of the dangers of giving the sponsors what it is they want to hear in order to increase the chances of influencing and being consulted in the future;
• He notes the opposite extreme of failing to understand and acknowledge views that extend beyond the researcher’s own ideals by remaining ‘excessively true to oneself’;
• Both are important. Be prepared to say no or not yet but also be prepared to ‘drip feed’ best estimate to date;
• Reiterate the ethical principles by which you operate, in particular when service providers seem happy to impose data collection on their service users without their consent;
• Look to your colleagues for support!

Winch, C.
(2001) Accountability and relevance in educational research. Journal of Philosophy of Education, 35, 443-459.

Preventing and treating poor mental health

http://reescentre.education.ox.ac.uk/research/mental-health/
• Report commissioned by NSPCC
• Practitioners need to know whether a particular intervention is likely to work
• Report provides an indication of the strength of the evidence for a range of interventions
• Also looks at the context in which these interventions operate
  – i.e. the importance of 'ordinary care' as an intervention in itself
  – evaluations of interventions often miss out the importance of context and children's individual experiences
  – important to think how quality of care environment and decisions made can influence well-being before using targeted (and often costly) interventions

School for Policy Studies

The Educational Progress of Looked After Children in England: Linking Care and Educational Data

Funded by The Nuffield Foundation
February 2014 – April 2015

Educational outcomes of looked after children in England (Source: DfE, 2013)

Project aims and purpose
• What are the key factors contributing to the low educational outcomes of children in care in secondary schools in England?
• How does linking care and educational data contribute to our understanding of how to improve their attainment and progress?

To inform resource priorities of central and local government, practice of professionals and the databases used to monitor outcomes.

Research design

How did we do this?
• Linking national data sets on the education (National Pupil Database) and care experiences of looked after children in England (SSDA903)
  – to explore the relationship between educational outcomes, the children’s care histories and individual characteristics, and practice and policy in different local authorities
• Interviews with 36 children in six local authorities and with their carers, teachers, social workers and Virtual School staff
  – to complement and expand on the statistical analyses, and to explore factors not recorded in the databases (e.g. foster carers’ attitudes to education, role of the Virtual School)
  – foster carers (FCs) and young people (YP) as co-researchers.

C There are 10 main barriers to radical improvement and innovation in children’s services in England (1/3)

External context
▪ Significant growth in demand e.g. looked-after children 1.8% pa
▪ Confusion on State’s role in sector
▪ Intense public and media response to public scandal puts spotlight on industry
▪ LAs facing significant budget pressure – will increasingly be looking to children’s services for efficiencies

Structure
C1 LAs can lack the critical mass and organisational competence to commission for radical improvement and innovation in services
C2 Service provider organisations aren’t always incentivised to radically improve and innovate their services

Conduct
C3 Frontline social care staff don’t always have the time, skills or confidence to radically improve and innovate services
C4 LA leadership can lack capability and incentives to radically improve and innovate services
C5 There are some legal barriers, and many perceived barriers, to radically improving and innovating services
C6 New innovations often fail to ‘spark’, and proven innovations often fail to spread effectively across LAs
C7 Poor data quality and availability makes it hard for social workers, LAs, regulators, and central government to drive radical improvement and innovation
C8 Current performance management system tends to promote compliance rather than radical improvement or innovation
C9 Challenges in collaboration at the interface of different agencies limit innovation, particularly for child protection
C10 Culture of resistance to change and risk aversion

Performance
▪ High variability across LAs in child outcomes:
  – GCSE attainment gap (highest is ~2.6x the size of the lowest)
  – Care quality, % of children experiencing <3 placements (highest is 1.4x lowest)
  – Value for money (up to 7.5x more costly)
▪ Limited correlation between spend and outcomes
▪ There is a significant gap in outcomes between cared-for children and other children e.g. 44% attainment gap at GCSE

Challenging our own practice – Knowledge Claims
1. The quality of ‘ordinary care’ influences the outcomes of mental health interventions – what are we doing to help social workers improve ‘ordinary care’ before requesting specialist services e.g. MST?
2. Young people who changed school in Year 10 or 11 scored 33.9 points (over five grades) less at GCSE than those who did not – what are you doing to ensure social workers prevent this?
3. Preparing and supporting carers’ own children for fostering reduces placement disruptions – how is this done in the services?
4. Do the social workers you train who become Supervising Social Workers challenge foster carers as well as supporting them?
5. Do the services undertake assessments of potential LGBT carers that include questions that would not be asked of other potential carers?

How you can be involved
• Express interest in being involved in future possible research projects;
• Come along to lectures & seminars and log into webinars;
• Join our mailing list and receive newsletters 5 times/year: rees.centre@education.ox.ac.uk;
• Web - http://reescentre.education.ox.ac.uk/;
• Comment on our blog – or write for us;
• Follow us on Twitter - @reescentre and Facebook