OceanStore Toward Global-Scale, Self-Repairing, Secure and Persistent Storage John Kubiatowicz

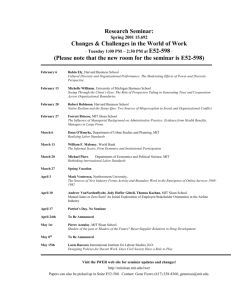

OceanStore

Toward Global-Scale, Self-Repairing,

Secure and Persistent Storage

John Kubiatowicz

University of California at Berkeley

OceanStore Context:

Ubiquitous Computing

• Computing everywhere:

– Desktop, Laptop, Palmtop

– Cars, Cellphones

– Shoes? Clothing? Walls?

• Connectivity everywhere:

– Rapid growth of bandwidth in the interior of the net

– Broadband to the home and office

– Wireless technologies such as CDMA, satellite, laser

• Where is persistent data????

MIT Seminar

©2002 John Kubiatowicz/UC Berkeley

OceanStore:2

Utility-based Infrastructure?

[Figure: federation of storage providers: Canadian OceanStore, Sprint, AT&T, Pac Bell, IBM]

• Data service provided by storage federation

• Cross-administrative domain

• Pay for Service


OceanStore:

Everyone’s Data, One Big Utility

“The data is just out there”

• How many files in the OceanStore?

– Assume 10^10 people in world

– Say 10,000 files/person (very conservative?)

– So 10^14 files in OceanStore!

– If 1 gig files (ok, a stretch), get about 10^23 bytes: on the order of a mole of bytes!

Truly impressive number of elements…

… but small relative to physical constants

Aside: new results: 1.5 Exabytes/year (1.5×10^18)
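The estimate above can be checked with a few lines of arithmetic. This is only a back-of-envelope sketch: the inputs are the slide's own rough guesses, and the variable names are made up for illustration.

```python
# Back-of-envelope scale estimate; every input is a rough guess from the slide.
PEOPLE = 10**10
FILES_PER_PERSON = 10**4
BYTES_PER_FILE = 10**9        # "1 gig" per file, which the slide calls a stretch
AVOGADRO = 6.022e23           # particles per mole

total_files = PEOPLE * FILES_PER_PERSON
total_bytes = total_files * BYTES_PER_FILE
moles_of_bytes = total_bytes / AVOGADRO

print(f"files: {total_files:.0e}")
print(f"bytes: {total_bytes:.0e}")
print(f"moles of bytes: {moles_of_bytes:.2f}")
```

The result is about a sixth of a mole, so "a mole of bytes" holds as an order-of-magnitude statement.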


OceanStore Assumptions

• Untrusted Infrastructure:

– The OceanStore is comprised of untrusted components

– Individual hardware has finite lifetimes

– All data encrypted within the infrastructure

• Responsible Party:

– Some organization (i.e. service provider) guarantees that your data is consistent and durable

– Not trusted with content of data, merely its integrity

• Mostly Well-Connected:

– Data producers and consumers are connected to a high-bandwidth network most of the time

– Exploit multicast for quicker consistency when possible

• Promiscuous Caching:

– Data may be cached anywhere, anytime


The Peer-To-Peer View:

Irregular Mesh of “Pools”


Key Observation:

Want Automatic Maintenance

• Can’t possibly manage billions of servers by hand!

• System should automatically:

– Adapt to failure

– Exclude malicious elements

– Repair itself

– Incorporate new elements

• System should be secure and private

– Encryption, authentication

• System should preserve data over the long term (accessible for 1000 years):

– Geographic distribution of information

– New servers added from time to time

– Old servers removed from time to time

– Everything just works


Outline:

Three Technologies and

a Principle

Principle: ThermoSpective Systems Design

– Redundancy and Repair everywhere

1. Structured, Self-Verifying Data

– Let the Infrastructure Know What is important

2. Decentralized Object Location and Routing

– A new abstraction for routing

3. Deep Archival Storage

– Long Term Durability


ThermoSpective

Systems


Goal: Stable, large-scale systems

• State of the art:

– Chips: 10^8 transistors, 8 layers of metal

– Internet: 10^9 hosts, terabytes of bisection bandwidth

– Societies: 10^8 to 10^9 people, 6-degrees of separation

• Complexity is a liability!

– More components → Higher failure rate

– Chip verification > 50% of design team

– Large societies unstable (especially when centralized)

– Small, simple, perfect components combine to generate complex emergent behavior!

• Can complexity be a useful thing?

– Redundancy and interaction can yield stable behavior

– Better figure out new ways to design things…


Question: Can we exploit Complexity

to our Advantage?

Moore’s Law gains → Potential for Stability


The Thermodynamic Analogy

• Large Systems have a variety of latent order

– Connections between elements

– Mathematical structure (erasure coding, etc)

– Distributions peaked about some desired behavior

• Permits “Stability through Statistics”

– Exploit the behavior of aggregates (redundancy)

• Subject to Entropy

– Servers fail, attacks happen, system changes

• Requires continuous repair

– Apply energy (i.e. through servers) to reduce entropy


The Biological Inspiration

• Biological Systems are built from (extremely) faulty components, yet:

– They operate with a variety of component failures → Redundancy of function and representation

– They have stable behavior → Negative feedback

– They are self-tuning → Optimization of common case

• Introspective (Autonomic) Computing:

– Components for performing

– Components for monitoring and model building

– Components for continuous adaptation

[Figure: cycle of Dance, Monitor, Adapt]

ThermoSpective

[Figure: cycle of Dance, Monitor, Adapt]

• Many Redundant Components (Fault Tolerance)

• Continuous Repair (Entropy Reduction)


Object-Based

Storage


Let the Infrastructure help You!

• End-to-end and everywhere else:

– Must distribute responsibility to guarantee:

QoS, Latency, Availability, Durability

• Let the infrastructure understand the

vocabulary or semantics of the application

– Rules of correct interaction?


OceanStore Data Model

• Versioned Objects

– Every update generates a new version

– Can always go back in time (Time Travel)

• Each Version is Read-Only

– Can have permanent name

– Much easier to repair

• An Object is a signed mapping between permanent name and latest version

– Write access control/integrity involves managing these mappings

[Figure: Comet Analogy (updates, versions)]

Secure Hashing

DATA

SHA-1

160-bit GUID

• Read-only data: GUID is hash over actual data

– Uniqueness and Unforgeability: the data is what it is!

– Verification: check hash over data

• Changeable data: GUID is combined hash over a

human-readable name + public key

– Uniqueness: GUID space selected by public key

– Unforgeability: public key is indelibly bound to GUID

• Thermodynamic insight: Hashing makes

“data particles” unique, simplifying interactions
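The two naming rules above can be sketched with the standard library. This is a minimal illustration, not OceanStore's actual encoding: the function names and the way the name and key are concatenated before hashing are assumptions.

```python
import hashlib


def block_guid(data: bytes) -> str:
    """GUID of read-only data: the SHA-1 hash (160 bits) over the data itself."""
    return hashlib.sha1(data).hexdigest()


def verify_block(guid: str, data: bytes) -> bool:
    """Self-verification: recompute the hash and compare it with the name."""
    return block_guid(data) == guid


def active_guid(name: str, public_key: bytes) -> str:
    """GUID of changeable data: hash over human-readable name + public key.

    Assumed encoding (hypothetical): a 4-byte length prefix, the UTF-8 name,
    then the raw key bytes. The prefix keeps (name, key) pairs unambiguous.
    """
    encoded = name.encode("utf-8")
    prefix = len(encoded).to_bytes(4, "big")
    return hashlib.sha1(prefix + encoded + public_key).hexdigest()


guid = block_guid(b"some immutable block")
print(verify_block(guid, b"some immutable block"))   # untouched data verifies
print(verify_block(guid, b"some tampered block"))    # altered data does not
print(active_guid("inbox", b"alice-key") != active_guid("inbox", b"bob-key"))
```

Because the public key is an input to the hash, two owners using the same human-readable name land in different parts of the GUID space.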


Self-Verifying Objects

AGUID = hash{name + keys}

[Figure: each version (VGUID_i, VGUID_i+1) is the root of a B-tree of indirect blocks (M) over data blocks d1 to d9; the newer version keeps a backpointer to the older one and is produced by copy on write, adding new blocks d'8 and d'9]

Heartbeat: {AGUID, VGUID, Timestamp} signed

Updates: Heartbeats + Read-Only Data

The Path of an OceanStore Update

[Figure: Clients, Inner-Ring Servers, Second-Tier Caches, Multicast trees]

OceanStore Consistency via

Conflict Resolution

• Consistency is a form of optimistic concurrency

– An update packet contains a series of predicate-action pairs which operate on encrypted data

– Each predicate tried in turn:

• If none match, the update is aborted

• Otherwise, action of first true predicate is applied

• Role of Responsible Party

– All updates submitted to Responsible Party which

chooses a final total order

– Byzantine agreement with threshold signatures

• This is powerful enough to synthesize:

– ACID database semantics

– release consistency (build and use MCS-style locks)

– Extremely loose (weak) consistency
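The predicate-action rule above can be sketched as follows. This is a simplified illustration: it works on a plaintext dictionary rather than encrypted data, it ignores ordering and Byzantine agreement, and the names `apply_update` and `append_if_version_3` are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, object]
Predicate = Callable[[State], bool]
Action = Callable[[State], State]


def apply_update(state: State, pairs: List[Tuple[Predicate, Action]]) -> Tuple[State, bool]:
    """Try each predicate in turn and apply the action of the first true one.

    Returns (new_state, True) on commit, or (unchanged state, False) when no
    predicate matches and the update is aborted.
    """
    for predicate, action in pairs:
        if predicate(state):
            return action(state), True
    return state, False


# Compare-and-append: commit only if the object is still at version 3.
append_if_version_3 = [
    (
        lambda s: s["version"] == 3,
        lambda s: {"version": s["version"] + 1, "body": s["body"] + "d"},
    )
]

state = {"version": 3, "body": "abc"}
state, committed = apply_update(state, append_if_version_3)
print(state, committed)

# Replaying the same update against the new version finds no true predicate.
state, committed_again = apply_update(state, append_if_version_3)
print(state, committed_again)
```

A version check like this one gives optimistic concurrency; a predicate that is always true gives the loose end of the spectrum.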


Self-Organizing Soft-State

Replication

• Simple algorithms for placing replicas on nodes in the interior

– Intuition: locality properties of Tapestry help select positions for replicas

– Tapestry helps associate parents and children to build multicast tree

• Preliminary results show that this is effective


Decentralized

Object Location

and Routing


Locality, Locality, Locality

One of the defining principles

• The ability to exploit local resources over remote

ones whenever possible

• “-Centric” approach

– Client-centric, server-centric, data source-centric

• Requirements:

– Find data quickly, wherever it might reside

• Locate nearby object without global communication

• Permit rapid object migration

– Verifiable: can’t be sidetracked

• Data name cryptographically related to data


Enabling Technology: DOLR

(Decentralized Object Location and Routing)

[Figure: the DOLR layer locating and routing to objects by GUID (GUID1, GUID2)]

Basic Tapestry Mesh

Incremental Prefix-based Routing

[Figure: Tapestry routing mesh. Nodes (NodeID 0xEF97, 0xEF32, 0xE399, 0xE530, 0x099F, 0xE555, 0xFF37, 0xEF34, 0xEF37, 0xEF44, 0xEFBA, 0xEF40, 0xEF31, 0x0999, 0xE324, 0xE932, 0x0921) are joined by links labeled with routing levels 1 to 4]
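Incremental prefix-based routing can be sketched as a greedy walk in which every hop shares a longer ID prefix with the destination than the previous one. The sketch below is a simplification: real Tapestry keeps a routing table indexed by level and digit, whereas this code scans a flat neighbor list. The node IDs are taken from the mesh figure, but the neighbor lists in `tables` are made up for illustration.

```python
from typing import Dict, List, Optional


def shared_prefix_len(a: str, b: str) -> int:
    """Number of leading digits that two node IDs have in common."""
    count = 0
    for x, y in zip(a, b):
        if x != y:
            break
        count += 1
    return count


def next_hop(current: str, dest: str, neighbors: List[str]) -> Optional[str]:
    """Pick the neighbor that extends the matched prefix the furthest, if any."""
    best, best_len = None, shared_prefix_len(current, dest)
    for candidate in neighbors:
        matched = shared_prefix_len(candidate, dest)
        if matched > best_len:
            best, best_len = candidate, matched
    return best


def route(src: str, dest: str, tables: Dict[str, List[str]]) -> List[str]:
    """Follow next hops until the destination is reached."""
    path = [src]
    while path[-1] != dest:
        hop = next_hop(path[-1], dest, tables.get(path[-1], []))
        if hop is None:
            raise LookupError(f"no neighbor of {path[-1]} is closer to {dest}")
        path.append(hop)
    return path


# Hypothetical neighbor lists; the figure's real links are not reproduced here.
tables = {
    "0921": ["E932", "099F"],
    "E932": ["EF44", "E324"],
    "EF44": ["EF32", "EFBA"],
    "EF32": ["EF37", "EF31"],
}

print(route("0921", "EF37", tables))
```

Since the matched prefix grows by at least one digit per hop, a route over 4-digit IDs takes at most four hops.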

Use of Tapestry Mesh

Randomization and Locality


Stability under Faults

• Instability is the common case….!

– Small half-life for P2P apps (1 hour????)

– Congestion, flash crowds, misconfiguration, faults

• Must Use DOLR under instability!

– The right thing must just happen

• Tapestry is natural framework to exploit

redundant elements and connections

– Multiple Roots, Links, etc.

– Easy to reconstruct routing and location information

– Stable, repairable layer

• Thermodynamic analogies:

– Heat Capacity of DOLR network

– Entropy of Links (decay of underlying order)


Single Node Tapestry

[Figure: layers of a single Tapestry node, top to bottom]

• Applications: Application-Level Multicast, OceanStore, Other Applications

• Application Interface / Upcall API

• Dynamic Node Management, Routing Table, Router, Object Pointer DB

• Network Link Management

• Transport Protocols

It’s Alive!

• Planet Lab global network

– 98 machines at 42 institutions, in North America, Europe, Australia (~ 60 machines utilized)

– 1.26 GHz PIII (1 GB RAM), 1.8 GHz PIV (2 GB RAM)

– North American machines (2/3) on Internet2

• Tapestry Java deployment

– 6-7 nodes on each physical machine

– IBM Java JDK 1.30

– Node virtualization inside JVM and SEDA

– Scheduling between virtual nodes increases latency

Object Location

[Figure: RDP (min, median, 90%) versus client-to-object RTT ping time (1 ms buckets)]

Tradeoff: Storage vs Locality


Management Behavior

[Figure, left: Integration Latency (ms) versus Size of Existing Network (nodes). Right: Control Traffic BW (KB) versus Size of Existing Network (nodes)]

• Integration Latency (Left)

– Humps = additional levels

• Cost/node Integration Bandwidth (right)

– Localized!

• Continuous, multi-node insertion/deletion works!


Deep Archival Storage


Two Types of OceanStore Data

• Active Data: “Floating Replicas”

– Per object virtual server

– Interaction with other replicas for consistency

– May appear and disappear like bubbles

• Archival Data: OceanStore’s Stable Store

– m-of-n coding: Like hologram

• Data coded into n fragments, any m of which are sufficient to reconstruct (e.g. m=16, n=64)

• Coding overhead is proportional to n/m (e.g. 4)

• Other parameter, rate, is 1/overhead

– Fragments are cryptographically self-verifying

• Most data in the OceanStore is archival!
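The m-of-n property can be demonstrated with a toy code based on polynomial interpolation over a small prime field. This is a sketch of the idea only, not the prototype's coder: the field size 257, the function names, and the tiny parameters (m=4, n=16, which keeps the overhead n/m = 4) are all assumptions made for illustration.

```python
from typing import List, Tuple

PRIME = 257  # smallest prime above 255, so every byte value is a field element

Fragment = Tuple[int, int]  # (evaluation point x, polynomial value y)


def _interpolate_at(points: List[Fragment], x: int) -> int:
    """Lagrange-evaluate at x the unique polynomial of degree < len(points)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % PRIME
                den = den * (xi - xj) % PRIME
        total = (total + yi * num * pow(den, -1, PRIME)) % PRIME
    return total


def encode(data: List[int], n: int) -> List[Fragment]:
    """Turn m data symbols into n fragments; the first m carry the data itself."""
    if not len(data) <= n <= PRIME:
        raise ValueError("need len(data) <= n <= PRIME")
    points = list(enumerate(data))
    return [(x, _interpolate_at(points, x)) for x in range(n)]


def decode(fragments: List[Fragment], m: int) -> List[int]:
    """Rebuild the m data symbols from any m distinct fragments."""
    if len(fragments) < m:
        raise ValueError("not enough fragments to reconstruct")
    points = fragments[:m]
    return [_interpolate_at(points, x) for x in range(m)]


data = [79, 99, 101, 97]             # m = 4 symbols
fragments = encode(data, 16)         # n = 16 fragments, overhead n/m = 4
survivors = [fragments[15], fragments[6], fragments[9], fragments[12]]
print(decode(survivors, 4) == data)  # any 4 of the 16 are enough
```

A fragment value can be 256 here, which does not fit in a byte; practical Reed-Solomon coders avoid this by working in a field of size 2^8.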


Archival Dissemination

of Fragments


Fraction of Blocks Lost

per Year (FBLPY)

• Exploit law of large numbers for durability!

• 6 month repair, FBLPY:

– Replication: 0.03

– Fragmentation: 10^-35
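The gap between the two schemes follows from a binomial tail. The sketch below assumes a deliberately simple model that is not necessarily the one behind the slide's numbers: each server fails independently with probability `p_fail` during a repair epoch, and a block is lost if fewer than m of its n fragments survive the epoch. The value `p_fail = 0.1` and the 4-replica comparison are hypothetical, so the outputs are not expected to reproduce the figures above.

```python
import math


def epoch_loss_probability(n: int, m: int, p_fail: float) -> float:
    """P(fewer than m of n fragments survive one repair epoch)."""
    p_ok = 1.0 - p_fail
    return sum(
        math.comb(n, k) * p_ok**k * p_fail ** (n - k)
        for k in range(m)
    )


def yearly_loss_fraction(n: int, m: int, p_fail: float, epochs_per_year: int) -> float:
    """Fraction of blocks lost per year: 1 - (1 - p_epoch)^epochs.

    Computed with log1p/expm1 so that tiny probabilities do not round to zero.
    """
    p_epoch = epoch_loss_probability(n, m, p_fail)
    return -math.expm1(epochs_per_year * math.log1p(-p_epoch))


P_FAIL = 0.1   # hypothetical per-epoch server failure probability
EPOCHS = 2     # 6 month repair, so two epochs per year

# Same 4x storage overhead in both cases.
replication = yearly_loss_fraction(n=4, m=1, p_fail=P_FAIL, epochs_per_year=EPOCHS)
fragmentation = yearly_loss_fraction(n=64, m=16, p_fail=P_FAIL, epochs_per_year=EPOCHS)

print(f"replication (4 copies):   {replication:.3e}")
print(f"fragmentation (16 of 64): {fragmentation:.3e}")
```

Replication is modeled as the special case m=1: the block survives as long as one copy does. With fragments, loss requires 49 or more of the 64 servers to fail in the same epoch, which is where the law of large numbers helps.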


The Dissemination Process:

Achieving Failure Independence

[Figure: dissemination pipeline. Labels: Model Builder, Human Input, Set Creator, probe, type, Inner Ring (two instances), fragments]

Independence Analysis

• Information gathering:

– State of fragment servers (up/down/etc)

• Correlation analysis:

– Use metric such as mutual information

– Cluster via that metric

– Result partitions servers into uncorrelated clusters
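As an illustration of the metric step, the sketch below computes the empirical mutual information between the up/down traces of two servers. The trace data and the function name are made up, and the clustering step is left out.

```python
import math
from collections import Counter
from typing import Sequence


def mutual_information(xs: Sequence[int], ys: Sequence[int]) -> float:
    """Empirical mutual information, in bits, of two equal-length traces."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("traces must be non-empty and of equal length")
    n = len(xs)
    joint = Counter(zip(xs, ys))
    count_x, count_y = Counter(xs), Counter(ys)
    total = 0.0
    for (x, y), count in joint.items():
        # p(x,y) / (p(x) p(y)) simplifies to count * n / (count_x * count_y)
        ratio = count * n / (count_x[x] * count_y[y])
        total += (count / n) * math.log2(ratio)
    return total


# Hypothetical probe results: 1 = server up, 0 = server down.
server_a = [1, 1, 0, 0, 1, 1, 0, 0]
server_b = [1, 1, 0, 0, 1, 1, 0, 0]   # fails together with server_a
server_c = [1, 0, 1, 0, 1, 0, 1, 0]   # fails independently of server_a

print(mutual_information(server_a, server_b))
print(mutual_information(server_a, server_c))
```

Servers with high mutual information (such as `server_a` and `server_b` here) would fall into the same cluster, so fragments of one object should be spread across clusters rather than within one.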


Active Data Maintenance

[Figure: tree of Tapestry nodes (4577, 9098, 1167, 0128, AE87, 3213, 6003, 5544, 3274) joined by level L1, L2, and L3 links, with a ring of L1 heartbeats]

• Tapestry enables “data-driven multicast”

– Mechanism for local servers to watch each other

– Efficient use of bandwidth (locality)


1000-Year Durability?

• Exploiting Infrastructure for Repair

– DOLR permits efficient heartbeat mechanism to notice:

• Servers going away for a while

• Or, going away forever!

– Continuous sweep through data also possible

– Erasure Code provides Flexibility in Timing

• Data continuously transferred from physical medium to physical medium

– No “tapes decaying in basement”

– Information becomes fully Virtualized

• Thermodynamic Analogy: Use of Energy (supplied by servers) to Suppress Entropy


PondStore

Prototype


First Implementation [Java]:

• Event-driven state-machine model

– 150,000 lines of Java code and growing

• Included Components

DOLR Network (Tapestry)

• Object location with Locality

• Self Configuring, Self Repairing

Full Write path

• Conflict resolution and Byzantine agreement

Self-Organizing Second Tier

• Replica Placement and Multicast Tree Construction

Introspective gathering of tacit info and adaptation

• Clustering, prefetching, adaptation of network routing

Archival facilities

• Interleaved Reed-Solomon codes for fragmentation

• Independence Monitoring

• Data-Driven Repair

• Downloads available from www.oceanstore.org


Event-Driven Architecture

of an OceanStore Node

• Data-flow style

– Arrows Indicate flow of messages

• Potential to exploit small multiprocessors at each physical node

[Figure: data-flow diagram of an OceanStore node; label: World]

First Prototype Works!

• Latest: it is up to 8MB/sec (local area network)

– Biggest constraint: Threshold Signatures

• Still a ways to go, but working


Update Latency

• Cryptography in critical path (not surprising!)


Working Applications


MINO: Wide-Area E-Mail Service

[Figure labels: Local network, Internet, Replicas, Traditional Mail Gateways, OceanStore Client API, Mail Object Layer, IMAP Proxy, SMTP Proxy, Client, OceanStore Objects]

• Complete mail solution

– Email inbox

– IMAP folders

Riptide:

Caching the Web with OceanStore


Other Apps

• Long-running archive

– Project Seagull

• File system support

– NFS with time travel (like VMS)

– Windows Installable file system (soon)

• Anonymous file storage:

– Mnemosyne uses Tapestry by itself

• Palm-pilot synchronization

– Palm database as an OceanStore DB


Conclusions

• Exploitation of Complexity

– Large amounts of redundancy and connectivity

– Thermodynamics of systems:

“Stability through Statistics”

– Continuous Introspection

• Help the Infrastructure to Help you

– Decentralized Object Location and Routing (DOLR)

– Object-based Storage

– Self-Organizing redundancy

– Continuous Repair

• OceanStore properties:

– Provides security, privacy, and integrity

– Provides extreme durability

– Lower maintenance cost through redundancy,

continuous adaptation, self-diagnosis and repair


For more info:

http://oceanstore.org

• OceanStore vision paper for ASPLOS 2000

“OceanStore: An Architecture for Global-Scale

Persistent Storage”

• Tapestry algorithms paper (SPAA 2002):

“Distributed Object Location in a Dynamic Network”

• Bloom Filters for Probabilistic Routing

(INFOCOM 2002):

“Probabilistic Location and Routing”

• Upcoming CACM paper (not until February):

– “Extracting Guarantees from Chaos”


Backup Slides


Secure Naming

[Figure: naming path from an out-of-band “Root link” through Foo, Bar, Baz to Myfile]

• Naming hierarchy:

– Users map from names to GUIDs via hierarchy of OceanStore objects (à la SDSI)

– Requires set of “root keys” to be acquired by user


Self-Organized

Replication


Effectiveness of second tier


Second Tier Adaptation:

Flash Crowd

• Actual Web Cache running on OceanStore

– Replica 1 far away

– Replica 2 close to most requestors (created t ~ 20)

– Replica 3 close to rest of requestors (created t ~ 40)


Introspective Optimization

• Secondary tier self-organized into

overlay multicast tree:

– Presence of DOLR with locality to suggest placement

of replicas in the infrastructure

– Automatic choice between update vs invalidate

• Continuous monitoring of access patterns:

– Clustering algorithms to discover object relationships

• Clustered prefetching: demand-fetching related objects

• Proactive-prefetching: get data there before needed

– Time series-analysis of user and data motion

• Placement of Replicas to Increase Availability


Statistical Advantage of Fragments

[Figure: Time to Coalesce vs. Fragments Requested (TI5000); y-axis: Latency; x-axis: Objects Requested, 16 to 31]

• Latency and standard deviation reduced:

– Memory-less latency model

– Rate ½ code with 32 total fragments
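Under a memory-less model the effect has a closed form. Assume, as a sketch, that each fragment request completes after an independent exponential delay with the same mean. With a rate ½ code and 32 fragments, any 16 suffice, so the wait is the 16th-fastest of the r requests issued. Its mean is the sum of 1/(r - i) for i = 0..15 and its variance is the sum of 1/(r - i)^2, both scaled by the mean latency. The function names and the unit mean latency are illustrative choices.

```python
import math


def expected_coalesce_time(requested: int, needed: int = 16, mean_latency: float = 1.0) -> float:
    """Mean time until `needed` of `requested` exponential requests complete."""
    if requested < needed:
        raise ValueError("must request at least as many fragments as needed")
    return mean_latency * sum(1.0 / (requested - i) for i in range(needed))


def coalesce_time_std(requested: int, needed: int = 16, mean_latency: float = 1.0) -> float:
    """Standard deviation of the same completion time."""
    if requested < needed:
        raise ValueError("must request at least as many fragments as needed")
    variance = sum(1.0 / (requested - i) ** 2 for i in range(needed))
    return mean_latency * math.sqrt(variance)


for r in (16, 20, 24, 28, 32):
    mean = expected_coalesce_time(r)
    std = coalesce_time_std(r)
    print(f"requested={r:2d}  mean={mean:.3f}  std={std:.3f}")
```

Requesting exactly 16 fragments means waiting for the slowest of them; every extra request lets the client ignore one more straggler, which lowers both the mean and the spread.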


Parallel Insertion Algorithms

(SPAA ’02)

• Massive parallel insert is important

– We now have algorithms that handle “arbitrary simultaneous inserts”

– Construction of nearest-neighbor mesh links

• Log^2 n message complexity → fully operational routing mesh

– Objects kept available during this process

• Incremental movement of pointers

• Interesting Issue: Introduction service

– How does a new node find a gateway into the

Tapestry?


Can You Delete (Eradicate) Data?

• Eradication is antithetical to durability!

– If you can eradicate something, then so can someone else!

(denial of service)

– Must have “eradication certificate” or similar

• Some answers:

– Bays: limit the scope of data flows

– Ninja Monkeys: hunt and destroy with certificate

• Related: Revocation of keys

– Need hunt and re-encrypt operation

• Related: Version pruning

– Temporary files: don’t keep versions for long

– Streaming, real-time broadcasts: Keep? Maybe

– Locks: Keep? No, Yes, Maybe (auditing!)

– Every key stroke made: Keep? For a short while?