Efficient Time-Aware Prioritization with Knapsack Solvers

advertisement

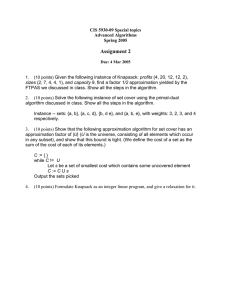

Sara Alspaugh, Kristen R. Walcott, Mary Lou Soffa (University of Virginia)
Michael Belanich, Gregory M. Kapfhammer (Allegheny College)
ACM WEASEL Tech, November 5, 2007

Test Suite Prioritization
- Testing occurs throughout the software development life cycle
- Challenge: testing is time-consuming and costly
- Prioritization: reordering the test suite
  - Goal: find errors sooner in testing
  - Does not consider the overall time budget
- Alternative: time-aware prioritization
  - Goal 1: find errors sooner in testing
  - Goal 2: execute within a time constraint

Motivating Example
Original test suite with fault information:
- T1: 4 faults, 2 min.
- T2: 1 fault, 2 min.
- T3: 2 faults, 2 min.
- T4: 6 faults, 2 min.
Assume: all tests have the same execution time, and each test finds unique faults.
Prioritized test suite: T4 (6 faults), T1 (4 faults), T3 (2 faults), T2 (1 fault), 2 min. each.
Testing time budget: 4 minutes, so only T4 and T1 run, finding 10 of the 13 faults.

The Knapsack Problem for Time-Aware Prioritization
Maximize $\sum_{i=1}^{n} c_i \cdot x_i$, where $c_i$ is the code coverage of test $T_i$ and $x_i$ is either 0 or 1.
Subject to the constraint $\sum_{i=1}^{n} t_i \cdot x_i \le t_{max}$, where $t_i$ is the execution time of test $T_i$ and $t_{max}$ is the time budget.

The Knapsack Problem for Time-Aware Prioritization (example)
Assume test cases cover unique requirements.
- T1: 4 lines, 2 min.
- T2: 1 line, 2 min.
- T3: 2 lines, 2 min.
- T4: 5 lines, 2 min.
Time budget: 4 min.
Total value: 0 → 5 (add T4) → 9 (add T1)
Space remaining: 4 → 2 → 0 min.

The Extended Knapsack Problem
- The value of each test case depends on the test cases already in the prioritization
- Test cases may cover the same requirements
Same test cases and 4-minute budget as above. After T4 is selected, the test values are updated to discount requirements that T4 already covers.
Total value: 0 → 5 → 7 (instead of 9)
Space remaining: 4 → 2 → 0 min.

Goals and Challenges
Evaluate traditional and extended knapsack solvers for use in time-aware prioritization:
- Effectiveness: coverage-based metrics
- Efficiency: time overhead and memory overhead
- How does overlapping code coverage affect the results of traditional techniques?
- Is the cost of extended knapsack algorithms worthwhile?

The Knapsack Solvers
- Random: select test cases at random
- Greedy by Ratio: order by coverage/time
- Greedy by Value: order by coverage
- Greedy by Weight: order by time
- Dynamic Programming: break the problem into sub-problems and use the sub-problem results to build the main solution
- Generalized Tabular: use large tables to store sub-problem solutions

The Knapsack Solvers (continued)
- Core: compute the optimal fractional solution, then exchange items until the optimal integral solution is found
- Overlap-Aware: use a genetic algorithm to solve the extended knapsack problem for time-aware prioritization

The Scaling Heuristic
Order the test cases by their coverage-to-execution-time ratio such that $\frac{c_1}{t_1} \ge \frac{c_2}{t_2} \ge \cdots \ge \frac{c_n}{t_n}$.
If $c_1 \times \lfloor t_{max} / t_1 \rfloor \ge c_2 \times (t_{max} / t_2)$, then it is possible to find an optimal solution that includes $T_1$.
Check the inequality for each test case $T_x$, $x \in [1, n]$, until it no longer holds. Then $\langle T_1, \ldots, T_{x-1} \rangle$ belong in the final prioritization.

Implementation Details
Components: Program Under Test (P), Test Suite (T), Coverage Calculator, Test Transformer, Knapsack Solver, New Test Suite (T′)
Knapsack Solver parameters:
1. Selected Solver
2. Reduction Preference
3. Knapsack Size

Evaluation Metrics
- Code coverage: percentage of requirements executed when the prioritization is run (basic block coverage is used)
- Coverage preservation: proportion of the code covered by the prioritization versus the code covered by the entire original test suite
- Order-aware coverage: considers the order in which test cases execute in addition to overall code coverage

Experiment Design
Goals of the experiment:
- Measure the efficiency of the algorithms and of scaling in terms of time and space overhead
- Measure the effectiveness of the algorithms and of scaling in terms of three coverage-based metrics
Case studies: JDepend and Gradebook
Knapsack size: 25%, 50%, and 75% of the execution time of the original test suite

Summary of Experimental Results
Prioritizer effectiveness:
- The Overlap-Aware solver had the highest overall coverage for each time limit
- The Greedy by Value solver performed well for Gradebook
- All Greedy solvers performed well for JDepend
Prioritizer efficiency:
- All algorithms took a small amount of time and memory, except for Dynamic Programming, Generalized Tabular, and Core
- The Overlap-Aware solver required hours to run
- Generalized Tabular had prohibitively large memory requirements
- The scaling heuristic reduced overhead in some cases

Conclusions
- The most sophisticated algorithm is not necessarily the most effective or the most efficient
- Trade-off: effectiveness versus efficiency. Which is most important?
  - For effectiveness: an overlap-aware prioritizer
  - For efficiency: a low-overhead prioritizer
- The choice of prioritizer depends on the nature of the test suite:
  - Time versus coverage of each test case
  - Coverage overlap between test cases

Future Research
- Use larger case studies with bigger test suites
- Use case studies written in other languages
- Evaluate other knapsack solvers, such as branch-and-bound and parallel solvers
- Incorporate other metrics, such as APFD
- Use synthetically generated test suites

Questions? Thank you!
http://www.cs.virginia.edu/walcott/weasel.html

Case Study Applications
                        Gradebook   JDepend
Classes                 5           22
Functions               73          305
NCSS                    591         1808
Test Cases              28          53
Test Suite Exec. Time   7.008 s     5.468 s
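The 0/1 formulation on the Knapsack Problem slides can be solved exactly with the textbook dynamic-programming table. The sketch below is an illustration only, not the Dynamic Programming solver used in the study. It assumes integer execution times (for example, whole minutes) so that the times can index the table, and the function name `knapsack_dp` is hypothetical. On the four-test example from the slides (coverage 4, 1, 2, 5; 2 minutes each; 4-minute budget), it should recover a total value of 9 by selecting T1 and T4.

```python
from typing import Dict, List, Tuple


def knapsack_dp(
    coverage: Dict[str, int], time: Dict[str, int], t_max: int
) -> Tuple[int, List[str]]:
    """Maximize sum(c_i * x_i) subject to sum(t_i * x_i) <= t_max, x_i in {0, 1}.

    Assumption (not from the slides): execution times are integers so they
    can be used as table indices.
    """
    names = list(coverage)
    n = len(names)
    # best[i][w] = maximum coverage using only the first i tests within budget w
    best = [[0] * (t_max + 1) for _ in range(n + 1)]
    for i, name in enumerate(names, start=1):
        c, t = coverage[name], time[name]
        for w in range(t_max + 1):
            best[i][w] = best[i - 1][w]  # x_i = 0: leave this test out
            if t <= w and best[i - 1][w - t] + c > best[i][w]:
                best[i][w] = best[i - 1][w - t] + c  # x_i = 1: include it
    # Walk the table backwards to recover which tests were selected.
    chosen: List[str] = []
    w = t_max
    for i in range(n, 0, -1):
        if best[i][w] != best[i - 1][w]:
            chosen.append(names[i - 1])
            w -= time[names[i - 1]]
    return best[n][t_max], sorted(chosen)
```

The table has (n + 1) × (t_max + 1) entries. This hints at why table-based solvers can become costly in time and memory as test suites and budgets grow.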
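The three greedy solvers on The Knapsack Solvers slide differ only in the sort key. The following is a minimal sketch under stated assumptions: "Greedy by Weight" is assumed to mean shortest test first, and tests that no longer fit in the budget are assumed to be skipped rather than ending the search. The names `greedy_prioritize` and the `strategy` values are hypothetical.

```python
from typing import Callable, Dict, List

# Hypothetical test record: {"coverage": ..., "time": ...}
Test = Dict[str, float]


def greedy_prioritize(tests: Dict[str, Test], t_max: float, strategy: str) -> List[str]:
    keys: Dict[str, Callable[[Test], float]] = {
        "ratio": lambda t: t["coverage"] / t["time"],  # highest coverage/time first
        "value": lambda t: t["coverage"],              # highest coverage first
        "weight": lambda t: -t["time"],                # shortest test first (assumption)
    }
    key = keys[strategy]
    # sorted() is stable even with reverse=True, so ties keep the original suite order.
    order = sorted(tests, key=lambda name: key(tests[name]), reverse=True)
    chosen: List[str] = []
    used = 0.0
    for name in order:
        # Assumption: skip a test that no longer fits and keep trying later ones.
        if used + tests[name]["time"] <= t_max:
            chosen.append(name)
            used += tests[name]["time"]
    return chosen
```

On the four-test example, "value" and "ratio" both yield ["T4", "T1"] (9 lines). Because every time ties, "weight" falls back to the original order and yields ["T1", "T2"] (5 lines), which shows how the choice of key matters.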
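The Extended Knapsack Problem slide recomputes each test's value against what is already covered. The study's Overlap-Aware solver uses a genetic algorithm. The sketch below swaps in a much simpler greedy "additional coverage per unit time" heuristic, only to show the value update. The function name and the per-test line sets are hypothetical: they were chosen so that T4 covers 5 lines and T1 overlaps T4 on two of its 4 lines.

```python
from typing import Dict, List, Set, Tuple


def overlap_aware_greedy(
    cover: Dict[str, Set[int]], time: Dict[str, float], t_max: float
) -> Tuple[List[str], Set[int]]:
    """Greedy stand-in for the extended knapsack problem (not the study's GA)."""
    chosen: List[str] = []
    covered: Set[int] = set()
    used = 0.0
    remaining = list(cover)  # keeps suite order, so max() breaks ties toward earlier tests
    while remaining:
        fits = [n for n in remaining if used + time[n] <= t_max]
        if not fits:
            break  # nothing else fits in the time budget
        # A test's value is recomputed every round: only not-yet-covered lines count.
        best = max(fits, key=lambda n: len(cover[n] - covered) / time[n])
        if not cover[best] - covered:
            break  # remaining tests add no new coverage
        chosen.append(best)
        covered |= cover[best]
        used += time[best]
        remaining.remove(best)
    return chosen, covered
```

With these hypothetical line sets and a 4-minute budget, the sketch selects T4 and then T1, which together cover 7 distinct lines. A solver that ignores overlap would report 9.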
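The Scaling Heuristic slide states its inequality only for $T_1$ against $T_2$. The sketch below is a literal reading of that slide and makes two loudly labeled assumptions. First, each $T_x$ is compared with its successor $T_{x+1}$ using the same $t_{max}$. Second, an added guard stops once the fixed prefix would exceed the budget. The name `scaling_prefix` is hypothetical.

```python
import math
from typing import Dict, List


def scaling_prefix(
    coverage: Dict[str, float], time: Dict[str, float], t_max: float
) -> List[str]:
    """Return the prefix <T_1, ..., T_{x-1}> fixed by the scaling inequality."""
    # Order by coverage-to-execution-time ratio, highest first (stable for ties).
    order = sorted(coverage, key=lambda n: coverage[n] / time[n], reverse=True)
    fixed: List[str] = []
    used = 0.0
    for x in range(len(order) - 1):  # the last test has no successor to compare with
        cur, nxt = order[x], order[x + 1]
        # Assumption: compare T_x with T_{x+1} using the same t_max as the slide.
        lhs = coverage[cur] * math.floor(t_max / time[cur])
        rhs = coverage[nxt] * (t_max / time[nxt])
        # Stop when the inequality fails, or (added guard) when the budget would overflow.
        if lhs < rhs or used + time[cur] > t_max:
            break
        fixed.append(cur)
        used += time[cur]
    return fixed
```

On the four-test example, the ratio order is T4, T1, T3, T2. The inequality holds for T4 and T1, and the budget guard then stops at T3, leaving ["T4", "T1"] fixed before any remaining solver runs.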
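Two of the three metrics on the Evaluation Metrics slide follow directly from their definitions, given the set of basic blocks each test covers. This is a minimal sketch: the block sets and `total_blocks` (the number of basic blocks in the program under test) are hypothetical inputs. Order-aware coverage is not shown, because the slides do not give its formula.

```python
from typing import Dict, Iterable, Set


def code_coverage(cover: Dict[str, Set[int]], prioritization: Iterable[str], total_blocks: int) -> float:
    """Percentage of the program's basic blocks executed by the prioritization."""
    kept = set().union(*(cover[n] for n in prioritization))
    return 100.0 * len(kept) / total_blocks


def coverage_preservation(cover: Dict[str, Set[int]], prioritization: Iterable[str]) -> float:
    """Blocks covered by the prioritization / blocks covered by the whole original suite."""
    suite_blocks = set().union(*cover.values())
    kept = set().union(*(cover[n] for n in prioritization))
    return len(kept) / len(suite_blocks) if suite_blocks else 1.0
```

For example, with hypothetical block sets where the full suite covers 10 blocks and the prioritization ["T4", "T1"] covers 7 of them, coverage preservation is 0.7. If the program has 20 blocks in total, code coverage is 35%.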