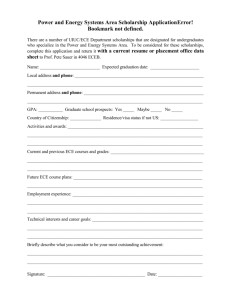

Ranking hackers through network traffic analysis

Ranking Attackers Through Network Traffic Analysis
Andrew Williams & Nikunj Kela
11/28/2011 CSC/ECE 774

Agenda
• Background
• Tools We've Developed
• Our Approach
• Results
• Future Work

Background: The Problem
Setting 1: Corporate Environment
• Large number of attackers
• How do you prioritize which attacks to investigate?
RSA

Background: The Problem
Setting 2: Hacking Competitions
• How do you know who should win?

Background: Information Available
• Network Traffic Captures
• Alerts from Intrusion Detection Systems (IDS)
• Application and Operating System Logs

Background: Traffic Captures
• HUGE volumes of data
• A complete history of interactions between clients and servers*
Information available:
• Traffic Statistics
• Info on interactions across multiple servers
• How traffic varies with time
• Everything up to and including application layer info**

Background: IDS Alerts
• Messages indicating that a packet matches the signature of a known malicious one
• Still a fairly large amount of data
• Same downsides as anti-virus programs, but most IDS signatures are open source!
• If the IDS is compromised, these might not be available
Information available:
• Indication that known attacks are being launched
• Alert Statistics
• How alerts vary with time

Background: Application/OS Logs
Ex: mysql logs, apache logs, Windows 7 Security logs, ...
• Detailed, application-specific error messages and warnings
• Large amount of data
• If a server is compromised, logs may not be available
Information available:
• Very detailed information with more context
• Access to errors/issues even if traffic was encrypted

Background: iCTF 2010 Contest
• 72 teams attempting to compromise 10 servers
• Vulnerabilities include SQL injection, exploitable off-by-one errors, format string exploits, and several others*
• Pretty complex set of rules
Dataset from competition:
• 27 GB of Network Traffic Captures
• 46 MB of Snort Alerts (from competition)
• 175 MB of Snort Alerts (generated with updated rulesets)
• No Application or OS Logs
More information on the contest can be found here: http://www.cs.ucsb.edu/~gianluca/papers/ctf-acsac2011.pdf

Tools We've Developed
We wrote scripts to...
• Parse the large amount of data:
  o Extract network traffic between multiple parties
  o Filter out less important Snort Alerts
  o Track connection state to generate statistics and stream data
• Visualize the data
  o Show all of the alerts and flag submissions with respect to time
• Analyze the data
  o Pull out the transaction distances and find statistics on them
• Generate Application and OS Logs
  o Replay network traffic to live virtual machine images

Our Approach: Intuition
• Vulnerability Discovery Phase
  o Identify the type of vulnerability
• Vulnerability Exploitation Phase
  o Refine the attack string
• Intuitively, a skilled attacker will arrive at the attack string in less time than an unskilled attacker
• How do we know if the attacker has broken into the system? We only have logs to work with!
• Time taken to break into the system reflects the learning capabilities of an attacker
  o Fast learner implies good attacker

Our Approach: Identify the attack string
• Once the attacker breaks into the system, he/she would use the same attack string almost every time to gather information
• We observed from the traffic logs that, in most cases, the attacker used one TCP stream to break into the system
  o One TCP connection for each attempt!
• We chose Levenshtein distance (edit distance) as our metric to compare two TCP communications from attacker to server
• Consecutive distances of zero between TCP data mean the attacker has successfully broken into the system

Example: Identify the attack string
Stream1: "%27%20or%20%27%27%3D%27%0Alist%0A"
Stream2: "%27%20OR%20%27%27%3D%27%0ALIST%0A"
Stream3: "asdfasd%20%27%20UNION%20SELECT%20%28%27secret.txt%27%29%3B%20--%20%20%0AMUGSHOT%0ASADF%0A"
Stream4: "asdfasd%20%27%20UNION%20SELECT%20%28%27secret.txt%27%29%3B%20--%20%20%0AMUGSHOT%0A39393%0A"
Stream5: "asdfasd%20%27%20UNION%20SELECT%20%28%27secret.txt%27%29%3B%20--%20%20%0AMUGSHOT%0A1606%0A"
Stream6: "asdfasd%20%27%20UNION%20SELECT%20%28%27secret.txt%27%29%3B%20--%20%20%0AMUGSHOT%0A1606%0A"

Our Approach: Feature Selection
• Time taken to successfully break into the system
• Mean and standard deviation of the distances between consecutive TCP streams
• Number of attempts before successfully breaching the service
• Length of the longest sequence of consecutive zeros

Result: Distance-Time Plot

Interesting Findings from the contest
• Although the contest involved only attacking the vulnerable services, the teams also tried to break into each other's systems
• We noticed that teams shared the flag value with each other through the chat server
• The active status of the service was maintained through a complex Petri net system, and most of the teams struggled to understand it
• Hints about different vulnerabilities in the services were released by the administrators from time to time throughout the contest

Future Work
• Use data mining tools (e.g. SAS miner) to analyse the relationships among the features
• Use data mining tools to develop a scoring system that scores each team based on the feature set
• Continue improving the replay script to handle the large number of connections

Thank You! Questions?

Image Sources
WooThemes, free for commercial use
Icons-Land, free for non-commercial use
Fast Icon Studio, used with permission
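
Appendix sketch: the distance metric and the distance-based features from the "Our Approach" slides could be computed roughly as follows. This is a minimal illustration, not the presenters' scripts. The function names, the use of population standard deviation, and the rule "the first zero distance marks success" are assumptions made for the example. The streams are the six from the example slide, written without the line-wrap space. The time-to-success feature is omitted because it needs capture timestamps.

```python
from statistics import mean, pstdev

# The six attacker-to-server payloads from the example slide.
UNION = "asdfasd%20%27%20UNION%20SELECT%20%28%27secret.txt%27%29%3B%20--%20%20%0AMUGSHOT%0A"
STREAMS = [
    "%27%20or%20%27%27%3D%27%0Alist%0A",
    "%27%20OR%20%27%27%3D%27%0ALIST%0A",
    UNION + "SADF%0A",
    UNION + "39393%0A",
    UNION + "1606%0A",
    UNION + "1606%0A",
]


def levenshtein(a: str, b: str) -> int:
    """Edit distance with unit cost for insert, delete, and substitute."""
    if len(a) < len(b):
        a, b = b, a  # keep the DP row as short as possible
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


def consecutive_distances(streams):
    """Distance between each stream and the one that follows it."""
    return [levenshtein(x, y) for x, y in zip(streams, streams[1:])]


def longest_zero_run(distances):
    """Length of the longest run of consecutive zero distances."""
    best = run = 0
    for d in distances:
        run = run + 1 if d == 0 else 0
        best = max(best, run)
    return best


def extract_features(streams):
    """Distance-based features for one attacker against one service."""
    distances = consecutive_distances(streams)
    # Assumption: the first zero distance marks the first repeated payload,
    # so its index equals the number of attempts made before it.
    first_zero = next((i for i, d in enumerate(distances) if d == 0), None)
    return {
        "mean_distance": mean(distances) if distances else 0.0,
        "std_distance": pstdev(distances) if distances else 0.0,
        "attempts_before_success": first_zero,
        "longest_zero_run": longest_zero_run(distances),
    }


if __name__ == "__main__":
    print(consecutive_distances(STREAMS))
    print(extract_features(STREAMS))
```

On the slide's streams, the only zero distance is between Stream5 and Stream6, so this sketch treats Stream5 as the first stable attack string. A stricter rule could require a longer run of zeros before declaring success.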