Annual Assessment Report to the College 2009‐2010 Social and Behavioral Science Social Work Master of Social Work


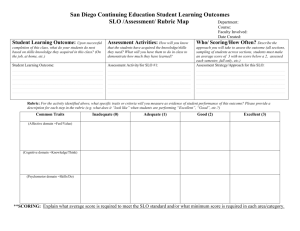

Annual Assessment Report to the College 2009‐2010

College: Social and Behavioral Science
Department: Social Work
Program: Master of Social Work

Note: Please submit report to your department chair or program coordinator and to the Associate Dean of your College by September 30, 2010. You may submit a separate report for each program which conducted assessment activities.

Liaison: Hyun-Sun Park, Ph.D.

1. Overview of Annual Assessment Project(s)

1a. Assessment Process Overview: Provide a brief overview of the intended plan to assess the program this year. Is assessment under the oversight of one person or a committee?

During 2009-2010, assessment was overseen by a four-member committee: Dr. Hyun-Sun Park (Chair), Dr. Susan Love, Dr. Eli Bartle, and Beth Halas.

The assessment plan for the Master of Social Work (MSW) program for the 2009-2010 academic year included the following activities:
(1) Administered the Student Self-Efficacy Scale to two cohorts of MSW students: a pre-test to incoming students during the first 2 weeks of instruction and a post-test to graduating students during the last 2 weeks of instruction
(2) Administered a course rubric, completed by the instructors of each required course (electives excluded), at the end of each semester
(3) Conducted an assessment of the field component
(4) Held a focus group with graduating students in Spring 2010

To carry out this process, the measurement tools needed to be refined to improve their reliability and validity. The following steps were therefore planned for the assessment instruments:
(1) Refined the Student Self-Efficacy Scale to be consistent with the new EPAS (Educational Policy and Accreditation Standards) mandated in October 2008 by CSWE (Council on Social Work Education), the accrediting body
March 30, 2009, prepared by Bonnie Paller
(2) Developed a course rubric based on the 2008 EPAS for each required curriculum course, using the common assignment (a total of 19 rubrics)
(3) Developed a rubric for field seminar courses based on the 2008 EPAS; one rubric was used for both foundation and concentration year field seminar courses
(4) Developed a Field Evaluation Questionnaire that corresponds to the 2008 EPAS, to be completed by field faculty (the agency staff who supervise student interns)

1b. Implementation and Modifications: Did the actual assessment process deviate from what was intended? If so, please describe any modification to your assessment process and why it occurred.

The most significant innovation in MSW assessment for 2009-2010 was the change in how information was collected: from paper-and-pencil questionnaires to on-line questionnaires in Moodle. During Spring 2010, Hyun-Sun Park, Ph.D., the assessment liaison, created an “MSW Assessment” site in Moodle and managed it by posting the instruments (18 questionnaires) each semester. Going paperless with Moodle saved substantial resources, and Moodle also proved to be an effective assessment tool, providing a systematic way to administer questionnaires and manage large-scale data. Using Moodle as the primary tool for gathering information has strengthened the infrastructure of MSW assessment.

One activity was added to the initial plan: the Student Self-Efficacy post-test was extended to all MSW students, not just graduating students. This idea was discussed at the MSW assessment meeting in February 2010 and implemented in April 2010 in order to obtain enough information for the MSW reaffirmation study, which is due to CSWE by April 1, 2011.

The other modification concerned field work. The external accrediting body, CSWE, requires that field education be the signature pedagogy of an MSW program.
However, students’ performance in acquiring field-relevant competencies had never before been evaluated as part of assessment. Therefore, for the first time this year, the field component was included in assessment, and two field-related measurement tools (a rubric for field seminars and a Field Evaluation Questionnaire) were developed for that purpose in Spring 2010. Field liaisons used the field course rubric to measure students’ level of performance in executing field-relevant competencies after a year of field training. The adoption of the Field Evaluation Questionnaire had been agreed upon, but the field office postponed its implementation to Fall 2010. The field office planned to adopt a software system, to be ready during Fall 2010, for managing field information on-line. Because it wanted to process all field data in one system, it decided to administer the Field Evaluation Questionnaire through the new software system rather than through Moodle.

Other than the modifications specified above, all the MSW assessment activities proposed in Section 1a (Assessment Process Overview) were implemented and accomplished as planned.

2. Student Learning Outcome Assessment Project: Answer questions according to the individual SLO assessed this year. If you assessed an additional SLO, report in the next chart below.

2a. Which Student Learning Outcome was measured this year?

The MSW program uses “competency” as its term for SLO in order to be consistent with the new 2008 EPAS developed by CSWE.
MSW assessment measured the following 10 competencies required by CSWE:
(1) Identify as a professional social worker and conduct oneself accordingly
(2) Apply social work ethical principles to guide professional practice
(3) Apply critical thinking to inform and communicate professional judgments
(4) Engage diversity and difference in practice
(5) Advance human rights and social and economic justice
(6) Engage in research-informed practice and practice-informed research
(7) Apply knowledge of human behavior and the social environment
(8) Engage in policy practice to advance social and economic well-being and to deliver effective social work services
(9) Respond to contexts that shape practice
(10) Engage, assess, intervene, and evaluate with individuals, families, groups, organizations, and communities

2b. What assessment instrument(s) were used to measure this SLO?

The following instruments were used to measure social work competencies:
(1) The Student Self-Efficacy Scale: a 38-item, 5-point Likert-type self-report scale measuring students’ level of confidence in performing social work competencies. In Fall 2009, the previous 52-item version of the scale was used as the pre-test. During February and March 2010, the scale was revised and shortened to 38 items, and this version was used as the post-test in Spring 2010. The 38 items were summated for each EPAS, and the mean scores were used for analysis.
(2) 16 course rubrics: a course rubric was developed and refined for each of the 17 required courses and completed by the course faculty; each rubric has 8 to 11 items on a 5-point Likert-type scale measuring students’ level of performance in executing competencies, based on the course’s common assignment
(3) Field course rubric: a 13-item, 5-point Likert-type rubric developed for the two field seminars and completed by field liaisons
(4) A semi-structured interview guide for a focus group: loosely structured to allow flexibility and to elicit rich, contextual information; used to ask graduating students about their experience and feedback on the four areas of the MSW curriculum (Practice, Policy, Research, and Human Behavior and the Social Environment), Field, and Department Administration

2c. Describe the participants sampled to assess this SLO: discuss sample/participant and population size for this SLO. For example, what type of students, which courses, how decisions were made to include certain participants.

The pre-test sample for the Student Self-Efficacy Scale consisted of all incoming MSW students in August 2009, and the post-test sample consisted of all MSW students registered during the 2009-2010 academic year. The completion rate was 96.2% (N=76) for the pre-test and 95.8% (N=161) for the post-test. The completion rate of the paper-and-pencil pre-test differed little from that of the on-line post-test in Moodle because the pre-test was administered in class by the faculty.

The respondents who completed the 17 course rubrics (including the field rubric) were the faculty who taught the required courses, excluding electives, during Fall 2009, Spring 2010, and/or Summer 2010. The response rate was 68% (N=295) for the Fall 2009 course rubrics, collected in paper-and-pencil format, and 83.4% (N=466) for the Spring/Summer 2010 rubrics, completed on-line.
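Each completion rate above is the number of returned instruments divided by the number of eligible respondents. The report gives N and the percentage but not the eligible counts, so the minimal Python sketch below back-calculates an approximate pool size from those two figures. The function names are hypothetical, and the implied pool sizes are estimates derived from rounded percentages, not figures reported by the program.

```python
def completion_rate(completed: int, eligible: int) -> float:
    """Percentage of eligible respondents who returned the instrument, to one decimal."""
    if eligible <= 0:
        raise ValueError("eligible must be a positive count")
    return round(100 * completed / eligible, 1)


def implied_eligible(completed: int, rate_pct: float) -> int:
    """Approximate eligible pool implied by a reported N and completion rate.

    This is a back-calculation (an estimate): the report states N and the
    rate, not the number of eligible students.
    """
    return round(completed / (rate_pct / 100))


# Figures reported for the Student Self-Efficacy Scale, 2009-2010.
pre_pool = implied_eligible(76, 96.2)    # pre-test, August 2009
post_pool = implied_eligible(161, 95.8)  # post-test, April 2010

# Recomputing the rate from the implied pool reproduces the reported figure.
print(pre_pool, completion_rate(76, pre_pool))     # prints: 79 96.2
print(post_pool, completion_rate(161, post_pool))  # prints: 168 95.8
```

Because the published percentages are rounded, the implied pool is only approximate; the same arithmetic could be applied to the course rubric response rates.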
The response rate increased substantially in Spring 2010, when faculty were able to complete the course rubrics on-line in Moodle.

2d. Describe the assessment design methodology: For example, was this SLO assessed longitudinally (same students at different points) or was a cross‐sectional comparison used (comparing freshmen with seniors)? If so, describe the assessment points used.

The Student Self-Efficacy Scale was administered twice in the program to measure students’ confidence in executing the 10 EPAS: once as a pre-test upon entry to the MSW program and again as a post-test upon exit (a 2-year interval for the 2-year program and a 3-year interval for the 3-year program). For the 2009-2010 academic year, however, the post-test was administered to all MSW students for two reasons: (1) data gathered before the 2009-2010 academic year were not available, and (2) more data were urgently needed to prepare the MSW reaffirmation documents, which are due April 1, 2011. This additional information made it possible to compare 1st-year students with 2nd-year students, as well as full-time students in the 2-year program with part-time students in the 3-year program.

The 16 course rubrics measured course-related competencies at a single time point, the end of the semester (cross-sectional). Likewise, the field course rubric was completed at a single time point, the end of the academic year (May 2010).

2e. Assessment Results & Analysis of this SLO: Provide a summary of how the data were analyzed and highlight important findings from the data collected.

All the measurement instruments used a uniform response scale, a 5-point Likert scale ranging from very weak to very strong, so that comparisons would be meaningful, and a score of 4.0 was set as the benchmark.
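The analysis reduces each competency to a mean on the common 1-5 scale and compares it with the 4.0 benchmark. A minimal Python sketch of that comparison follows, assuming each EPAS score is the average of its items (the summated score divided by the number of items, which keeps it on the same 1-5 scale as the benchmark). The helper names and the separate "below 3.0" band, which mirrors how the pre-test results single out especially low means, are illustrative; the example data are the pre-test means reported for August 2009.

```python
from statistics import mean

BENCHMARK = 4.0  # benchmark score set for all instruments
FLOOR = 3.0      # lower band that the pre-test results single out


def epas_score(item_responses: list[int]) -> float:
    """Average one student's 1-5 Likert responses to the items of one EPAS.

    Assumption: items are summated and divided by the item count, so the
    score stays on the same 1-5 scale as the benchmark.
    """
    if not item_responses or any(r < 1 or r > 5 for r in item_responses):
        raise ValueError("responses must be non-empty and on a 1-5 scale")
    return mean(item_responses)


def band(score: float) -> str:
    """Place a mean score relative to the benchmark."""
    if score >= BENCHMARK:
        return "meets benchmark"
    if score >= FLOOR:
        return "below benchmark"
    return "below 3.0"


def group_by_band(means: dict[str, float]) -> dict[str, list[str]]:
    """Group EPAS names by band, keeping the input order within each band."""
    bands: dict[str, list[str]] = {}
    for name, score in means.items():
        bands.setdefault(band(score), []).append(name)
    return bands


# Pre-test means reported for incoming students, August 2009.
PRETEST_MEANS = {
    "Professionalism": 3.54,
    "Ethics": 3.28,
    "Critical thinking": 3.10,
    "Diversity": 3.69,
    "Justice": 3.41,
    "Evidence-based Practice": 2.79,
    "Human Behavior and the Social Environment": 3.17,
    "Policy": 3.03,
    "Respond to contexts": 2.86,
    "Engage": 3.70,
    "Assess": 3.26,
    "Intervene": 3.30,
}

for label, names in group_by_band(PRETEST_MEANS).items():
    print(f"{label}: {', '.join(names)}")
```

With these inputs no EPAS reaches the benchmark, and only Evidence-based Practice and Respond to contexts fall in the "below 3.0" band, which matches the pre-test findings reported for incoming students.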
Student Performance on the Student Self-Efficacy Scale (Self-report)

(1) Pre-test on the Student Self-Efficacy Scale: administered in class by the faculty to incoming MSW students, using a paper-and-pencil questionnaire, in August 2009
a. A total of 76 students completed the Student Self-Efficacy Scale, a 96.2% completion rate (42 full-time 2-year program students and 34 part-time 3-year program students).
b. The means of all measured EPAS were below the benchmark of 4.0: Professionalism (3.54), Ethics (3.28), Critical thinking (3.10), Diversity (3.69), Justice (3.41), Evidence-based Practice (2.79), Human Behavior and the Social Environment (3.17), Policy (3.03), Respond to contexts (2.86), Engage (3.70), Assess (3.26), and Intervene (3.30).
c. All EPAS had a mean between 3.0 and 4.0 except for the following two, whose means were below 3.0: Evidence-based Practice (2.79) and Respond to contexts (2.86).
d. 2-year and 3-year program students did not differ significantly on the pre-test except on one EPAS, Professionalism: 3-year program students reported a significantly higher mean than 2-year program students.
e. The post-test will be administered to 2-year program students in April 2011 and to 3-year program students in April 2012.

(2) Post-test on the Student Self-Efficacy Scale: administered to all enrolled MSW students using an on-line questionnaire in Moodle in April 2010
a. A total of 161 students completed the Student Self-Efficacy Scale, a 95.8% completion rate (78 full-time 2-year program students and 83 part-time 3-year program students).
b. The means of the following 6 EPAS were above the benchmark of 4.0: Professionalism (4.20), Ethics (4.24), Human Behavior and the Social Environment (4.17), Diversity (4.41), Engage (4.16), and Assess (4.09).
c. The means of the following 7 EPAS were below the benchmark of 4.0: Critical thinking (3.86), Justice (3.83), Evidence-based practice (3.74), Policy (3.71), Respond to contexts (3.77), Intervene (3.66), and Evaluation (3.54).
d. The means of 2-year program students were significantly higher than those of 3-year program students on the following 6 EPAS: Professionalism (4.33 vs. 4.07), Critical thinking (4.03 vs. 4.08), Diversity (4.54 vs. 3.71), Engage (4.37 vs. 3.95), Assess (4.26 vs. 3.92), and Intervene (3.89 vs. 3.45).
e. Among 2-year program students, graduating students reported significantly higher confidence than 1st-year students in performing the following 6 EPAS: Professionalism (4.48 vs. 4.19), Critical thinking (4.24 vs. 3.83), Diversity (4.68 vs. 4.41), Engage (4.62 vs. 4.13), Assess (4.45 vs. 4.09), and Intervene (4.12 vs. 3.67).
f. Among 3-year program students, 2nd-year students reported significantly higher confidence than 1st-year students on all EPAS except one, Justice. However, the mean scores of 3rd-year (graduating) students were not significantly higher than those of 2nd-year students except on one EPAS, Professionalism.
g. Pre-test scores of the graduating students were not available, so their entry and exit scores could not be compared.

Student Performance on Course Rubrics Completed by the Instructors

(1) Course Rubrics for Fall 2009: Seven required courses were offered in Fall 2009, and a course rubric was developed and refined for each of them based on the new EPAS. The instructors of all seven courses were asked to complete a paper-and-pencil course rubric for each student in their courses, measuring the student’s level of performance in executing the related EPAS based on the shared assignment.
a. A total of 295 rubrics were completed, a 68.3% completion rate.
b. All items of the following course rubrics yielded a mean score above 4.0: SWRK 501, 510, 521, 525, 601, and 645.
c. SWRK 635 (Advanced Research course) had one item with a mean below 4.0: “The research critically addressed the application of social work values and ethics and followed the standards set for ethical research with human participants.”
d. The items measuring students’ writing skills and ability to use APA format barely met the 4.0 benchmark in most of the foundation year course rubrics.

(2) Course Rubrics for Spring & Summer 2010: Nine required courses and two field seminars were offered during Spring and Summer 2010, and a course rubric was developed and refined for each course based on the new EPAS. For the field seminars, the same rubric was used for both foundation and concentration year seminars so that the two could be compared. All instructors were asked to complete a course rubric on-line in Moodle for each student in their courses.
a. A total of 417 course rubrics were completed for Spring 2010, a 96.8% completion rate.
b. A total of 128 course rubrics were completed for Summer 2010, a 100% completion rate.
c. A rubric for the field seminar was developed in Spring 2010, the first time since the MSW program started. A total of 139 field course rubrics were completed for Spring & Summer 2010, a 96.5% completion rate.
d. All items of the following eight course rubrics yielded a mean score above 4.0: SWRK 502, 520, 535, 602, 603, 630, 645, and 698.
e. SWRK 521 (Generalist Social Work Theory and Practice) had two items with a mean below 4.0:
“Student integrates multiple sources of knowledge in needs assessment”
“Student attends to changes including technological developments to plan relevant services”
f. Most items of the field course rubric yielded a mean score above 4.0, except for the three items addressing the Research, Policy, and Evaluation EPAS. However, analysis revealed that these low scores resulted from the high frequency of the response “no opportunity to observe the competency.” Of the 13 items of the field rubric, field liaisons rated concentration year students as significantly better than foundation year students on 5 items, which addressed the following EPAS (foundation vs. concentration): Policy (3.36 vs. 4.04), Respond to contexts (4.05 vs. 4.56), Assess (4.14 vs. 4.48), Intervene (4.06 vs. 4.48), and Evaluate (3.73 vs. 4.46).

Focus Group with Graduating Students

A focus group was held in March 2010 with graduating students, who provided the following feedback:
(1) Research
a. Need more consistency between research professors and capstone advisors
b. Need a facility (computer lab) to get hands-on experience with SPSS for students’ capstone projects
c. 3-year program students: there was too much time lapse between the first and second research courses.
(2) HBSE
a. A bit skewed towards child development; need a balance between child development and adult development
(3) Practice
a. The first-year practice education did not really help with future practice.
b. Having a combined class with another section of the course was not helpful in learning practice.
(4) Policy
a. The first policy course was not sequenced appropriately.
b. Need more specific guidance for assignments.
(5) Field Seminar
a. The field seminar needs to be kept small, preferably fewer than 10 students.
b. There was an issue with the productivity of the field seminar due to the professor’s lack of preparedness.
c. The class time, 5 p.m., was a challenge for 3-year program students.
(6) Field Internship
a. Too much emphasis on clinical work rather than a broad range of social work experience
b. There was an issue of lack of respect by field staff and faculty.
(7) Administration
a. Need more structure and consistency in grading students’ work

2f. Use of Assessment Results of this SLO: Think about all the different ways the results were or will be used. For example, to recommend changes to course content/topics covered, course sequence, addition/deletion of courses in program, student support services, revisions to program SLO’s, assessment instruments, academic programmatic changes, assessment plan changes, etc. Please provide a clear and detailed description of how the assessment results were or will be used.

(1) Each curriculum committee met to discuss whether its courses addressed any EPAS that scored below the 4.0 benchmark on the Student Self-Efficacy Scale and, if so, adapted its curriculum to make sure that the content was delivered to students.
(2) Course rubric of SWRK 635: Based on the low score on one item, the course rubric was refined to be more consistent with the common assignment.
(3) Course rubric of SWRK 521: Based on the low scores on two items, the course rubric was refined to be more consistent with the common assignment.
(4) To support incoming students’ writing and their understanding of APA format, which is required in the social work profession, a mandatory “Writing and Ethics Workshop” is scheduled for September 14, 2010.
(5) Based on the feedback obtained from the focus group, the following activities or changes were made:
a. The effectiveness of the current course sequence was discussed, and courses were rearranged to better meet students’ needs: (1) the one-year lapse between the first and second research courses of the 3-year program was minimized, and the two courses are now offered in consecutive semesters starting in the 2010-2011 academic year; and (2) the second policy course was moved from the fall to the spring semester of the concentration year.
b. A focus group will not be a routine part of MSW assessment, because most of the information it yielded overlapped with information gathered through the other assessment activities. Instead, a focus group will be used on an “as needed” basis when the department plans significant changes to the program or curriculum.
c. Starting in 2010-2011, focus groups will be overseen by the department administration rather than by assessment.

Some programs assess multiple SLOs each year. If your program assessed an additional SLO, report the process for that individual SLO below. If you need additional SLO charts, please cut & paste the empty chart as many times as needed. If you did NOT assess another SLO, skip this section.

2a. Which Student Learning Outcome was measured this year?

2b. What assessment instrument(s) were used to measure this SLO?

2c. Describe the participants sampled to assess this SLO: discuss sample/participant and population size for this SLO. For example, what type of students, which courses, how decisions were made to include certain participants.

2d. Describe the assessment design methodology: Was this SLO assessed longitudinally (same students at different points) or was a cross‐sectional comparison used (comparing freshmen with seniors)? If so, describe the assessment points used.

2e. Assessment Results & Analysis of this SLO: Provide a summary of how the data were analyzed and highlight important findings from the data collected.

2f. Use of Assessment Results of this SLO: Think about all the different ways the results were (or could be) used. For example, to recommend changes to course content/topics covered, course sequence, addition/deletion of courses in program, student support services, revisions to program SLO’s, assessment instruments, academic programmatic changes, assessment plan changes, etc. Please provide a clear and detailed description of each.

3. How do your assessment activities connect with your program’s strategic plan?

4. Overall, if this year’s program assessment evidence indicates that new resources are needed in order to improve and support student learning, please discuss here.

A computer lab would be a valuable additional resource, providing hands-on experience with SPSS that students could use effectively for their capstone projects.

5. Other information, assessment or reflective activities not captured above.

6. Has someone in your program completed, submitted or published a manuscript which uses or describes assessment activities in your program? Please provide citation or discuss.