What “Extras” Do We Get with Extracurriculars? Technical Research Considerations

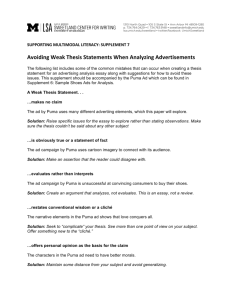
