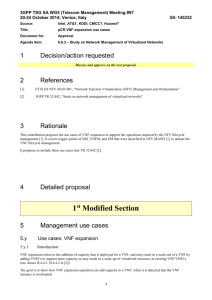

ETSI GS NFV-SWA 001 V1.1.1 Network Functions Virtualisation (NFV);
