Study Guide: Eigenvalues, Eigenvectors, and Diagonalization


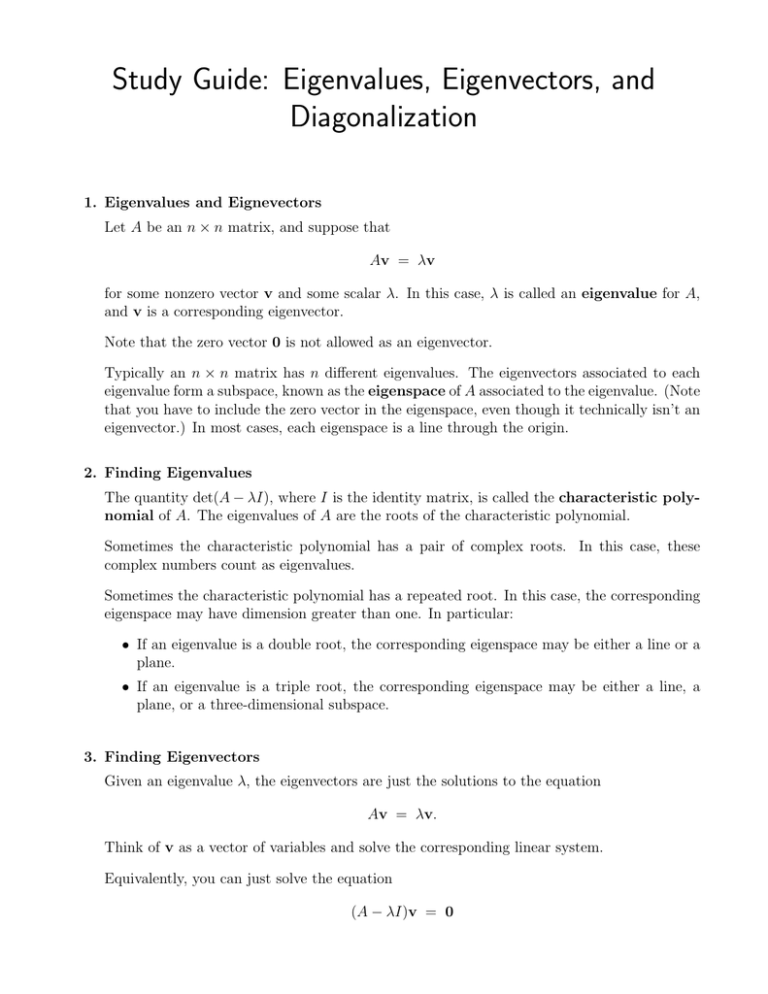

1. Eigenvalues and Eigenvectors

Let A be an n × n matrix, and suppose that Av = λv for some nonzero vector v and some scalar λ. In this case, λ is called an eigenvalue for A, and v is a corresponding eigenvector. Note that the zero vector 0 is not allowed as an eigenvector.

Typically an n × n matrix has n different eigenvalues. The eigenvectors associated to each eigenvalue, together with the zero vector, form a subspace known as the eigenspace of A associated to that eigenvalue. (The zero vector has to be included in the eigenspace, even though it technically isn't an eigenvector.) In most cases, each eigenspace is a line through the origin.

2. Finding Eigenvalues

The quantity det(A − λI), where I is the identity matrix, is called the characteristic polynomial of A. The eigenvalues of A are the roots of the characteristic polynomial.

Sometimes the characteristic polynomial has a pair of complex roots. In this case, these complex numbers count as eigenvalues.

Sometimes the characteristic polynomial has a repeated root. In this case, the corresponding eigenspace may have dimension greater than one. In particular:

• If an eigenvalue is a double root, the corresponding eigenspace may be either a line or a plane.
• If an eigenvalue is a triple root, the corresponding eigenspace may be a line, a plane, or a three-dimensional subspace.

3. Finding Eigenvectors

Given an eigenvalue λ, the eigenvectors are just the nonzero solutions to the equation Av = λv. Think of v as a vector of variables and solve the corresponding linear system. Equivalently, you can solve the equation

(A − λI)v = 0

which saves you the step of having to combine like terms.

Sometimes you are asked to find a basis for an eigenspace. In this case, you are simply trying to find a basis for the null space of the matrix A − λI. In particular, you should use the following procedure:

1. Reduce A − λI to reduced echelon form.
2. Each column without a pivot corresponds to a free variable.
3. There is one basis vector for each free variable. The value of that free variable for this basis vector is 1, and the values of the other free variables are 0. Use the reduced system of equations to compute the remaining entries of the vector.

4. Diagonalization

Almost any matrix A can be written as SDS⁻¹, where S is an invertible matrix and D is a diagonal matrix. In particular, the columns of S consist of a basis of eigenvectors for each eigenspace, and the diagonal entries of D are the corresponding eigenvalues, listed in the same order as the columns of S.

We say that A is diagonalizable if there are enough linearly independent eigenvectors to form the columns of an invertible n × n matrix S. This always happens if the roots of the characteristic polynomial are distinct, but if you have a repeated root then one of the eigenvalues may not have enough eigenvectors. In particular:

• If an eigenvalue is a double root of the characteristic polynomial but the corresponding eigenspace is only a line, then A is not diagonalizable.
• If an eigenvalue is a triple root of the characteristic polynomial but the corresponding eigenspace is either a line or a plane, then A is not diagonalizable.
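As an illustration that is not part of the original guide, the eigenspace procedure from Section 3 and the diagonalizability test from Section 4 can be sketched in plain Python with exact fractions. The function names (`rref`, `eigenspace_basis`, `diagonalize`, `matmul`) and the two sample matrices are assumptions made for this example. The eigenvalues are supplied by hand from the characteristic polynomial, since the sketch does not find roots itself.

```python
from fractions import Fraction


def rref(M):
    """Return (reduced echelon form of M, list of pivot column indices)."""
    M = [[Fraction(x) for x in row] for row in M]
    rows, cols = len(M), len(M[0])
    pivots = []
    r = 0  # row where the next pivot will be placed
    for c in range(cols):
        # Look for a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if p is None:
            continue  # no pivot here, so column c is a free column
        M[r], M[p] = M[p], M[r]
        pivot = M[r][c]
        M[r] = [x / pivot for x in M[r]]  # scale the pivot entry to 1
        for i in range(rows):
            if i != r and M[i][c] != 0:
                factor = M[i][c]
                M[i] = [x - factor * y for x, y in zip(M[i], M[r])]
        pivots.append(c)
        r += 1
        if r == rows:
            break
    return M, pivots


def eigenspace_basis(A, lam):
    """Basis for the null space of A - lam*I, one vector per free variable."""
    n = len(A)
    shifted = [[Fraction(A[i][j]) - (lam if i == j else 0) for j in range(n)]
               for i in range(n)]
    R, pivots = rref(shifted)
    free = [j for j in range(n) if j not in pivots]
    basis = []
    for f in free:
        v = [Fraction(0)] * n
        v[f] = Fraction(1)  # this free variable is 1, the other free ones are 0
        for row, p in enumerate(pivots):
            v[p] = -R[row][f]  # pivot variable read off the reduced system
        basis.append(v)
    return basis


def matmul(X, Y):
    """Plain matrix product of two lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def diagonalize(A, eigenvalues):
    """Return (S, D) with A*S == S*D, or None if there are too few eigenvectors.

    `eigenvalues` must list each distinct root of the characteristic
    polynomial exactly once.
    """
    n = len(A)
    columns, diag = [], []
    for lam in eigenvalues:
        for v in eigenspace_basis(A, lam):
            columns.append(v)
            diag.append(Fraction(lam))
    if len(columns) < n:
        return None  # some eigenvalue does not have enough eigenvectors
    S = [[columns[j][i] for j in range(n)] for i in range(n)]
    D = [[diag[i] if i == j else Fraction(0) for j in range(n)]
         for i in range(n)]
    return S, D


# Sample matrix with characteristic polynomial t^2 - 7t + 10, roots 2 and 5.
A = [[4, 1], [2, 3]]
S, D = diagonalize(A, [2, 5])
assert matmul(A, S) == matmul(S, D)  # equivalent to A = S D S^-1

# Sample matrix with double root 1 whose eigenspace is only a line.
B = [[1, 1], [0, 1]]
assert len(eigenspace_basis(B, 1)) == 1
assert diagonalize(B, [1]) is None
```

The check uses AS = SD rather than computing S⁻¹. When `diagonalize` returns a result, the n columns of S are bases of eigenspaces for distinct eigenvalues, so they are linearly independent and S is invertible, which makes the two statements equivalent. Exact `Fraction` arithmetic is used so that the test for a zero entry during row reduction is reliable. A floating-point version would need a tolerance instead.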