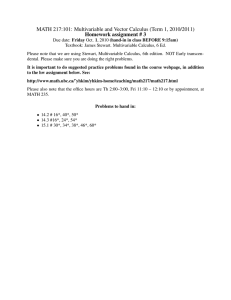

Multivariable Vector-Valued Functions
